Hint: It’s not found by counting the number of lines of code written each day.

How do you measure programmer productivity? Some managers measure productivity by counting the number of software lines of code (usually abbreviated as either LoC or SLoC) written each day. I recall, decades ago, a friend’s father telling me his company required programmers to write at least five lines of code per day, on average. At the time, the figure seemed low, but the key phrase is “on average.” As every professional programmer will explain, you don’t write code every day. You also participate in planning, meetings, testing, meetings, design, meetings, bug fixing, and meetings.

LoC is not considered to be the best measurement of programmer progress, and it’s certainly not an indication of quality. It’s even debatable what LoC is: Do you include comments? Most say no. Others feel that since it’s frowned upon to not document your code, comments should be counted. Andrew Binstock recently made a similar argument while discussing the value of comments in code.

What about lines that consist of only braces? What about if statements split with line feeds? Do you count switch statement conditionals? Perhaps you should, because, after all, good structure counts, right?
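To make the ambiguity concrete, here is a minimal sketch of a line counter whose result depends entirely on which rules you pick. The class name `LocCounter` and its two flags are hypothetical, and the counter only recognizes whole-line `//` comments, so treat it as an illustration rather than a real SLoC tool.

```java
/** Counts lines of code under different, equally defensible, counting rules. */
final class LocCounter {

    /**
     * Counts non-blank lines in the given source text.
     * Simplification: only whole-line "//" comments are recognized as comments.
     */
    static long count(String source, boolean countComments, boolean countBraceOnlyLines) {
        return source.lines()
                .map(String::trim)
                .filter(line -> !line.isEmpty())
                .filter(line -> countComments || !line.startsWith("//"))
                .filter(line -> countBraceOnlyLines || !line.matches("[{}]+"))
                .count();
    }

    public static void main(String[] args) {
        String source = String.join("\n",
                "// Returns the larger of two values.",
                "static int max(int a, int b)",
                "{",
                "    if (a > b)",
                "    {",
                "        return a;",
                "    }",
                "    return b;",
                "}");

        System.out.println(count(source, true, true));   // 9: every non-blank line
        System.out.println(count(source, false, true));  // 8: comments excluded
        System.out.println(count(source, false, false)); // 4: comments and brace-only lines excluded
    }
}
```

The same nine-line method scores 9, 8, or 4 depending on the rules, which is more than a twofold swing before anyone has argued about brace style.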

Then there are advancements in Java such as lambdas, where the goal is to reduce the amount of code needed to achieve something that required more code in the past. No developer should be penalized for using modern constructs to help write fewer lines of more-effective code, right?
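As a small illustration of that point, the sketch below sorts a list of words by length twice: once with a pre-Java 8 anonymous class and once with a lambda. The class and method names are made up for this example. Both methods behave identically, yet the lambda version would score lower on a LoC metric.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

final class LambdaVsAnonymous {

    /** Pre-Java 8 style: the comparator is an anonymous inner class. */
    static List<String> sortByLengthVerbose(List<String> words) {
        List<String> sorted = new ArrayList<>(words);
        sorted.sort(new Comparator<String>() {
            @Override
            public int compare(String a, String b) {
                return Integer.compare(a.length(), b.length());
            }
        });
        return sorted;
    }

    /** Java 8+ style: the same comparator written as a lambda. */
    static List<String> sortByLengthConcise(List<String> words) {
        List<String> sorted = new ArrayList<>(words);
        sorted.sort((a, b) -> Integer.compare(a.length(), b.length()));
        return sorted;
    }

    public static void main(String[] args) {
        List<String> words = List.of("lambda", "is", "terse");
        System.out.println(sortByLengthVerbose(words)); // [is, terse, lambda]
        System.out.println(sortByLengthConcise(words)); // [is, terse, lambda]
    }
}
```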

There are times I marvel at how many lines of code I’ve written for whatever project I’m working on. Additionally, common sense says that as you learn a new programming language, the more code you write, the more proficient you get. Looking at it this way, using LoC for progress can be rationalized.

However, it’s hard to get past the fact that elegant code is often more concise—and easier to maintain—than poorly written brute-force code. You don’t want to incentivize developers to be overly verbose or inefficient to achieve a desirable LoC metric.

Bill Gates once said, “Measuring programming progress by lines of code is like measuring aircraft building progress by weight.” Indeed, I’ve worked with folks who have valued lines of code removed more than new lines of code added. The most rewarding tasks often involve improving a system design so much that multiple screens’ worth of code can be removed.

Given this, here are a few alternatives that can measure programmer progress.

Problems solved

Even when the goal is to track developer productivity using actual numbers, developers often have ample opportunity to make a positive impression with their problem-solving skills. Effective problem-solving requires both thought and research. If you can consistently provide meaningful, and timely, solutions to even the thorniest of problems, management will know you’ve put the time and effort into being prepared.

On a developer team, a person who can solve tricky problems has as much value as someone else who cranks out lots of fast code that implements that solution. Yet who gets the credit? Often, it’s the coder, not the problem solver.

Another issue is that problem-solving can fall into the perception-beats-reality category. Some people are louder and bolder than others, some are good at building on the suggestions of others, and some simply overhear solutions, to put it nicely.

A “problems solved” metric probably won’t fly.

Agile measurements

You know the value of Agile development practices. Regardless of whether it’s Scrum, Scaled Agile Framework (SAFe), Kanban, or Scrum at Scale, each method uses iterative development with feedback loops to measure developer progress and effectiveness for meeting user needs and providing business value. Each of these practices also measures progress in terms of completed user stories instead of LoC, using measurements such as the following:

- Burn-down charts. These track the development progress not only at the sprint level but also for epics and releases over a longer period. By setting the assignee to a specific developer, you can track individual developer progress compared to the team. There are all sorts of reasons an individual’s performance may vary, such as vacationing during a sprint, attending meetings, or working on special assignments. However, teams that use this metric typically couple it with an individual developer’s capacity (which considers vacation time, sick leave, and special assignments) against their assigned workload.

- Velocity. This is the average amount of work a Scrum team completes during a sprint, and it can be a good measure for a team. However, velocity can also be used to track individual productivity as long as you compare apples to apples. You also need to use consistent measurements such as story points or hours instead of simply stories closed.

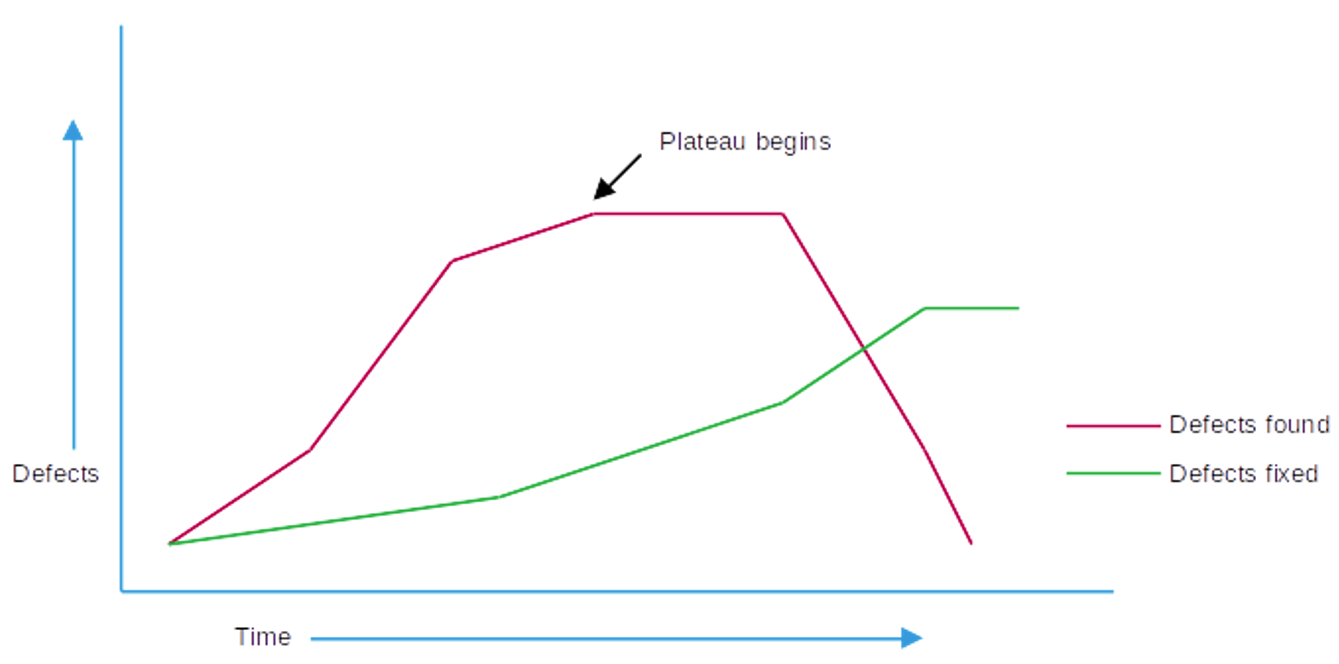

- Software quality. This includes defect tracking charts such as those shown in Figure 1, which measures rates of defects per release, team, and developer. However, realize that more-productive developers may also generate more defects as they complete more stories in a given time frame compared to others. Therefore, leverage tools that track defects to stories over time, essentially turning the measurement into a percentage, to get a more accurate view.

Figure 1. Software defect tracking chart
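The velocity and defect-rate ideas above can be sketched in a few lines. This is only an illustration under assumptions: the `SprintResult` record, its fields, and the "defects per 100 story points" normalization are hypothetical choices, not something a particular Agile tool prescribes. It uses Java records, so it needs Java 16 or later.

```java
import java.util.List;

final class SprintMetrics {

    /** One developer's results for one sprint (hypothetical shape). */
    record SprintResult(String developer, int storyPointsCompleted, int defectsFound) {}

    /** Velocity: average story points completed per sprint. */
    static double velocity(List<SprintResult> sprints) {
        return sprints.stream()
                .mapToInt(SprintResult::storyPointsCompleted)
                .average()
                .orElse(0.0);
    }

    /** Defects per 100 story points, so raw counts don't penalize higher output. */
    static double defectsPer100Points(List<SprintResult> sprints) {
        int points = sprints.stream().mapToInt(SprintResult::storyPointsCompleted).sum();
        int defects = sprints.stream().mapToInt(SprintResult::defectsFound).sum();
        return points == 0 ? 0.0 : 100.0 * defects / points;
    }

    public static void main(String[] args) {
        List<SprintResult> alice = List.of(
                new SprintResult("alice", 20, 4),
                new SprintResult("alice", 30, 6));
        List<SprintResult> bob = List.of(
                new SprintResult("bob", 10, 3),
                new SprintResult("bob", 10, 3));

        System.out.println(velocity(alice));            // 25.0
        System.out.println(velocity(bob));              // 10.0
        System.out.println(defectsPer100Points(alice)); // 20.0
        System.out.println(defectsPer100Points(bob));   // 30.0
    }
}
```

In this made-up data, the first developer has more raw defects (10 versus 6) but a lower defect rate once the count is normalized by completed work, which is the distortion the percentage view is meant to correct.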

Also, bear in mind that rates of defects change over the lifetime of a project. Defects will ramp up early in the development cycle, and then they will plateau and drop off rapidly if they are fixed efficiently. I once had a manager who gained an accurate view of top performers on our development team not by measuring the number of defects but by tracking when the counts plateaued, how quickly the defects were resolved, and how often defects were reopened (indicating an ineffective fix was implemented).
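Two of those signals, how quickly defects are resolved and how often they are reopened, are easy to compute once the data is exported from a tracker. The sketch below assumes a hypothetical `Defect` record with a resolution time in days and a reopened flag. Real trackers expose this information in their own formats.

```java
import java.util.List;

final class DefectStats {

    /** A resolved defect (hypothetical shape, not tied to any tracker's API). */
    record Defect(String developer, int daysToResolve, boolean reopened) {}

    /** Average number of days taken to resolve a defect. */
    static double meanDaysToResolve(List<Defect> defects) {
        return defects.stream()
                .mapToInt(Defect::daysToResolve)
                .average()
                .orElse(0.0);
    }

    /** Fraction of defects that were reopened after being marked fixed. */
    static double reopenRate(List<Defect> defects) {
        if (defects.isEmpty()) {
            return 0.0;
        }
        long reopened = defects.stream().filter(Defect::reopened).count();
        return (double) reopened / defects.size();
    }

    public static void main(String[] args) {
        List<Defect> defects = List.of(
                new Defect("alice", 1, false),
                new Defect("alice", 2, false),
                new Defect("alice", 3, true),
                new Defect("alice", 6, false));

        System.out.println(meanDaysToResolve(defects)); // 3.0
        System.out.println(reopenRate(defects));        // 0.25
    }
}
```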

In fact, user satisfaction, business value, and software quality are important measurements that counting LoC completely misses.

Customer and corporate satisfaction

Measuring software product success is challenging, but it can be done. For example, you can measure sales, downloads, or user feedback to indicate how well the product satisfies the needs of its users. Measuring customer satisfaction assesses how well the software has met the requirements you originally defined. Then, by tracking the completion of individual features along with the developers who worked on them, you can get an accurate feel for how well individuals or entire development teams contributed to this satisfaction compared to others.

There are other soft measurements for developer productivity and effectiveness, such as the satisfaction of other developers and of the developer’s manager. Someone who is easy to work with yet average in terms of productivity and software quality will often be valued more highly than someone who is above average yet hard to work with or just negative overall. This, once again, is something that LoC will never tell you.

Hits of code

In the spirit of Gates’ remark about aircraft weight, there is an alternative measurement of programmer productivity that involves counting how many times a section of code has been modified. The theory is that the more you modify a body of code, the more effort is going into that part of the system. This is a measurement of hits of code (HoC) instead of lines of code, and I find this intriguing.

HoC, described nicely by Yegor Bugayenko in 2014, measures overall effort per developer for specific parts of the system being built or enhanced.
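One way to read the HoC idea is as the sum of lines added and deleted across every commit, grouped by developer. The sketch below takes that reading as an assumption and should not be mistaken for Bugayenko's own tooling. It parses text shaped like the output of `git log --numstat --pretty=format:"author %an"`, where each file line is `added<TAB>deleted<TAB>path`. The class name and the sample log are hypothetical.

```java
import java.util.Map;
import java.util.TreeMap;

final class HitsOfCode {

    /**
     * Sums added + deleted lines per author from numstat-style text.
     * Assumed input: an "author NAME" line per commit, followed by
     * "added<TAB>deleted<TAB>path" lines for the files it touched.
     */
    static Map<String, Integer> hitsByAuthor(String log) {
        Map<String, Integer> hits = new TreeMap<>();
        String author = "unknown";
        for (String line : log.split("\n")) {
            if (line.startsWith("author ")) {
                author = line.substring("author ".length()).trim();
                continue;
            }
            String[] parts = line.split("\t");
            // Skip blank lines and binary files, which numstat reports as "-".
            if (parts.length < 3 || parts[0].equals("-")) {
                continue;
            }
            int changed = Integer.parseInt(parts[0]) + Integer.parseInt(parts[1]);
            hits.merge(author, changed, Integer::sum);
        }
        return hits;
    }

    public static void main(String[] args) {
        String log = String.join("\n",
                "author alice",
                "10\t2\tsrc/Parser.java",
                "0\t40\tsrc/Legacy.java",
                "",
                "author bob",
                "5\t5\tsrc/Parser.java",
                "-\t-\tdocs/logo.png");

        System.out.println(hitsByAuthor(log)); // {alice=52, bob=10}
    }
}
```

Note that deleting 40 lines of legacy code counts as 40 hits here, whereas a LoC metric would record it as negative progress.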

Beyond comparing the developer effort, HoC helps you gain an understanding of which parts of your system are getting the most attention—and then you can assess if that’s in line with what the business or customer needs. Further, you can measure the effort against your estimates to determine if you planned properly or if you need to assign more developers to work in an area with high HoC—both of which are reasons HoC could be a more valuable unit of measurement than LoC.

In his essay, Bugayenko shows how HoC is more objective than LoC and is more consistent, because it’s a number that always increases and takes the complexity of the codebase into account.

Whether it’s measuring HoC, using expanded Agile metrics, leveraging consistent soft-skills considerations, or valuing developers who have good ideas and problem-solving skills, there are many alternatives to measuring with LoC. Using even a small combination of these alternatives may finally slay the LoC-ness monster.

Dig deeper

- Curly Braces #9: Was Fred Brooks wrong about late software projects?

- Reduce technical debt by valuing comments as much as code

- Refactoring Java, Part 3: Regaining business agility by simplifying legacy code

- Book review: A Philosophy of Software Design

- Lazy Java code makes applications elegant and sophisticated