I wrote a Database In-Memory Compression blog post back in October and then we did an Ask TOM Office Hours session with a focus on compression and during that session a couple of items came up that I thought would be worth exploring.

The first item that I thought was worth addressing is the ability to use Automatic Data Optimization (ADO) policies to increase the level of compression for a populated object. ADO support for Database In-Memory was introduced in Oracle Database 12.2. Creating an ADO policy for compression can provide an automated way to increase the compression of an object based on Heat Map access, time or a custom PL/SQL policy.

In the compression blog post I showed that there is a tradeoff between compression level and query speed, and the best query speed was seen with the default MEMCOMPRESS FOR QUERY LOW level, but even at the highest compression level you can still see significant benefit in query speed versus not having the object populated at all.

As newer objects are created they could benefit the application if they were populated in the IM column store. However, there are older objects that still provide significant benefit from being populated as well. This is where ADO can help. Using ADO policies you can automatically compress object(s) in the IM column store to a higher level and make room for other objects to be populated.

Here’s an example to illustrate the point. What if our LINEORDER table is partitioned and we want to compress partitions that are older than 30 days from the default MEMCOMPRESS FOR QUERY LOW to MEMCOMPRESS FOR CAPACITY HIGH. The following policy will do that:

ALTER TABLE lineorder MODIFY PARTITION p201601 ILM ADD POLICY SET INMEMORY MEMCOMPRESS FOR CAPACITY HIGH AFTER 30 DAYS OF CREATION;

This policy will then cause the partition to be compressed at the capacity high level after 30 days. You might even want to implement another policy to evict the segment after 90 days to effectively create an in-memory lifecycle for the partition. This can extend the amount of usable space in the IM column store and allow more objects to be populated.

A second item that came up was that even if the query speed of an object is impacted by using a higher level of compression, is it possible that if the query has filter predicates that the impact could be much lower? In the Ask TOM Office Hours session I created multiple LINEORDER tables so that different compression levels could be shown at the same time. I added a suffix like QL and CH for query low and capacity high. The following shows a query run on each of those tables with filter predicates to illustrate that in a more real world scenario where In-Memory storage indexes are likely to prune out IMCUs, the impact on query time of higher levels of compression can actually be much lower.

Here’s an example using the LINEORDER table populated with the default compression level of MEMCOMPRESS FOR QUERY LOW. The table is called LINEORDER_QL and the query adds filter predicates to look at the shipping mode and order priority for just one day:

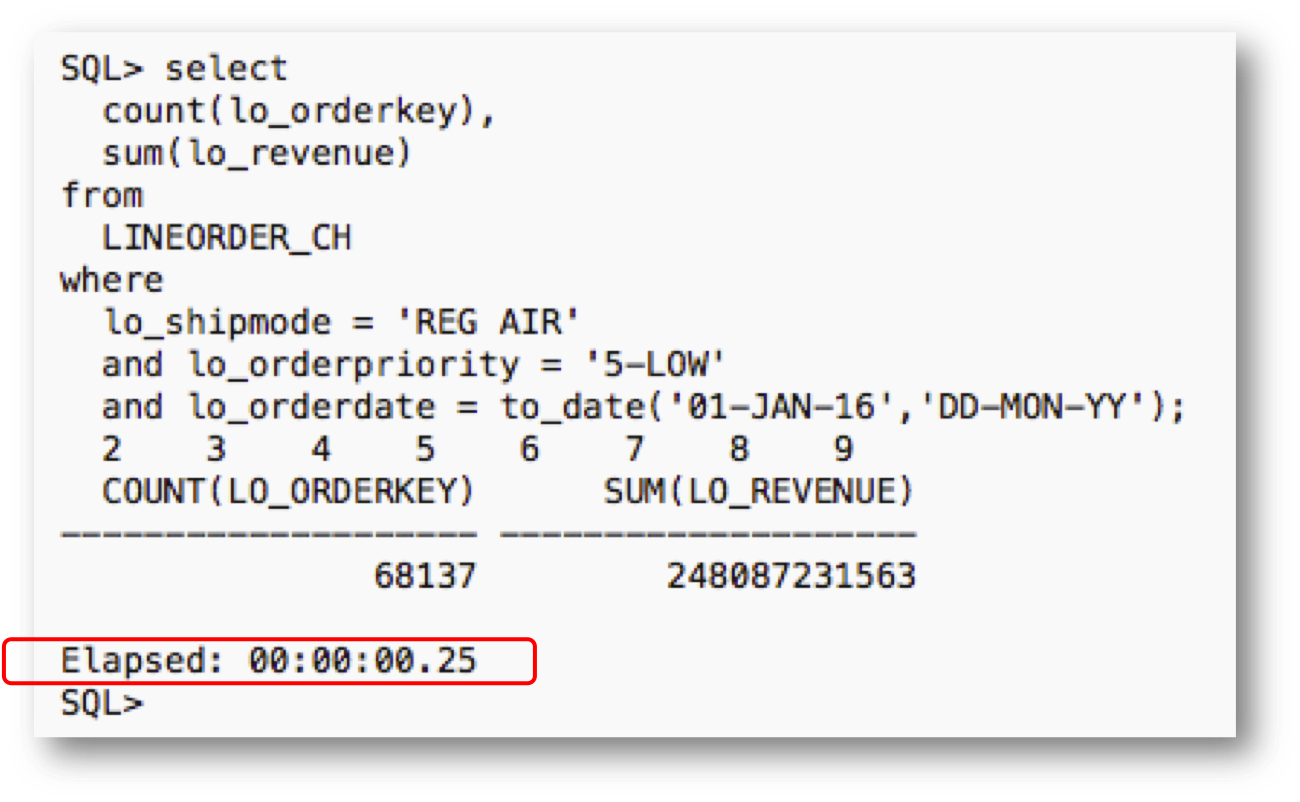

Notice that the query runs in only 0.04 seconds. Let’s take a look at the same query run against the LINEORDER table populated with MEMCOMPRESS FOR CAPACITY HIGH. The table name is now LINEORDER_CH:

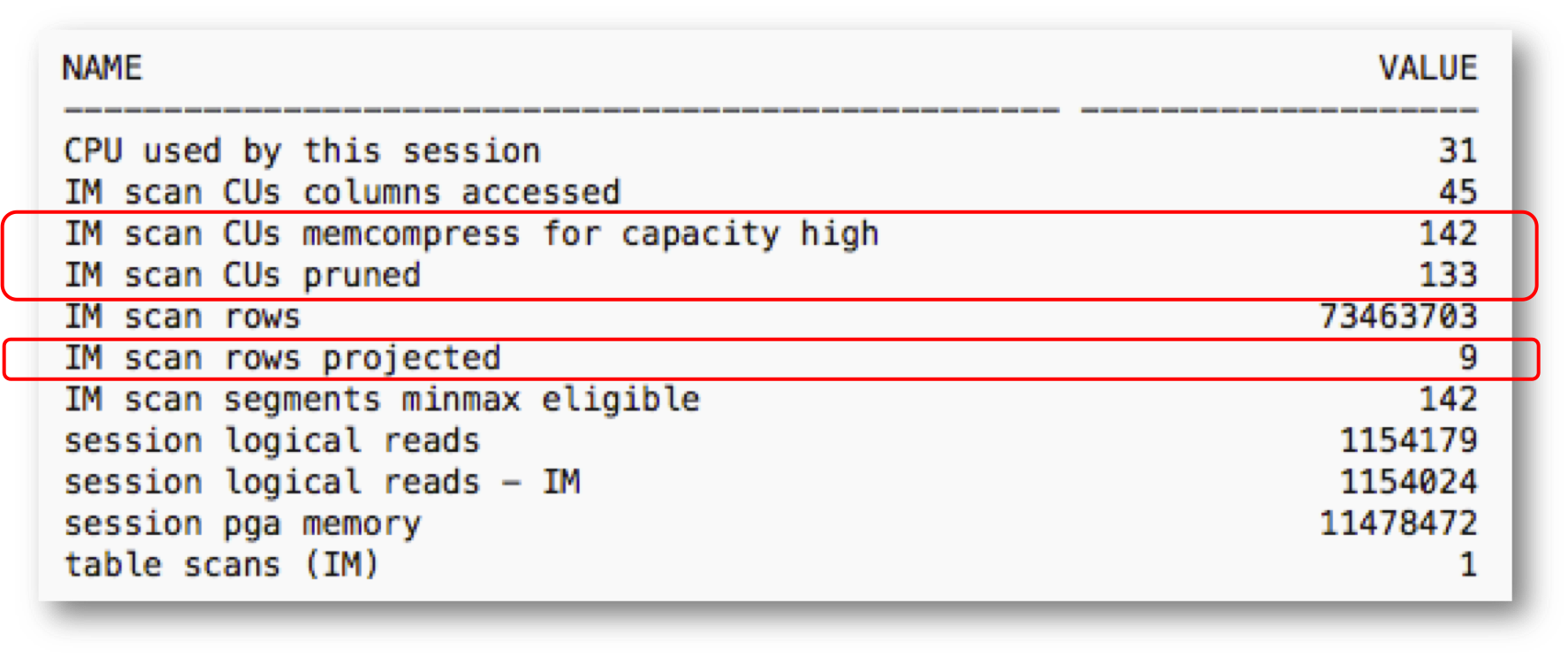

Notice that it runs in 0.25 seconds. While that is slower than the QUERY LOW query it is so fast that it is probably insignificant to the application, and given that the table takes up about a third of the memory that the query low version takes it makes using a higher compression level even more attractive. You might be wondering why these versions are so much faster than without filter predicates and the following shows the session statistics for the CAPACITY HIGH query:

Note that of the 142 IMCUs that make up the populated table (i.e. IM scan CUs memcompress for capacity high), Oracle was able to prune out 133 of those IMCUs (i.e. IM scan CUs pruned). This shows up in only having to return values for 9 IMCUs (i.e. IM scan rows projected). Less work means the query runs faster. The same is true of the QUERY LOW query. As I said earlier, In-Memory storage indexes can help make the performance impact of compression much less noticeable.

Hopefully this gives you more information to consider about making use of the different Database In-Memory compression levels, and allows you to populate more objects in your IM column store.

Original publish date: November 30, 2018