Meta’s Llama 4 models – Llama 4 Scout and Llama 4 Maverick are here! These models can help people build more personalized multimodal experiences, based on large improvements in image and text understanding and instruction following, and can accommodate a range of use cases and developer needs. Whether you’re building apps for reasoning, summarization, or conversational AI, Llama 4 Scout and Maverick deliver powerful performance with open access. Llama 4 models can be run, fine-tuned and deployed in Oracle Cloud Infrastructure (OCI) Data Science. Whether you’re a data scientist or a developer, OCI offers the infrastructure and tools to move fast in the evolving world of Generative AI.

What are Llama 4’s improvements?

Meta’s Llama 4 family includes:

- Llama 4 Scout: A powerful multimodal model that supports context window of up to 10M tokens with 17B active parameters, 16 experts and a total of 109B parameters that can fit on a H100 (with Int4 quantization).

- Llama 4 Maverick: A 17B active parameter model with 128 experts and a total of 400B parameters, delivering strong performance to cost ratio for reasoning and coding while remaining open-weight and customizable and can fit on a H100.

The new Llama 4 models use a mixture of experts (MoE) architecture. In MoE models, a single token activates only a fraction of the total parameters. MoE architectures are more compute efficient for model training and inference and, given a fixed training FLOPs budget, deliver higher quality models compared to dense architectures. Llama 4 models are designed with native multimodality, incorporating early fusion to seamlessly integrate text and vision tokens into a unified model backbone.

Llama 4 Scout and Llama 4 Maverick models are available today on Meta’s website llama.com and Hugging Face, an online model repository. Oracle Cloud Infrastructure (OCI) Data Science is a platform for data scientists and developers to work with open source models powered by OCI’s compute infrastructure with features that support the entire machine learning lifecycle. You can bring in Llama 4 models from Hugging Face or Meta to use inside OCI Data Science effortlessly.

Working with Llama 4 models through the Bring-Your-Own-Container approach

OCI Data Science supports a Bring Your Own Container approach for model deployment and jobs, which enables you to deploy and fine tune the Llama 4 models. The Bring-Your-Own-Container approach requires downloading the model from the host repository, either through the Llama website or Hugging Face, and creating a Data Science model catalog entry. Next, you would download the latest vLLM container and push it to the OCI Registry. The newly released vLLM 0.8.3 is compatible with the Llama 4 models. Then, you can deploy the model or run a fine tuning job with the vLLM container image in the OCI Registry. Once the model is deployed, you’re set to invoke the model with an HTTP endpoint. For more details, please check out our tutorials Deploy LLM Models using BYOC and Batch Inferencing guide.

Llama 4 Scout is a 17 billion active parameter model with 16 experts while Llama 4 Maverick is a 17 billion active parameter model with 128 experts. Llama 4 Scout (with Int4 quantization) fits on a H100 while Llama 4 Maverick fits on a H100. Working with a H100 in OCI Data Sciences requires a reservation for the shape. You can do so by submitting a service request and specifying the shape and region you are interested in using the shape. For additional information on working with GPU in OCI Data Science, please check this page.

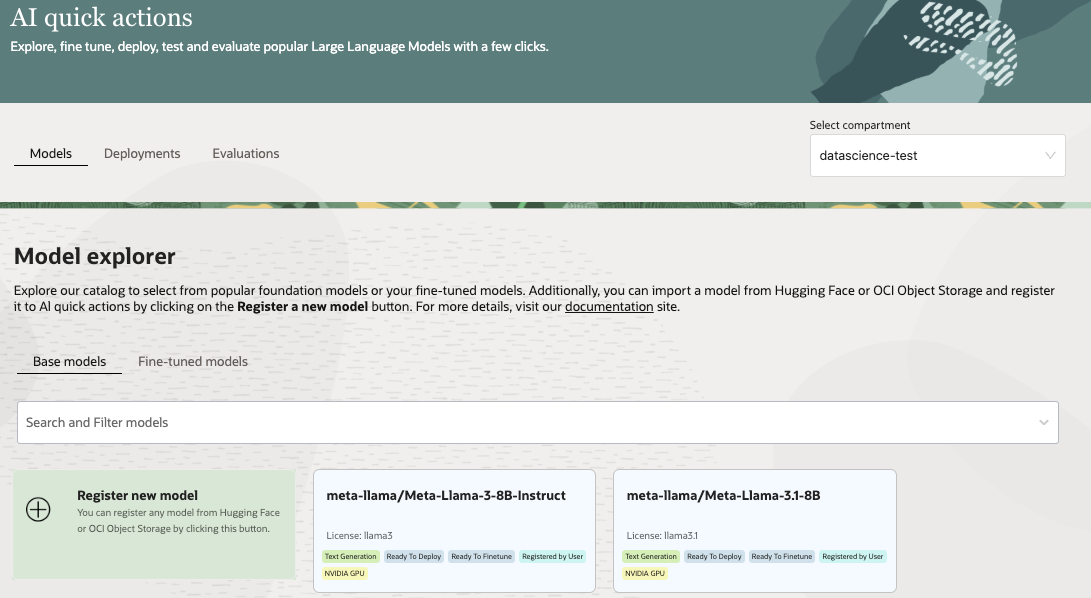

Coming Soon: OCI Data Science AI Quick Actions, shown in the image bellow, is a no code solution for deploying and fine tuning LLMs. AI Quick Actions already supports Llama 3.3 and will soon support Llama 4 models, providing a simplified process for working with these models.

Get started with OCI Data Science and Llama 4 models today!

OCI Data Science enables you to stay up to date with AI developments. Through our partnership with Meta, the availability of Llama 4 models in OCI Data Science represents a step forward for anyone looking to build, deploy, and refine AI solutions.

Explore:

References

- Meta’s release blog post