Originally published September 25, 2019

High performance database deployment relies on effective management of system resources. Only Oracle provides fully integrated management of system resources including the Oracle database, server compute resources, and I/O on the Exadata platform.

Demo Video

The Resource Manager demo video shows 4 pluggable databases (PDBs) within a container database (CDB) sharing a single Exadata quarter rack. The demo shows how Resource Manager controls how system resources including CPU and I/O are shared across databases.

The full demo can be viewed here: https://youtu.be/yZtFBPIEdYk

Across Databases & Within Databases

The two major use-cases for Oracle Resource Manager are to manage resource allocations across databases and within databases. Resource Manager is used to ensure databases each receive their allotted resources and prevents the “noisy neighbor” problem. Resource Manager can also be used within a single database using Consumer Groups to prevent users or jobs from consuming too many resources.

Virtual Machines for Isolation

Oracle strongly recommends AGAINST using Virtual Machine (VM) technology as a resource management vehicle. VMs should be used for ISOLATION where necessary, whereas Resource Manager (as the name implies) is for Resource Management. Proliferation of VMs simply produces virtual-sprawl and Oracle Resource Manager is easier, more flexible, more dynamic, and more effective.

Virtual Machines should be used for cases where isolation is required for mission critical applications, for purposes of Data Governance where sensitive data requires greater security and cases where maintenance schedules dictate isolation.

Demo Workload

The demo uses 4 databases running simulated workloads created using Swingbench from Dominic Giles. One of these databases (PDB1) runs a simulated Data Warehouse workload, while the remaining 3 databases (PDB2, 3, and 4) simulate OLTP workloads. The Swingbench tool can be downloaded from Dominic’s web site here: http://dominicgiles.com/swingbench.html

Container Database Home

The demo begins at the Container Database Home page, which shows activity on the container, including the 4 Pluggable databases. Notice that all of the databases are consuming equal amounts of resource on the system as shown in the consolidated (Container Level) Active Sessions graph. This is because Resource Manager is constraining all of these databases to the same amount of resource. The CPU graph (in green at bottom left) peaks at approximately 40% CPU usage due to the Resource Manager plan.

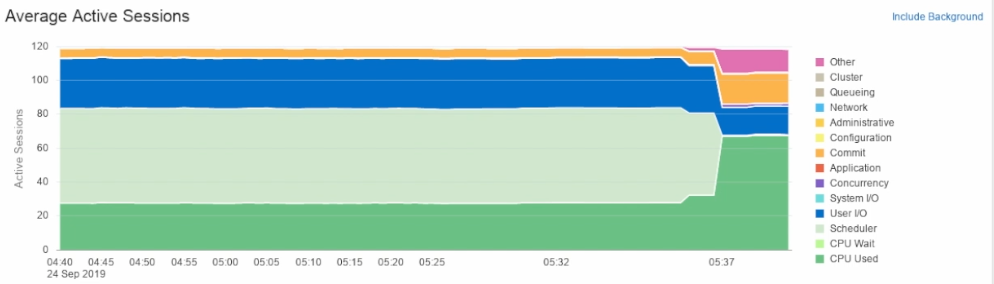

High Scheduler Waits

Resource Manager is throttling these databases, so we see high waits for the “scheduler” in the Average Active Sessions detailed performance graph. This same wait event will be seen as “resmgr: cpu quantum” in some tools, which simply means sessions are waiting for Resource Manager (resmgr) to allocate a “quantum” (or quantity) of CPU time. Based on this information, we know the users of these databases are requesting more resources than we have allocated to them.

Available System Resources

We can easily see the system has sufficient free resources including CPU and I/O by looking in Oracle Enterprise Manager (OEM) as well as using tools such as the Linux “top” command. This tells us that we can certainly allocate more resources to these databases to improve performance. Output from the TOP command shows 29.4% user, 4.6% system, and 64.7% idle time. This system clearly has extra capacity available.

OEM will also show the same information, although the collection interval of OEM means that the information is slightly delayed and will be an average number over the collection period. There may be slight variations in the information displayed, but it’s otherwise telling us the same story. This system has sufficient free capacity.

OEM also shows us the I/O utilization on the system at the Exadata storage layer. We see that the system is using a maximum of around 18% of Exadata I/O capacity. It’s possible to closely manage CPU and I/O separately on an Exadata system, but in this demonstration we are using a SINGLE setting for both. The Exadata storage inherits the same percentage allocation of I/O resources used in the CPU layer.

The tight integration of Database Resource Manager (DBRM) with the Exadata I/O Resource Manager (IORM) means that only a single setting is required to manage the entire stack of resources.

Workload Characteristics

This system includes 4 databases with different workload characteristics, including a Data Warehouse (PDB1) and 3 OLTP databases (PDB2, PDB3, and PDB4). The Data Warehouse workload is clearly I/O bound as we can see from the blue in the graph below:

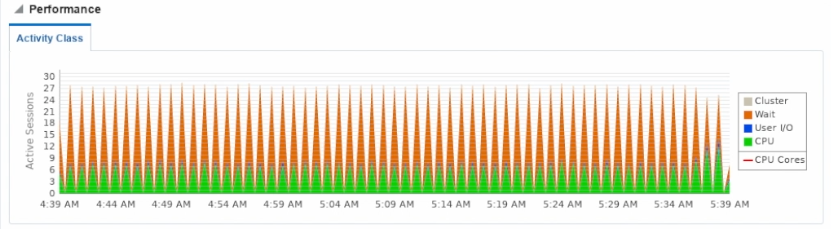

The remaining 3 databases all have a similar workload profile that is more CPU bound, but have high waits on CPU as shown in orange below:

Based on this information, we know the Resource Plan needs to be changed for this system. We know the Data Warehouse needs significantly more resources, including CPU, parallel servers, and I/O. We will also be over-provisioning the resources slightly to allow these databases to take advantage of quiet periods during the day or week.

Resource Plans

In this demo, we are using CDB Resource Plans to control the allocation of resources across databases. It’s also possible to manage the resources using the “Instance Caging” feature of the Oracle Resource Manager, but that approach is not shown in this demo. The Instance Caging feature is simply another way to denote how much resource is allocated to a particular database using the CPU_COUNT setting. As with Resource Plans, IORM allocates the same percentage of I/O resources to each database based on the CPU allocation (whether specified through a Resource Plan or CPU_COUNT). The currently active Resource Plan looks like this:

As shown in the video, we activate a new Resource Plan that gives 70% of resources (CPU and parallel processes) to the Data Warehouse, and 20% to the remaining 3 OLTP databases. Of course this means the system is over-provisioned at 130% of capacity. That over-provisioning means that the databases will take advantage of quiet periods:

Immediate Impact!

Resource Manager changes take effect immediately. We can see this using a command such as “top”, while OEM takes a while to see the changes. We could set a shorter OEM data collection interval, but this simply generates unnecessary overhead on the system. We can see the changes taking effect looking at “top” below:

We can see the system is now running at 65.9% user, 12.0% system, and 16.3% idle. The databases have clearly started using more CPU immediately once the new plan was activated.

OEM Starts Showing Changes

Within about a minute, we start seeing the Resource Plan changes taking effect in OEM. The Cluster Database Home shows higher usage of CPU resources:

The detailed Activity Class graph for PDB1 shows the database waits on I/O are starting to disappear. If we allowed this to run longer, we would see nearly all of the blue disappear from the graph:

We see a similar impact on the OLTP databases (PDB2, PDB3, and PDB4), where the waits have started to lessen. We see much less orange in the graph, and more time “on CPU” shown in green:

If we allow this demo to run longer, you will eventually see most of the orange disappear as well. We decided that the Data Warehouse was more critical for the purposes of this demo, so these OLTP databases will still have some waits for CPU.

Scheduler Waits Almost Gone

Recall the wide band of “scheduler” waits from earlier in this demo. Those waits have almost entirely disappeared and the databases are making much better use of the available system resources:

I/O Resources Follow CPU Settings

As noted previously, the Exadata I/O Resource Manager (IORM) inherits the same percentage allocations set in the Database Resource Manager. This applies to Resource Plans as well as Instance Caging (setting CPU_COUNT). There is not need to set IORM allocations separately if you simply want to match the DBRM resource allocations. You simply need to set the IORM objective to “auto” for IORM to follow the DBRM percentages.

Conclusion

Managing resources is made simpler using the built-in resource management capabilities of Exadata, including the Oracle Database Resource Manager (DBRM) and it’s companion, the Exadata I/O Resource Manager (IORM). This capability is much more effective and does not carry the heavy administrative burden of using Virtual Machines for the purpose of Resource Management. Oracle recommends using VMs for purposes of ISOLATION where necessary, and Resource Manager where it fits best. In short, Resource Manager is:

- Integrated

- Dynamic

- Easy to Manage

Be sure to watch for additional content on this and other topics related to Oracle database and Exadata.

See Also…