This is a guest blog written by the Avesha team.

A multi-cloud or hybrid strategy gives enterprises the freedom to use the best available cloud native services for revenue-generating workloads. Organizations use multi-cloud deployments for use cases such as (a) cloud bursting, (b) disaster recovery, and (c) multi-site active-active deployments.

Each cloud provider offers enterprises a unique value proposition for their workloads. For example, beyond its compute capabilities, Oracle Cloud brings best-in-class database management, a surrounding analytics ecosystem, and other cloud native offerings. These diverse value propositions make multi-cloud an attractive option for enterprises. However, simplifying how workloads are deployed and connected across clusters remains a big challenge. With Avesha’s KubeSlice, in partnership with Oracle Cloud Infrastructure (OCI) and its managed Oracle Container Engine for Kubernetes (OKE), enterprises can solve their multi-cloud challenges.

KubeSlice creates a virtual cluster across multiple clusters that helps accelerate application velocity by removing the friction of network layout, multi-tenancy and microservice reachability across clusters.

Proliferation of Kubernetes

In the context of hybrid and multi-cloud deployments, it’s important to note that Kubernetes and its flexible platform have profoundly changed the adoption of containerized application architectures. Kubernetes has become the de facto standard for orchestrating ephemeral containerized workloads, and the resulting proliferation of microservices in enterprise environments has driven the growth of the Kubernetes ecosystem.

Challenges

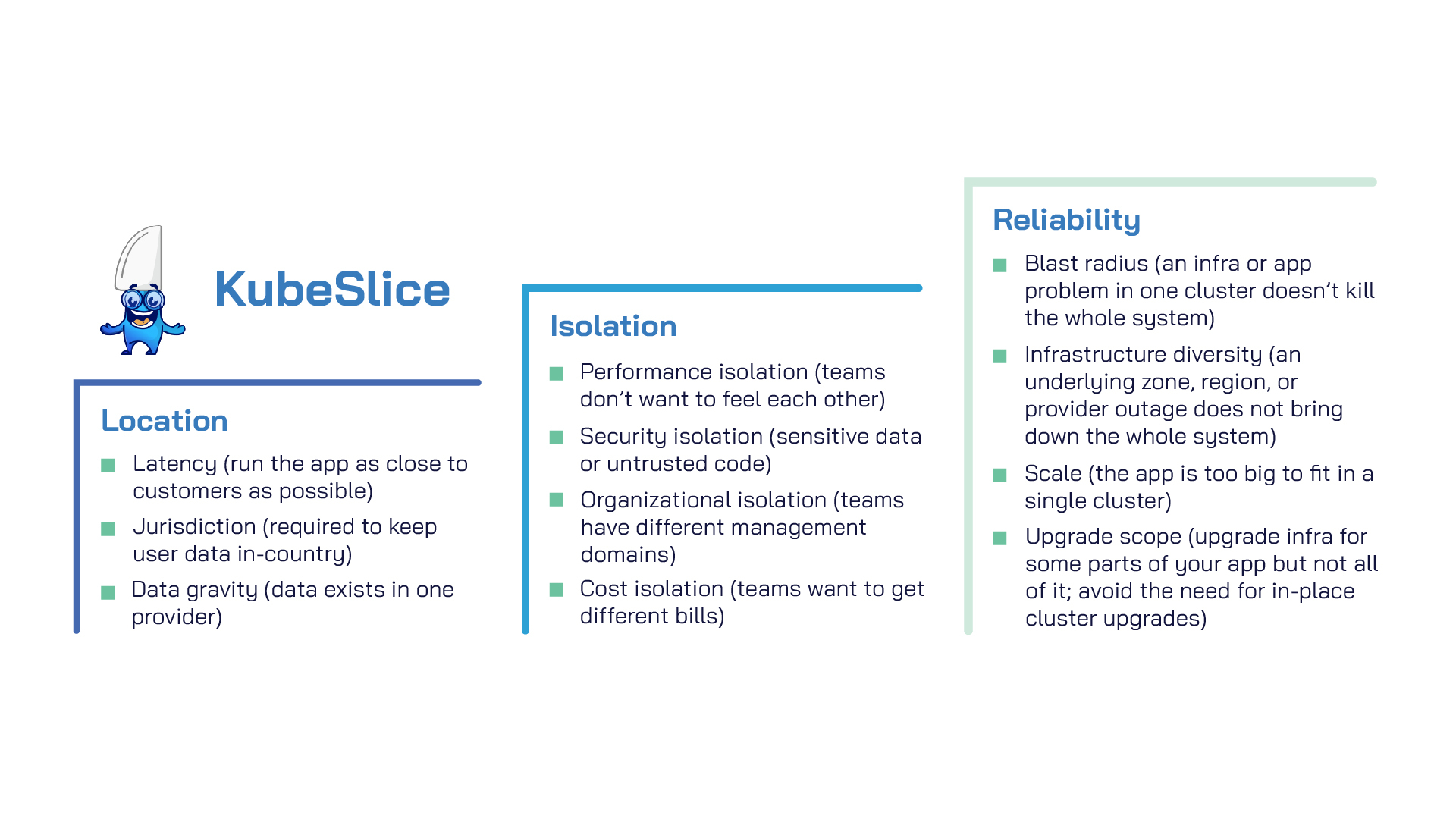

Deploying and effectively managing Kubernetes at scale demands robust design processes and rigorous administrative discipline. Enterprises face decisions based on the type of workload: highly transactional, data-centric, or location-specific. Workload distribution also needs to account for factors like latency, resiliency, compliance, and cost.

In addition, the complexity of microservices has made interconnections the focal point of modern application architectures. Kubernetes handles this complexity well as long as you deploy all microservices in a single cluster and maintain trust between all the services running within that cluster. By default, Kubernetes treats resources such as CPU, memory, StorageClasses, and PersistentVolumes as objects shared across the cluster.

Here’s where things get complex. Multiple teams deploying applications in the same cluster leads to painful marshaling of shared resources, including security concerns and resource contention from potential “noisy” neighbors. Kubernetes offers the namespace, a soft construct that provides some isolation between applications, but managing and assigning namespace resources for teams with multiple applications creates operational challenges that must be governed constantly. Kubernetes does not natively treat multi-tenancy as a first-class construct for users and resources.
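To make that governance burden concrete, here is a minimal sketch of the per-namespace bookkeeping that a Kubernetes ResourceQuota implies: a new pod is admitted only if its requests fit within the namespace’s remaining quota. The `NamespaceQuota` class, its `admit` method, the team names, and the quota values are all hypothetical illustrations; in a real cluster this is expressed as ResourceQuota objects enforced at admission time by the API server, not with code like this.

```python
from dataclasses import dataclass


@dataclass
class NamespaceQuota:
    """Hypothetical per-namespace quota, loosely modeled on a ResourceQuota."""
    cpu_millicores: int   # hard CPU limit for the namespace, in millicores
    memory_mib: int       # hard memory limit for the namespace, in MiB
    used_cpu: int = 0
    used_memory: int = 0

    def admit(self, cpu: int, memory: int) -> bool:
        """Admit a pod only if its requests fit in the remaining quota."""
        if self.used_cpu + cpu > self.cpu_millicores:
            return False
        if self.used_memory + memory > self.memory_mib:
            return False
        # Record the pod's requests against the namespace's usage.
        self.used_cpu += cpu
        self.used_memory += memory
        return True


# One namespace per team, each with its own (illustrative) quota.
quotas = {
    "team-a": NamespaceQuota(cpu_millicores=2000, memory_mib=4096),
    "team-b": NamespaceQuota(cpu_millicores=1000, memory_mib=2048),
}

print(quotas["team-a"].admit(cpu=1500, memory=1024))  # True
print(quotas["team-a"].admit(cpu=1000, memory=1024))  # False: CPU would exceed 2000m
print(quotas["team-b"].admit(cpu=500, memory=512))    # True
```

Every team, application, and cluster added multiplies the number of these quotas that platform teams must size, track, and revise by hand.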

Mature deployments – the challenges grow

As organizations mature in running Kubernetes, they expand their ecosystems to include multi-cluster deployments. These deployments are hosted entirely in data centers or distributed across data centers and hyperscalers’ clouds. Enterprises choose multi-cluster environments for reasons such as team boundaries, latency sensitivity (applications need to be closer to customers), geographic resiliency, and data jurisdiction (regulations such as the GDPR and the California Consumer Privacy Act (CCPA) that restrict where user data can reside and move). As more applications are deployed across clusters, there is a growing need for them to reach applications running in other clusters.

Platform teams that provide infrastructure to application developers face tedious operational challenges: (1) extending namespace sameness to multi-cluster deployments while maintaining tenancy, (2) governing cluster resources, and (3) configuring clusters without environment configuration drift.

Kubernetes Networking – the ultimate challenge

Kubernetes networking is no small feat. We are used to defining domains and firewalls wherever we need boundaries and control, but mapping that model onto Kubernetes is challenging. Yes, you need more flexibility than the namespace alone provides. Connecting multiple clusters, and distributing workloads across clouds on top of that, requires serious planning from NetOps teams.

KubeSlice – the efficient hybrid/multi-cloud cluster connectivity & management solution

Avesha’s KubeSlice combines Kubernetes network services and manageability in a framework to accelerate application deployment in a Kubernetes environment. KubeSlice achieves this by creating logical application “Slice” boundaries which allow pods and services to communicate seamlessly.

Let’s take a deeper look at how KubeSlice simplifies the management and operation of Kubernetes environments.

KubeSlice brings manageability, connectivity, and governance into one framework for deployments that need tenancy within a cluster, and extends that tenancy across clusters, whether they run in data centers, in a single cloud, or across multiple clouds.

KubeSlice is driven by a Kubernetes operator and a Custom Resource Definition (CRD) that define the “Slice” construct, which is analogous to a tenant. A tenant can be (1) a set of applications that must be isolated from the traffic of other applications, or (2) a team that needs its own security secrets and strict isolation from others who have access to the applications.
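The tenant idea can be sketched as a small data model: a slice names a set of clusters and a set of namespaces, and two pods may communicate over the slice only if both belong to the same slice, even when they run in different clusters. This is a simplified, hypothetical model for illustration only; the `Slice` and `Pod` classes, the helper functions, and the cluster and namespace names are assumptions, and KubeSlice’s actual Slice custom resource has its own schema that this does not reproduce.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Slice:
    """Hypothetical, simplified slice: a named tenant spanning clusters."""
    name: str
    clusters: frozenset[str]
    namespaces: frozenset[str]


@dataclass(frozen=True)
class Pod:
    cluster: str
    namespace: str


def slice_of(pod: Pod, slices: list[Slice]) -> Slice | None:
    """Return the slice a pod belongs to, or None if it is on no slice."""
    for s in slices:
        if pod.cluster in s.clusters and pod.namespace in s.namespaces:
            return s
    return None


def can_communicate_over_slice(a: Pod, b: Pod, slices: list[Slice]) -> bool:
    """Two pods may talk over a slice only if both belong to the same slice."""
    sa, sb = slice_of(a, slices), slice_of(b, slices)
    return sa is not None and sa == sb


# Illustrative tenants: "payments" spans two clusters, "analytics" lives in one.
payments = Slice("payments", frozenset({"oke-east", "gke-west"}),
                 frozenset({"checkout", "ledger"}))
analytics = Slice("analytics", frozenset({"oke-east"}), frozenset({"reports"}))
slices = [payments, analytics]

checkout_oke = Pod("oke-east", "checkout")
ledger_gke = Pod("gke-west", "ledger")
reports_oke = Pod("oke-east", "reports")

print(can_communicate_over_slice(checkout_oke, ledger_gke, slices))   # True: same slice, different clusters
print(can_communicate_over_slice(checkout_oke, reports_oke, slices))  # False: different slices, same cluster
```

Note that the boundary follows the tenant, not the cluster: two pods in the same cluster can be isolated, while two pods in different clouds can share a slice.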

KubeSlice provides three types of functions to the pods in a slice: Kubernetes Services, Network Services, and Multitenancy & Isolation.

Kubernetes Services: KubeSlice governs namespace management and application isolation by managing Kubernetes objects, namespaces, and RBAC rules for better operational efficiency. KubeSlice enables sharding or “slicing” of clusters for a set of environments, teams or applications that would expect to be reasonably isolated but share common compute resources and the Kubernetes control plane.

Network Services: Kubernetes clusters need fully integrated connectivity and pod-to-pod communication, with namespaces propagated across clusters. Existing intra-cluster communication stays local to the cluster and uses the CNI interface. Out of the box, KubeSlice isolates network traffic between clusters by creating an overlay network for inter-cluster communication. It does this by adding a second interface to each pod: local traffic remains on the CNI interface, while traffic bound for other clusters is routed over the overlay network to its destination pod. Because the primary CNI is left untouched, KubeSlice is CNI-agnostic.
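The two-interface split can be pictured as a tiny per-pod routing table with longest-prefix matching: destinations inside the slice’s overlay subnet go out the second interface, and everything else, including pods in the local cluster, uses the default route on the CNI interface. This is a hypothetical sketch, not KubeSlice’s implementation; the interface names `eth0` and `overlay0`, the `10.1.0.0/16` slice subnet, and the `10.244.x.x` local pod address are assumptions chosen for illustration, and it handles IPv4 only.

```python
import ipaddress

# Hypothetical per-pod routing table. The slice subnet, the interface names,
# and the single overlay route are illustrative assumptions.
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "eth0"),        # default: primary CNI interface
    (ipaddress.ip_network("10.1.0.0/16"), "overlay0"),  # slice subnet: second interface
]


def pick_interface(dest_ip: str) -> str:
    """Return the interface of the longest-prefix route matching dest_ip (IPv4 only)."""
    addr = ipaddress.ip_address(dest_ip)
    matches = [(net, iface) for net, iface in ROUTES if addr in net]
    # The most specific (longest) prefix wins, as in ordinary IP routing.
    _, iface = max(matches, key=lambda m: m[0].prefixlen)
    return iface


print(pick_interface("10.244.3.17"))  # eth0: local pod, handled by the cluster's CNI
print(pick_interface("10.1.2.9"))     # overlay0: slice peer in another cluster
```

Because the overlay only adds a more specific route, the cluster’s existing CNI and its routes keep working unchanged, which is what makes this approach CNI-agnostic.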

KubeSlice preserves a first principle of Kubernetes networking, the ability of any pod to talk to any other pod, by creating Slices that let pods communicate seamlessly across clusters. The KubeSlice slice interconnect also solves the complex problem of overlapping IP addresses between cloud providers, data centers, and edge locations.
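The sketch below shows why overlapping addresses are a problem and one common way an overlay can sidestep it: give each cluster a distinct block carved from a dedicated slice subnet, so slice traffic never depends on the clusters’ own pod CIDRs being unique. The cluster names and CIDRs are made up, and the one-/24-per-cluster allocation is an assumption for illustration, not KubeSlice’s documented allocation policy. It relies only on Python’s standard `ipaddress` module.

```python
import ipaddress

# Hypothetical pod CIDRs for clusters in different locations (illustrative values).
cluster_pod_cidrs = {
    "oke-phoenix": ipaddress.ip_network("10.244.0.0/16"),
    "dc-frankfurt": ipaddress.ip_network("10.244.128.0/17"),
    "edge-store-42": ipaddress.ip_network("172.16.0.0/16"),
}


def overlapping_pairs(cidrs):
    """List every pair of clusters whose pod CIDRs overlap."""
    names = sorted(cidrs)
    return [
        (a, b)
        for i, a in enumerate(names)
        for b in names[i + 1:]
        if cidrs[a].overlaps(cidrs[b])
    ]


# A plain flat network could not route between these two clusters unambiguously.
print(overlapping_pairs(cluster_pod_cidrs))  # [('dc-frankfurt', 'oke-phoenix')]

# Assumed scheme: carve a dedicated slice subnet into one /24 per cluster.
slice_subnet = ipaddress.ip_network("10.1.0.0/16")
per_cluster = dict(zip(sorted(cluster_pod_cidrs), slice_subnet.subnets(new_prefix=24)))
for name, net in per_cluster.items():
    print(name, net)
```

The loop prints `dc-frankfurt 10.1.0.0/24`, `edge-store-42 10.1.1.0/24`, and `oke-phoenix 10.1.2.0/24`: the slice blocks never overlap, even though two of the underlying pod CIDRs do.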

Multitenancy & Isolation: KubeSlice enables the creation of multiple logical Slices in a single cluster or across a group of clusters, providing true isolation all the way from the network to the application domain.

Use Cases

KubeSlice, because of its simple deployment & robust capabilities, can be the platform of choice for the following enterprise use cases:

- Isolation for enterprise teams: Each team operates multiple workloads, such as services or batch jobs. These workloads frequently need to communicate with each other and require different levels of preferential treatment. KubeSlice can isolate each team’s set of applications by defining sets of compute resources dedicated to that team (especially GPU resources).

- Single operator for multi-customer enterprise (aka SaaS provider): B2B software vendors increasingly deliver their software as SaaS and need tighter isolation between customer A and customer B. Today, most such services are delivered with hard cluster boundaries, which leads to cluster sprawl. Cost optimization and operational efficiency are critical considerations.

- Hybrid deployment: Enterprises may run a data-centric application (such as an Oracle database) in a data center and want to keep the database instance there for compliance reasons, while running workloads in managed OKE.

- Cloud Bursting: According to the Flexera 2021 State of the Cloud Report, as summarized by Accenture, 31% of enterprises are looking for hybrid solutions for workload bursting (cloud bursting). This covers enterprises that need extra capacity on demand but still need connectivity back to the data store in the data center, as well as web properties running in the cloud (OCI) that need to reach back to the data center for database access.

- Multi-cloud deployment: According to a report by Jean Atelsek of 451 Research, 76% of companies are adopting multi-cloud and hybrid cloud approaches. In a similar survey from Accenture, 45% of enterprises cited “data integration between clouds” as a use case for multi-cloud architecture. This fits enterprises that want to take advantage of services like MySQL HeatWave, Oracle E-Business Suite, or Oracle Autonomous Database in OCI while running workloads in GCP, Azure, AWS, or any other cloud provider.

Ready to get started?

KubeSlice makes Kubernetes simple, manageable, and multi-tenant at scale. Avesha has a suite of products that fast-tracks your digital transformation and brings ease, agility, and scale to your application deployments.

Here are some handy links for exploring the Avesha and Oracle partnership further:

- If you’d like to try Avesha for yourself, you can explore the KubeSlice Community Edition.

- If you want to experience Avesha on Oracle Container Engine for Kubernetes for yourself, sign up for an Oracle Cloud Infrastructure account and start testing today!

- For more information, see the Oracle Container Engine for Kubernetes documentation.