Hello there,

This is another introductory post where I show some initial examples and code so you can start playing with Oracle Streaming Service (OSS).

For more introductions, check out:

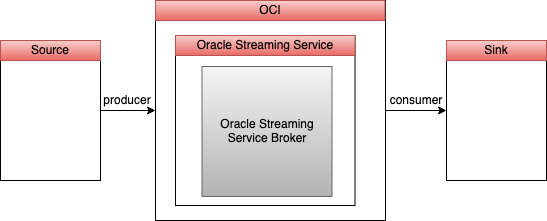

OSS has the notion of producers and consumers: a producer publishes messages to a stream, and a consumer reads them from it.

Producer and Consumer architecture

Did you know that you can create a producer and a consumer with just a few lines of code?

The OSS documentation covers use cases and explains why you might use Streaming in your applications, so the focus here is on how.

Let’s start

Set up a stream (the OSS counterpart of a Kafka topic) for this demo. Check out the OSS Getting Started blog post for the initial account details.

1) We’ll need the ID of the stream pool that contains our stream. If you haven’t specified a pool, your stream is placed in the “Default Pool”. Click the pool name on the stream details page.

2) On the stream pool details page, copy the stream pool OCID and keep it handy for later.

3) On the user details page, generate an auth token.

4) Copy the token and keep it handy; you cannot retrieve it after you leave the ‘Generate Token’ dialog. It will be used as {authToken}.

For the bootstrap servers, use your region's streaming endpoint on port 9092:

```
streaming.{region}.oci.oraclecloud.com:9092
```

Oracle provides several SDKs, and each one includes a Streaming example:

OSS is Kafka-compatible, which means you can use the Kafka APIs, including the Kafka Producer and Consumer. You can check here for more details on the Kafka Producer and Consumer.

So let’s look at an example using the Kafka API.

To work with Kafka, you need to set the following properties on your Kafka client.
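As a minimal sketch, these are the settings an OSS client typically needs, collected with plain `java.util.Properties` so the helper compiles without Kafka on the classpath. It assumes the SASL username format `{tenancyName}/{userName}/{streamPoolOcid}` described in Oracle's Kafka-compatibility documentation (worth verifying for your tenancy) and uses the auth token from step 4 as the password. The class name `OssKafkaProperties` and the sample values in `main` are hypothetical.

```java
import java.util.Properties;

/**
 * Hypothetical helper that gathers the Kafka client settings for an OSS stream pool.
 * Uses only the JDK, so it compiles without Kafka on the classpath.
 */
final class OssKafkaProperties {

    private OssKafkaProperties() {
    }

    /** Returns the connection and SASL settings shared by producers and consumers. */
    static Properties forStreamPool(String region, String tenancyName, String userName,
                                    String streamPoolOcid, String authToken) {
        Properties props = new Properties();
        // Regional Kafka-compatible endpoint on port 9092.
        props.put("bootstrap.servers", "streaming." + region + ".oci.oraclecloud.com:9092");
        // TLS-encrypted connection authenticated with SASL/PLAIN.
        props.put("security.protocol", "SASL_SSL");
        props.put("sasl.mechanism", "PLAIN");
        // Assumed username format: {tenancyName}/{userName}/{streamPoolOcid}; the password is the auth token.
        String username = tenancyName + "/" + userName + "/" + streamPoolOcid;
        props.put("sasl.jaas.config",
                "org.apache.kafka.common.security.plain.PlainLoginModule required "
                        + "username=\"" + username + "\" "
                        + "password=\"" + authToken + "\";");
        return props;
    }

    public static void main(String[] args) {
        // Placeholder values for illustration only.
        Properties props = forStreamPool("us-ashburn-1", "mytenancy", "jdoe",
                "ocid1.streampool.oc1.iad.example", "example-token");
        System.out.println(props.getProperty("bootstrap.servers"));
    }
}
```

Running `main` prints `streaming.us-ashburn-1.oci.oraclecloud.com:9092`. Setting `sasl.jaas.config` per client keeps the credentials in code or configuration rather than in a JVM-wide JAAS file.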

Producer
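A minimal producer sketch, assuming the `kafka-clients` library is on the classpath. It reads the connection details from hypothetical environment variables (`OSS_REGION`, `OSS_TENANCY`, `OSS_USER`, `OSS_STREAM_POOL_ID`, `OSS_AUTH_TOKEN`, `OSS_STREAM_NAME`), assumes the `{tenancyName}/{userName}/{streamPoolOcid}` username format, and uses the stream name as the Kafka topic; `demo-stream` is a placeholder.

```java
import java.util.Properties;
import java.util.concurrent.ExecutionException;

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.clients.producer.RecordMetadata;
import org.apache.kafka.common.serialization.StringSerializer;

/** Sketch: sends a few string messages to an OSS stream through the Kafka API. */
class Producer {

    /** Builds the producer configuration from caller-supplied placeholders. */
    static Properties config(String region, String tenancyName, String userName,
                             String streamPoolOcid, String authToken) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "streaming." + region + ".oci.oraclecloud.com:9092");
        props.put("security.protocol", "SASL_SSL");
        props.put("sasl.mechanism", "PLAIN");
        // Assumed username format: {tenancyName}/{userName}/{streamPoolOcid}.
        props.put("sasl.jaas.config",
                "org.apache.kafka.common.security.plain.PlainLoginModule required "
                        + "username=\"" + tenancyName + "/" + userName + "/" + streamPoolOcid + "\" "
                        + "password=\"" + authToken + "\";");
        props.put("key.serializer", StringSerializer.class.getName());
        props.put("value.serializer", StringSerializer.class.getName());
        return props;
    }

    /** Reads a hypothetical environment variable, failing fast if it is missing. */
    private static String requireEnv(String name) {
        String value = System.getenv(name);
        if (value == null || value.isEmpty()) {
            throw new IllegalStateException("missing environment variable " + name);
        }
        return value;
    }

    public static void main(String[] args) throws InterruptedException, ExecutionException {
        Properties props = config(requireEnv("OSS_REGION"), requireEnv("OSS_TENANCY"),
                requireEnv("OSS_USER"), requireEnv("OSS_STREAM_POOL_ID"),
                requireEnv("OSS_AUTH_TOKEN"));
        // The stream name doubles as the Kafka topic name.
        String topic = System.getenv().getOrDefault("OSS_STREAM_NAME", "demo-stream");

        try (KafkaProducer<String, String> producer = new KafkaProducer<>(props)) {
            for (int i = 0; i < 5; i++) {
                ProducerRecord<String, String> record =
                        new ProducerRecord<>(topic, "key-" + i, "message " + i);
                // get() blocks until the send is acknowledged, which keeps the demo simple.
                RecordMetadata meta = producer.send(record).get();
                System.out.printf("sent to partition %d at offset %d%n", meta.partition(), meta.offset());
            }
        }
    }
}
```

Blocking on every `send` trades throughput for simplicity; a real producer would usually pass a callback to `send` instead.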

The application can be compiled using the following command.

```shell
javac -cp "/path/to/kafka/kafka_2.11-0.9.0.0/libs/*" *.java
```

The application can be executed using the following command.

```shell
java -cp "/path/to/kafka/kafka_2.11-0.9.0.0/libs/*":. Producer
```

Consumer
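A minimal consumer sketch, assuming the `kafka-clients` library (2.0 or newer, for `poll(Duration)`) is on the classpath; with the 1.0.1 client from the Maven snippet, call `poll(1000)` instead. It reads the connection details from hypothetical environment variables (`OSS_REGION`, `OSS_TENANCY`, `OSS_USER`, `OSS_STREAM_POOL_ID`, `OSS_AUTH_TOKEN`, `OSS_STREAM_NAME`), assumes the `{tenancyName}/{userName}/{streamPoolOcid}` username format, treats the stream name as the topic, and joins a hypothetical consumer group `demo-group`.

```java
import java.time.Duration;
import java.util.Collections;
import java.util.Properties;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.common.serialization.StringDeserializer;

/** Sketch: reads string messages from an OSS stream through the Kafka API. */
class Consumer {

    /** Builds the consumer configuration from caller-supplied placeholders. */
    static Properties config(String region, String tenancyName, String userName,
                             String streamPoolOcid, String authToken, String groupId) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "streaming." + region + ".oci.oraclecloud.com:9092");
        props.put("security.protocol", "SASL_SSL");
        props.put("sasl.mechanism", "PLAIN");
        // Assumed username format: {tenancyName}/{userName}/{streamPoolOcid}.
        props.put("sasl.jaas.config",
                "org.apache.kafka.common.security.plain.PlainLoginModule required "
                        + "username=\"" + tenancyName + "/" + userName + "/" + streamPoolOcid + "\" "
                        + "password=\"" + authToken + "\";");
        props.put("group.id", groupId);
        // Start from the oldest retained message when the group has no committed offset.
        props.put("auto.offset.reset", "earliest");
        props.put("key.deserializer", StringDeserializer.class.getName());
        props.put("value.deserializer", StringDeserializer.class.getName());
        return props;
    }

    /** Reads a hypothetical environment variable, failing fast if it is missing. */
    private static String requireEnv(String name) {
        String value = System.getenv(name);
        if (value == null || value.isEmpty()) {
            throw new IllegalStateException("missing environment variable " + name);
        }
        return value;
    }

    public static void main(String[] args) {
        Properties props = config(requireEnv("OSS_REGION"), requireEnv("OSS_TENANCY"),
                requireEnv("OSS_USER"), requireEnv("OSS_STREAM_POOL_ID"),
                requireEnv("OSS_AUTH_TOKEN"), "demo-group");
        String topic = System.getenv().getOrDefault("OSS_STREAM_NAME", "demo-stream");

        try (KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props)) {
            consumer.subscribe(Collections.singletonList(topic));
            // Poll a fixed number of times so the demo exits; a real consumer loops until shutdown.
            for (int i = 0; i < 10; i++) {
                ConsumerRecords<String, String> records = consumer.poll(Duration.ofSeconds(1));
                for (ConsumerRecord<String, String> record : records) {
                    System.out.printf("partition %d offset %d: %s=%s%n",
                            record.partition(), record.offset(), record.key(), record.value());
                }
            }
        }
    }
}
```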

The application can be compiled using the following command.

```shell
javac -cp "/path/to/kafka/kafka_2.11-0.9.0.0/libs/*" Consumer.java
```

The application can be executed using the following command.

```shell
java -cp "/path/to/kafka/kafka_2.11-0.9.0.0/libs/*":. Consumer
```

Alternatively, you can use Maven and build and run the application from your IDE with this dependency:

```xml
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka_2.11</artifactId>
    <version>1.0.1</version>
</dependency>
```

Here I demonstrated a Kafka Producer and Consumer using the Confluent Java API, but in principle any Kafka client should work as long as it uses the same authentication properties. Check the Confluent documentation for more.

Check out this GitHub repository for more code and demos.

If you have downloaded Kafka, you can also run a producer and a consumer from the terminal. Kafka ships with scripts in its ./bin folder that you can try out.

Inside the Kafka folder, create a jaas.conf file with:
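For reference, here is a minimal sketch of the `KafkaClient` section such a file typically contains, produced by a small Java helper so the placeholders are filled in the same way as in the client properties. The username format `{tenancyName}/{userName}/{streamPoolOcid}` is an assumption based on Oracle's Kafka-compatibility documentation, and the class name `JaasConfFile` is hypothetical; you can just as well type the rendered text into jaas.conf by hand. Passing the auth token as a command-line argument exposes it to other local users, so treat this as a demo only.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

/** Hypothetical helper that writes a jaas.conf with a KafkaClient login section for OSS. */
final class JaasConfFile {

    private JaasConfFile() {
    }

    /** Renders the KafkaClient section read by the Kafka command-line tools. */
    static String render(String tenancyName, String userName, String streamPoolOcid, String authToken) {
        return "KafkaClient {\n"
                + "  org.apache.kafka.common.security.plain.PlainLoginModule required\n"
                // Assumed username format: {tenancyName}/{userName}/{streamPoolOcid}.
                + "  username=\"" + tenancyName + "/" + userName + "/" + streamPoolOcid + "\"\n"
                + "  password=\"" + authToken + "\";\n"
                + "};\n";
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 4) {
            System.err.println("usage: java JaasConfFile <tenancyName> <userName> <streamPoolOcid> <authToken>");
            System.exit(1);
        }
        Path target = Paths.get("jaas.conf");
        Files.write(target, render(args[0], args[1], args[2], args[3]).getBytes(StandardCharsets.UTF_8));
        System.out.println("wrote " + target.toAbsolutePath());
    }
}
```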

Create a consumer.properties file with

```properties
security.protocol=SASL_SSL
sasl.mechanism=PLAIN
```

Then point the Kafka scripts at the JAAS file by setting the KAFKA_OPTS environment variable:

```shell
export KAFKA_OPTS="-Djava.security.auth.login.config=./jaas.conf"
```
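`KAFKA_OPTS` only affects the JVMs started by the ./bin scripts. If you want a Java client of your own to reuse the same jaas.conf instead of setting `sasl.jaas.config`, one option, sketched below, is to set the same system property in code before the first client is created (the JAAS configuration is typically loaded once per JVM). The class name `JaasFromFile` is hypothetical.

```java
import java.nio.file.Paths;

/** Sketch: the in-code equivalent of -Djava.security.auth.login.config=./jaas.conf. */
final class JaasFromFile {

    private JaasFromFile() {
    }

    /**
     * Sets the JVM-wide property that the -D flag in KAFKA_OPTS would set.
     * Call it before the first Kafka client is created.
     */
    static String useJaasFile(String path) {
        String absolute = Paths.get(path).toAbsolutePath().normalize().toString();
        System.setProperty("java.security.auth.login.config", absolute);
        return absolute;
    }

    public static void main(String[] args) {
        System.out.println("java.security.auth.login.config=" + useJaasFile("./jaas.conf"));
    }
}
```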

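Now you can run the console consumer from the Kafka folder, for example `./bin/kafka-console-consumer.sh --bootstrap-server streaming.{region}.oci.oraclecloud.com:9092 --topic {streamName} --consumer.config consumer.properties`, where {streamName} is a placeholder for your stream's name.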

Photo by Vladislav Klapin on Unsplash