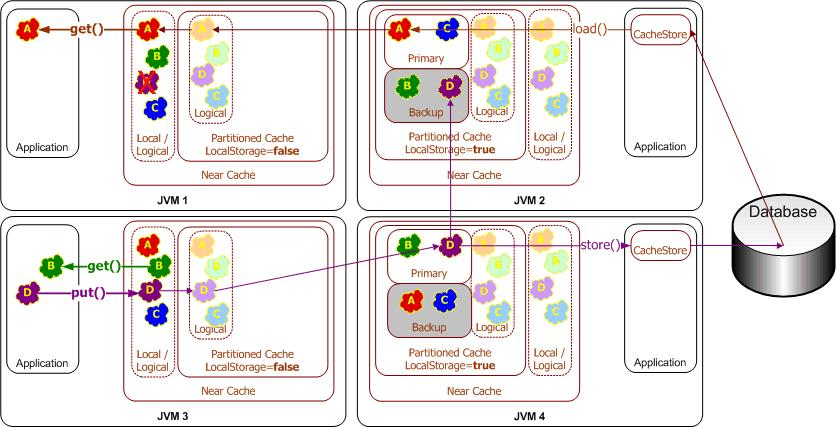

Coherence is an in-memory data grid solution that addresses the challenges of working with distributed objects in memory (caches). Among its many features, Pluggable Cache Stores allow the cache to load and store its contents from and to any persistence layer.

This way, the cache abstracts how content is persisted and loaded behind the CacheStore interface, which the user implements. How updates are synchronized, also decoupled from the persistence layer, is governed by the following strategies:

- Refresh-Ahead / Read-Through: whether you want data to be reloaded into the cache before it is actually requested (Refresh-Ahead), or loaded only on demand (Read-Through), balancing response time against the staleness of the data in the cache

- Write-Behind / Write-Through: whether you want better response times by persisting changes asynchronously (Write-Behind), or immediate persistence of every change (Write-Through)
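To make the Write-Behind / Write-Through trade-off concrete, here is a minimal, self-contained Java sketch (not Coherence code) of a cache in front of a map that stands in for the database. The class `WriteStrategySketch` and its `flush()` method are hypothetical: in Coherence, the read-write backing map performs the queued writes for you after a configured write delay.

```java
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

// Teaching sketch (not Coherence code): a cache in front of a map that plays the database.
final class WriteStrategySketch {

    final Map<Integer, String> database = new HashMap<>(); // stand-in for the persistence layer
    final Map<Integer, String> cache = new HashMap<>();
    private final Set<Integer> pendingWrites = new LinkedHashSet<>(); // keys waiting to be persisted
    private final boolean writeBehind;

    WriteStrategySketch(boolean writeBehind) {
        this.writeBehind = writeBehind;
    }

    void put(Integer key, String value) {
        cache.put(key, value);
        if (writeBehind) {
            // Write-Behind: return at once; repeated updates to a key coalesce into one write
            pendingWrites.add(key);
        } else {
            // Write-Through: the change is persisted before put() returns
            database.put(key, value);
        }
    }

    // Write-Behind only: persist the latest cached value of every pending key
    // (hypothetical; Coherence schedules this itself after the write delay)
    void flush() {
        for (Integer key : pendingWrites) {
            database.put(key, cache.get(key));
        }
        pendingWrites.clear();
    }

    public static void main(String[] args) {
        WriteStrategySketch through = new WriteStrategySketch(false);
        through.put(100, "King");
        System.out.println("write-through, database after put:  " + through.database);

        WriteStrategySketch behind = new WriteStrategySketch(true);
        behind.put(100, "King");
        behind.put(100, "King, Steven");
        System.out.println("write-behind, database after put:   " + behind.database);
        behind.flush();
        System.out.println("write-behind, database after flush: " + behind.database);
    }
}
```

With Write-Through the database already holds the value when `put()` returns; with Write-Behind it stays empty until the flush, and two updates to the same key end up as a single write.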

Relational databases are the most common option for persistence, but choosing one forces you to define an object-relational mapping from Java classes to the underlying relational model (using, for example, Hibernate, TopLink or any other JPA implementation). But that is only half of the job. It is also paramount to define how you will manage the connections used to perform this persistence.

The following diagram depicts how Coherence persists data to an RDBMS through the CacheStore interface:

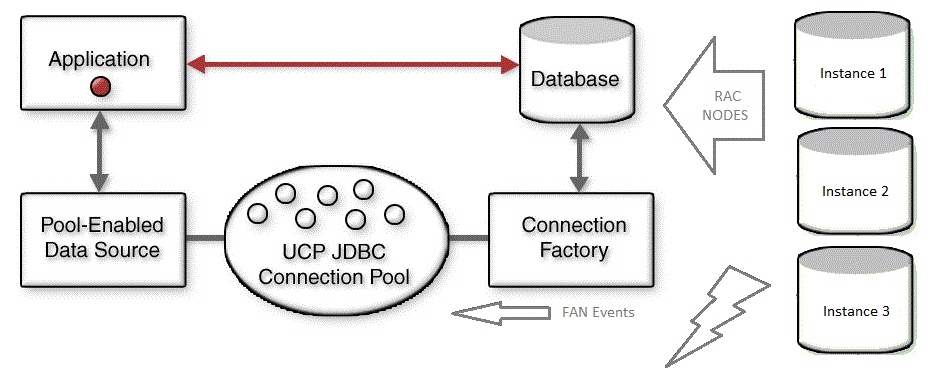

The CacheStore is responsible for handling the connection to the database, not only in terms of connection creation and pooling, but also in terms of detecting changes in the database instances it is connected to.

Connection management is critical when you are looking for extreme performance and high availability, so the CacheStore must handle connection acquisition properly.

Oracle Universal Connection Pool (UCP) gives you not only the intrinsic advantages of connection pooling (connection reuse, management, availability, purging, etc.) but also access to all the features available when connected to a RAC environment:

- Runtime Connection Load Balancing (RCLB)

- Fast Connection Failover (FCF)

- Transaction Affinity

- Built-in support for Database Resident Connection Pooling (DRCP) and Application Continuity (AC)
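As a reminder of what "connection reuse" buys you, here is a deliberately tiny generic pool in plain Java. `TinyPool` is a hypothetical teaching class, not UCP's API: in UCP, calling `close()` on a borrowed connection plays the role of `giveBack()`, and UCP layers validation, RCLB, FCF and the other features listed above on top of this basic idea.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

// Minimal pool illustrating reuse of expensive resources (a sketch, not UCP).
final class TinyPool<T> {
    private final BlockingQueue<T> idle;      // resources ready to be reused
    private final Supplier<T> factory;        // creates a new "physical" resource
    final AtomicInteger created = new AtomicInteger();

    TinyPool(int maxIdle, Supplier<T> factory) {
        this.idle = new ArrayBlockingQueue<>(maxIdle);
        this.factory = factory;
    }

    // Reuse an idle resource if one exists, otherwise create a new one
    T borrow() {
        T item = idle.poll();
        if (item == null) {
            created.incrementAndGet();
            item = factory.get();
        }
        return item;
    }

    // Return the resource for later reuse (silently dropped if maxIdle is reached)
    void giveBack(T item) {
        idle.offer(item);
    }

    public static void main(String[] args) {
        TinyPool<StringBuilder> pool = new TinyPool<>(5, StringBuilder::new);
        for (int i = 0; i < 10; i++) {
            StringBuilder conn = pool.borrow(); // stands in for a JDBC Connection
            pool.giveBack(conn);                // like close() on a pooled connection
        }
        System.out.println("physical resources created: " + pool.created.get());
    }
}
```

Ten borrow/return cycles create a single underlying resource, which is the saving a pool gives you on every cache load.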

By taking this approach, you are not only defining the relationship between your cache and your persistence layer; you are also optimizing how connections to your underlying RAC are configured and managed, by exploiting all of its connection-handling features.

For more information about UCP, please refer to: Introduction to UCP.

In a previous article we already discussed these features and how to exploit them from a JBoss Web application: Using Universal Connection Pooling (UCP) with JBoss AS

In this article we show how to use UCP from Coherence through Pluggable Cache Stores, which also makes it available to any type of Coherence client.

1. Download and install Coherence Stand-Alone, UCP and the Oracle JDBC driver.

You can skip this step if you already have coherence.jar, ucp.jar and ojdbc8.jar. Download “Coherence Stand-Alone Install” from this location.

Unzip the downloaded file.

Run the universal installation jar:

```
java -jar fmw_12.2.1.0.0_coherence.jar
```

During installation, select an Oracle Home location. In this location you will find the Coherence jar used in this demo:

ORACLE_HOME/coherence/lib/coherence.jar

Download UCP and the Oracle JDBC driver (ucp.jar and ojdbc8.jar).

For this sample, I copied ucp.jar and ojdbc8.jar to the home directory (~) and coherence.jar to the ~/ucpcoherence directory:

```
~/ucpcoherence/coherence.jar
~/ucp.jar
~/ojdbc8.jar
```

2. Configure Cache and CacheStore.

You need to tell Coherence which scheme to use for your cache; save the following cache configuration as example-ucp.xml, the file name referenced in step 5. The scheme defines the cache's behavior (for example, whether it is local, replicated or distributed). For our configuration, these are the most important elements to understand:

- cache-mapping: tells Coherence which cache names (ids) map to a specific scheme. In our sample we will create cache “test1”, which matches the pattern “test*”, which in turn is associated with scheme-name “example-distributed”; under this scheme-name we define our cache-scheme.

- class-name (in cachestore-scheme): here we inject into our distributed cache the name of the class that handles the persistence operations (load / loadAll / store / storeAll / erase / eraseAll) of our cache. In our case it is ucp_samples.EmployeeCacheStore, which we define later in this article.

- init-params (in class-scheme): here you specify the values passed, in order, to the constructor of our class.
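The init-params are positional: the first param-value becomes the first constructor argument, and so on, which is why EmployeeCacheStore exposes a `(String url, String user, String password)` constructor. The reflection sketch below illustrates that matching under our own simplifying assumption that every parameter is a `java.lang.String`; `InitParamsSketch` and `FakeCacheStore` are hypothetical names, and this is not how Coherence itself is implemented.

```java
import java.lang.reflect.Constructor;
import java.util.Arrays;

// Sketch: instantiate a class by name, passing positional String parameters to its constructor.
final class InitParamsSketch {

    // Hypothetical stand-in for EmployeeCacheStore's (url, user, password) constructor
    static final class FakeCacheStore {
        final String url;
        final String user;
        final String password;

        public FakeCacheStore(String url, String user, String password) {
            this.url = url;
            this.user = user;
            this.password = password;
        }
    }

    // Look up the constructor whose parameters are all Strings, one per param-value
    static Object instantiate(String className, String... paramValues) throws Exception {
        Class<?> type = Class.forName(className);
        Class<?>[] paramTypes = new Class<?>[paramValues.length];
        Arrays.fill(paramTypes, String.class); // every param-type is java.lang.String here
        Constructor<?> ctor = type.getConstructor(paramTypes);
        return ctor.newInstance((Object[]) paramValues); // values passed in declaration order
    }

    public static void main(String[] args) throws Exception {
        FakeCacheStore store = (FakeCacheStore) instantiate(
                FakeCacheStore.class.getName(),
                "jdbc:oracle:thin:@//localhost:1521/cdb1", "scott", "tiger");
        System.out.println(store.url + " as " + store.user);
    }
}
```

Swapping the order of the init-param elements would silently pass the user name as the URL, so keep them aligned with the constructor signature.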

```xml
<?xml version="1.0"?>
<!DOCTYPE cache-config SYSTEM "cache-config.dtd">
<cache-config>
  <caching-scheme-mapping>
    <cache-mapping>
      <cache-name>test*</cache-name>
      <scheme-name>example-distributed</scheme-name>
    </cache-mapping>
  </caching-scheme-mapping>
  <caching-schemes>
    <distributed-scheme>
      <scheme-name>example-distributed</scheme-name>
      <service-name>DistributedCache</service-name>
      <backing-map-scheme>
        <read-write-backing-map-scheme>
          <internal-cache-scheme>
            <local-scheme/>
          </internal-cache-scheme>
          <cachestore-scheme>
            <class-scheme>
              <class-name>ucp_samples.EmployeeCacheStore</class-name>
              <!-- Passed, in order, to the constructor: url, user, password -->
              <init-params>
                <init-param>
                  <param-type>java.lang.String</param-type>
                  <param-value>jdbc:oracle:thin:@//localhost:1521/cdb1</param-value>
                </init-param>
                <init-param>
                  <param-type>java.lang.String</param-type>
                  <param-value>scott</param-value>
                </init-param>
                <init-param>
                  <param-type>java.lang.String</param-type>
                  <param-value>tiger</param-value>
                </init-param>
              </init-params>
            </class-scheme>
          </cachestore-scheme>
        </read-write-backing-map-scheme>
      </backing-map-scheme>
      <autostart>true</autostart>
    </distributed-scheme>
  </caching-schemes>
</cache-config>
```
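The `test*` entry in the cache-mapping above acts as a prefix pattern: `test1`, `test2` or `testEmployees` all resolve to the `example-distributed` scheme. Below is a simplified resolver, written as a sketch under our own assumptions (an exact name wins, otherwise the longest matching `prefix*` pattern); `CacheMappingSketch` is a hypothetical class, not Coherence's implementation.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch of resolving a cache name against cache-mapping entries (our simplification).
final class CacheMappingSketch {

    // Returns the scheme-name for cacheName, or null if no mapping matches
    static String resolveScheme(Map<String, String> mappings, String cacheName) {
        String exact = mappings.get(cacheName);
        if (exact != null) {
            return exact; // an exact cache-name takes precedence over wildcards
        }
        String best = null;
        int bestLength = -1;
        for (Map.Entry<String, String> e : mappings.entrySet()) {
            String pattern = e.getKey();
            if (pattern.endsWith("*")) {
                String prefix = pattern.substring(0, pattern.length() - 1);
                // Keep the most specific (longest) matching prefix
                if (cacheName.startsWith(prefix) && prefix.length() > bestLength) {
                    best = e.getValue();
                    bestLength = prefix.length();
                }
            }
        }
        return best;
    }

    public static void main(String[] args) {
        Map<String, String> mappings = new LinkedHashMap<>();
        mappings.put("test*", "example-distributed"); // the mapping from example-ucp.xml
        System.out.println(resolveScheme(mappings, "test1"));
        System.out.println(resolveScheme(mappings, "orders")); // no mapping for this name
    }
}
```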

3. Provide the CacheStore Implementation.

As configured in the previous file, you need to provide a CacheStore implementation whose methods are executed when objects are added to or requested from the cache (it stores objects on put, and loads them when they are not in the cache). We provide an implementation of the constructor of the class (which shows how to use UCP) and of the load method. You can define the behavior of the rest of the methods as part of your test.

Note that the UCP values can be changed and monitored through JMX, as explained in this article.

To learn more about using UCP with Hibernate, see this article.

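```java
package ucp_samples;

import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.Collection;
import java.util.HashMap;
import java.util.Map;

import com.tangosol.net.cache.CacheStore;

import oracle.ucp.jdbc.PoolDataSource;
import oracle.ucp.jdbc.PoolDataSourceFactory;

public class EmployeeCacheStore implements CacheStore {

    private static final int INITIAL_POOL_SIZE = 5;

    private PoolDataSource pds = null;

    /**
     * Constructor for the CacheStore, parses initial values and sets the
     * connection pool. The arguments come, in order, from the init-params
     * of the cache configuration.
     *
     * @param url      JDBC URL of the database
     * @param user     database user
     * @param password database password
     */
    public EmployeeCacheStore(String url, String user, String password) {
        try {
            // Create pool-enabled data source instance
            pds = PoolDataSourceFactory.getPoolDataSource();
            // Set the connection properties on the data source
            pds.setConnectionFactoryClassName("oracle.jdbc.pool.OracleDataSource");
            pds.setURL(url);
            pds.setUser(user);
            pds.setPassword(password);
            // Override pool properties
            pds.setInitialPoolSize(INITIAL_POOL_SIZE);
            pds.setConnectionPoolName(this.getClass().getName());
        } catch (SQLException e) {
            e.printStackTrace();
        }
    }

    private Connection getConnection() throws SQLException {
        return pds.getConnection();
    }

    /**
     * When an object is not in the cache, it will go through the cache store to
     * retrieve it, using the connection pool. In this sample we execute a
     * manual object-relational mapping; in a real-life scenario Hibernate or
     * any other JPA implementation should be used.
     */
    @Override
    public Employee load(Object employeeId) {
        Employee employee = null;
        // try-with-resources closes the statement and result set, and
        // closing the connection returns it to the UCP pool
        try (Connection conn = getConnection();
             PreparedStatement ps = conn.prepareStatement(
                     "select * from employees where employee_id = ?")) {
            ps.setObject(1, employeeId);
            try (ResultSet rs = ps.executeQuery()) {
                if (rs.next()) {
                    employee = new Employee();
                    employee.setEmployeeId(rs.getInt("employee_id"));
                    employee.setFirstName(rs.getString("first_name"));
                    employee.setLastName(rs.getString("last_name"));
                    employee.setPhoneNumber(rs.getString("phone_number"));
                }
            }
        } catch (SQLException e) {
            e.printStackTrace();
        }
        return employee;
    }

    // The remaining operations are left for you to define as part of your test.
    // Minimal placeholders so that the class compiles:

    @Override
    public Map loadAll(Collection keys) {
        Map result = new HashMap();
        for (Object key : keys) {
            Employee employee = load(key);
            if (employee != null) {
                result.put(key, employee);
            }
        }
        return result;
    }

    @Override
    public void store(Object key, Object value) {
        // TODO: insert/update the employee row (no-op: changes stay in the cache only)
    }

    @Override
    public void storeAll(Map entries) {
        // TODO: batch version of store()
    }

    @Override
    public void erase(Object key) {
        // TODO: delete the employee row
    }

    @Override
    public void eraseAll(Collection keys) {
        // TODO: batch version of erase()
    }
}
```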
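If you want to exercise the remaining operations (loadAll, store, storeAll, erase, eraseAll) without a database, one option is an in-memory store with the same six operations. `SimpleCacheStore` below is a hypothetical local interface that only mirrors the method names of Coherence's CacheStore, so the sketch compiles with the JDK alone; to plug such a store into a cache you would implement `com.tangosol.net.cache.CacheStore` instead.

```java
import java.util.Arrays;
import java.util.Collection;
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical local interface mirroring the six CacheStore operations
interface SimpleCacheStore<K, V> {
    V load(K key);
    Map<K, V> loadAll(Collection<? extends K> keys);
    void store(K key, V value);
    void storeAll(Map<? extends K, ? extends V> entries);
    void erase(K key);
    void eraseAll(Collection<? extends K> keys);
}

// In-memory stand-in for the employees table (null values are not supported)
final class InMemoryCacheStore<K, V> implements SimpleCacheStore<K, V> {
    final Map<K, V> table = new ConcurrentHashMap<>();

    @Override public V load(K key) { return table.get(key); }

    @Override public Map<K, V> loadAll(Collection<? extends K> keys) {
        Map<K, V> result = new HashMap<>();
        for (K key : keys) {
            V value = load(key);
            if (value != null) { // keys that are not found are left out of the result
                result.put(key, value);
            }
        }
        return result;
    }

    @Override public void store(K key, V value) { table.put(key, value); }

    @Override public void storeAll(Map<? extends K, ? extends V> entries) { table.putAll(entries); }

    @Override public void erase(K key) { table.remove(key); }

    @Override public void eraseAll(Collection<? extends K> keys) {
        for (K key : keys) {
            erase(key);
        }
    }

    public static void main(String[] args) {
        InMemoryCacheStore<Integer, String> store = new InMemoryCacheStore<>();
        store.store(100, "King");
        store.storeAll(Collections.singletonMap(101, "Kochhar"));
        System.out.println(store.loadAll(Arrays.asList(100, 101, 102))); // 102 is absent
        store.eraseAll(Arrays.asList(100, 101));
        System.out.println(store.load(100));
    }
}
```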

4. Provide the Java Bean.

This is the class that will live in our cache. It is a simple Java Bean that implements Serializable so that it can be shared across the nodes of the cache. In a real-life scenario you will want to implement Coherence's PortableObject instead of plain Serializable, so that you use Coherence's highly optimized mechanism to share and store objects: Portable Object Format (POF) serialization.

Note: getters, setters and constructors are omitted to make this example easier to read. Also note that your implementation of toString() is what you will see in the Coherence console.

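```java
package ucp_samples;

import java.io.Serializable;
import java.util.Date;

public class Employee implements Serializable {

    private static final long serialVersionUID = 1L;

    int employeeId;
    String firstName;
    String lastName;
    String email;
    String phoneNumber;
    Date hireDate;
    String jobId;

    // Getters, setters and constructors omitted for readability

    @Override
    public String toString() {
        return getLastName() + ", " + getFirstName() + ": " + getPhoneNumber();
    }
}
```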
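Sharing an entry with another node means the object has to survive serialization and deserialization. The sketch below checks that with plain Java serialization (not POF) on a trimmed-down, hypothetical copy of the Employee class; it is a quick sanity test you can run before starting the cluster, not a reproduction of Coherence's wire format.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

// Serialize an object to bytes and back, as plain Java serialization would between JVMs.
final class SerializationRoundTrip {

    // Hypothetical, trimmed-down copy of the article's Employee bean
    static final class Employee implements Serializable {
        private static final long serialVersionUID = 1L;
        final int employeeId;
        final String lastName;

        Employee(int employeeId, String lastName) {
            this.employeeId = employeeId;
            this.lastName = lastName;
        }
    }

    static <T extends Serializable> T roundTrip(T value) throws IOException, ClassNotFoundException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(value); // fails with NotSerializableException if a field is not serializable
        }
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
            @SuppressWarnings("unchecked")
            T copy = (T) in.readObject();
            return copy;
        }
    }

    public static void main(String[] args) throws Exception {
        Employee original = new Employee(100, "King");
        Employee copy = roundTrip(original);
        System.out.println(copy.employeeId + " " + copy.lastName
                + ", same instance: " + (copy == original));
    }
}
```

The copy carries the same field values but is a different instance, which is also what a client receives from a remote node: modifying it does not change the cached entry until you put it back.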

5. Configure the Operational File.

To provide specific operational values for your cache, you need the following operational configuration file.

Pay particular attention to the name of the cluster, “cluster_ucp”; the address/port used to synchronize with the other nodes of the cluster, “localhost:6699”; and the name of the cache configuration file to use, example-ucp.xml, defined in step 2 (it can be overridden through the system property “tangosol.coherence.cacheconfig”).

Note: since version 12.2.1 you no longer need the “tangosol” prefix on these system properties.

~/ucpcoherence/tangosol-coherence-override.xml

```xml
<?xml version='1.0'?>
<!DOCTYPE coherence SYSTEM "coherence.dtd">
<coherence>
  <cluster-config>
    <member-identity>
      <cluster-name system-property="tangosol.coherence.cluster">cluster_ucp</cluster-name>
    </member-identity>
    <unicast-listener>
      <address>localhost</address>
      <port>6699</port>
      <well-known-addresses>
        <socket-address id="1">
          <address>localhost</address>
          <port>6699</port>
        </socket-address>
      </well-known-addresses>
    </unicast-listener>
    <multicast-listener>
      <time-to-live>0</time-to-live>
    </multicast-listener>
    <services>
      <service id="3">
        <service-type>DistributedCache</service-type>
        <service-component>PartitionedService.PartitionedCache</service-component>
      </service>
    </services>
  </cluster-config>
  <configurable-cache-factory-config>
    <class-name system-property="tangosol.coherence.cachefactory">com.tangosol.net.ExtensibleConfigurableCacheFactory</class-name>
    <init-params>
      <init-param>
        <param-type>java.lang.String</param-type>
        <param-value system-property="tangosol.coherence.cacheconfig">example-ucp.xml</param-value>
      </init-param>
    </init-params>
  </configurable-cache-factory-config>
</coherence>
```

6. Start Cache nodes and client.

From the command line you can start the nodes of your cache with the DefaultCacheServer class and the following parameters (run from ~/ucpcoherence; ./ucp_samples/bin is the output folder of your Eclipse project, or wherever your compiled custom classes are):

```
../jdk1.8.0_60/jre/bin/java -Dtangosol.coherence.override=tangosol-coherence-override.xml -Dtangosol.coherence.cluster=cluster_ucp -cp coherence.jar:../ucp.jar:../ojdbc8.jar:./ucp_samples/bin com.tangosol.net.DefaultCacheServer
```

Note: as stated before, since 12.2.1 you no longer need the tangosol prefix; -Dcoherence.cluster=cluster_ucp works as well.
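If you prefer to script node startup, for example to launch the second node described next, the same command line can be assembled in Java and passed to `ProcessBuilder`. `CacheNodeLauncher.command` is a hypothetical helper; it joins the classpath with `File.pathSeparator`, so it uses `;` on Windows where the shell command above uses `:`.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper that rebuilds the cache-server command line from step 6.
final class CacheNodeLauncher {

    static List<String> command(String javaBin, String mainClass, String... classpath) {
        List<String> cmd = new ArrayList<>();
        cmd.add(javaBin);
        cmd.add("-Dtangosol.coherence.override=tangosol-coherence-override.xml");
        cmd.add("-Dtangosol.coherence.cluster=cluster_ucp");
        cmd.add("-cp");
        // File.pathSeparator is ":" on Unix-like systems and ";" on Windows
        cmd.add(String.join(File.pathSeparator, classpath));
        cmd.add(mainClass);
        return cmd;
    }

    public static void main(String[] args) {
        List<String> cmd = command("../jdk1.8.0_60/jre/bin/java",
                "com.tangosol.net.DefaultCacheServer",
                "coherence.jar", "../ucp.jar", "../ojdbc8.jar", "./ucp_samples/bin");
        System.out.println(String.join(" ", cmd));
        // To actually start a node (run from ~/ucpcoherence), you could use:
        // new ProcessBuilder(cmd).inheritIO().start();
    }
}
```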

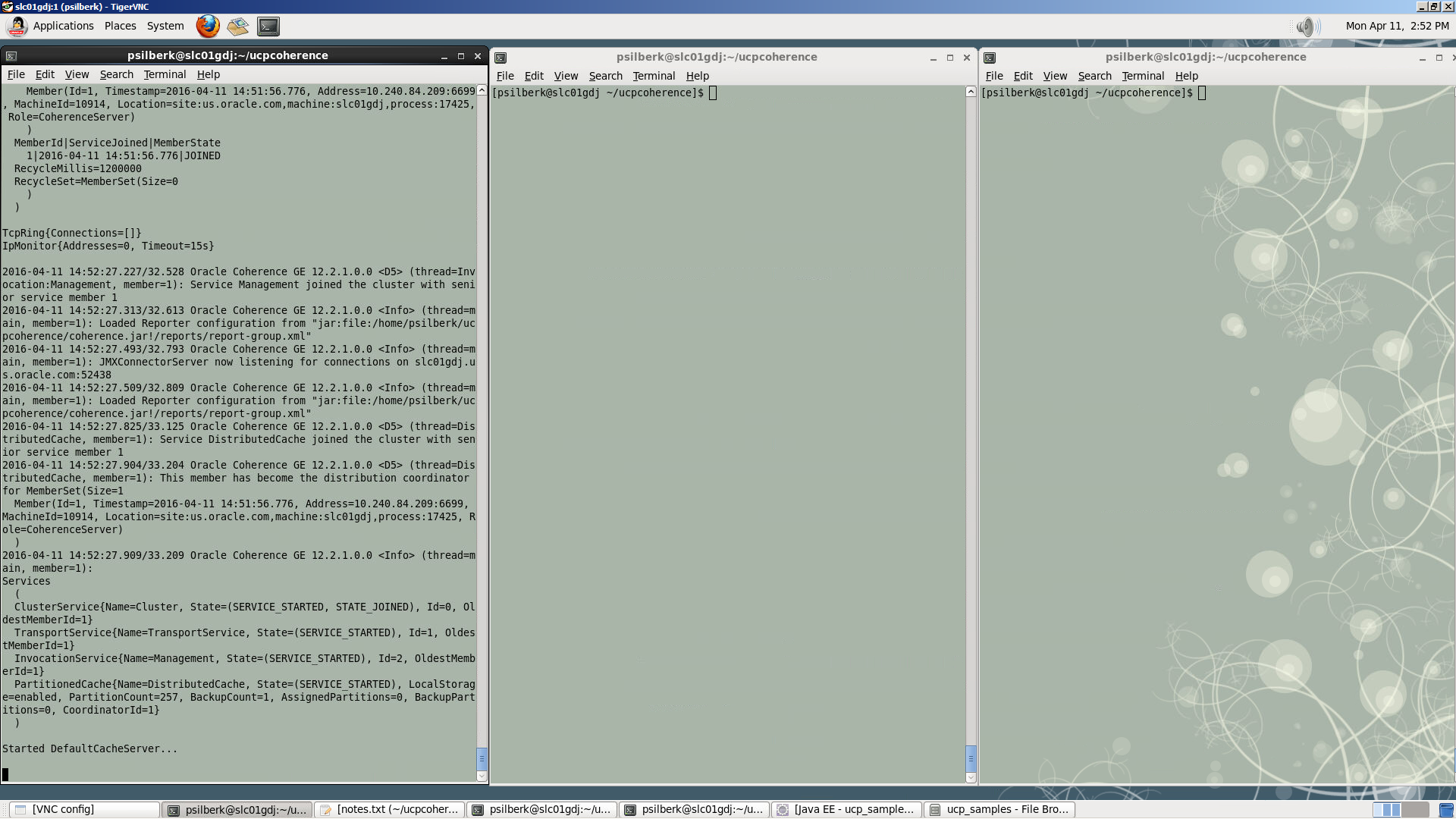

In the output you can see the name of the cluster (cluster_ucp), the address it is listening on (localhost:6699) and the id of the member you just started (Id=1).

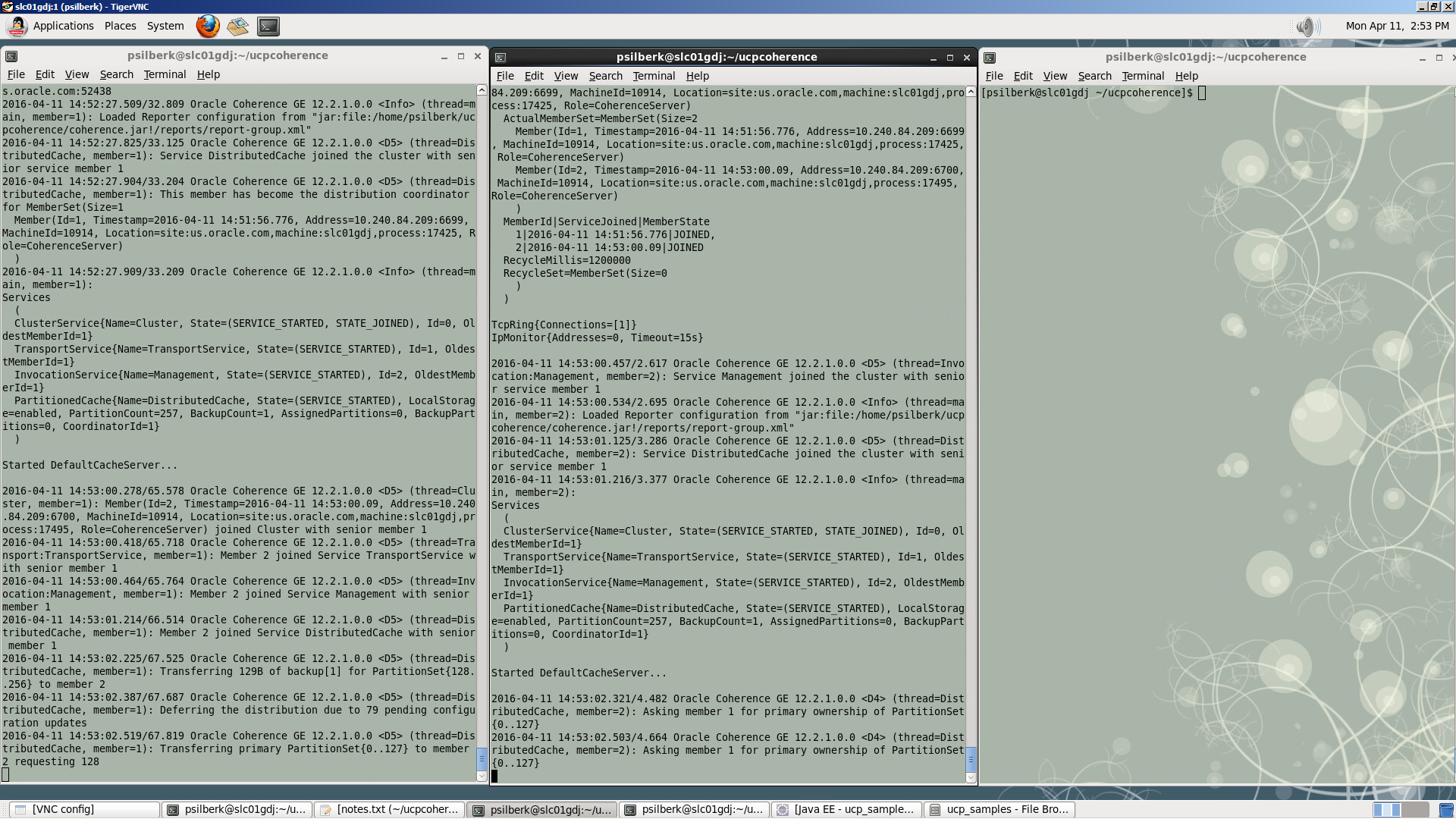

If you issue the same command in a separate process to start a second node, you can see member Id=2 joining the cluster (it shows up in both processes). You now have two nodes working in this cluster.

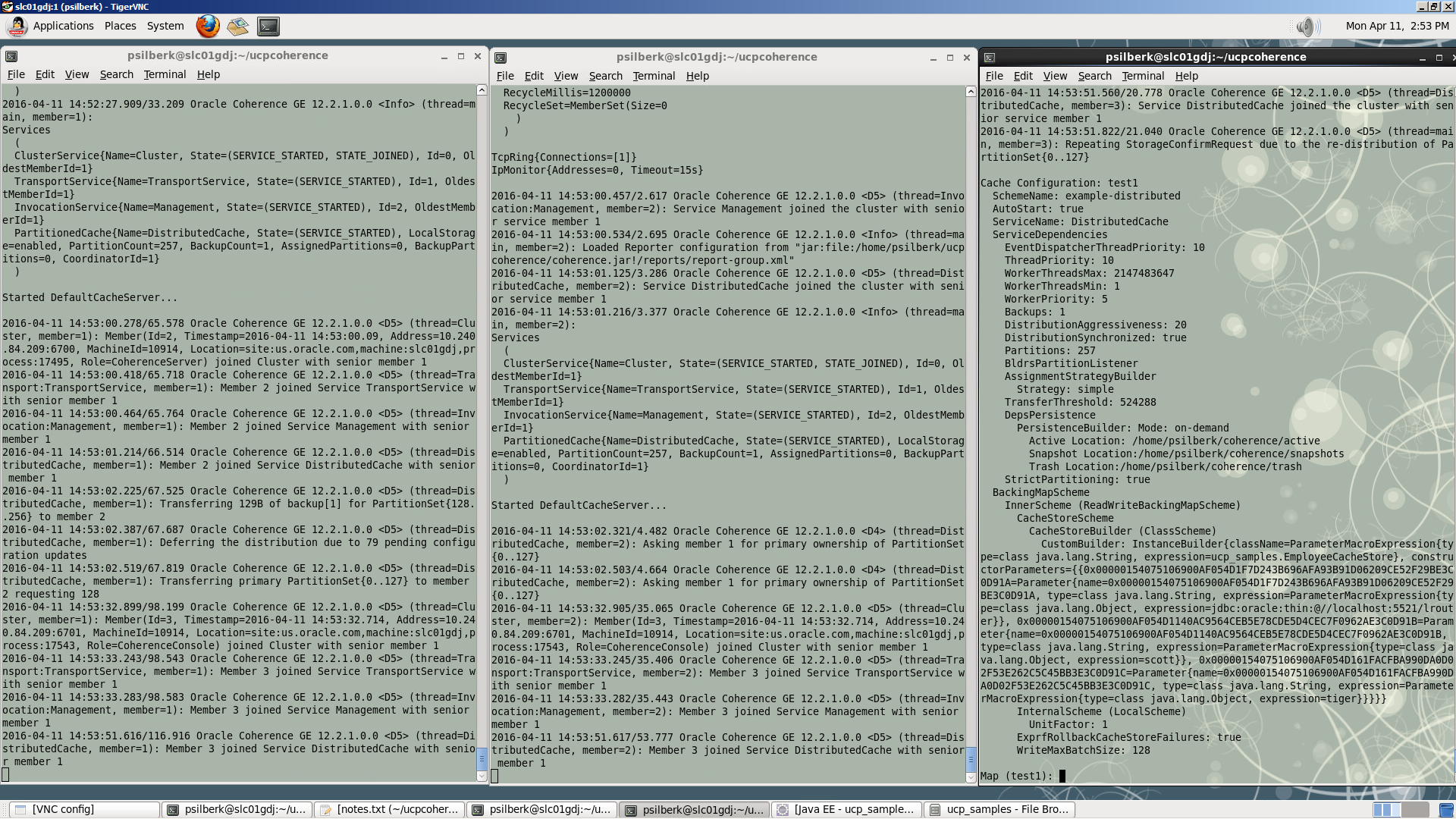

Lastly, start the client for the cluster (which is itself a new member) with the CacheFactory class by running this command:

```
../jdk1.8.0_60/jre/bin/java -Dtangosol.coherence.override=tangosol-coherence-override.xml -Dtangosol.coherence.cluster=cluster_ucp -cp coherence.jar:../ucp.jar:../ojdbc8.jar:./ucp_samples/bin com.tangosol.net.CacheFactory
```

Note that if you don't want your client to be a storage-enabled member, you can pass the option -Dtangosol.coherence.distributed.localstorage=false. This can also be done via configuration.

You will see member Id=3 and a command-line prompt to interact with the cache.

The first thing to do is to open a cache that uses the scheme we defined. To do so, use a name that matches the “test*” pattern from the cache-mapping:

```
cache test1
```

The prompt now shows that you are working with this cache:

```
Map (test1):
```

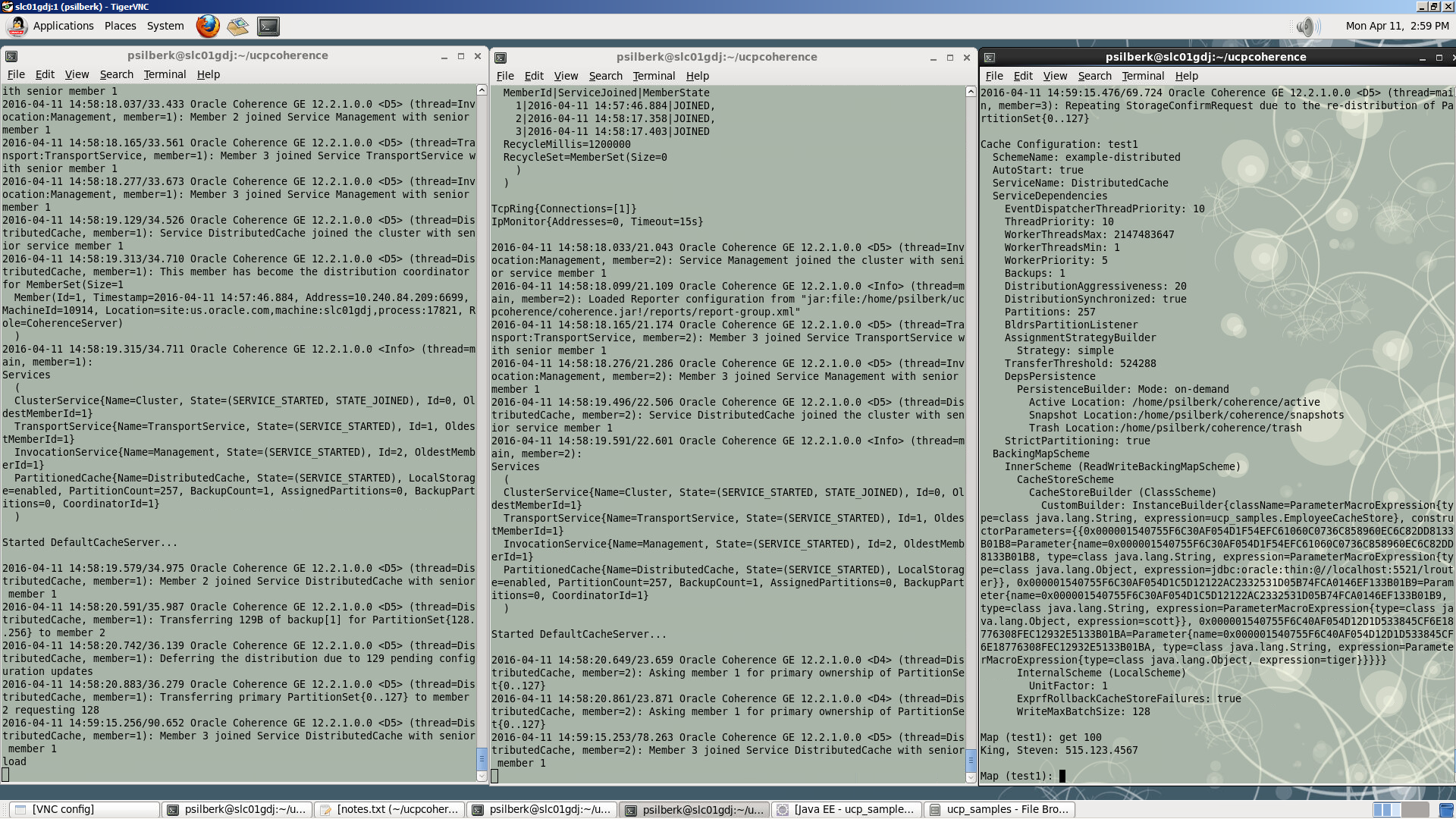

Now load the first object by issuing:

```
get 100
```

For each new object loaded into the cache, you will notice the additional time it takes to read its value from the database, and you will see the retrieved object printed in the console (through its Employee.toString() method).

Run get 100 again and you will notice the shorter response time for an object that is already in the cache.
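The difference between the first and the second `get 100` is Read-Through at work: a miss goes to the CacheStore (and the database), a hit is served from memory. Here is a minimal Java sketch of that behavior with a simulated 200 ms database delay; `ReadThroughSketch` is a hypothetical class, not Coherence's implementation, and it also counts loader calls so the effect is visible without relying on timings.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

// Read-through sketch: misses call the loader, hits are served from memory.
final class ReadThroughSketch<K, V> {
    private final Map<K, V> cache = new HashMap<>();
    private final Function<K, V> loader; // plays the role of CacheStore.load()
    final AtomicInteger loads = new AtomicInteger();

    ReadThroughSketch(Function<K, V> loader) {
        this.loader = loader;
    }

    V get(K key) {
        V cached = cache.get(key);
        if (cached != null) {
            return cached; // cache hit: no database round trip
        }
        loads.incrementAndGet();
        V loaded = loader.apply(key); // cache miss: load from the "database"
        if (loaded != null) {
            cache.put(key, loaded);
        }
        return loaded;
    }

    public static void main(String[] args) {
        ReadThroughSketch<Integer, String> employees = new ReadThroughSketch<>(id -> {
            sleepQuietly(200); // simulated database latency
            return "employee " + id;
        });
        for (int i = 0; i < 2; i++) {
            long start = System.nanoTime();
            String value = employees.get(100);
            System.out.printf("get 100 -> %s (%d ms)%n", value, (System.nanoTime() - start) / 1_000_000);
        }
        System.out.println("database loads: " + employees.loads.get());
    }

    private static void sleepQuietly(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```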

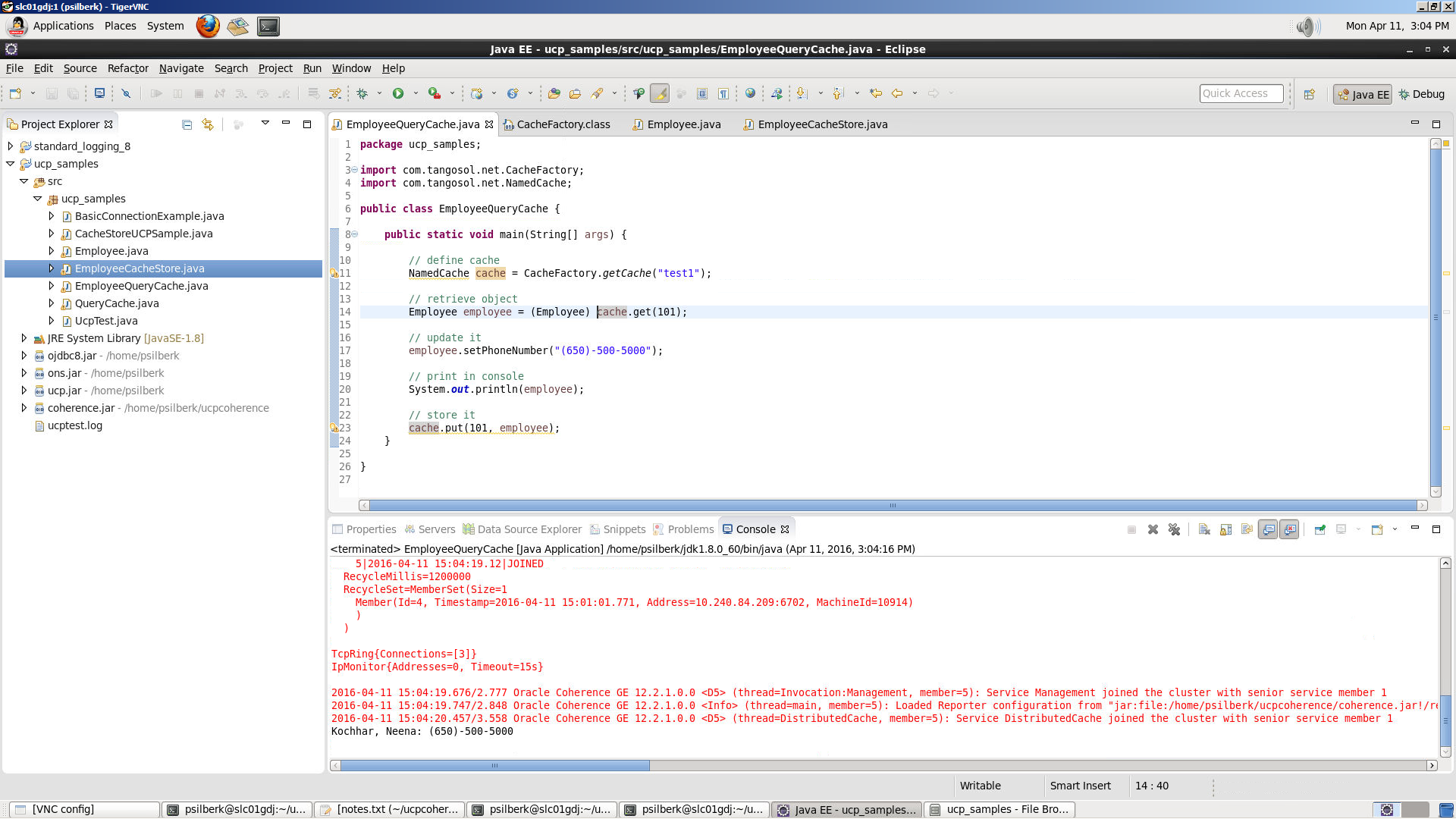

7. Interact with the cache from Java code

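Interacting with the cache from a Java class is even easier. The only thing you need to do is run the following code with the VM parameter -Dtangosol.coherence.override=tangosol-coherence-override.xml (pointing to the same file used to start the nodes):

```java
package ucp_samples;

import com.tangosol.net.CacheFactory;
import com.tangosol.net.NamedCache;

public class EmployeeQueryCache {

    public static void main(String[] args) {
        // Define the cache: "test1" matches the "test*" cache-mapping
        NamedCache cache = CacheFactory.getCache("test1");
        // Retrieve the object (loaded through EmployeeCacheStore if it is not cached)
        Employee employee = (Employee) cache.get(101);
        if (employee != null) {
            // Update it
            employee.setPhoneNumber("(650)-500-5000");
            // Print it in the console
            System.out.println(employee);
            // Store it back in the cache
            cache.put(101, employee);
        }
        // Leave the cluster cleanly
        CacheFactory.shutdown();
    }
}
```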

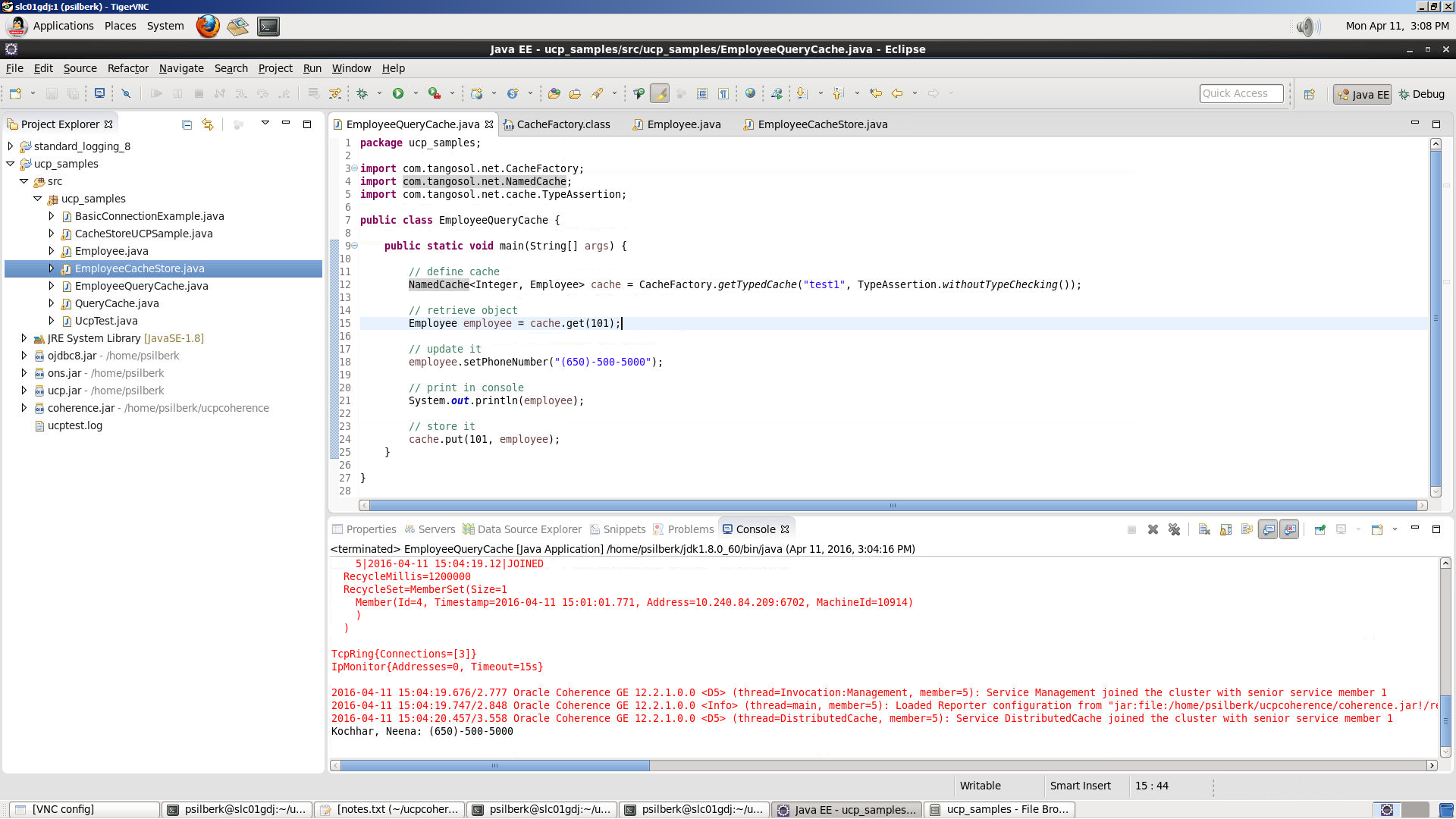

Since Coherence 12.2.1 the NamedCache interface supports generics, so you can update the previous code with:

```java
// TypeAssertion is com.tangosol.net.cache.TypeAssertion; no cast is needed on get()
NamedCache<Integer, Employee> cache = CacheFactory.getTypedCache("test1", TypeAssertion.withoutTypeChecking());
```