Introduction

The field of Artificial Intelligence (AI) has seen tremendous advancements in recent years. One of the most exciting developments within the Data Integration and Analytics field is the growing interest in leveraging Large Language Models (LLMs) to explore customer data. Companies are now seeking ways to empower their key power users to use Retrieval Augmented Generation (RAG) to extract valuable insights from their data in real time. By doing so, they can offer their customers instant access to relevant information, enabling them to address queries and issues more efficiently.

Use Case

Let’s assume a customer has some data in PostgreSQL that supports some analysis, and also has customer data in Oracle Database. The two databases are refreshed on different schedules. The user wants to consolidate that data to start a new project that uses RAG on top of the database.

Main requirements:

- Consolidate data;

- Access data in near real time;

- Explore data without needing direct access to the database.

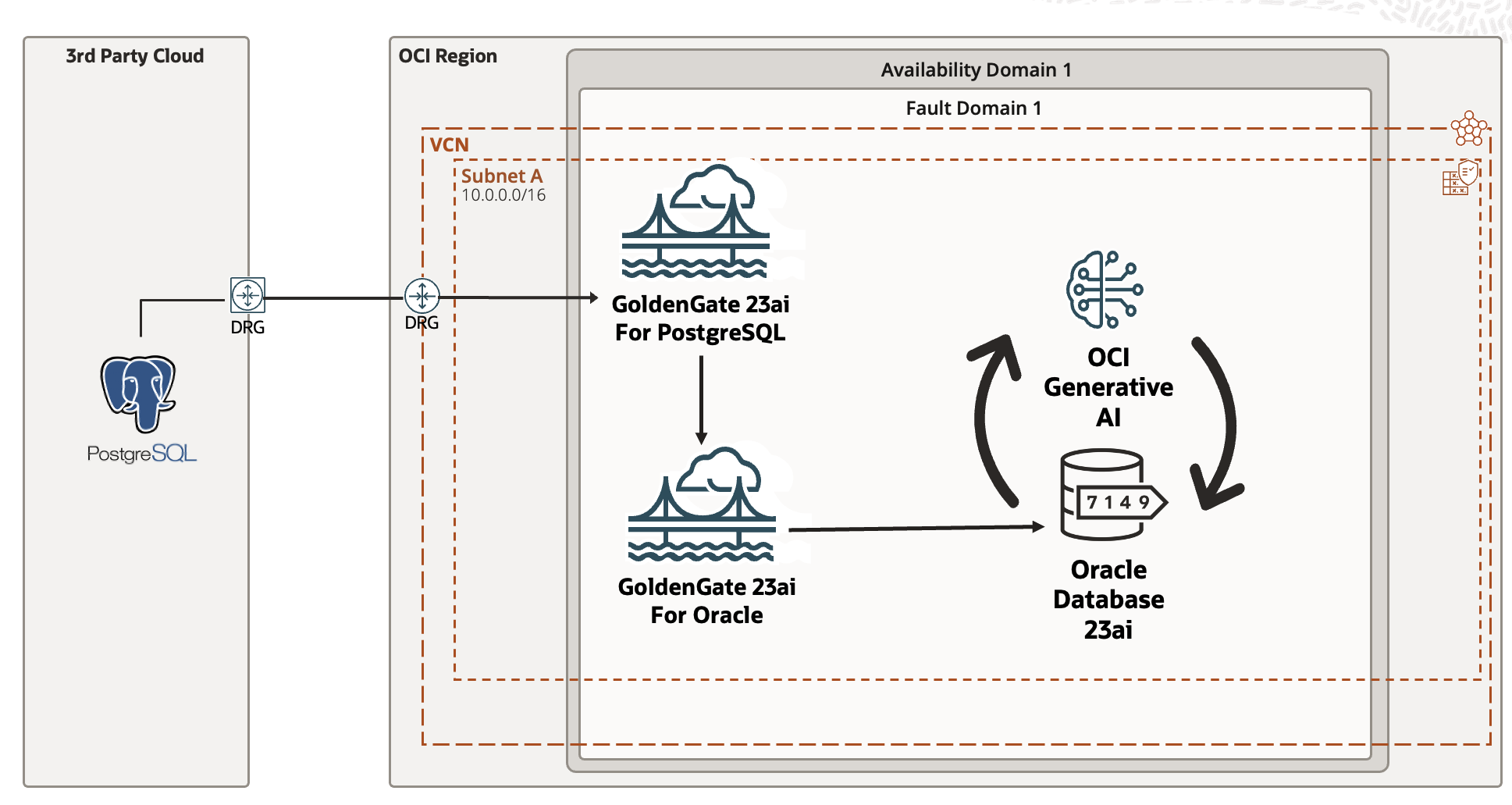

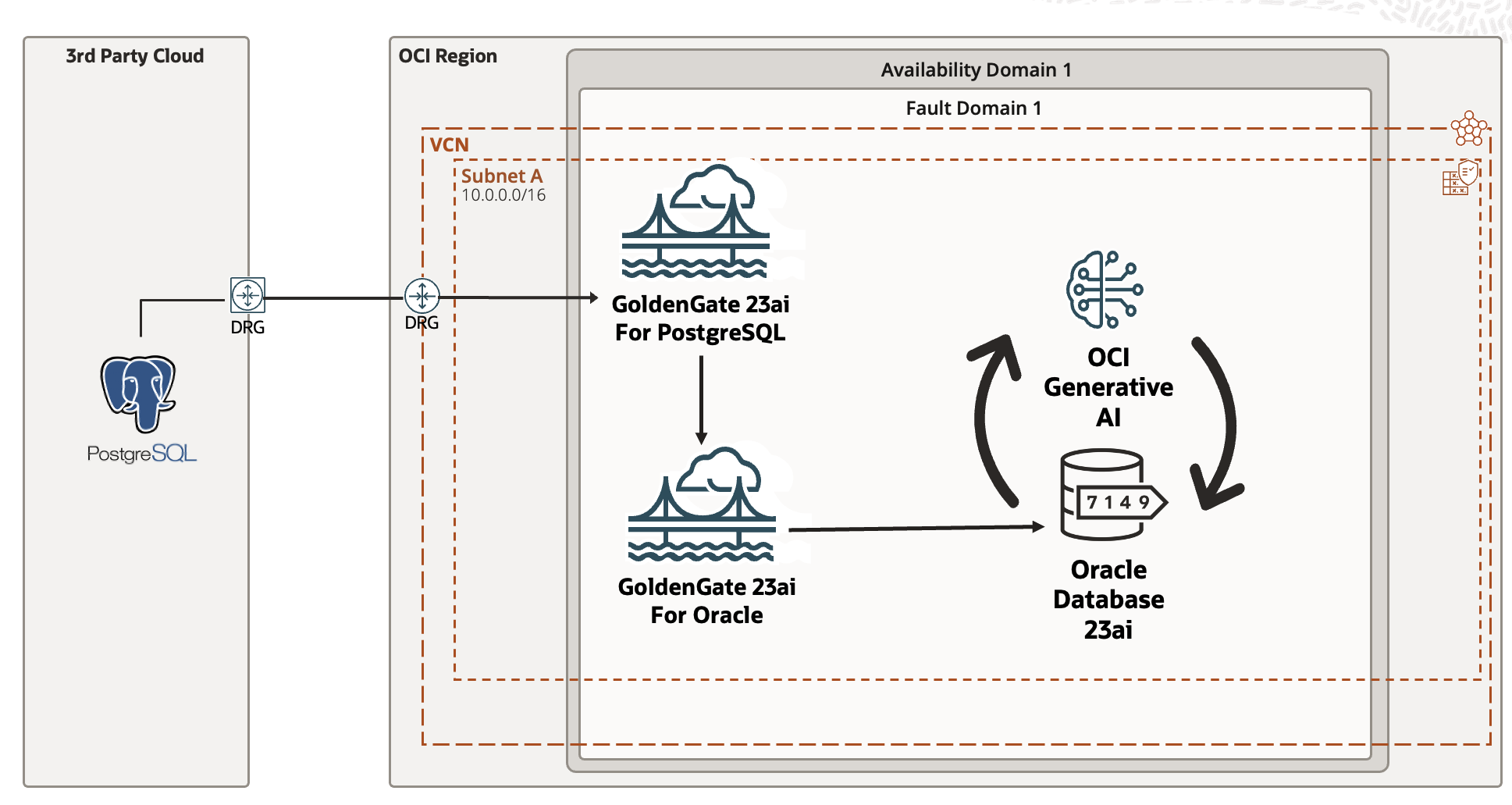

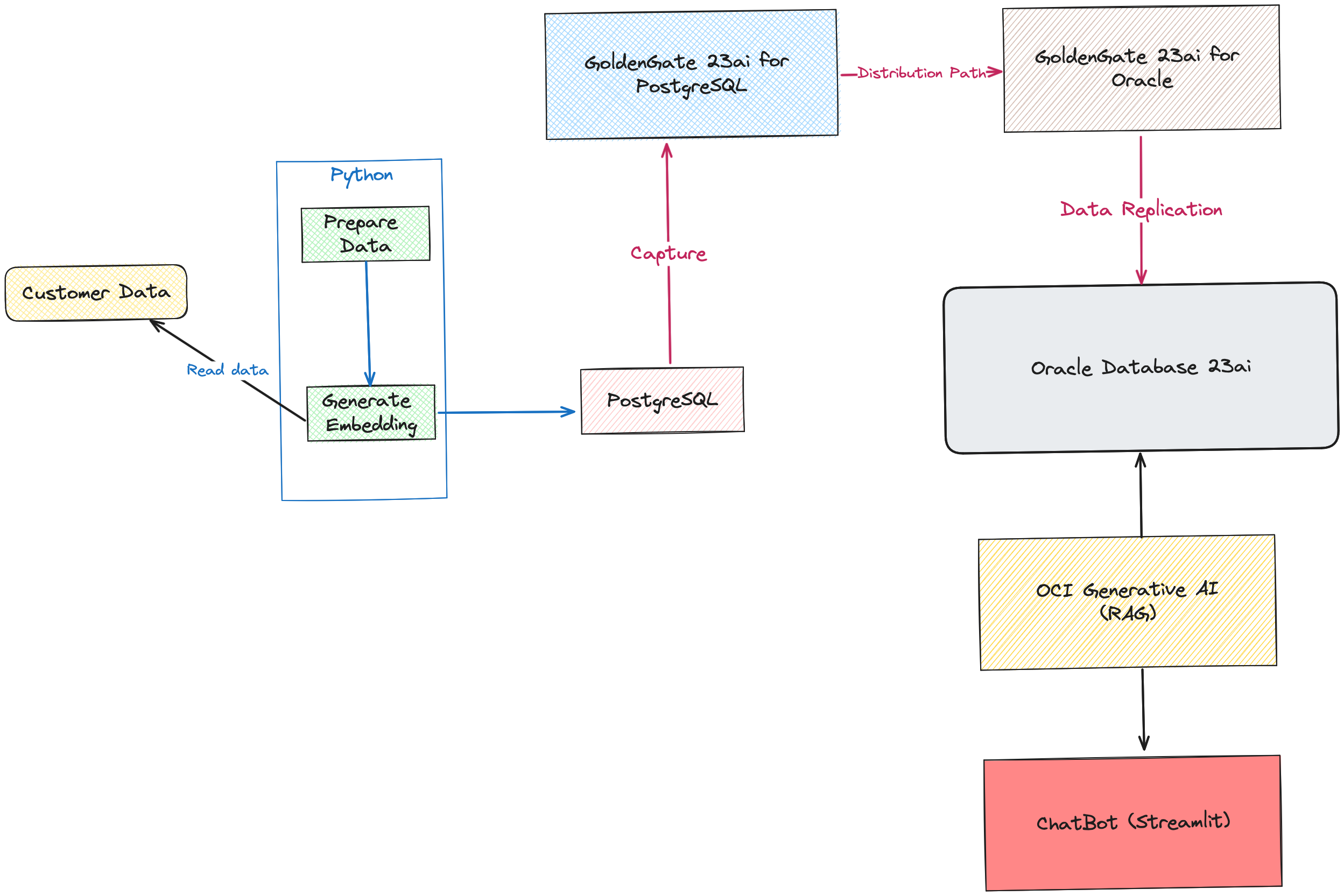

To fulfill these requirements, we need to select the right tooling. Let’s analyze it with the physical diagram:

The PostgreSQL database is hosted on a third-party cloud (it could be a different database, such as MySQL or SQL Server), and Oracle GoldenGate for PostgreSQL captures its data, including the vector columns that are extremely important for our RAG. The data is transferred to Oracle GoldenGate for Oracle and replicated into Oracle Database 23ai. Once the data is in Oracle Database, a Python script processes the Oracle data, converts it into embeddings (vector column), and exposes a RAG function. Let’s look at a more detailed diagram of all the steps required for this use case:

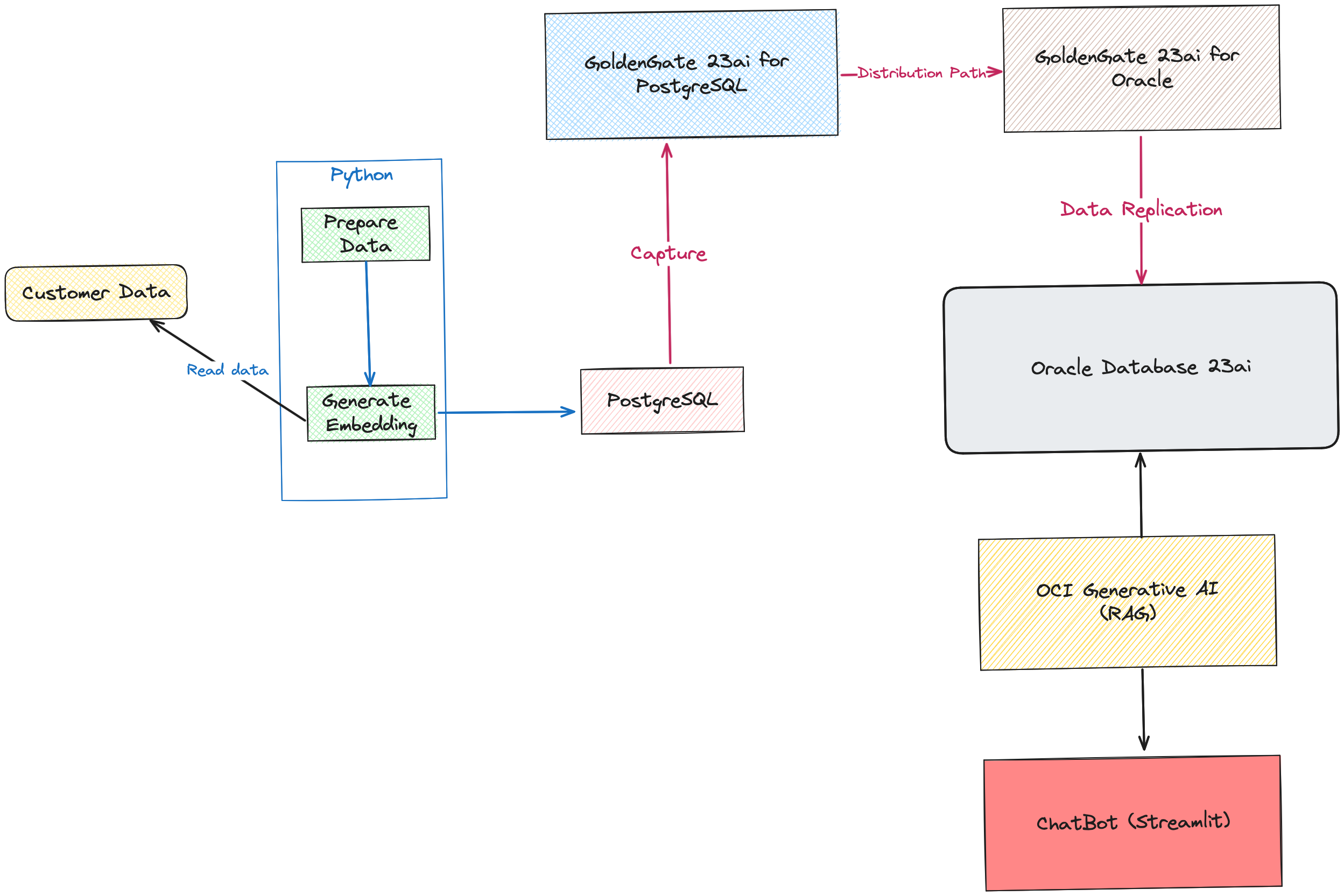

Use case steps:

- Customer Data is a CSV file processed by a Python script that uses a pandas DataFrame to create an auxiliary column (Combined);

- A Sentence Transformers model is used to generate the embeddings for the Combined column;

- The data is loaded into PostgreSQL (the embeddings column is of vector type);

- GoldenGate for PostgreSQL captures the data and sends it over to GoldenGate for Oracle;

- Oracle Database 23ai, the centerpiece of this use case, stores the vector data from PostgreSQL;

- A Python script is executed to generate the embeddings for the Sales Orders data with the LangChain Oracle Vector Store;

- The output of the retriever is sent to OCI Generative AI to adapt the text for the end user;

- Streamlit is used to build a chatbot that calls the previous functions and enables a conversation.

The first three steps are optional; their main goal is to show how to generate embeddings and load them into PostgreSQL.
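As a rough illustration of the first step, the sketch below builds the Combined sentence for each CSV row using only the standard library. The sample rows and the helper names `build_combined` and `load_rows` are hypothetical; the column names are the ones used by the pandas script later in this post.

```python
import csv
import io

# Hypothetical sample rows; the column names match the ones used later in this post.
SAMPLE_CSV = """Customer,AccountNumber,City,Country,PostalCode
Acme Corp,1001,Lisbon,Portugal,1000-001
Globex,1002,Madrid,Spain,28001
"""


def build_combined(row):
    """Return the sentence that will later be embedded for one customer row."""
    return (
        f"Customer {row['Customer']} with account number {row['AccountNumber']} "
        f"with headquarters on {row['City']}, {row['Country']} "
        f"and postal code {row['PostalCode']}."
    )


def load_rows(csv_text):
    """Parse CSV text and attach a Combined field to every row."""
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text), skipinitialspace=True):
        row["Combined"] = build_combined(row)
        rows.append(row)
    return rows


if __name__ == "__main__":
    for r in load_rows(SAMPLE_CSV):
        print(r["Combined"])
```

Embedding a full sentence rather than the raw columns gives the model some context about what each value means, which is presumably why the auxiliary column is created.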

Pre-requisites

- Oracle Cloud Database Service 23ai with OML4PY configured.

- Oracle GoldenGate for Oracle and PostgreSQL

- PostgreSQL database (version compatible with GoldenGate)

- OCI GenAI access

- Python installed

Configure ONNX model on Oracle database 23ai

One of the main prerequisites is to have an ONNX model configured. I’m not going to cover the configuration of OML4PY, just how to create an ONNX model in case you don’t have one:

Log in as the oracle user and type python3 to start the Python console:

from oml.utils import EmbeddingModel, EmbeddingModelConfig

# List the preconfigured models and the available templates
EmbeddingModelConfig.show_preconfigured()
EmbeddingModelConfig.show_templates()

# Generate from the preconfigured model "sentence-transformers/all-MiniLM-L6-v2"
em = EmbeddingModel(model_name="sentence-transformers/all-MiniLM-L6-v2")
em.export2file("onnx_model_eloi", output_dir="/home/oracle")

Create the database user for this use case:

sqlplus / as sysdba

CREATE TABLESPACE tbs1
DATAFILE 'tbs5.dbf' SIZE 20G AUTOEXTEND ON
EXTENT MANAGEMENT LOCAL
SEGMENT SPACE MANAGEMENT AUTO;

drop user vector cascade;
create user vector identified by <password> DEFAULT TABLESPACE tbs1 quota unlimited on tbs1;
grant DB_DEVELOPER_ROLE to vector;
create or replace directory VEC_DUMP as '/u01/app/oracle/product/23.0.0.0/dbhome_1/pump_dir/';
grant read, write on directory VEC_DUMP to vector;

-- EXECUTE is kept on a single line because SQL*Plus does not continue it across lines without a hyphen
EXECUTE dbms_vector.load_onnx_model('VEC_DUMP', 'onnx_model_eloi.onnx', 'demo_model', JSON('{"function" : "embedding", "embeddingOutput" : "embedding", "input": {"input": ["DATA"]}}'));

Consuming Data on PostgreSQL and Generating Embeddings

Since I’m using the all-MiniLM-L6-v2 model in Oracle Database, I’ll use the same model here so that the embeddings are compatible across the databases. Below, you can find the code to generate some data for the PostgreSQL database. This step is not mandatory if you already have a database with vectors created and loaded.

Python Code

# Copyright (c) 2024 Oracle and/or its affiliates.

#

# The Universal Permissive License (UPL), Version 1.0

#

# Subject to the condition set forth below, permission is hereby granted to any

# person obtaining a copy of this software, associated documentation and/or data

# (collectively the “Software”), free of charge and under any and all copyright

# rights in the Software, and any and all patent rights owned or freely

# licensable by each licensor hereunder covering either (i) the unmodified

# Software as contributed to or provided by such licensor, or (ii) the Larger

# Works (as defined below), to deal in both

#

# (a) the Software, and

# (b) any piece of software and/or hardware listed in the lrgrwrks.txt file if

# one is included with the Software (each a “Larger Work” to which the Software

# is contributed by such licensors),

# without restriction, including without limitation the rights to copy, create

# derivative works of, display, perform, and distribute the Software and make,

# use, sell, offer for sale, import, export, have made, and have sold the

# Software and the Larger Work(s), and to sublicense the foregoing rights on

# either these or other terms.

#

# This license is subject to the following condition:

# The above copyright notice and either this complete permission notice or at

# a minimum a reference to the UPL must be included in all copies or

# substantial portions of the Software.

#

# THE SOFTWARE IS PROVIDED “AS IS”, WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

# IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

# FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

# AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

# LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

# OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

# SOFTWARE.

import pandas as pd
from sentence_transformers import SentenceTransformer
import torch
import torch.nn.functional as F
from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained('sentence-transformers/all-MiniLM-L6-v2')
model = AutoModel.from_pretrained('sentence-transformers/all-MiniLM-L6-v2')

def mean_pooling(model_output, attention_mask):
    # First element of model_output contains all token embeddings
    token_embeddings = model_output[0]
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

def get_embedding(text):
    # Tokenize sentences
    encoded_input = tokenizer(text, padding=True, truncation=True, return_tensors='pt')
    # Compute token embeddings
    with torch.no_grad():
        model_output = model(**encoded_input)
    # Perform pooling
    sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])
    # Normalize embeddings
    sentence_embeddings = F.normalize(sentence_embeddings, p=2, dim=1)
    sentence_embeddings = sentence_embeddings.cpu().numpy().flatten().tolist()
    print(sentence_embeddings)
    return sentence_embeddings

# load & inspect dataset
input_datapath = "./orders/Customerdata.csv"
df = pd.read_csv(input_datapath, low_memory=False, skipinitialspace=True)
df.columns = df.columns.str.replace(' ', '')
df = df.dropna()
df['Combined'] = df.apply(lambda row: f"Customer {row.Customer} with account number {row.AccountNumber} with headquarters on {row.City}, {row.Country} and postal code {row.PostalCode}.", axis=1)
top_n = 100
df = df.sort_values("Customer").tail(top_n * 2)
df["embedding"] = df.Combined.apply(lambda x: get_embedding(x))
df.to_csv("customer_embeddings.csv", index=False)
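To make the pooling and normalization steps easier to follow, here is a minimal pure-Python sketch of the same arithmetic on a tiny made-up input. The function names and the two-dimensional toy vectors are illustrative assumptions; the real script operates on PyTorch tensors produced by the model.

```python
import math


def mean_pool(token_embeddings, attention_mask):
    """Average the token vectors, ignoring positions whose mask is 0 (padding)."""
    dim = len(token_embeddings[0])
    summed = [0.0] * dim
    count = 0
    for vector, keep in zip(token_embeddings, attention_mask):
        if keep:
            count += 1
            for i, value in enumerate(vector):
                summed[i] += value
    count = max(count, 1e-9)  # same guard against division by zero as torch.clamp
    return [value / count for value in summed]


def l2_normalize(vector):
    """Scale a vector to unit length; a zero vector is returned unchanged."""
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector] if norm else list(vector)


# Toy input: three token vectors, the last one being padding.
tokens = [[3.0, 0.0], [3.0, 8.0], [100.0, 100.0]]
mask = [1, 1, 0]

pooled = mean_pool(tokens, mask)   # padding is excluded from the average
unit = l2_normalize(pooled)        # unit-length sentence vector
print(pooled, unit)
```

Because the resulting vectors have unit length, their dot product equals their cosine similarity, which is relevant when a dot-product metric is used for the search later on.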

Once the customer embeddings are generated, the next step is to load the data into PostgreSQL.

import uuid

import pandas as pd
import psycopg2

db_params = {
    'dbname': '<db name>',
    'user': 'postgres',
    'password': '<password>',
    'host': '<hostname>',
    'port': '5432'
}

def get_embedding():
    # Read the embeddings generated in the previous step
    df = pd.read_csv("customer_embeddings.csv", low_memory=False)
    return df

def load_vector():
    embedding = get_embedding()
    # Connect to PostgreSQL once and reuse the connection for every row
    conn = psycopg2.connect(**db_params)
    try:
        with conn.cursor() as cursor:
            for index, row in embedding.iterrows():
                # Insert id, content, metadata and embedding
                cursor.execute(
                    "INSERT INTO VECTOR_PG VALUES (%s, %s, %s, %s)",
                    (str(uuid.uuid1()),
                     row['Combined'],
                     'metadata',
                     row['embedding']))
        conn.commit()
    finally:
        conn.close()

load_vector()
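The loader above passes the embedding to PostgreSQL as the text that pandas read back from the CSV file. The sketch below shows, under the assumption that the vector column comes from the pgvector extension, how that text can be parsed and re-serialized into the bracketed form pgvector accepts, and how rows could be grouped into batches. All three helper names are hypothetical.

```python
import json


def parse_embedding(cell):
    """Turn the text stored in customer_embeddings.csv back into a list of floats."""
    return [float(v) for v in json.loads(cell)]


def to_vector_literal(values):
    """Format floats in the bracketed text form, e.g. [0.5,-1.0,2.0]."""
    return "[" + ",".join(repr(float(v)) for v in values) + "]"


def batched(rows, size):
    """Yield successive lists of at most `size` rows."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


if __name__ == "__main__":
    vector = parse_embedding("[0.5, -1.0, 2]")
    print(to_vector_literal(vector))
    print(list(batched([1, 2, 3, 4, 5], 2)))
```

Each batch could then be handed to a call such as psycopg2's `cursor.executemany`, which avoids one round of Python-level bookkeeping per row; whether that matters depends on the size of the data set.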

Configuring OCI GoldenGate

As a prerequisite, the OCI GoldenGate deployments must be up and running. Let’s start by creating the two connections we need for this use case.

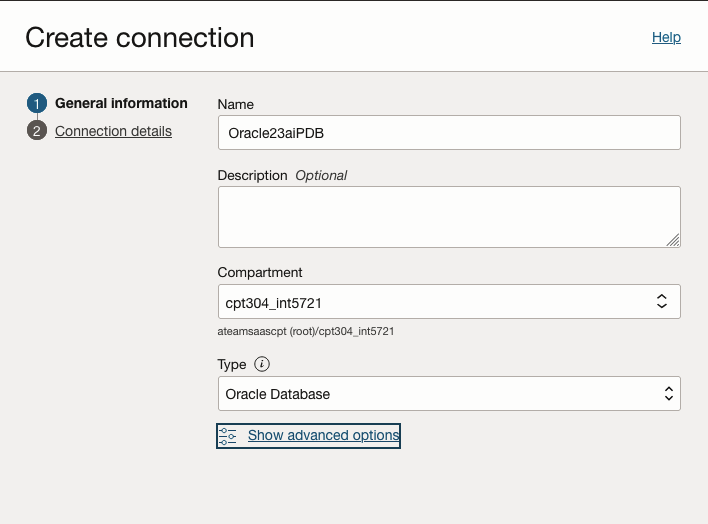

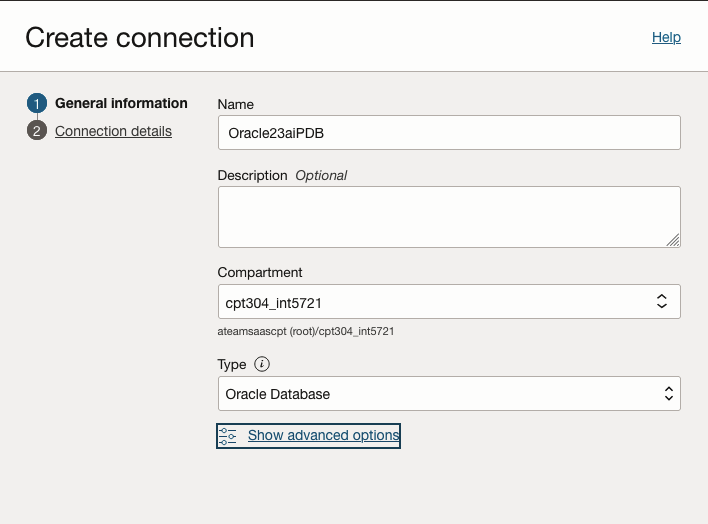

Oracle 23ai Connection

Navigate to Oracle Database > GoldenGate in the OCI Console, then click on Connections.

Click on “Create Connection”, provide a connection name, and select the option “Oracle Database”:

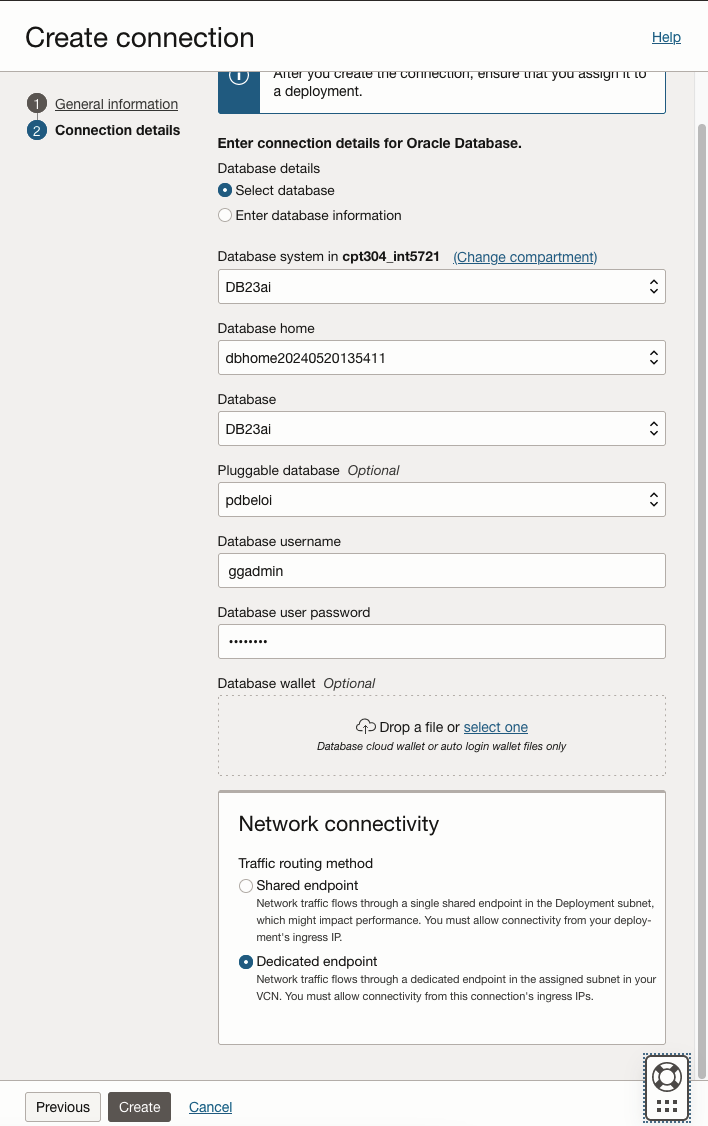

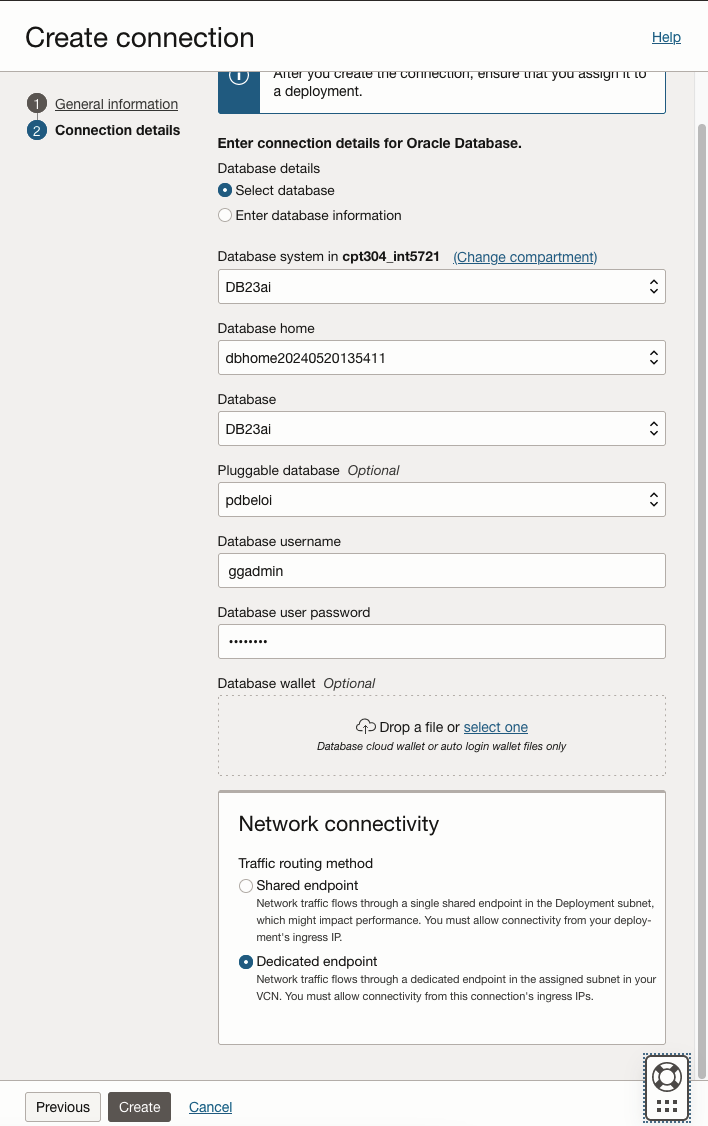

Select the database name and the PDB name, since the Oracle database will be a target:

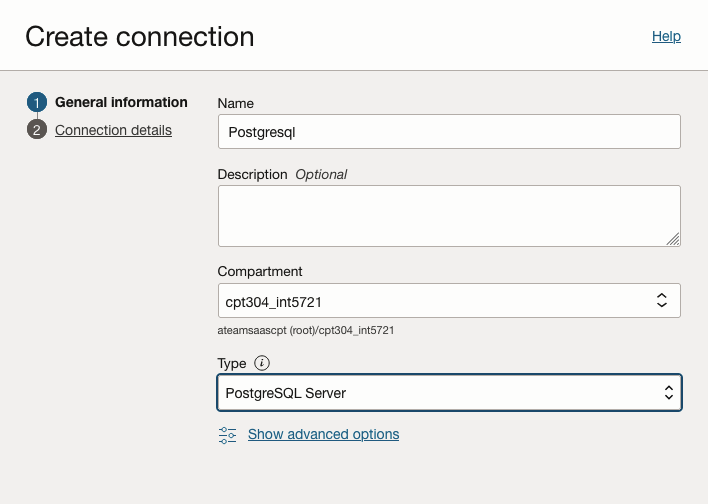

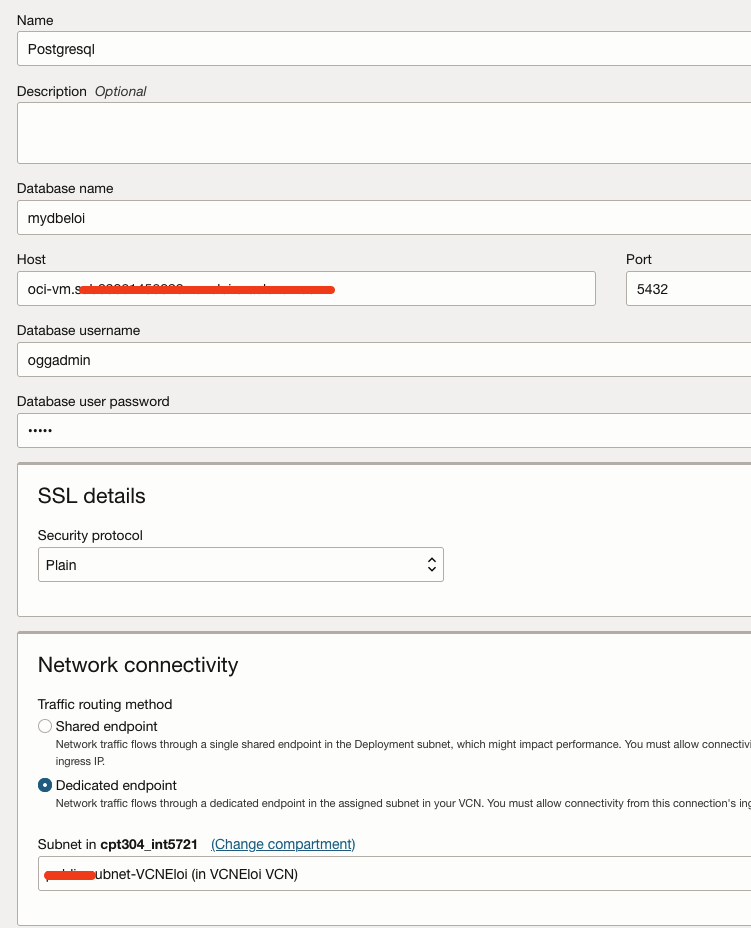

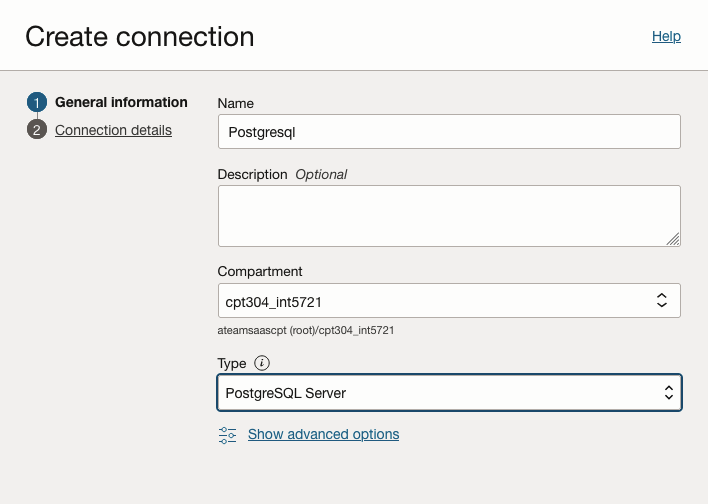

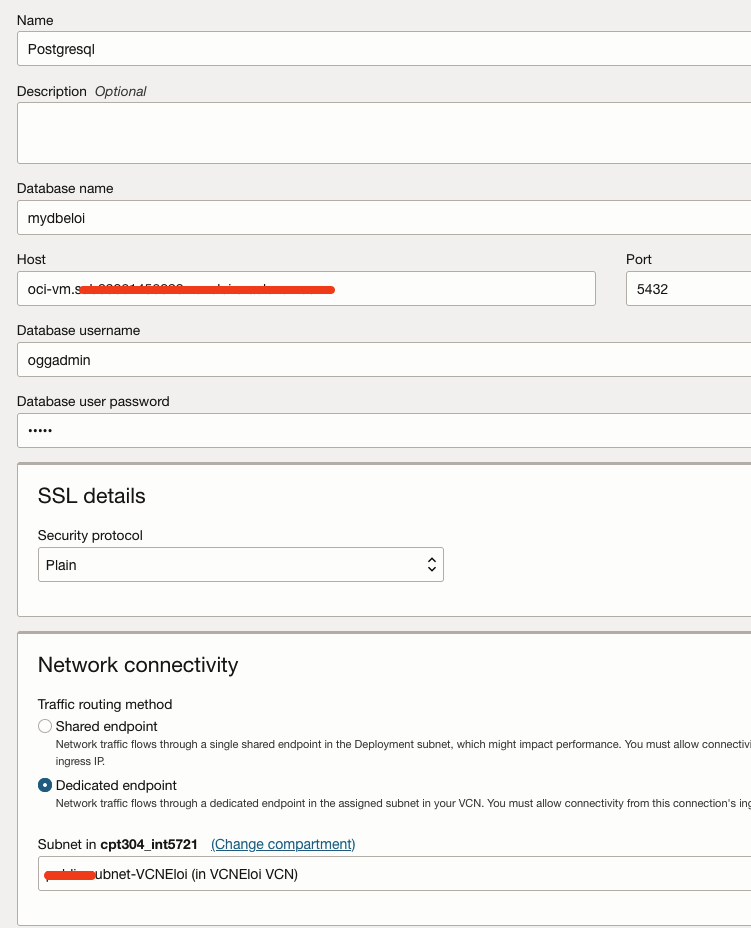

The process for the PostgreSQL connection is very similar.

Once you have created both connections, assign them to the OCI GoldenGate deployment.

Creating Connections and Adding Trandata

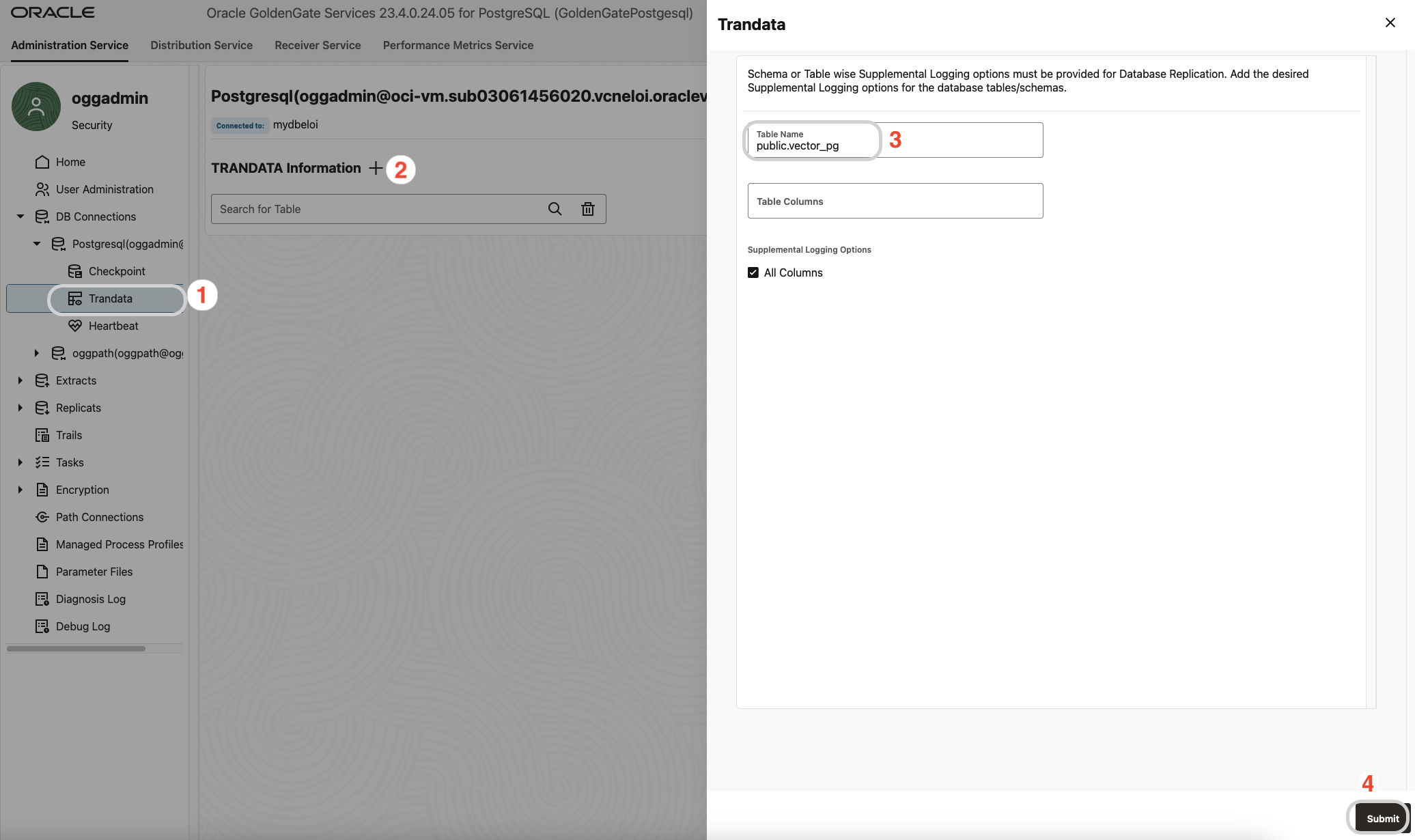

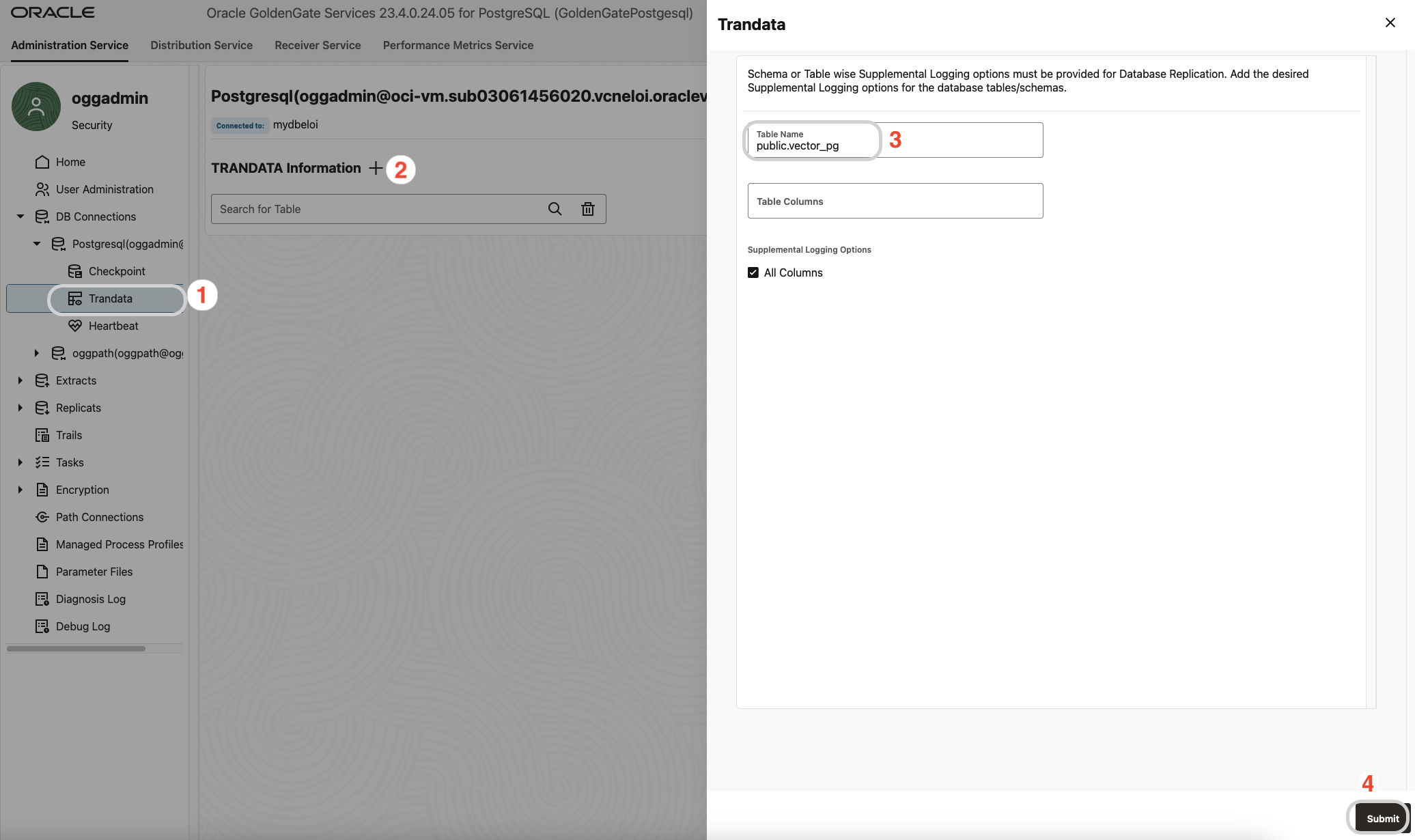

Adding Trandata

Access the GoldenGate console and click “Configuration”. You will find the two connections listed there as Credentials. First, test the connection to the primary database (testing the standby connection will fail because that database is not in open mode). To test the connection, click the first of the four icons to connect.

If the connectivity test is successful, you will see this screen. Add the trandata for your PostgreSQL table, in that order:

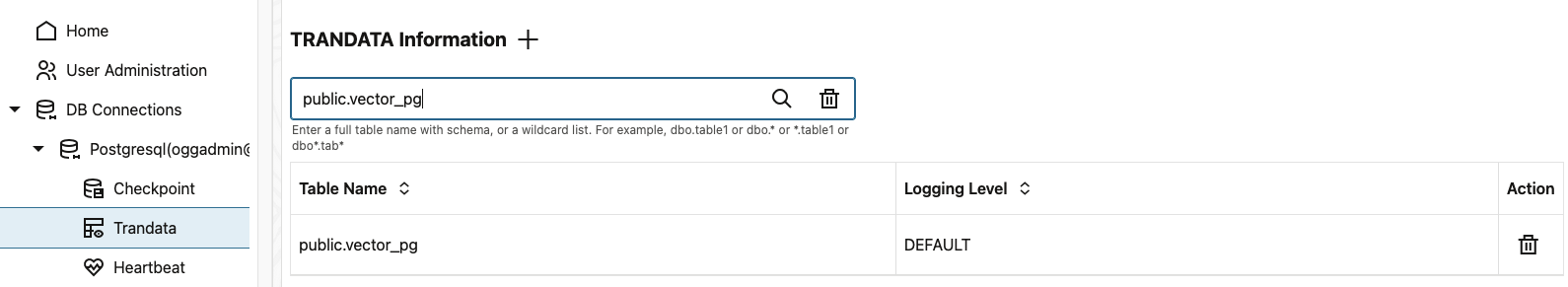

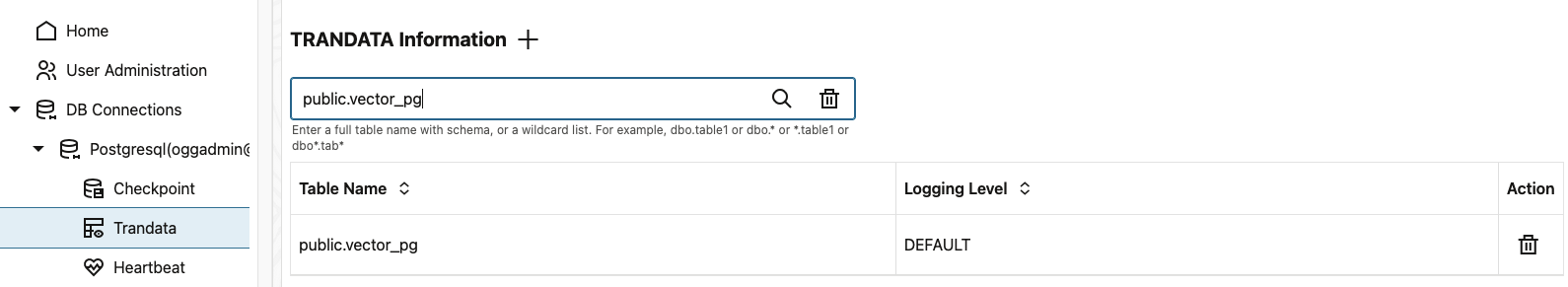

Confirm the trandata has been added by searching for your table:

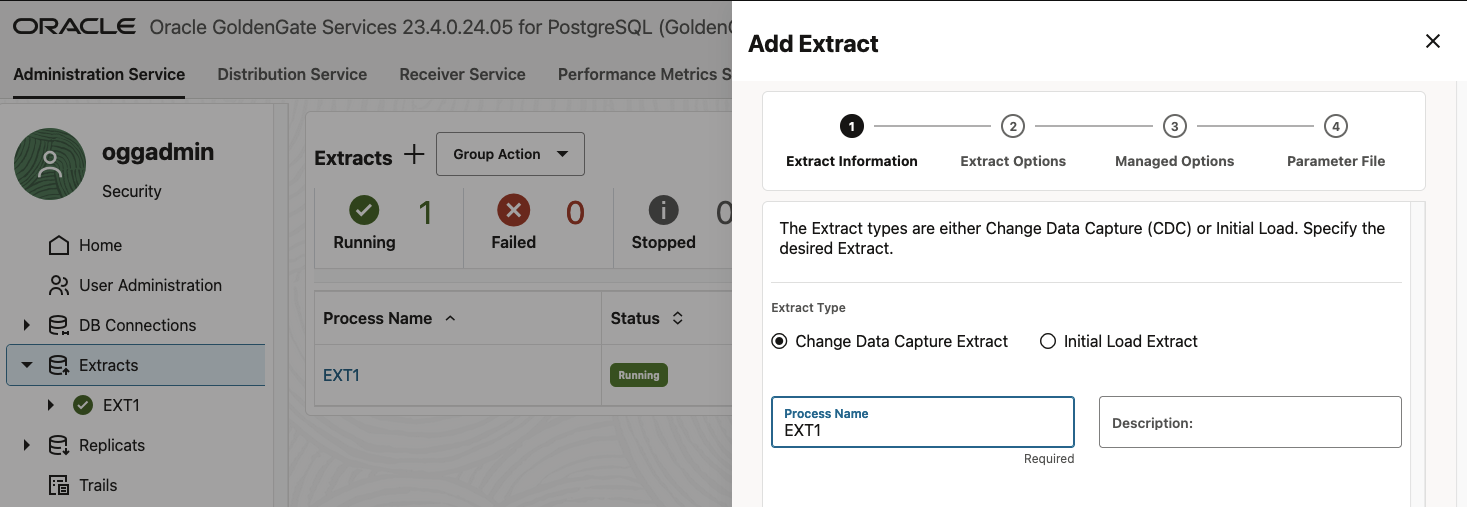

Creating Extract, Distribution Path and Replicat

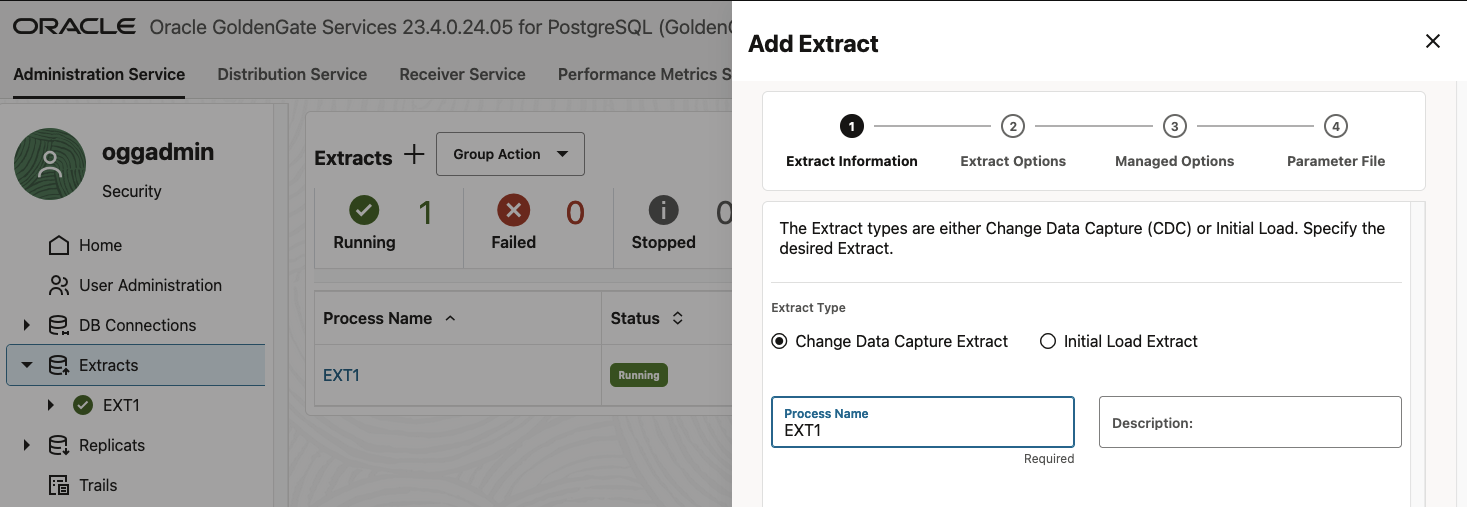

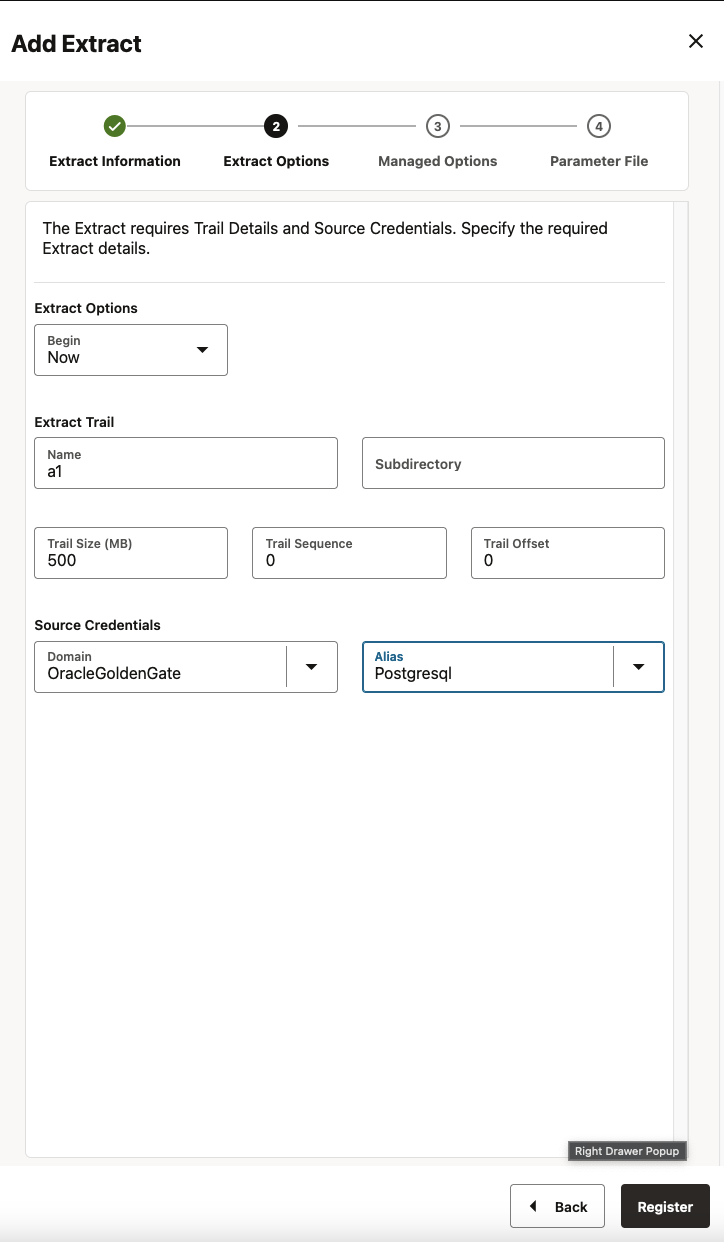

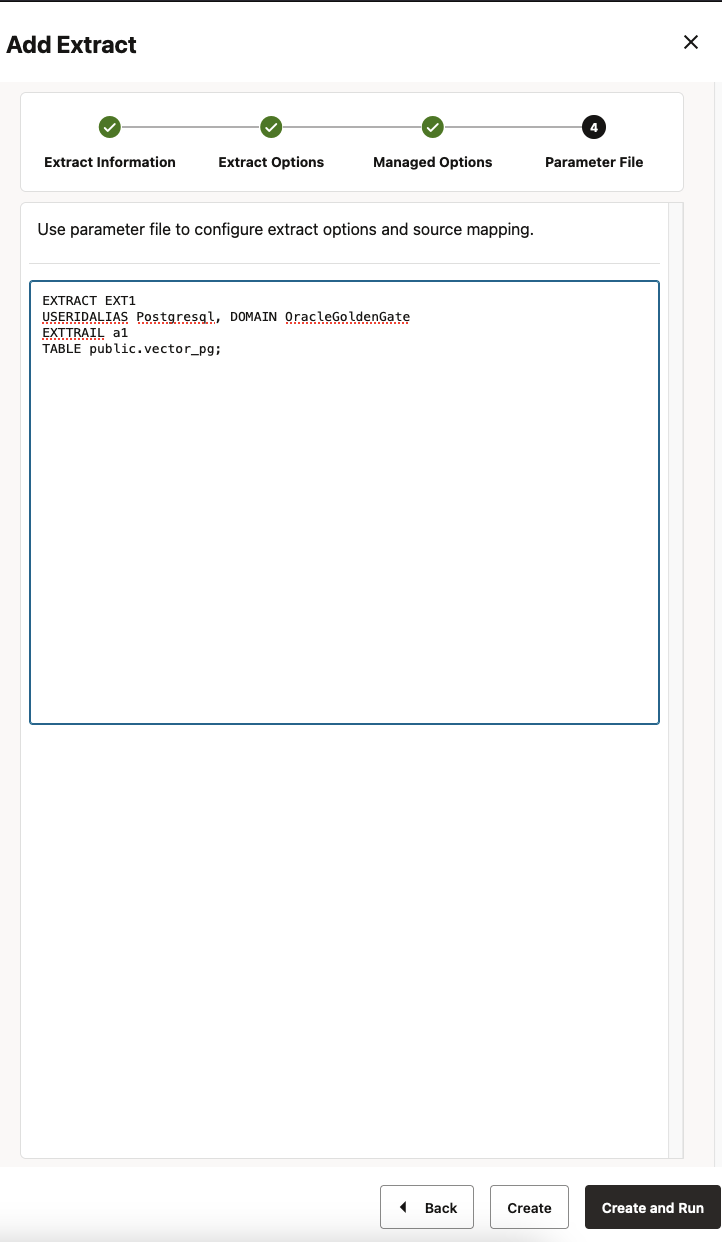

Creating Extract

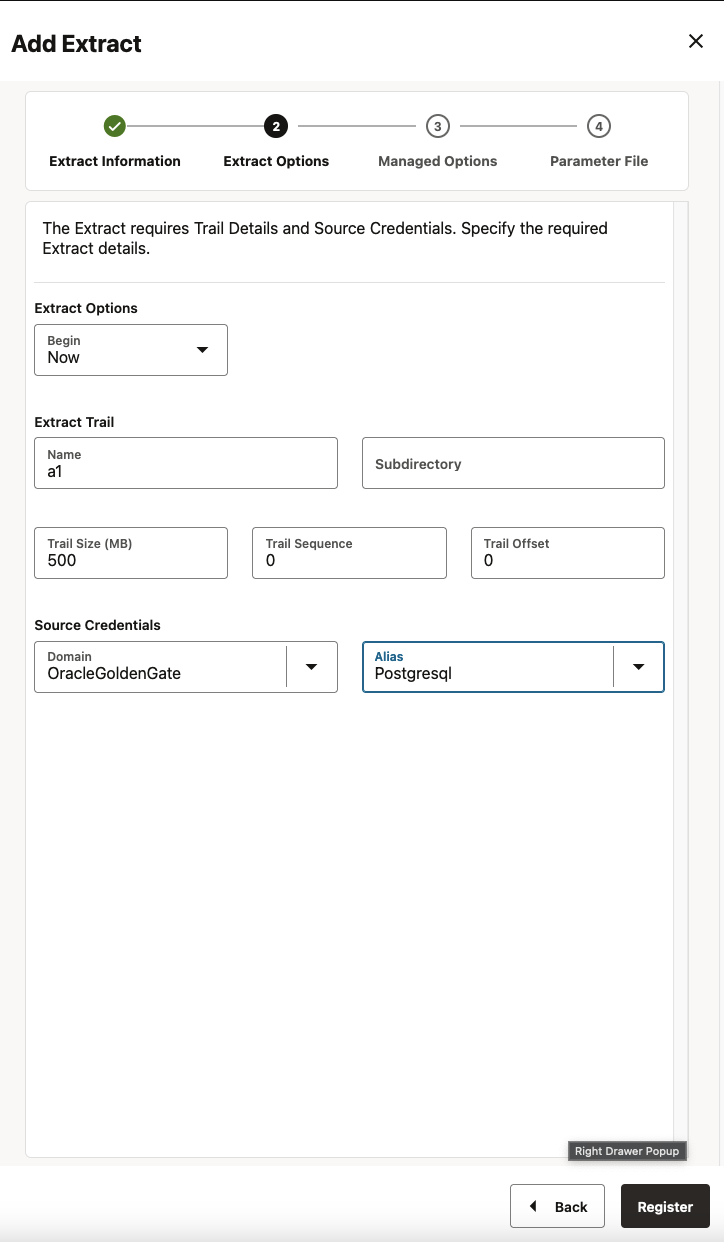

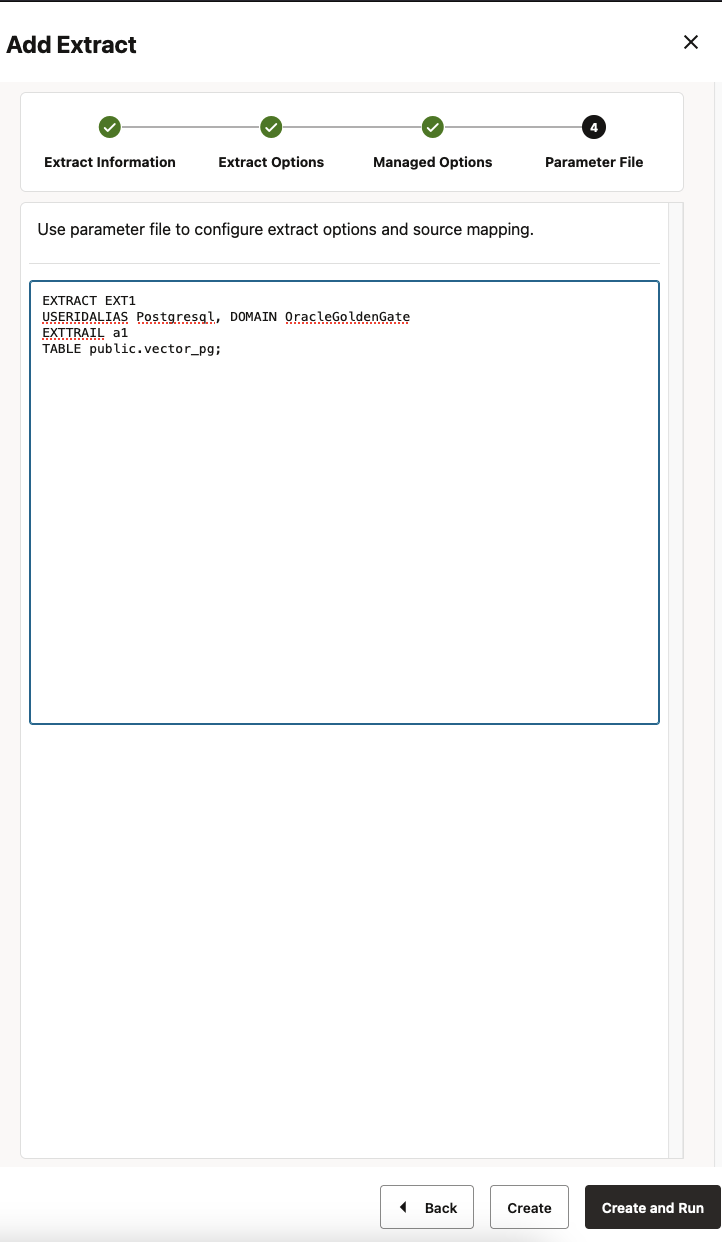

Click Add Extract (plus icon) in the Extracts panel to create either an Initial Load Extract or a Change Data Capture Extract. Select Change Data Capture Extract and click Next.

Enter a Trail Name, select the Domain and Alias. Click Next.

Specify which tables and schemas GoldenGate will capture data from in the Parameter Files page, then click Create.

Creating Distribution path

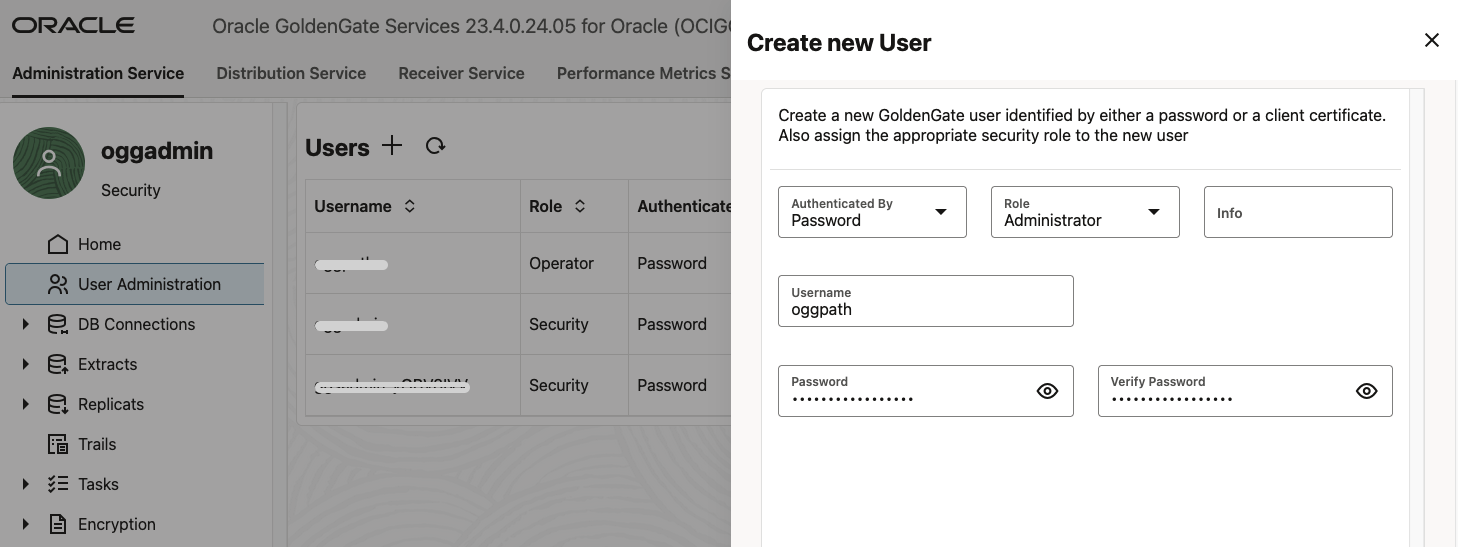

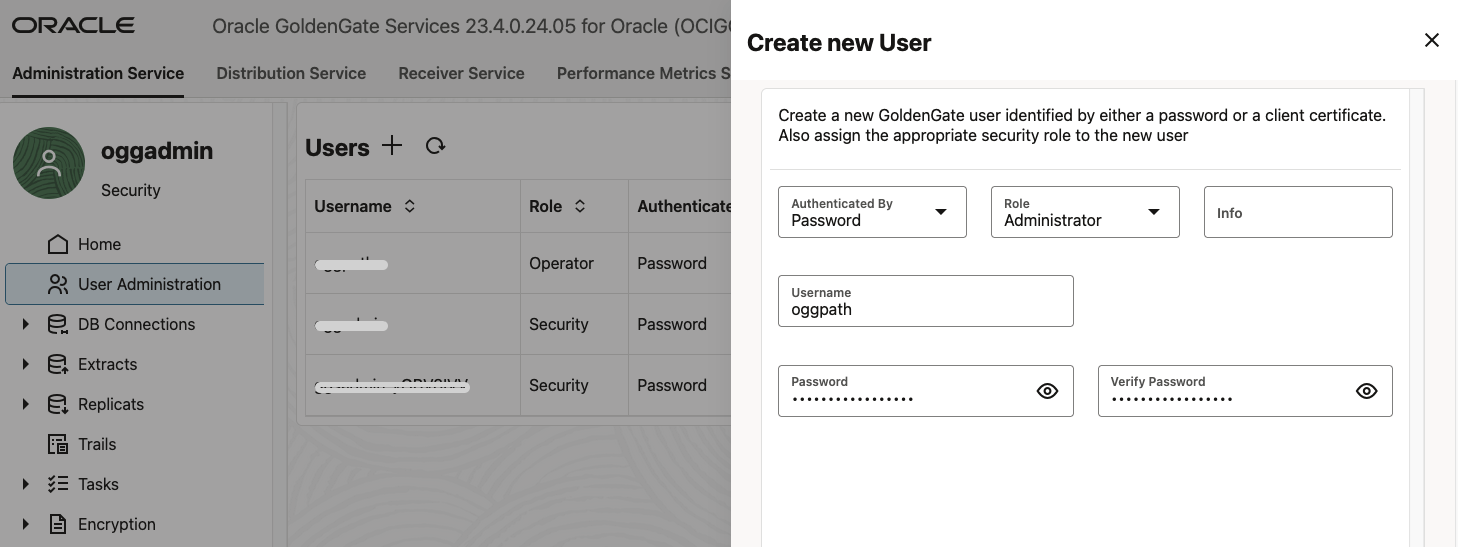

Before we create the distribution path, we need to create a credential in OCI GG for Oracle. This credential uses a GoldenGate user from the target OCI GoldenGate for Oracle deployment.

Create a new user for the Distribution Path

In the OCI GoldenGate (for Oracle) console, click User Administrator. Add a new user by clicking the ‘+’ sign. Specify the username, select the Operator role, set the Type to Password, and provide your password:

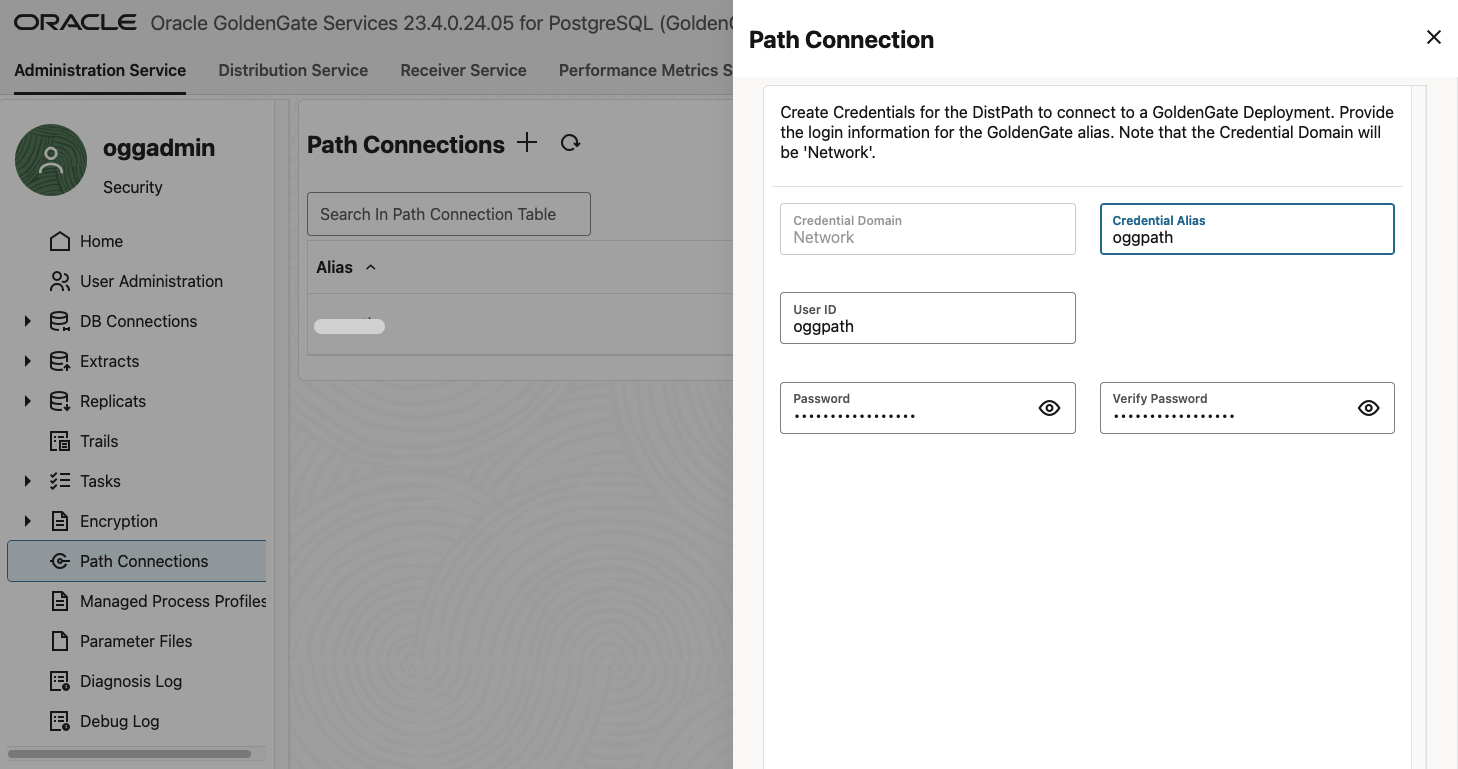

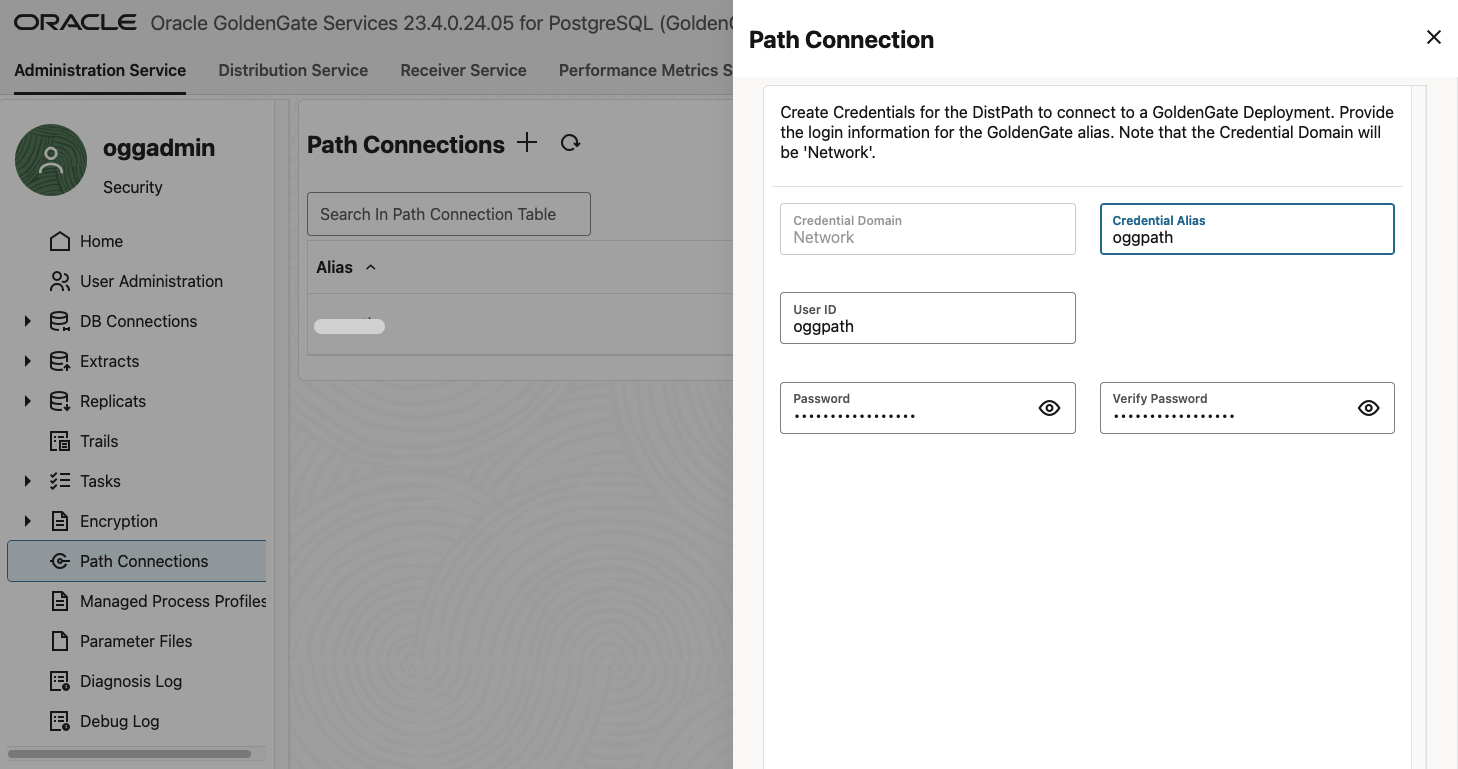

Add a new Path Connection on the source GoldenGate deployment

Go to OCI GoldenGate for PostgreSQL. Click Path Connections, and add a path connection by clicking the ‘+’ sign. Specify the same username and password you defined for the GoldenGate user on the target:

Create a Distribution Path

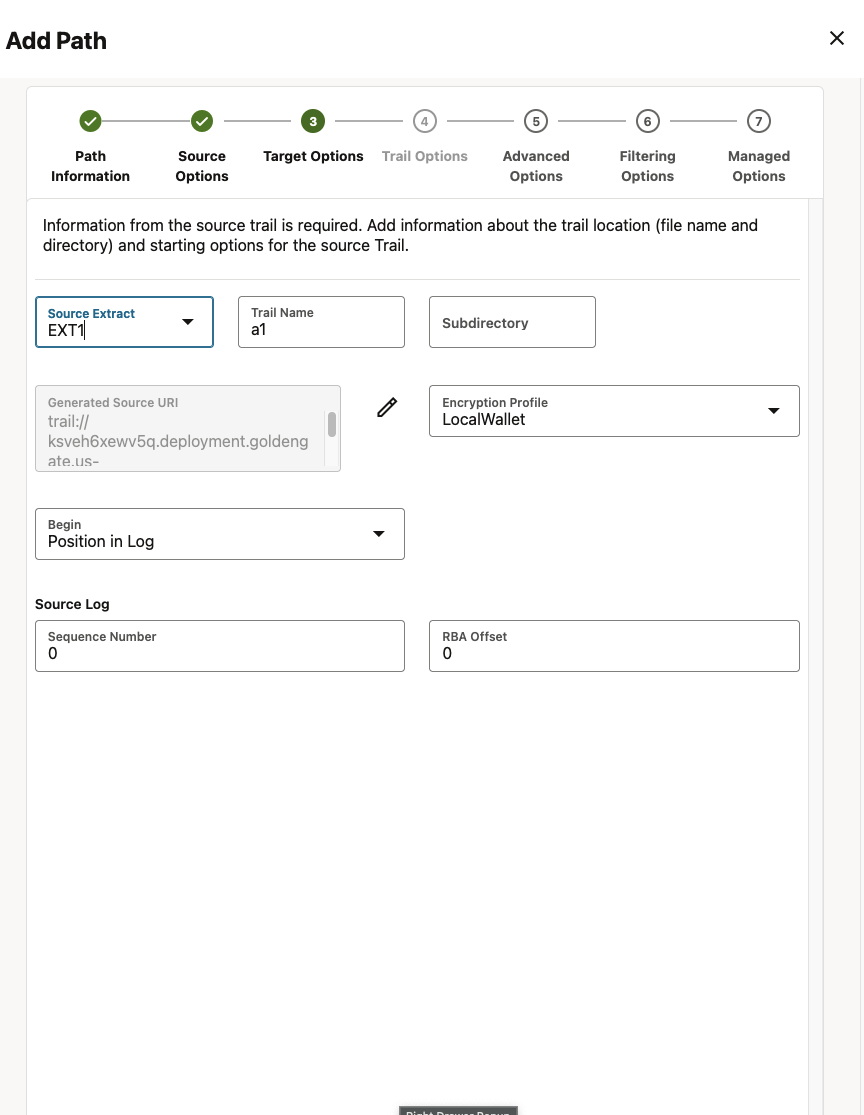

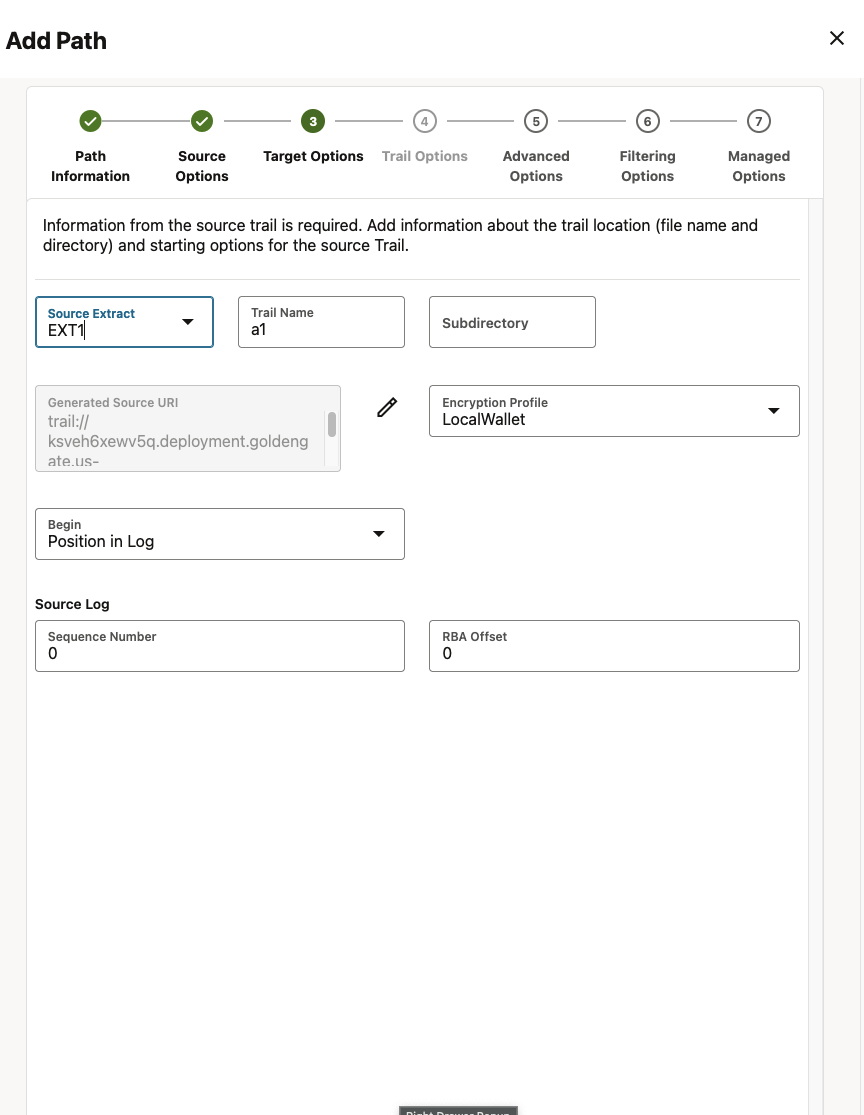

In OCI GoldenGate for PostgreSQL, go to Distribution Service and click Add Path (‘+’ icon). Enter the Path Name and click Next. Select the extract previously created, and the Trail Name will be populated automatically. Click Next.

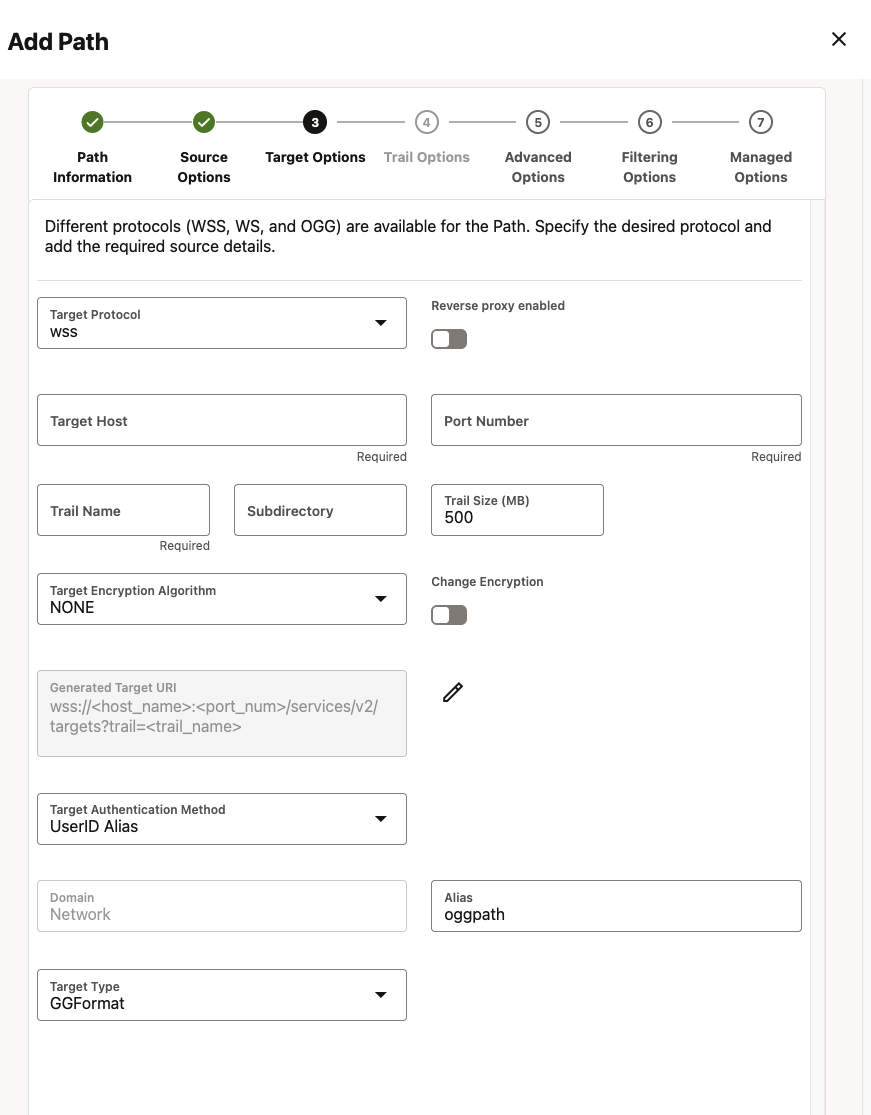

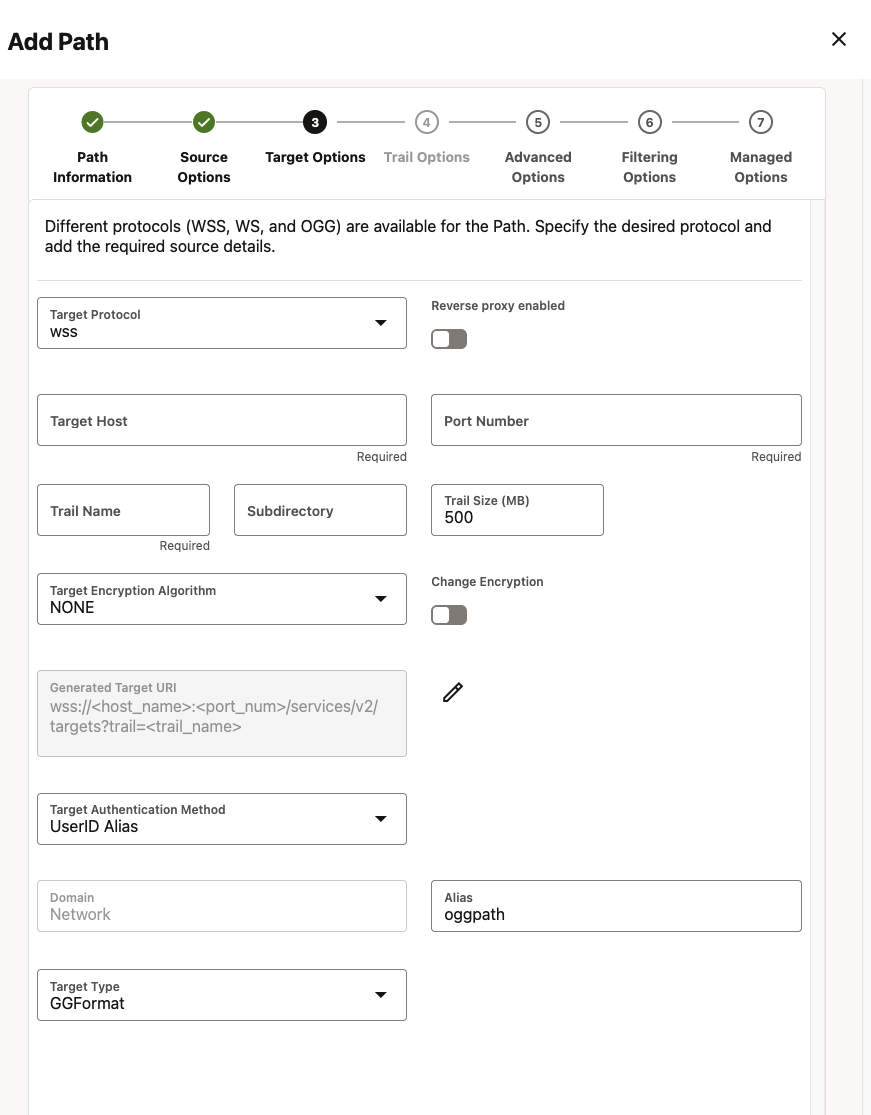

Change the Target Protocol to WSS. Specify the OCI GoldenGate for Oracle hostname as the Target, with port 443. Enter the Trail Name and the Alias of the credential created in the previous step. Click Next until the last screen, and then click Create Path.

Creating Replicat

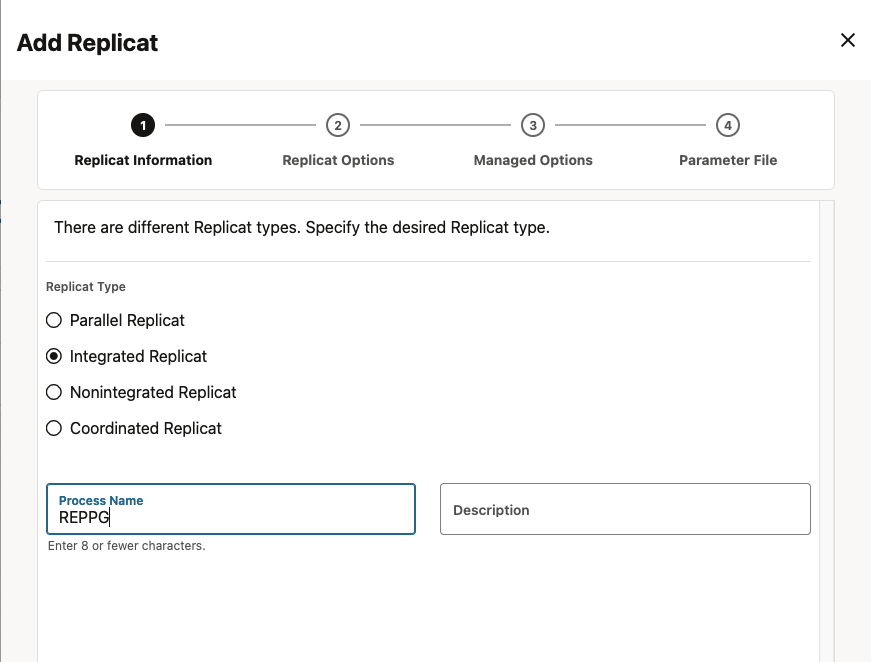

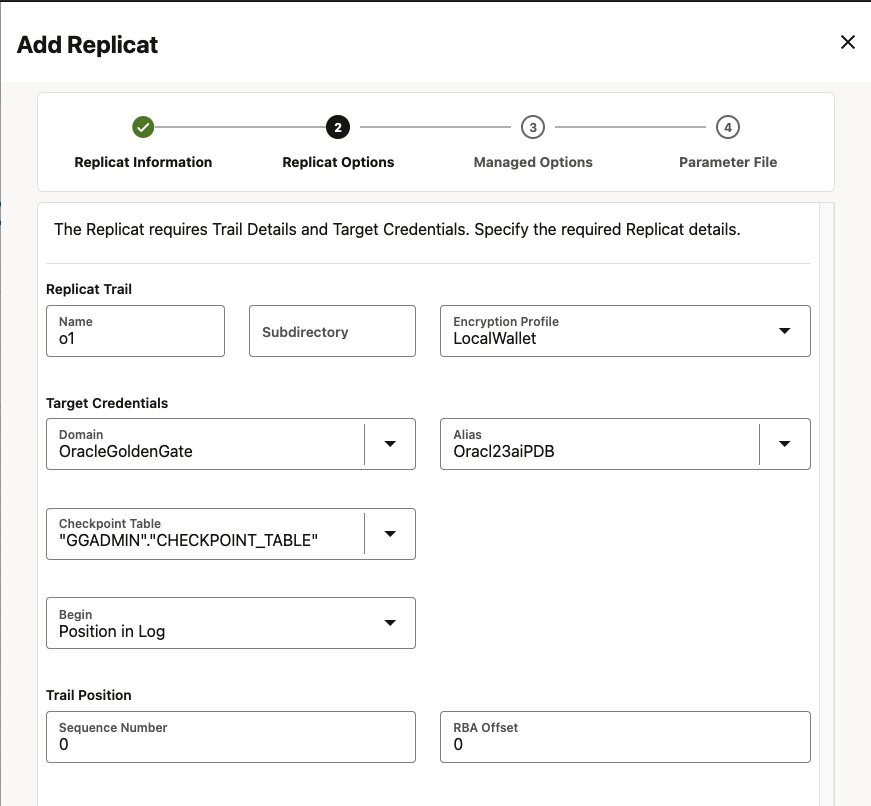

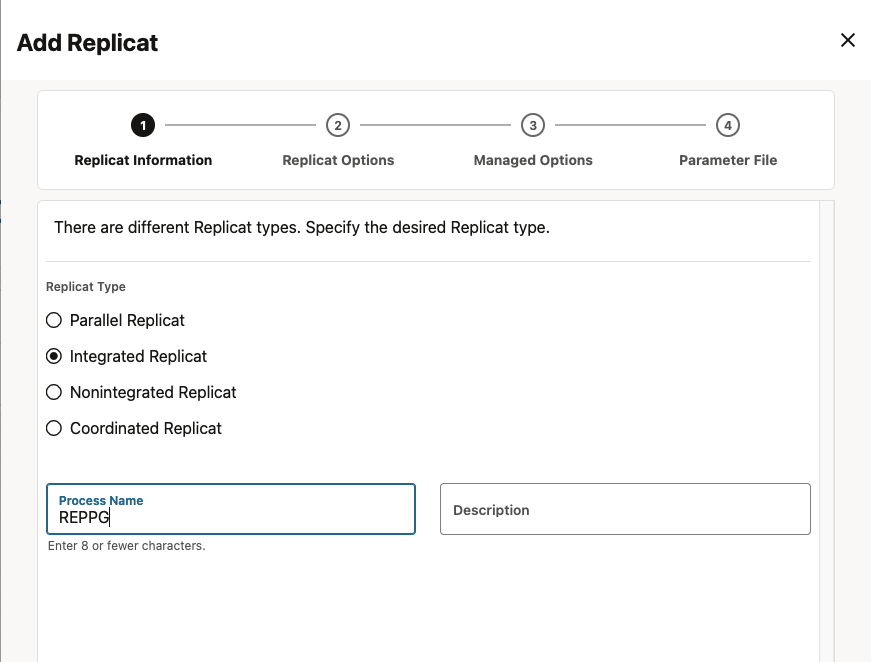

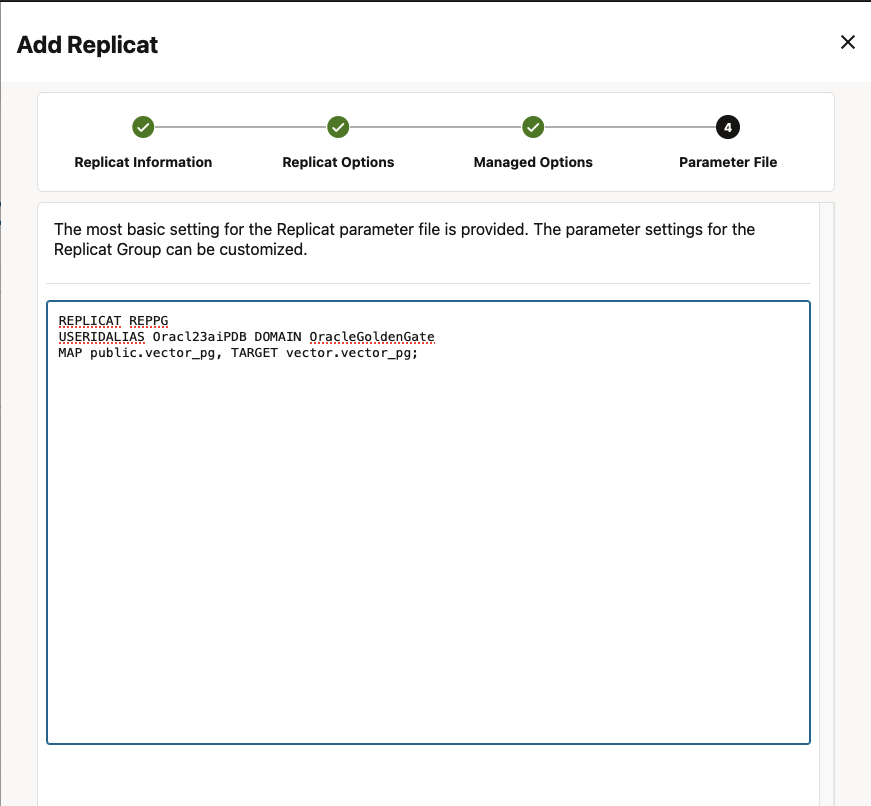

Click Add Replicat (plus icon) in the Replicats panel. Select Integrated Replicat and specify a Process Name for the replicat. Click Next.

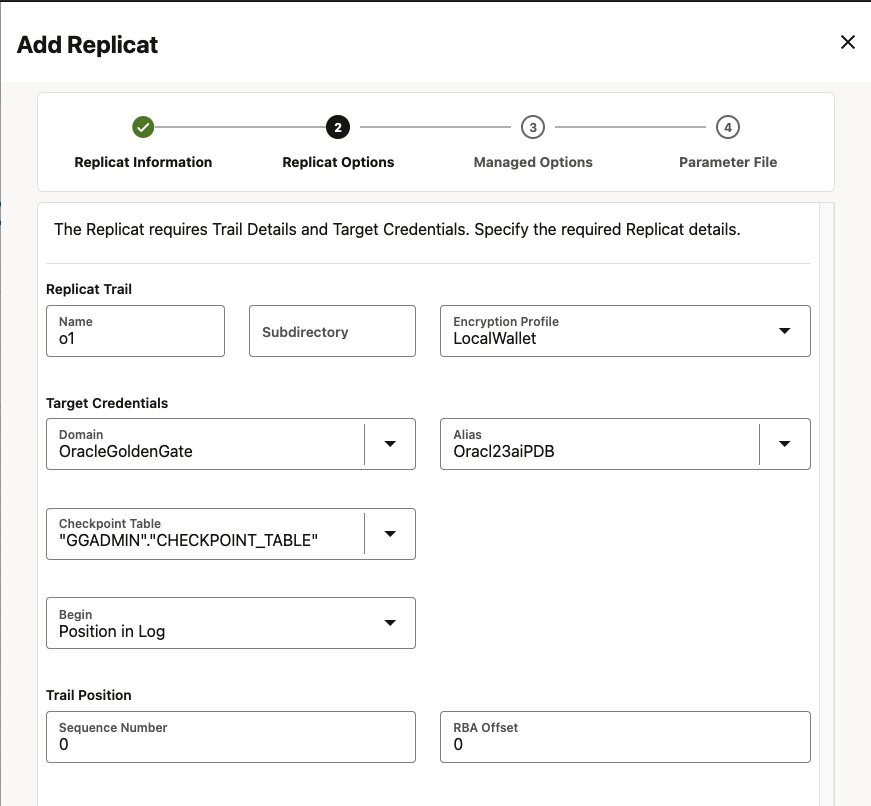

Select a Domain and Alias, and specify the Trail Name (the same one you used for the distribution path) and the Checkpoint Table. Click Next until you reach the parameter file.

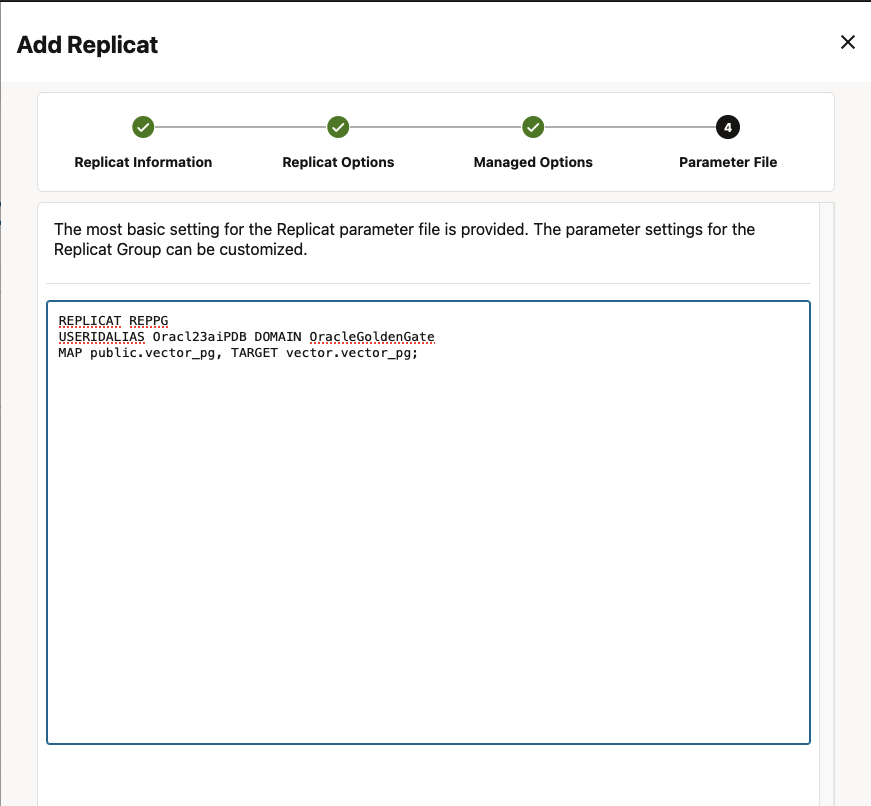

Specify how the source and target tables will be mapped by the Replicat and click Create and Run.

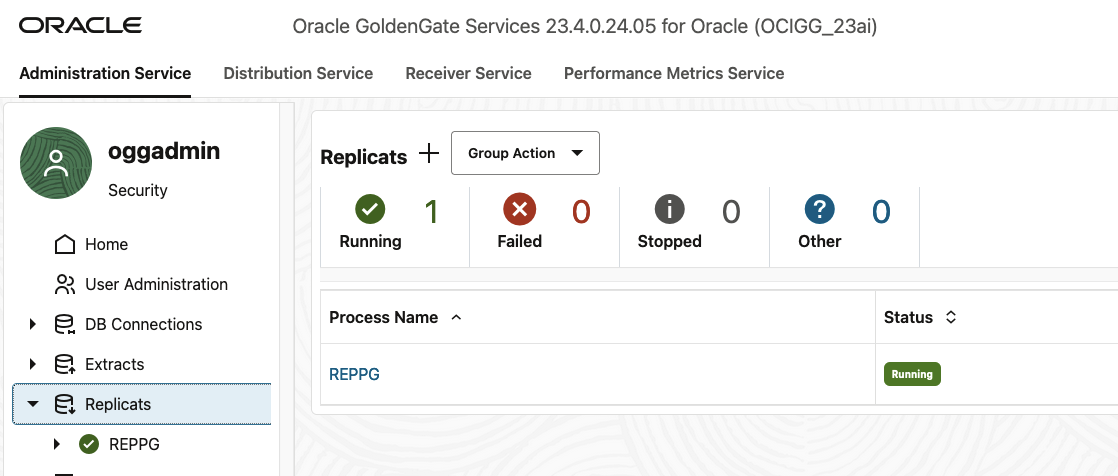

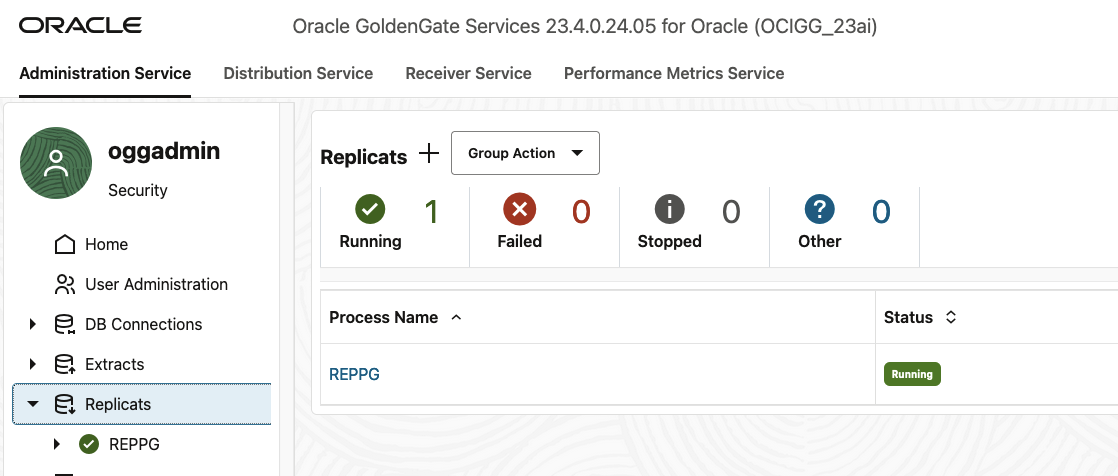

Our Replicat is now up and running in the OCI GoldenGate console.

Creating Sales Orders Chatbot

Now the data is being synchronized from PostgreSQL to Oracle. We can consolidate the data and start having a conversation with Oracle Database 23ai using RAG and a chatbot (Streamlit).

create or replace view v_vectors_search as
SELECT "ID","TEXT","METADATA","EMBEDDING" FROM VECTOR_PG
union all
SELECT "ID","TEXT","METADATA","EMBEDDING" FROM VECTOR_SALES;

The VECTOR_PG table contains the data from PostgreSQL. VECTOR_SALES is an Oracle table that is loaded based on this SQL:

select rownum as ID, et.embed_data as TEXT, json_object ('id' value rownum || '$' || et.embed_id, 'document_summary' value et.embed_data || '' FORMAT JSON) as METADATA,
  to_vector(et.embed_vector) as EMBEDDING
from
  SALES_ORDERS dt,
  dbms_vector_chain.utl_to_embeddings(
    dbms_vector_chain.utl_to_chunks(dbms_vector_chain.utl_to_text(
      'On ' || dt.FISCALDATA || ' the Customer : ' || dt.Customer || ' placed an order with item : ' || dt.Item || ' (Inventory Item Identifier: ' || dt.InventoryItemIdentifier
      || ') and Order Number: ' || dt.OrderNumber || ' This belongs to business unit : ' || dt.BusinessUnit || ' and has an order Count of ' || dt.OrdersCount
    ), json('{"normalize":"all"}')),
    json('{"provider":"database", "model":"demo_model"}')) t,
  dbms_vector_chain.utl_to_chunks(dbms_vector_chain.utl_to_text(
      'On ' || dt.FISCALDATA || ' the Customer : ' || dt.Customer || ' placed an order with item : ' || dt.Item || ' (Inventory Item Identifier: ' || dt.InventoryItemIdentifier
      || ') and Order Number: ' || dt.OrderNumber || ' This belongs to business unit : ' || dt.BusinessUnit || ' and has an order Count of ' || dt.OrdersCount
    ),
    JSON('{
      "by" : "words",
      "max" : "100",
      "overlap" : "0",
      "split" : "recursively",
      "language" : "american",
      "normalize" : "all"
    }')) ct,
  JSON_TABLE(t.column_value, '$[*]' COLUMNS (embed_id NUMBER PATH '$.embed_id', embed_data VARCHAR2(4000) PATH '$.embed_data',
    embed_vector CLOB PATH '$.embed_vector')) et,
  JSON_TABLE(ct.column_value, '$[*]' COLUMNS (chunk_id NUMBER PATH '$.chunk_id', chunk_data VARCHAR2(4000) PATH '$.chunk_data')) chunk_d
;
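The chunking parameters used above ("by" words, "max" 100, "overlap" 0) can be pictured with a small Python sketch. This is only an approximation for illustration: it is not how the database implements utl_to_chunks, and it ignores the "split", "language" and "normalize" options. The function name is hypothetical, while the chunk_id and chunk_data keys mirror the fields read by the JSON_TABLE call.

```python
def chunk_by_words(text, max_words=100, overlap=0):
    """Split text into chunks of at most max_words words, sharing `overlap` words."""
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be between 0 and max_words - 1")

    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunk_words = words[start:start + max_words]
        chunks.append({
            "chunk_id": len(chunks) + 1,
            "chunk_data": " ".join(chunk_words),
        })
        # Stop once the current chunk already reaches the end of the text.
        if start + max_words >= len(words):
            break
    return chunks


if __name__ == "__main__":
    sample = " ".join(f"w{i}" for i in range(250))
    for chunk in chunk_by_words(sample):
        print(chunk["chunk_id"], len(chunk["chunk_data"].split()))
```

Since each sales order sentence in this use case is well under 100 words, every row would normally produce a single chunk.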

Setting up RAG and Chatbot

The RAG is based on Oracle SQL, and the chatbot is based on Streamlit.

The code below belongs to the SearchDatabase.py file, and its ragdb function is called from ChatBot.py, the second file. The ragdb function uses the VECTOR_DISTANCE and UTL_TO_EMBEDDINGS Oracle Database functions to search for an answer to our query. Only the first result is returned.

SearchDatabase.py File

# Copyright (c) 2024 Oracle and/or its affiliates.

#

# The Universal Permissive License (UPL), Version 1.0

import sys

import oracledb

username = "vector"
password = "<password>"
proxy = ""

try:
    conn = oracledb.connect(user=username, password=password,
                            host="<host>", port=1521,
                            service_name="<service name>")
    # print("Connection successful!")
except Exception as e:
    print("Connection failed!", e)
    sys.exit(1)

def ragdb(query):
    # Embed the question inside the database and return the closest stored text
    sql = """SELECT TEXT
             FROM v_vectors_search
             ORDER BY VECTOR_DISTANCE( embedding, to_vector((select embed_vector
               from dbms_vector.UTL_TO_EMBEDDINGS(:query,
                 json('{"provider":"database", "model": "demo_model"}') ),
               JSON_TABLE(column_value, '$[*]' COLUMNS (embed_id NUMBER PATH '$.embed_id', embed_data VARCHAR2(4000) PATH '$.embed_data',
                 embed_vector CLOB PATH '$.embed_vector')) et)), DOT )
             FETCH APPROXIMATE FIRST 1 ROWS ONLY
          """
    bind_query = {"query": query}
    # The cursor is closed automatically when the block ends
    with conn.cursor() as cursor:
        cursor.execute(sql, bind_query)
        getText = cursor.fetchall()
        return str(getText[0][0])
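The ranking that ragdb delegates to the database can be illustrated in plain Python. The sketch below assumes, as the Oracle documentation describes it, that the DOT metric behaves like a negated dot product, so that sorting in ascending order puts the most similar row first. The function names and the two-dimensional sample vectors are made up for the example.

```python
def dot_distance(a, b):
    """Negated dot product: smaller values mean more similar vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return -sum(x * y for x, y in zip(a, b))


def top_match(query_vector, rows):
    """Return the text of the row closest to the query, or None if rows is empty.

    rows is a list of (text, embedding) pairs, playing the role of the
    TEXT and EMBEDDING columns of the v_vectors_search view.
    """
    ranked = sorted(rows, key=lambda row: dot_distance(query_vector, row[1]))
    return ranked[0][0] if ranked else None


if __name__ == "__main__":
    rows = [
        ("order A", [1.0, 0.0]),
        ("order B", [0.0, 1.0]),
        ("order C", [0.6, 0.8]),
    ]
    print(top_match([0.0, 1.0], rows))
    print(top_match([0.7, 0.7], rows))
```

A dot-product ranking only matches a cosine ranking when the vectors are normalized, which is the case for the embeddings generated by the Python script earlier in this post.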

In the generate_text function, we pass the result of the ragdb function, and OCI Generative AI rephrases the sentence. The UI used to interact with the database is powered by Streamlit.

ChatBot.py File:

# Copyright (c) 2024 Oracle and/or its affiliates.

#

# The Universal Permissive License (UPL), Version 1.0

import time

import oci
import streamlit as st

from SearchDatabase import ragdb

compartment_id = "<compartment id>"
CONFIG_PROFILE = "<Profile>"
config = oci.config.from_file('~/.oci/config', CONFIG_PROFILE)

# Service endpoint
endpoint = "https://inference.generativeai.us-chicago-1.oci.oraclecloud.com"

def generate_text(input_sentence):
    generative_ai_inference_client = oci.generative_ai_inference.GenerativeAiInferenceClient(
        config=config,
        service_endpoint=endpoint,
        retry_strategy=oci.retry.NoneRetryStrategy(),
        timeout=(10, 240))
    cohere_generate_text_request = oci.generative_ai_inference.models.CohereLlmInferenceRequest()
    cohere_generate_text_request.prompt = "Please, just reply with the sentence rephrased. " + input_sentence
    cohere_generate_text_request.max_tokens = 600
    cohere_generate_text_request.temperature = 1
    cohere_generate_text_request.frequency_penalty = 0
    cohere_generate_text_request.top_p = 0.75

    # create GenerateTextDetails object
    generate_text_detail = oci.generative_ai_inference.models.GenerateTextDetails()
    generate_text_detail.serving_mode = oci.generative_ai_inference.models.OnDemandServingMode(
        model_id="cohere.command")
    generate_text_detail.compartment_id = compartment_id
    generate_text_detail.inference_request = cohere_generate_text_request

    # call generate_text function
    generate_text_response = generative_ai_inference_client.generate_text(generate_text_detail)
    text = generate_text_response.data.inference_response.generated_texts[0].text

    # Yield the answer word by word so that Streamlit can render it as a stream
    for word in text.split():
        yield word + " "
        time.sleep(0.05)

st.title("Your Sales Orders Bot")

# Initialize chat history
if "messages" not in st.session_state:
    st.session_state.messages = []

# Display chat messages from history on app rerun
for message in st.session_state.messages:
    with st.chat_message(message["role"]):
        st.markdown(message["content"])

# React to user input
if prompt := st.chat_input("What is up?"):
    # Add user message to chat history
    st.session_state.messages.append({"role": "user", "content": prompt})
    # Display user message in chat message container
    with st.chat_message("user"):
        st.markdown(prompt)
    with st.chat_message("assistant"):
        # The latest message in the history is the prompt that was just added
        stream = generate_text(ragdb(prompt))
        response = st.write_stream(stream)
    st.session_state.messages.append({"role": "assistant", "content": response})
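The word-by-word streaming and the way the latest question is picked from the chat history can be tried outside Streamlit. The sketch below is a simplified stand-in with hypothetical helper names: it makes no OCI call and simply treats the chat history as a list of dictionaries with "role" and "content" keys, as in the script above.

```python
import time


def stream_words(text, delay=0.05):
    """Yield the text one word at a time, pausing `delay` seconds between words."""
    for word in text.split():
        yield word + " "
        time.sleep(delay)


def last_user_message(messages):
    """Return the content of the most recent user message, or None if there is none."""
    for message in reversed(messages):
        if message["role"] == "user":
            return message["content"]
    return None


if __name__ == "__main__":
    history = [
        {"role": "user", "content": "Which customer placed the last order?"},
        {"role": "assistant", "content": "Customer Acme Corp placed it."},
        {"role": "user", "content": "And for which item?"},
    ]
    print(last_user_message(history))
    print("".join(stream_words("hello big world", delay=0)))
```

Note that each question is answered independently: only the latest user message is sent to the search function, so earlier turns of the conversation do not influence the retrieval.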

To start the application, just run:

streamlit run ChatBot.py

Now you can ask some questions about your data. Keep in mind that this is just an example of how the Oracle AI capabilities can be used and integrated. The models aren’t perfect, and some use cases will require fine-tuning, which is outside the scope of this blog.

Conclusion

I hope that you have enjoyed this blog and that it can help you to start using Oracle AI capabilities for your data projects.

References

https://docs.oracle.com/en/database/oracle/machine-learning/oml4py/2/mlugp/install-oml4py-premises-database.html#GUID-84012E3D-9179-43D6-8085-EE46D503C159

https://docs.oracle.com/en/database/oracle/machine-learning/oml4py/2/mlugp/install-oml4py-server-linux-premises-oracle-database-23c.html

https://enesi.no/2024/05/oml4py-client-on-ubuntu/

https://docs.oracle.com/en/database/oracle/machine-learning/oml4py/1/mlpug/run-user-defined-python-function.html#GUID-E9782385-B82C-4024-972D-A081BDE8805A

https://docs.oracle.com/en/database/oracle/oracle-database/23/vecse/generate-text-prompt-pl-sql-example.html

https://docs.oracle.com/en/database//oracle/oracle-database/23/arpls/dbms_vector_chain1.html#GUID-C6439E94-4E86-4ECD-954E-4B73D53579DE

https://python.langchain.com/v0.1/docs/integrations/tools/oracleai/

https://api.python.langchain.com/en/latest/vectorstores/langchain_community.vectorstores.oraclevs.OracleVS.html

https://github.com/langchain-ai/langchain/blob/master/cookbook/oracleai_demo.ipynb

https://luca-bindi.medium.com/oracle23ai-simplifies-rag-implementation-for-enterprise-llm-interaction-in-enterprise-solutions-d865dacdd1ed

https://huggingface.co/sentence-transformers/all-MiniLM-L6-v2

https://docs.streamlit.io/get-started