Welcome, everyone.

In this blog, we set up the OCI GoldenGate service to replicate data into Confluent Kafka using custom properties.

When you set up GoldenGate replication into Confluent Kafka in OCI GoldenGate, most of the properties are auto-generated. If you need custom properties, you have to upload them yourself.

This blog shows how to upload custom Confluent Kafka properties in the OCI GG service.

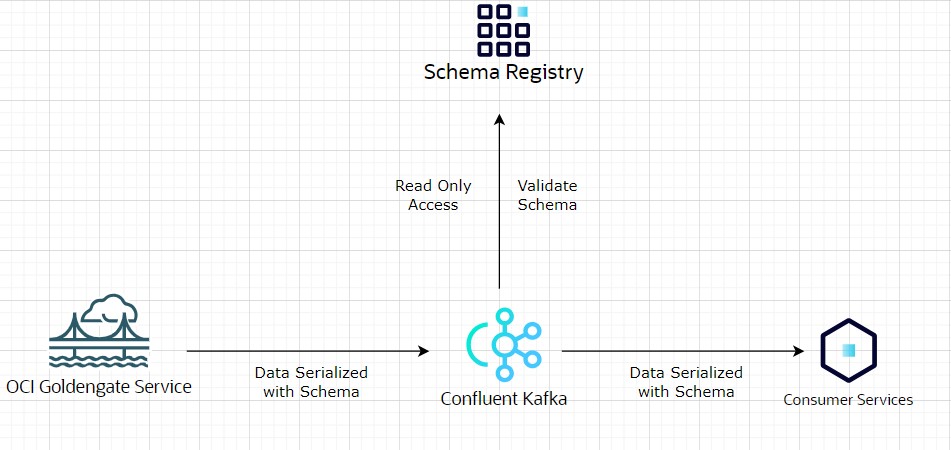

Architecture:

Use Case:

In this setup, the requirement is to give GoldenGate read-only access to the schema registry, because we want to control schema changes.

By default, OCI GG updates the schema registry with a new schema whenever a DDL change is made on the source table.

OCI GoldenGate supports the Avro and JSON converters by default, but we wanted to use StringConverter for key.converter and AvroConverter for value.converter.

That is why we need to create a custom producer properties file and upload it into OCI GoldenGate for use in the GoldenGate Replicat process.

We can update the custom properties in the connection section of the OCI GG service.

Steps to follow for the setup:

1. GG needs write access the first time, so that it can create the initial schema for every table being replicated. This also means that every time you add a new table to the replication, you need to grant write access to the schema registry again so that GG can send the initial schema.

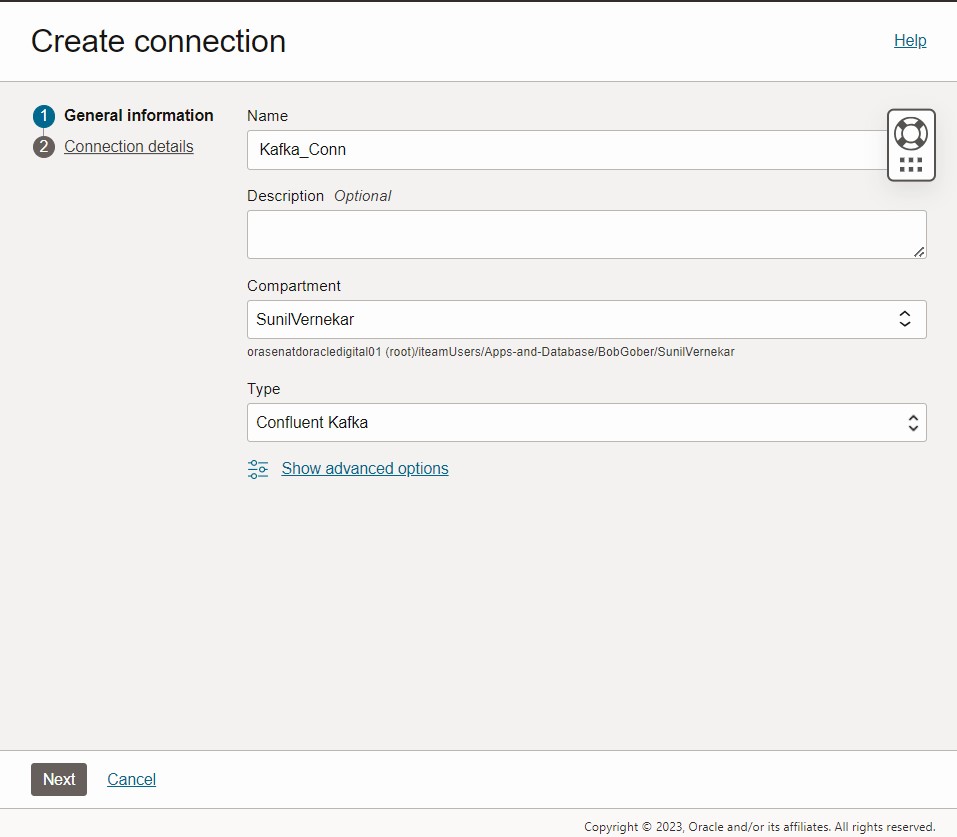
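Before revoking or re-granting write access, it can help to know which replicated topics still have no value schema registered. The snippet below is a minimal sketch of that check, not part of the OCI GG tooling. It assumes the registry uses the default subject naming where a topic's value schema is stored under `<topic>-value`, and the topic and subject names are hypothetical placeholders. The list of registered subjects would come from your schema registry.

```python
from typing import Iterable, List


def topics_missing_value_schema(
    topics: Iterable[str],
    registered_subjects: Iterable[str],
    subject_suffix: str = "-value",  # assumption: default "<topic>-value" naming
) -> List[str]:
    """Return the topics that have no value-schema subject registered yet."""
    registered = set(registered_subjects)
    return [t for t in topics if t + subject_suffix not in registered]


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    replicated_topics = ["ORDERS", "CUSTOMERS", "PAYMENTS"]
    subjects = ["ORDERS-value", "CUSTOMERS-value"]
    print(topics_missing_value_schema(replicated_topics, subjects))
```

Any topic returned by this function would still need the write-access phase described in this step.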

2. Replicating to Confluent Kafka requires two connections. We assume these have already been created:

- Kafka connection

- Schema registry connection

You can follow the blog below to set up replication to Kafka platforms.

Using Oracle Cloud Infrastructure GoldenGate with Kafka Platforms

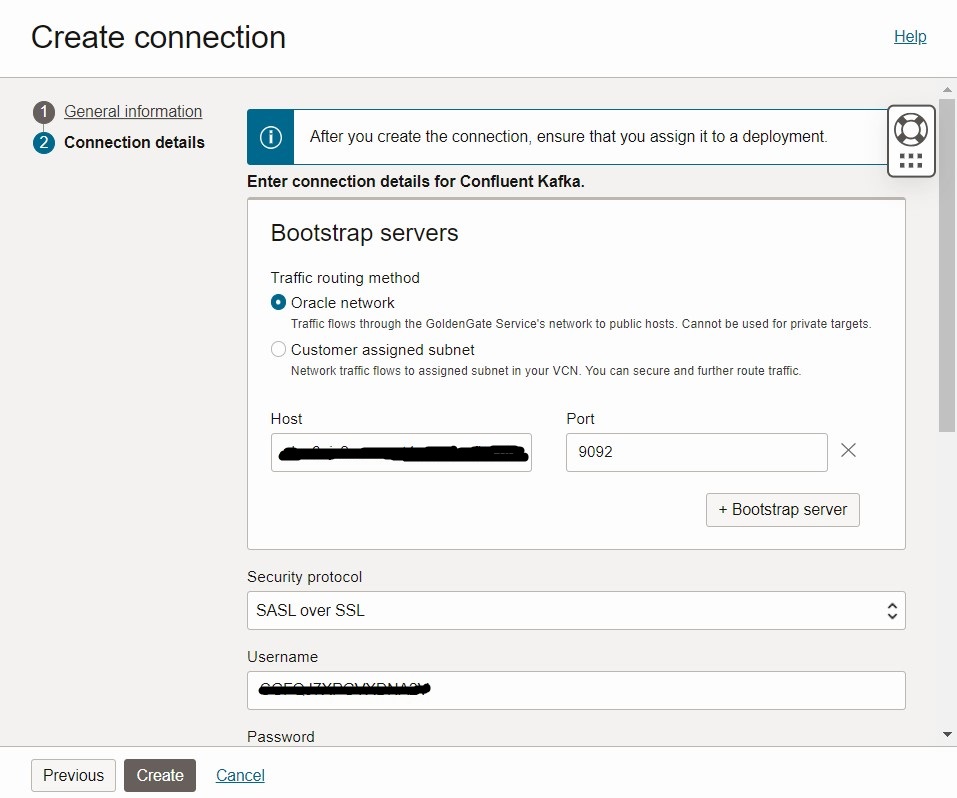

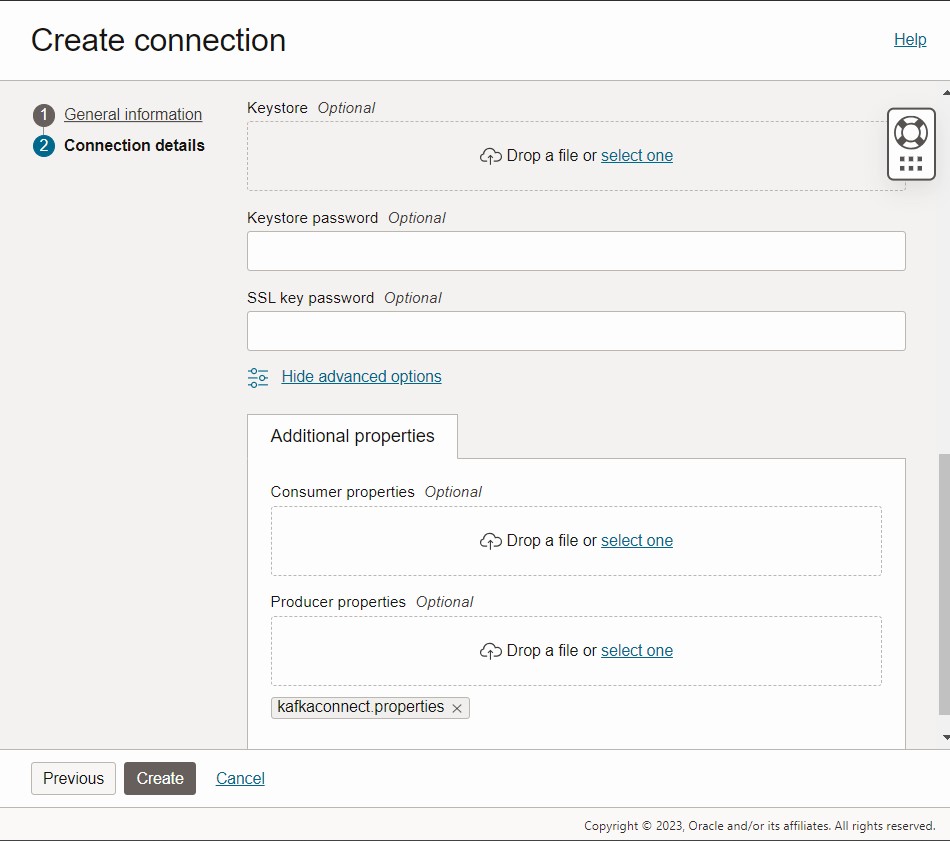

While GG has write access to the schema registry, we need to use the custom properties below. Create a file with the following properties and upload it to your Kafka connection:

key.converter=org.apache.kafka.connect.storage.StringConverter

value.converter=io.confluent.connect.avro.AvroConverter

value.converter.auto.register.schemas=true

value.converter.use.latest.version=false

Alternatively, you can upload them while creating a new Kafka connection.

The option to upload custom properties is under:

Show Advanced Options → Update additional properties → Producer properties
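If you prefer to generate the upload file rather than type it by hand, the following is a minimal sketch. The function name and the output file names are hypothetical; only the four property keys and their values come from this blog. A single flag selects between the write-access variant (schemas are auto-registered) and the read-only variant used later.

```python
def producer_properties(allow_schema_registration: bool) -> str:
    """Render the custom producer properties for one phase of the setup."""
    props = {
        "key.converter": "org.apache.kafka.connect.storage.StringConverter",
        "value.converter": "io.confluent.connect.avro.AvroConverter",
        # With write access GG registers schemas itself; without it,
        # the converter is told to use the latest registered version.
        "value.converter.auto.register.schemas": str(allow_schema_registration).lower(),
        "value.converter.use.latest.version": str(not allow_schema_registration).lower(),
    }
    return "\n".join(f"{key}={value}" for key, value in props.items()) + "\n"


if __name__ == "__main__":
    # Hypothetical file name; upload the result under "Producer properties".
    with open("producer-write-access.properties", "w") as handle:
        handle.write(producer_properties(allow_schema_registration=True))
```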

3. Once the Replicat has created schemas for all the tables, stop the Replicat and remove the GG user's write access to the schema registry.

Then edit the Kafka connection and upload a file with the properties below.

Show Advanced Options → Update additional properties → Producer properties

key.converter=org.apache.kafka.connect.storage.StringConverter

value.converter=io.confluent.connect.avro.AvroConverter

value.converter.auto.register.schemas=false

value.converter.use.latest.version=true
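To confirm that only the intended settings change between the write-access file and the read-only file, you can compare the two before uploading. This is a small illustrative sketch with hypothetical helper names. It parses simple `key=value` lines and ignores comments and blank lines, which is enough for the files shown in this blog but is not a full Java properties parser.

```python
WRITE_PHASE = """
key.converter=org.apache.kafka.connect.storage.StringConverter
value.converter=io.confluent.connect.avro.AvroConverter
value.converter.auto.register.schemas=true
value.converter.use.latest.version=false
"""

READ_ONLY_PHASE = """
key.converter=org.apache.kafka.connect.storage.StringConverter
value.converter=io.confluent.connect.avro.AvroConverter
value.converter.auto.register.schemas=false
value.converter.use.latest.version=true
"""


def parse_properties(text: str) -> dict:
    """Parse simple key=value lines, skipping blanks and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def changed_keys(before: str, after: str) -> dict:
    """Map each differing key to its (before, after) values."""
    old, new = parse_properties(before), parse_properties(after)
    return {
        key: (old.get(key), new.get(key))
        for key in sorted(old.keys() | new.keys())
        if old.get(key) != new.get(key)
    }


if __name__ == "__main__":
    for key, (old, new) in changed_keys(WRITE_PHASE, READ_ONLY_PHASE).items():
        print(f"{key}: {old} -> {new}")
```

Only the two `value.converter.*` flags differ; the converter classes stay the same in both phases.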

4. In both of the steps above, the Replicat uses the following properties file:

# Properties file for Replicat RConf

#Kafka Connect Handler Template

gg.handlerlist=kafkaconnect

gg.handler.kafkaconnect.type=kafkaconnect

gg.handler.kafkaconnect.connectionId=ocid1.goldengateconnection.oc1.ca-montreal-1.amaaaaaakgimj3aapgarjbnrh26s46i6khcdddehu5j6t6pjehx5yxgm2v2q

gg.handler.kafkaconnect.mode=op

#TODO: Set the template for resolving the topic name.

gg.handler.kafkaconnect.topicMappingTemplate=xxxxxxxxx

gg.handler.kafkaconnect.keyMappingTemplate=${primaryKeys}

gg.handler.kafkaconnect.messageFormatting=row

gg.handler.kafkaconnect.metaColumnsTemplate=${objectname[table]},${optype[op_type]},${timestamp[op_ts]},${currenttimestamp[current_ts]},${position[pos]}

gg.handler.kafkaconnect.schemaRegistryConnectionId=ocid1.goldengateconnection.oc1.ca-montreal-1.amaaaaaakgimj3aa46suka7gy54ttzxyopcuyxhwaj5hdn4uuhmiwhl67txq

#gg.handler.kafkaconnect.converter=avro

#TODO: Set the location of the Kafka client libraries

gg.classpath=$THIRD_PARTY_DIR/kafka/*

jvm.bootoptions=-Xmx512m -Xms32m

The only change is to comment out the following property: #gg.handler.kafkaconnect.converter=avro
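A quick way to double-check this before starting the Replicat is to scan the properties text for an active (uncommented) converter line. The sketch below uses hypothetical helper names and a shortened sample file. It only checks that the key is not set, presumably so that the converters from the uploaded producer properties are the ones in effect.

```python
CONVERTER_KEY = "gg.handler.kafkaconnect.converter"


def active_keys(properties_text: str) -> set:
    """Return the keys of all uncommented key=value lines."""
    keys = set()
    for line in properties_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        keys.add(line.partition("=")[0].strip())
    return keys


def converter_is_commented_out(properties_text: str) -> bool:
    """True when the handler converter property is not actively set."""
    return CONVERTER_KEY not in active_keys(properties_text)


if __name__ == "__main__":
    # Shortened sample based on the Replicat properties file above.
    sample = """
gg.handlerlist=kafkaconnect
gg.handler.kafkaconnect.type=kafkaconnect
gg.handler.kafkaconnect.mode=op
#gg.handler.kafkaconnect.converter=avro
"""
    print(converter_is_commented_out(sample))
```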

Conclusion:

In this blog, we learned how to use custom properties when configuring data replication to Confluent Kafka with read-only access to the schema registry.