It is more critical than ever to understand and react to business events as they occur: shifts in customer behavior, fraudulent activity, and marketing opportunities need to be addressed immediately, not hours, days, or weeks later. Many enterprises have recognized this need and have built a streaming backbone that delivers real-time business events for consumption by different groups across the enterprise.

The challenge is often twofold: How do I get streaming data out of business applications, and how do I translate the resulting streaming data into business insights and decisions? To create streaming events, many organizations turn to replication and change data capture (CDC) from their transactional databases. This approach has distinct advantages over manually instrumenting the applications with stream-publishing code: it is non-invasive, less labor-intensive, and less risky for the application owners, and it ensures that the data in the database and in the streams stays consistent in all failure scenarios.

Oracle GoldenGate is the market leader for transactional replication and provides extensive connectivity options to replicate data from various database vendors into other databases or into big data and streaming environments such as Apache Kafka. Oracle Data Integration Platform Cloud (DIPC) provides this functionality as a service in Oracle Cloud Infrastructure. For processing and analyzing streaming data and driving automated decisions, Oracle provides Oracle Stream Analytics (OSA), which combines a rich interactive user interface with a scalable runtime based on Apache Spark.

At Oracle OpenWorld 2018, we demonstrated this end-to-end use case of capturing and analyzing real-time data in the Hands-on Lab “Analyzing Oracle GoldenGate Streams with Oracle Data Integration Platform Cloud”. The use case is reacting to real-time marketing opportunities based on customer movements and buying history. The lab shows how to set this up in a straightforward user interface, without any programming or low-level configuration. It also uses the Oracle Big Data Cloud environment for Spark and Oracle Event Hub for Kafka streaming.

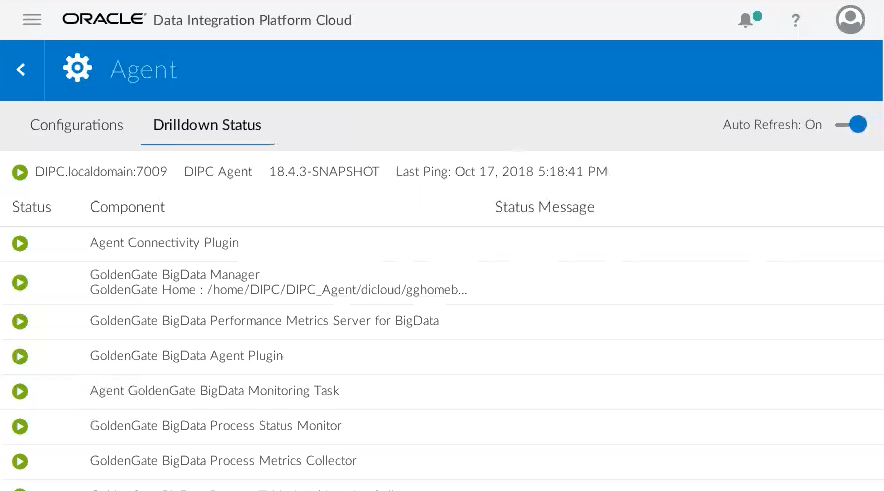

The first step is to open the DIPC Console and start a DIPC Agent, which manages the replication runtime. The agent can be deployed in the cloud or on-premises, so you can perform replication in any location.

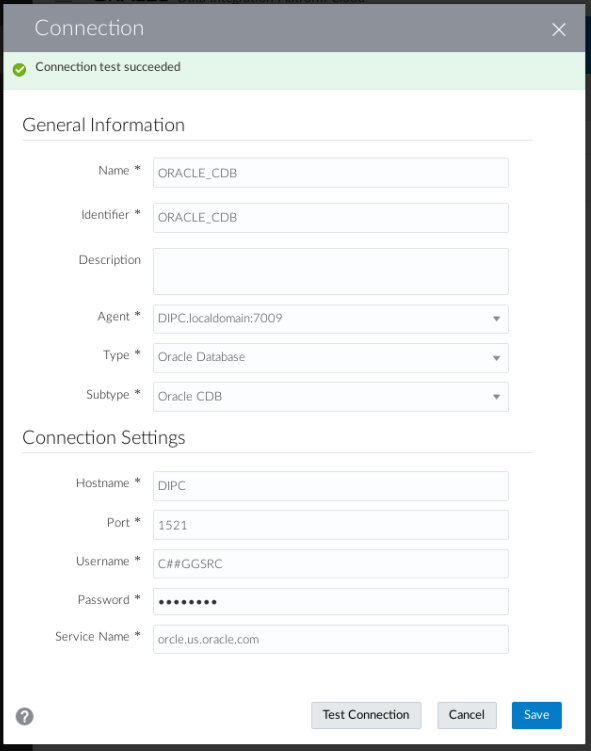

Next, the user configures connectivity with the source database and the target Kafka environment. DIPC automatically harvests metadata and profiling information from the source database to give the user detailed schema information.

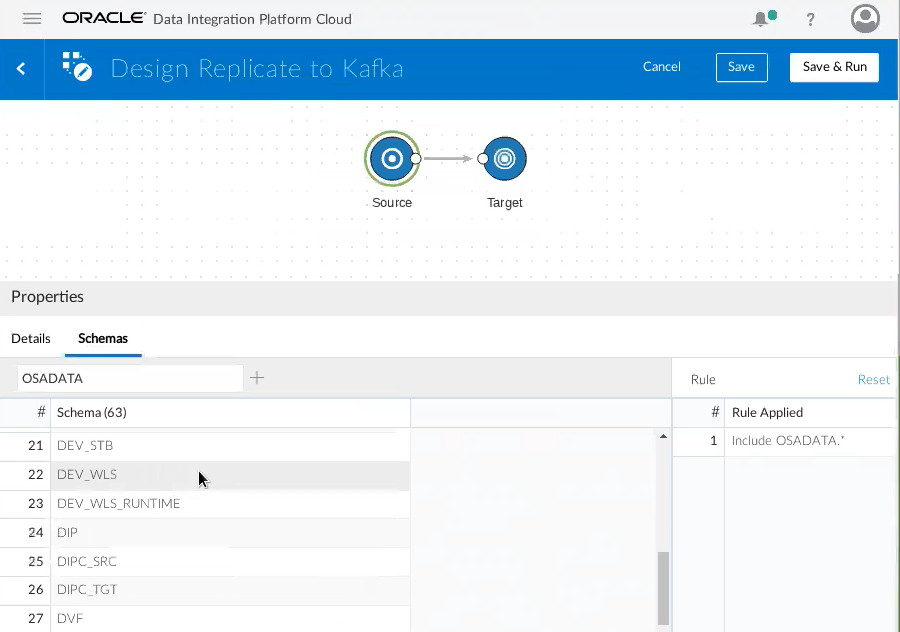

The user can now design the replication in a graphical designer, selecting which schemas and tables participate in the process and publish events to Kafka.

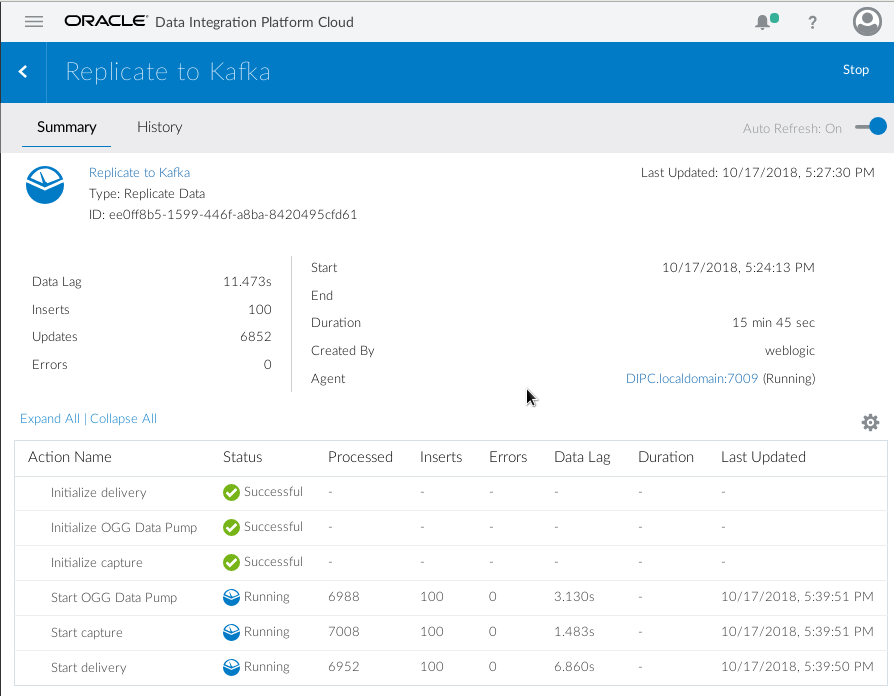

Replication progress can be monitored and controlled on the task monitoring page, where the user can review statistics on event counts, errors, data lag, and other critical factors.

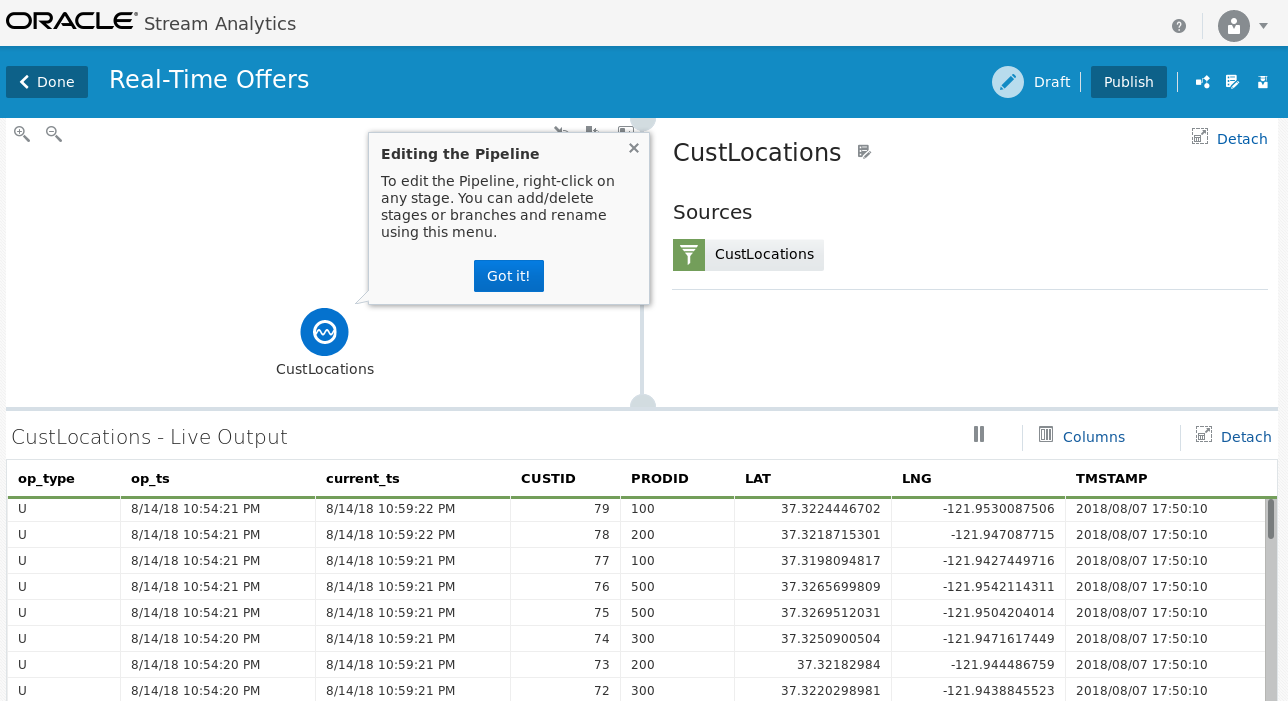

As the data is replicated into Kafka, the user can now turn to the Stream Analytics user interface to start analyzing the events. The user creates a pipeline based on the Kafka topic with the database changes. The pipeline shows data from incoming events, enabling the user to interactively process and analyze the data stream.
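Each message on that topic describes one row-level change in the source database. As a rough sketch, assuming the replication writes JSON records shaped like GoldenGate's JSON formatter output (fields such as `table`, `op_type`, `op_ts`, `before`, and `after`; the exact layout depends on how the Kafka handler is configured), a consumer could turn each message into a flat event as follows. The table and column names (`SHOP.CUSTOMER_LOCATION`, `CUSTOMER_ID`, `LAT`, `LON`) are hypothetical; in the lab, OSA does this parsing for you when the stream is defined.

```python
import json
from dataclasses import dataclass
from typing import Any, Dict, Optional


@dataclass
class ChangeEvent:
    table: str
    op_type: str         # assumed codes: "I" insert, "U" update, "D" delete
    op_ts: str           # commit timestamp from the source, kept as a string
    row: Dict[str, Any]  # column values after the change (before-image for deletes)


def parse_change_record(raw: bytes) -> Optional[ChangeEvent]:
    """Parse one JSON change record; return None if it carries no row image."""
    record = json.loads(raw)
    op = record.get("op_type")
    # Deletes have no after-image, so fall back to the before-image for them.
    row = record.get("before") if op == "D" else record.get("after")
    if row is None:
        return None
    return ChangeEvent(table=record["table"], op_type=op,
                       op_ts=record.get("op_ts", ""), row=row)


# Hypothetical message as it might arrive from the Kafka topic.
sample = (b'{"table": "SHOP.CUSTOMER_LOCATION", "op_type": "I", '
          b'"op_ts": "2018-10-22 18:30:55.000000", '
          b'"after": {"CUSTOMER_ID": 42, "LAT": 37.784, "LON": -122.401}}')
event = parse_change_record(sample)
print(event.table, event.op_type, event.row["CUSTOMER_ID"])
```

In a hand-built pipeline the `sample` bytes would come from a Kafka consumer loop; the parsing logic stays the same.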

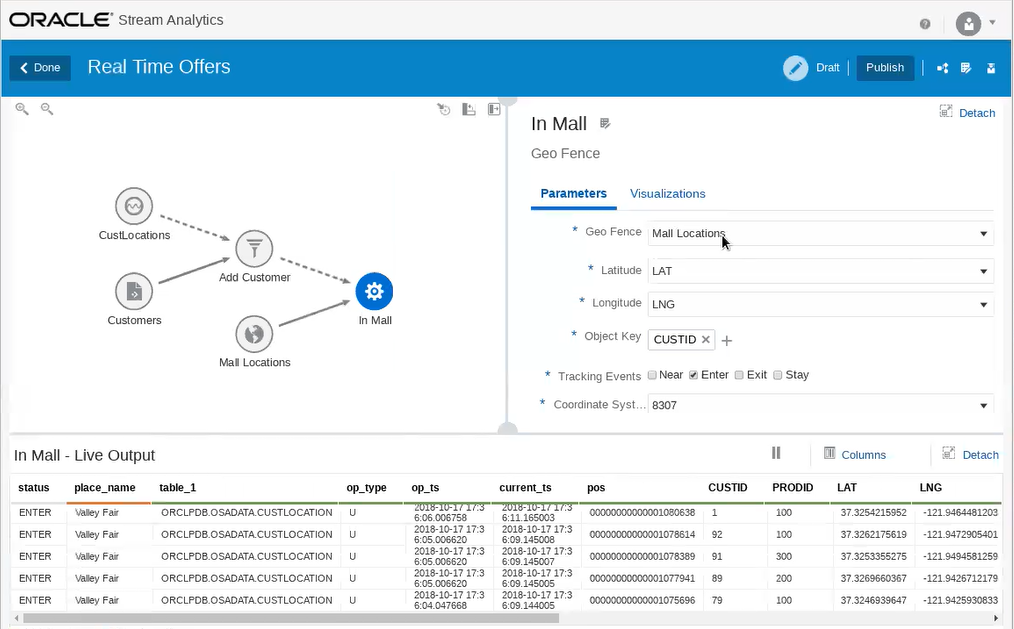

The user continues adding processing steps that progressively enrich and transform the events. The second step is to enrich event data with additional customer data from a database.
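Conceptually, this enrichment step is a lookup join: each incoming event is matched to a reference row by a key. The Python sketch below assumes a `CUSTOMER_ID` key and uses an in-memory dictionary as a stand-in for the customer table; the customer attributes are made up for illustration. Keeping unmatched events unchanged (left-join semantics) is a design choice of this sketch, not necessarily how the product behaves.

```python
from typing import Any, Dict, Iterable, Iterator

# Hypothetical reference data standing in for a customer table in a database.
CUSTOMERS: Dict[int, Dict[str, Any]] = {
    42: {"NAME": "Customer A", "SEGMENT": "GOLD", "LAST_PURCHASE": "shoes"},
    7: {"NAME": "Customer B", "SEGMENT": "SILVER", "LAST_PURCHASE": "books"},
}


def enrich(events: Iterable[Dict[str, Any]],
           customers: Dict[int, Dict[str, Any]]) -> Iterator[Dict[str, Any]]:
    """Left-join each event with customer attributes keyed by CUSTOMER_ID."""
    for event in events:
        profile = customers.get(event.get("CUSTOMER_ID"), {})
        # Event fields come last, so they win on name clashes and are never overwritten.
        yield {**profile, **event}


stream = [
    {"CUSTOMER_ID": 42, "LAT": 37.784, "LON": -122.401},
    {"CUSTOMER_ID": 99, "LAT": 37.770, "LON": -122.420},  # no matching customer
]
enriched = list(enrich(stream, CUSTOMERS))
for e in enriched:
    print(e)
```

Using a generator keeps the step streaming-friendly: each event is enriched as it arrives rather than after the whole batch is collected.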

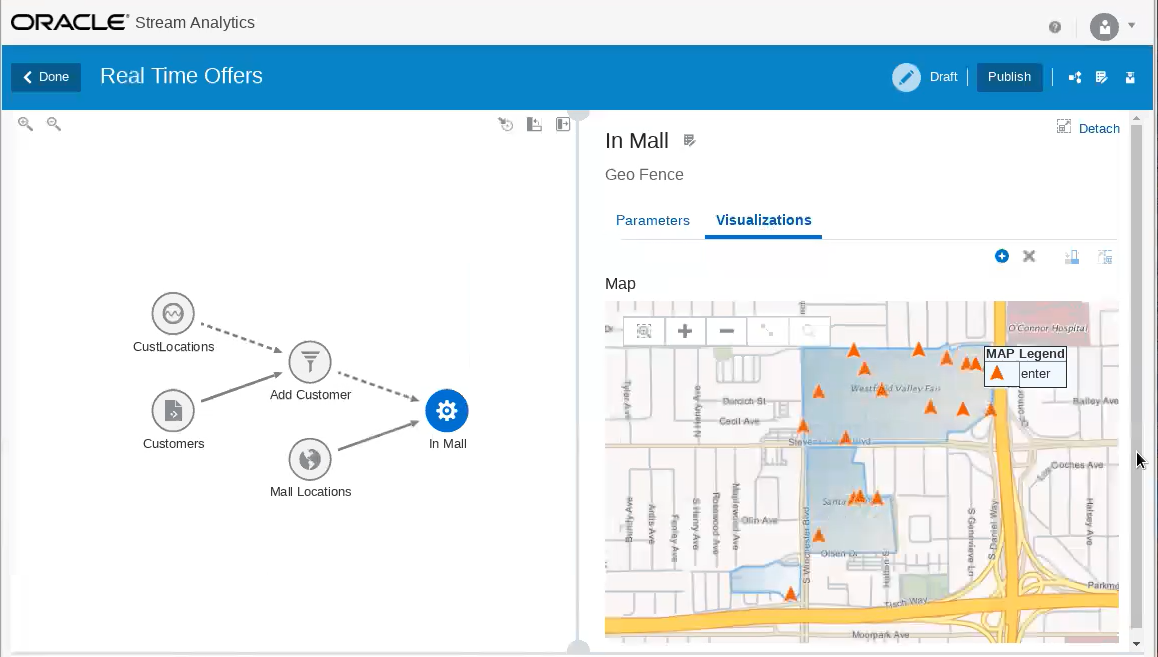

In the third step, we add a pattern that filters events based on a geo-fence. The geo-fence is a predefined area, in this case around retail locations, that triggers an offer to the customer. The user can see the location of individual customers relative to the geo-fence in the user interface:
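A geo-fence filter boils down to a point-in-polygon test on each event's coordinates. The sketch below uses the classic ray-casting algorithm and treats longitude/latitude as planar coordinates, which is a reasonable approximation for store-sized areas; it is an illustration, not a description of how OSA implements its geo-fence pattern. The store polygon and the `LAT`/`LON` field names are hypothetical.

```python
from typing import Any, Dict, Iterable, List, Tuple

Point = Tuple[float, float]  # (longitude, latitude)

# Hypothetical rectangular geo-fence around a single retail location.
STORE_FENCE: List[Point] = [
    (-122.402, 37.783), (-122.400, 37.783),
    (-122.400, 37.785), (-122.402, 37.785),
]


def inside_geofence(point: Point, polygon: List[Point]) -> bool:
    """Ray casting: cast a ray east from the point and count edge crossings;
    an odd number of crossings means the point lies inside the polygon."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point can be crossed.
        if (y1 > y) != (y2 > y):
            # x coordinate where this edge meets that horizontal line (y2 != y1 here).
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def filter_geofence(events: Iterable[Dict[str, Any]],
                    polygon: List[Point]) -> List[Dict[str, Any]]:
    """Keep only events whose (LON, LAT) position falls inside the geo-fence."""
    return [e for e in events if inside_geofence((e["LON"], e["LAT"]), polygon)]


nearby = filter_geofence(
    [{"CUSTOMER_ID": 42, "LAT": 37.784, "LON": -122.401},
     {"CUSTOMER_ID": 99, "LAT": 37.770, "LON": -122.420}],
    STORE_FENCE,
)
print(nearby)
```

For fences spanning large distances, a geodesic library or the product's built-in spatial functions would be more appropriate than this planar test.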

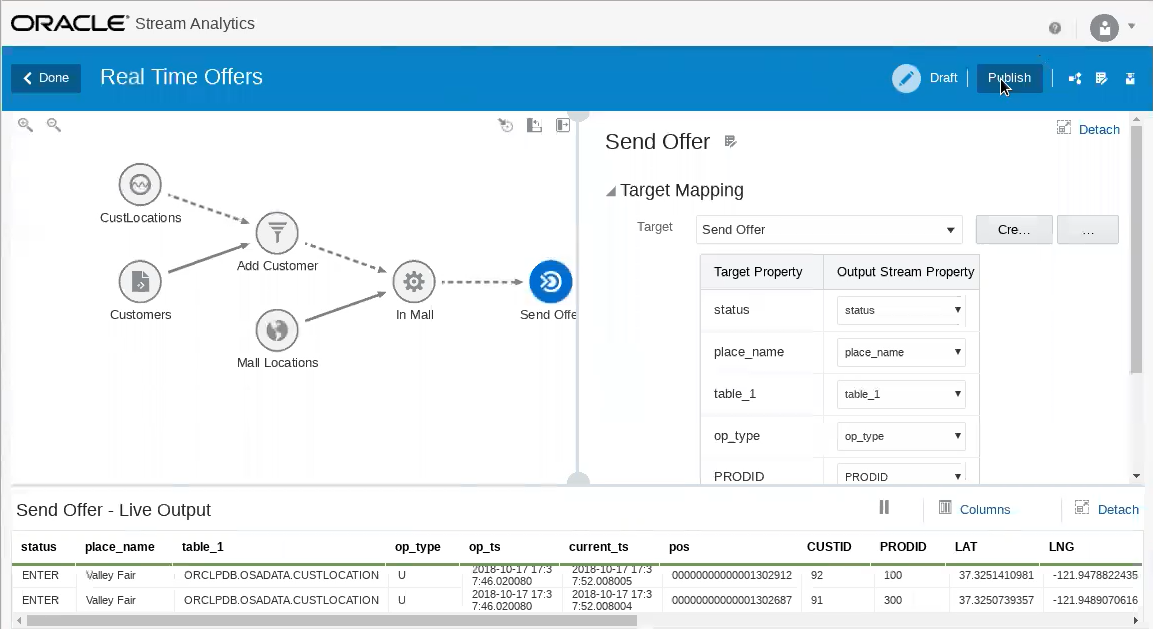

After filtering, the user could add further processing, such as applying a machine learning model through the product's Scoring step. Finally, the processed events for customers who should receive an offer are stored in a target stream, which initiates communication with the customer.
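The last two stages amount to scoring each event and forwarding those that clear a threshold. In the sketch below, a hand-written logistic function with made-up weights and feature names stands in for a trained model, and a Python list stands in for the target stream; none of these names come from the product, and a real pipeline would apply an actual trained model in the Scoring step.

```python
import math
from typing import Any, Dict, Iterable, List

# Hypothetical model parameters; a real pipeline would use a trained model.
WEIGHTS = {"is_gold": 1.5, "recent_shoe_buyer": 1.0}
BIAS = -1.0
THRESHOLD = 0.5


def score(event: Dict[str, Any]) -> float:
    """Logistic score in (0, 1): a made-up estimate of offer acceptance."""
    features = {
        "is_gold": 1.0 if event.get("SEGMENT") == "GOLD" else 0.0,
        "recent_shoe_buyer": 1.0 if event.get("LAST_PURCHASE") == "shoes" else 0.0,
    }
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


def route_offers(events: Iterable[Dict[str, Any]],
                 target: List[Dict[str, Any]]) -> None:
    """Append events scoring at or above THRESHOLD to the target 'stream'."""
    for event in events:
        s = score(event)
        if s >= THRESHOLD:
            target.append({**event, "OFFER_SCORE": round(s, 3)})


target_stream: List[Dict[str, Any]] = []
route_offers(
    [{"CUSTOMER_ID": 42, "SEGMENT": "GOLD", "LAST_PURCHASE": "shoes"},
     {"CUSTOMER_ID": 7, "SEGMENT": "SILVER", "LAST_PURCHASE": "books"}],
    target_stream,
)
print(target_stream)
```

Attaching the score to the outgoing event lets a downstream consumer, such as a messaging service, prioritize which offers to send first.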

In summary, the hands-on lab showed that in less than 60 minutes you can go from changes in a database to a complex real-time analytics solution that helps your business react instantly to risks and opportunities.

For more information, please visit Oracle Stream Analytics, Oracle Data Integration Platform Cloud, and Oracle GoldenGate.