Introduction

Retrieval Augmented Generation (RAG) is a central technique in today’s generative AI landscape. RAG optimizes the output of an LLM with targeted, domain-specific information without modifying the underlying model. That information can be more up to date than the data used to train the LLM, and it can be specific to a particular organization and industry. As a result, a generative AI system can give more contextually appropriate answers to prompts and base those answers on current data.

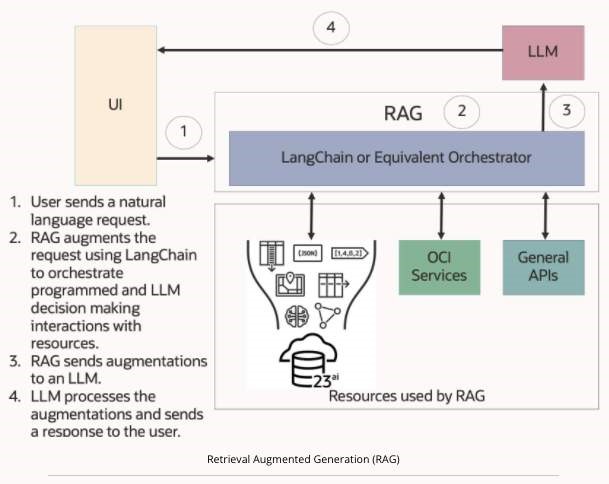

RAG can be implemented using middleware between a client UI, a set of resources, and a final LLM. Here is how it can work in a generative AI application.

Generative AI RAG applications can provide more contextually appropriate answers to prompts, based on the customer’s current data. This helps reduce LLM hallucinations and makes it possible to explain the answers given. For example, if a customer asks for information on a product, RAG can use AI Vector Search to gather information from unstructured documents and logs, combine it with structured product information, and send the result to an LLM to be summarized for the customer.

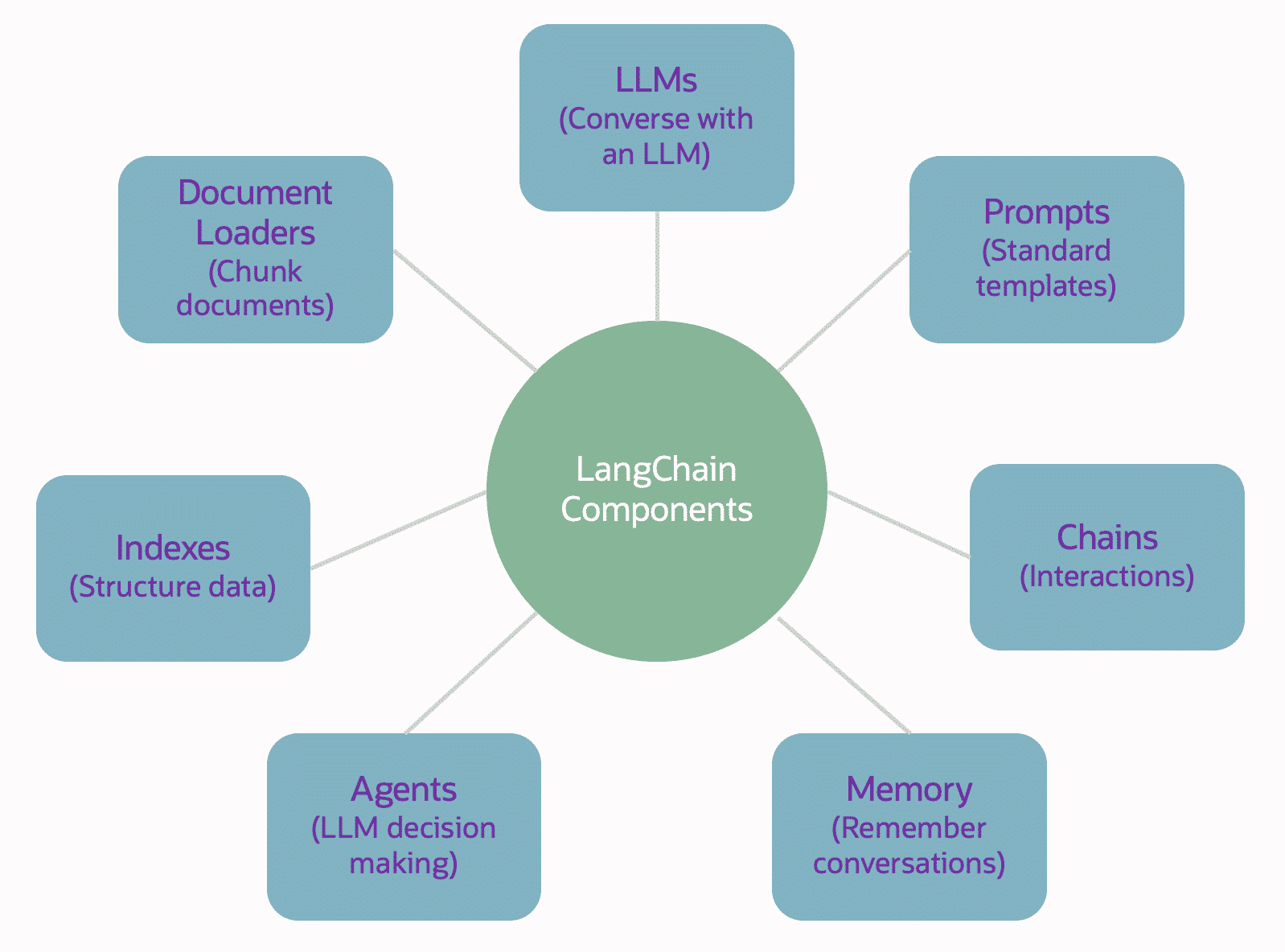
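The retrieve, augment, and generate flow described above can be sketched in plain JavaScript. This is a minimal illustration under stated assumptions, not a production design: `retrieveContext`, `buildPrompt`, `answerWithRag`, and the in-memory `documents` array are hypothetical stand-ins for the vector search, structured lookup, and LLM call that a real application would delegate to a database and a model provider.

```javascript
// Hypothetical in-memory resource standing in for documents a database would hold.
const documents = [
  { id: 1, text: "The X100 blender has a 2-year warranty." },
  { id: 2, text: "The X100 blender ships with three speed settings." },
  { id: 3, text: "Our stores are closed on public holidays." },
];

// Retrieve: naive keyword overlap, used here instead of real vector search.
function retrieveContext(question, docs, limit = 2) {
  const words = question.toLowerCase().split(/\W+/).filter((w) => w.length > 3);
  return docs
    .map((doc) => ({
      doc,
      score: words.filter((w) => doc.text.toLowerCase().includes(w)).length,
    }))
    .filter((entry) => entry.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, limit)
    .map((entry) => entry.doc);
}

// Augment: place the retrieved text in front of the user's question.
function buildPrompt(question, contextDocs) {
  const context = contextDocs.map((d) => `- ${d.text}`).join("\n");
  return `Answer using only the context below.\n\nContext:\n${context}\n\nQuestion: ${question}`;
}

// Generate: `llm` is any async function that maps a prompt string to an answer string.
async function answerWithRag(question, llm) {
  const contextDocs = retrieveContext(question, documents);
  const prompt = buildPrompt(question, contextDocs);
  const answer = await llm(prompt);
  // Returning the source ids is one simple way to make an answer explainable.
  return { answer, sources: contextDocs.map((d) => d.id) };
}

// Usage with a stub LLM so the sketch runs without network access.
answerWithRag(
  "What warranty does the X100 blender have?",
  async (prompt) => `(stub answer for a prompt of ${prompt.length} characters)`
).then((result) => console.log(result));
```

Passing the LLM in as a function keeps the middleware independent of any particular model provider, which is the role an orchestrator plays in a larger application.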

Developers using RAG techniques typically rely on a framework, also known as an orchestrator, to manage the interactions in the application. LangChain is one such framework. It is an open-source Python and JavaScript library that provides a flexible and modular application framework for prompt engineering by orchestrating:

- Chains of interactions between resources like LLMs, APIs, and databases

- Memory retention between interactions

- Resource loaders and formatters for better interactions

- Intelligent AI agents that can make decisions as to which interaction to perform next

LangChain comes with a standard set of components, as shown in the figure below.

The LangChain library can be used in JavaScript React/Next.js applications. The combination of React and Next.js is one of the most popular platforms for building generative AI applications and is used by tens of millions of developers.

Here are elementary examples of some of the LangChain components.

- LLM

// Converse with OpenAI.

import { OpenAI } from "langchain/llms";

// temperature controls how random the output is: values near 0 are close to deterministic, higher values are more creative

const model = new OpenAI({ temperature: 0.1 });

const res = await model.call("What is the genre of the movie Birdcage?");

console.log({ res }); // example output: { res: "Comedy" }

- Prompt

// Create a prompt using a template.

import { PromptTemplate } from "langchain/prompts";

const template = "What is the genre of the movie {movie}?";

const prompt = new PromptTemplate({ inputVariables: ["movie"], template });

const res = await prompt.format({ movie: "Birdcage" }); // format() returns a Promise

console.log(res); // output: "What is the genre of the movie Birdcage?"

- Chain

// Send a prompt to OpenAI and wait for a response.

import { OpenAI } from "langchain/llms";

import { LLMChain } from "langchain/chains";

import { PromptTemplate } from "langchain/prompts";

const model = new OpenAI({ temperature: 0.1 });

const template = "What is the genre of the movie {movie}?";

const prompt = new PromptTemplate({ template, inputVariables: ["movie"] });

const chain = new LLMChain({ llm: model, prompt });

const res = await chain.call({ movie: "Birdcage" });

console.log({ res }); // example output: { res: { text: "Comedy" } }

- Memory

// Keep the conversation history between calls so a follow-up question has context.

import { OpenAI } from "langchain/llms";

import { ConversationChain } from "langchain/chains";

import { BufferMemory } from "langchain/memory";

const model = new OpenAI({ temperature: 0.1 });

const chain = new ConversationChain({ llm: model, memory: new BufferMemory() });

await chain.call({ input: "My favorite movie is Birdcage." });

const res = await chain.call({ input: "What is the genre of my favorite movie?" });

console.log({ res }); // the answer is returned under res.response

- Agent

// Agents are LLM-based assistants that take actions based on tools and their descriptions.

import { OpenAI } from "langchain";

import { initializeAgentExecutor } from "langchain/agents";

import { SerpAPI, Calculator } from "langchain/tools";

const model = new OpenAI({ temperature: 0 });

const tools = [new SerpAPI(), new Calculator()]; // SerpAPI searches Google

// "zero-shot-react-description" decides which tool to use based on each tool's description

const executor = await initializeAgentExecutor(tools, model, "zero-shot-react-description");

const input = `What is 2 times the number of days in the week?`;

const result = await executor.call({ input });

console.log(`Got output ${result.output}`); // example output: "Got output 14"

RAG for Graphs

Oracle has two standards-based graph technologies for developers to use in RAG applications: Property Graphs, which add meaning from complex relationships, and RDF Knowledge Graphs, which add meaning from class and subclass structures in the data. For more information, see GenAI RAG Likes Explicit Relationships: Use Graphs!

The ISO standards organization considers graph technology so important that it enhanced the SQL standard to include capabilities for Property Graph Queries (SQL/PGQ). Oracle is the first vendor to implement this standard, and it is available in Oracle Database 23ai.

The other graph standard is the W3C Resource Description Framework (RDF), which underlies RDF Knowledge Graphs. Oracle Database 23ai has a complete implementation of the RDF standard.

Developers can take full advantage of the LangChain features in JavaScript React/Next.js RAG applications that use Oracle Database 23ai. To do this, they install the node-oracledb driver in their Next.js project, use it to connect to the database, and use the results of PGQ or RDF queries inside their RAG applications.
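The connection examples in this article read their settings from `process.env.dbConfig`. A minimal sketch of that step follows, assuming the variable holds a JSON string with the `user`, `password`, and `connectString` attributes that node-oracledb's `getConnection` accepts. The `loadDbConfig` helper and all sample values are hypothetical.

```javascript
// Hypothetical helper: parse and validate the JSON stored in the dbConfig environment variable.
function loadDbConfig(env = process.env) {
  if (!env.dbConfig) {
    throw new Error("dbConfig environment variable is not set");
  }
  const config = JSON.parse(env.dbConfig);
  for (const key of ["user", "password", "connectString"]) {
    if (typeof config[key] !== "string" || config[key].length === 0) {
      throw new Error(`dbConfig is missing "${key}"`);
    }
  }
  return config;
}

// Example with made-up values; a real deployment would set dbConfig outside the code.
const sampleEnv = {
  dbConfig: JSON.stringify({
    user: "movie_app",
    password: "example-password",
    connectString: "localhost:1521/FREEPDB1",
  }),
};

const dbConfig = loadDbConfig(sampleEnv);
console.log(`Would connect as ${dbConfig.user} to ${dbConfig.connectString}`);
// With the driver installed (npm install oracledb), the connection itself would be:
// const connection = await oracledb.getConnection(dbConfig);
```

Failing early on a missing attribute tends to give a clearer error than letting the driver reject an incomplete configuration. In a Next.js project this code would normally run only on the server side, since the browser has no access to the database driver.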

Here are examples of a PGQ query and an RDF query. Both are taken from GenAI RAG Likes Explicit Relationships: Use Graphs!

Property Graph RAG

The following query returns movies and their genres from the database.

import oracledb from "oracledb";

const connection = await oracledb.getConnection(JSON.parse(process.env.dbConfig));

// SQL/PGQ query; the statement must not end with a semicolon when run through node-oracledb

const sql = `select 'Movie' as label, t.*

from graph_table (explicit_relationship_pg

match

(m is movie) -[c is has_genre]-> (g is genre)

columns (m.title as title, g.type as type)

) t

order by 1`;

const result = await connection.execute(sql);

This example could be expanded by adding movie release dates to the database. Queries such as “What are the movies, with their genres, that were released in 2024?” could then be run. The results could be used to augment the information passed to the final LLM, because it probably was not trained on 2024 movie releases. This could be done by issuing a query like the one above, looping through the results, augmenting a prompt, and then calling something like:

const res = await chain.call({ movie: "Birdcage" });
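The loop-and-augment step described above might look like the following sketch. It assumes the query ran with node-oracledb's default row format, where `result.rows` is an array of arrays and `result.metaData` lists the column names. The helper names `rowsToObjects` and `buildAugmentedPrompt` and the sample rows are hypothetical and only illustrate the idea.

```javascript
// Convert node-oracledb's default array rows into objects keyed by column name.
function rowsToObjects(result) {
  const names = result.metaData.map((column) => column.name);
  return result.rows.map((row) =>
    Object.fromEntries(row.map((value, i) => [names[i], value]))
  );
}

// Turn the movie and genre rows into context lines and place them before the question.
function buildAugmentedPrompt(question, movies) {
  const facts = movies.map((m) => `${m.TITLE} is a ${m.TYPE} movie.`);
  return `Use these facts from the database:\n${facts.join("\n")}\n\nQuestion: ${question}`;
}

// Made-up stand-in for the object returned by connection.execute(sql).
const sampleResult = {
  metaData: [{ name: "LABEL" }, { name: "TITLE" }, { name: "TYPE" }],
  rows: [
    ["Movie", "Birdcage", "Comedy"],
    ["Movie", "Alien", "Horror"],
  ],
};

const movies = rowsToObjects(sampleResult);
const augmentedPrompt = buildAugmentedPrompt("Which of these movies are comedies?", movies);
console.log(augmentedPrompt);
```

The resulting string could then be passed to the LLM, for example through a chain whose template has a single input variable for the augmented text.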

RDF Graph RAG

The following query returns movies from the database together with their classes and titles.

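const connection = await oracledb.getConnection(JSON.parse(process.env.dbConfig));

// SPARQL query text. To run through a SQL connection, Oracle typically expects SPARQL to be wrapped in the SEM_MATCH table function.

const sql = `SELECT ?movie ?class ?title

WHERE

{ ?movie rdf:type/rdfs:subClassOf* :Movie .

?movie rdf:type ?class .

?movie :title ?title .

}`;

const result = await connection.execute(sql);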
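When SPARQL is sent through a SQL connection, Oracle's RDF support generally expects it to be wrapped in the `SEM_MATCH` table function. The sketch below builds such a statement as a string. The `buildSemMatchSql` helper, the model name `MOVIES`, the network owner and name, and the `http://example.com/movies/` namespace are all hypothetical placeholders, and the positional argument list should be checked against the `SEM_MATCH` documentation for the database release in use.

```javascript
// Build a SQL statement that wraps a SPARQL query in SEM_MATCH.
// Argument order assumed here: query, models, rulebases, aliases, filter,
// index_status, options, graphs, named_graphs, network_owner, network_name.
function buildSemMatchSql({ sparql, model, networkOwner, networkName, columns }) {
  // Double any single quotes so the text is safe inside a SQL string literal.
  const quote = (text) => `'${text.replace(/'/g, "''")}'`;
  return [
    `SELECT ${columns.join(", ")}`,
    `FROM TABLE(SEM_MATCH(`,
    `  ${quote(sparql)},`,
    `  SEM_Models(${quote(model)}),`,
    `  null, null, null, null, ' ', null, null,`,
    `  ${quote(networkOwner)}, ${quote(networkName)}))`,
  ].join("\n");
}

// The default prefix is declared explicitly; its namespace is a made-up example.
// The rdf: and rdfs: prefixes are assumed to be predefined by the database.
const sparql = `PREFIX : <http://example.com/movies/>
SELECT ?movie ?class ?title
WHERE {
  ?movie rdf:type/rdfs:subClassOf* :Movie .
  ?movie rdf:type ?class .
  ?movie :title ?title .
}`;

const semMatchSql = buildSemMatchSql({
  sparql,
  model: "MOVIES",            // hypothetical RDF model name
  networkOwner: "MOVIE_APP",  // hypothetical schema that owns the RDF network
  networkName: "MOVIE_NET",   // hypothetical RDF network name
  columns: ["movie", "class", "title"],
});
console.log(semMatchSql);
```

The generated statement could be passed to `connection.execute` in place of the raw SPARQL text. The column names in the `SELECT` list correspond to the SPARQL variables without the leading question mark.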

Similar operations can be done in Python using the Python LangChain library. As a matter of fact, Oracle supports Python LangChain directly.