Is your database located behind a firewall with only one open port? Is direct network routing between clients and your databases not desired or not possible (e.g. IPv4 vs. IPv6)? Or would you, as a database operator, like to define rules as to which networks are allowed to access which database? These are common scenarios for Oracle Connection Manager (CMAN), which is included in every Oracle Database Enterprise Edition license. Operating CMAN involves a few considerations that become particularly noticeable when you run it under Kubernetes.

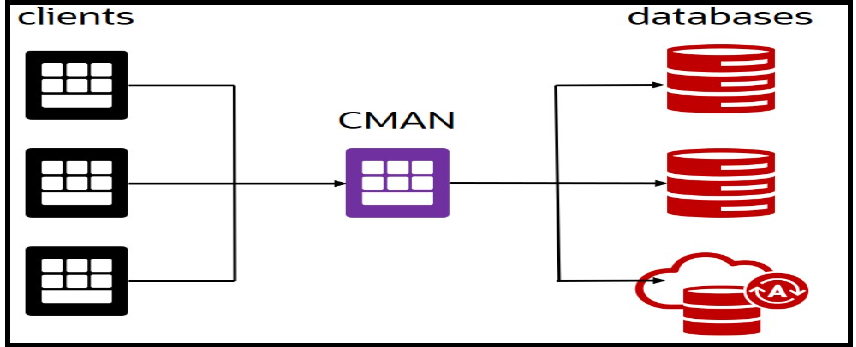

The Oracle Connection Manager acts as an intelligent network proxy and is placed between Oracle databases and their clients. It can do much more for database environments than the classic proxies haproxy and nginx, which can only serve the TCP/IP socket layer: they do not know the Oracle Net protocol (formerly also known as SQL*Net or Net8) above it and cannot respond to it. CMAN, by contrast, understands Oracle Net:

- CMAN prevents clients from having to reconnect to another listener or another port. This process is often part of a seemingly simple database connect and is often problematic behind firewalls or other isolated networks.

- CMAN can provide SSL termination: it offers encrypted network access, while the addressed databases speak via unencrypted channels and are located in a separate, isolated network, for example.

- CMAN can reduce the number of connections to a database with its own connection pools.

- CMAN can be actively involved in a connection failover. A client usually retains its database session despite a database switch due to downtime.

- Moving a database or PDB to another server can be done without adapting the client configuration.

- Databases can register with CMAN in the same way as with a REMOTE_LISTENER. The manual configuration effort is reduced.

- Policies can define which clients have access to certain databases. This includes IP address ranges as well as Oracle Net services (database services, extproc agents, gateways,…).

- From version 23, CMAN has a REST API for remote configuration and administration. This can be activated on request; it is deactivated by default.

More detailed explanations and features such as the “Traffic Director Mode” are listed in the CMAN documentation.

What are the spontaneous arguments in favor of using CMAN under Kubernetes?

- CMAN is available as a container image in version 23.5 on container-registry.oracle.com. No image has to be created manually from an installation script.

- However, there is an official container build script on github.com/oracle for your own images, e.g. due to hotfixes.

- CMAN is easy to configure and does not actually require any persistent volumes. A configuration file “cman.ora” and any SSL certificates are sufficient. They are particularly easy to attach to the container under Kubernetes. The simplified commissioning per container is also documented on container-registry.oracle.com.

- CMAN is one of several “database satellites” such as ORDS, Observability Exporter and GoldenGate and could be operated in the same environment.

- The clusterware contained in Kubernetes restarts terminated containers quickly, and there is automatic load balancing and better availability with container replicas, although session affinity is mandatory.

So let’s move on to the difficulties.

What makes it difficult (mentally) to use CMAN under Kubernetes?

- According to the pure microservices doctrine, containers should be “stateless” so that they can be started and stopped in large numbers and at any time. As entire virtual machines and databases can now also be operated under Kubernetes, this principle has been softened. Having said this, I must now announce: CMAN is stateful. It manages and holds database sessions. If the CMAN process dies and with it the container, the database sessions are also lost. These can be rebuilt by a surviving CMAN container behind a preceding load balancer, but this can lead to error messages in clients. It must therefore be prevented (not only under Kubernetes!) that CMAN containers spontaneously move to other nodes or that the containers are restarted after configuration changes. However, this is easily achievable with PriorityClass, preemptionPolicy and a remote-controlled live refresh of the configuration without restarting the container.

- CMAN uses the Oracle Net protocol, it does not speak HTTP or HTTPS. This means that Kubernetes Ingresses and pure HTTP gateways cannot be used, but it is still possible:

If the CMAN service created is of type ClusterIP, it must be proxied directly at socket level by the gateway set up (usually based on the old familiar nginx), i.e. without ingress. The gateway itself is usually of the LoadBalancer type, and the following sentence also applies to this:

If the created CMAN service (or the gateway) is of type LoadBalancer, the external load balancer preceding the cluster must function at socket level. In the Oracle Cloud, this would mean using a network load balancer service and not a regular load balancer service. This can be controlled via Kubernetes annotations, for example.

Session affinity must be set in the load balancer, e.g. based on the client address, if several CMAN containers are started.

If the CMAN service created is of the NodePort type, nothing else needs to be considered except to accept the usual disadvantages of the NodePort service. These are manual load balancing and the relatively small number of free ports in the cluster by definition.

- Kubernetes often uses its own internal overlay network (flannel). The resulting additional routings, tunnels and proxies in the previous paragraph increase latency. Database connections and operations simply take a little longer. It would be advisable to configure Kubernetes with more up-to-date network connections such as the VCN native networking in the Oracle Cloud or Calico networking, where each container receives its own external IP address, and to reduce the number of proxies and load balancers somewhat. Of course, this also applies in a configuration without Kubernetes!

- The CMAN REST service (admittedly – HTTPS protocol, albeit in a subordinate position) for remote administration does not (yet) work in the container. It is not activated when CMAN recognizes a container environment at startup. In order to avoid having to restart the container in the event of infrequent configuration changes, the CMAN process can be notified to reload its configuration during operation. This is done either via the REST interface or, as listed in the documentation and also on container-registry.oracle.com, via a shell connected to the container and the command “cmctl reload”
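To make the PriorityClass idea from the first point a little more tangible, here is a minimal sketch. The name `cman-critical` and the value `1000000` are my own illustrative assumptions, and I also assume that your CMAN pod spec can reference the class via `priorityClassName`; whether a given helm chart exposes such a parameter is a separate question. The function only prints the manifest, so nothing changes in the cluster until you pipe it to kubectl yourself.

```shell
# Prints a PriorityClass manifest. Name and value are illustrative assumptions.
cman_priority_class() {
  cat <<'EOF'
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: cman-critical          # hypothetical name
value: 1000000                 # higher value = less likely to be preempted
globalDefault: false
preemptionPolicy: PreemptLowerPriority
description: "CMAN pods hold database sessions and should not be preempted."
EOF
}

# Review the output first, then apply it, for example:
#   cman_priority_class | kubectl apply -f -
cman_priority_class
```

A high priority reduces the chance that the scheduler evicts the CMAN pod in favor of other workloads; it does not protect against node maintenance or a crash of the CMAN process itself.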

Would you still like to try?

To make testing and trials easier for you and to speed up deployment, I will spare you lengthy explanations about deployments with containers, volumes, secrets, associated services and non-existent because unnecessary ingresses. I have created a relatively convenient helm chart for you, which you can easily install with the Kubernetes package manager helm. The source code for the helm chart, consisting of Kubernetes YAMLs as templates with some variables in them, is also available for you.

To install the helm chart, add a new chart repository using the helm command. The helm tool should ideally be available where you can already access your Kubernetes cluster using the kubectl command. The repository to be integrated contains other charts such as cloudbeaver, sqlcl or ords, which still need to be updated due to their age. Therefore, please disregard them or use them only for reference.

$ helm repo add myorarepo https://ilfur.github.io/VirtualAnalyticRooms/

$ helm repo list

NAME URL

artifact-hub https://artifacthub.github.io/helm-charts/

bitnami https://charts.bitnami.com/bitnami

argo https://argoproj.github.io/argo-helm

myorarepo https://ilfur.github.io/VirtualAnalyticRooms/

$ helm show chart myorarepo/cman

apiVersion: v2

appVersion: 23.5.0.0

description: Oracle Connection Manager

icon: https://ilfur.github.io/VirtualAnalyticRooms/cman.png

name: cman

type: application

version: 1.0.4

Then create a new Kubernetes namespace, for example cman.

$ kubectl create namespace cman

namespace/cman created

Retrieve the parameter file values.yaml from the chart, save it and then adapt the parameters suggested in it to your circumstances.

$ helm show values myorarepo/cman > values.yaml

$ vi values.yaml

The following are excerpts from the values.yaml file with a few comments and one or two todos. The first section is about loading the CMAN container into your Kubernetes cluster:

## Please provide a docker registry type secret and enter its name

## if needed, download the container image to Your location and specify that location here

image:

  repository: container-registry.oracle.com/database/cman:23.5.0.0

  pullPolicy: IfNotPresent

  pullSecretName: oraregistry-secret

To download the container, your Kubernetes cluster needs Internet access to access container-registry.oracle.com. The download of the container image is password-protected. You should first log in to container-registry.oracle.com with your Oracle technet account and confirm the license agreement on the cman information page.

You will then be able to download the container repeatedly for some time. The Kubernetes cluster also requires your Oracle technet user name and its password for the download in a Kubernetes secret, specified here with the name oraregistry-secret. Before you install the helm chart, please first create a corresponding secret in the namespace cman, for example:

$ kubectl create secret docker-registry oraregistry-secret -n cman \
    --docker-username=marcel.pfeifer@oracle.com \
    --docker-password=myComplexPwd123 \
    --docker-server=container-registry.oracle.com

secret/oraregistry-secret created

The next section deals with the network connection of the CMAN container. As already mentioned, no ingresses are defined as the service does not communicate via the HTTP or HTTPS protocol. You will get the fastest result if you set the type of the service to NodePort. The service is then reachable from outside the cluster via the IP address of a cluster node and the assigned node port, and your Oracle Net clients can use this address and port in their connect string.

## For external access, if You have a LoadBalancer implementation installed, use the LoadBalancer type.

## Optionally, specify ClusterIP as type and configure Your Gateway to forward TCP traffic to the ClusterIP service.

## CMAN traffic is non-http, so please do not use an Ingress.

service:

  type: LoadBalancer

  port: 1521

If the type is set to LoadBalancer, as is the case here, the IP address of the load balancer must be used. In addition, the external load balancer used must NOT operate HTTP load balancing, but must remain at socket level.

In the Oracle Cloud, you can achieve this by adding a corresponding annotation to the service in the metadata section:

metadata:

  annotations:

    oci.oraclecloud.com/load-balancer-type: nlb
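For orientation, here is a minimal sketch of where this annotation sits in a complete Service manifest, combined with the session affinity mentioned earlier. The selector label `app: cman` and the port name are assumptions of mine; the service name and namespace follow the examples in this text. `sessionAffinity: ClientIP` is the Kubernetes-level setting; whether your external load balancer needs its own affinity setting in addition depends on the implementation.

```shell
# Prints a Service manifest; the selector label and port name are assumptions.
cman_service() {
  cat <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: my-cman-svc
  namespace: cman
  annotations:
    oci.oraclecloud.com/load-balancer-type: "nlb"
spec:
  type: LoadBalancer
  sessionAffinity: ClientIP      # keep a client on the same CMAN pod
  selector:
    app: cman                    # hypothetical label
  ports:
    - name: oranet
      protocol: TCP
      port: 1521
      targetPort: 1521
EOF
}

# Inspect the manifest; it is only printed here, not applied.
cman_service
```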

If you have entered the LoadBalancer type, the helm chart automatically adds this annotation for you. If you are not in the Oracle Cloud, the annotation is harmless and has no effect.

Let’s now look at the entries for additional persistence of the log files and configuration, which are set to “false” by default:

## if the toPVC param is set to false, no PVC is created and config and logs are gone on container restart.

## ideally, use an NFS type storage class for external shared access and manipulation.

storage:

  log:

    toPVC: false

    storageClass: rook-ceph-block

    storage: 50Gi

  config:

    toPVC: false

    storage: 1Gi

    storageClass: rook-ceph-block
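If you only want to switch on persistence for the logs, one option is a small override file instead of editing the complete values.yaml. The following sketch assumes the key names shown above; the default storage class `oci-bv` and the size `10Gi` are merely example values, and the file name `values-logs.yaml` is hypothetical.

```shell
# Prints a values override that enables a PVC for the CMAN logs.
# $1 = storage class (default: oci-bv), $2 = volume size (default: 10Gi)
cman_log_values() {
  cat <<EOF
storage:
  log:
    toPVC: true
    storageClass: ${1:-oci-bv}
    storage: ${2:-10Gi}
EOF
}

# Example: write the override and pass it as a second values file to helm.
#   cman_log_values rook-ceph-block 50Gi > values-logs.yaml
#   helm install my-cman myorarepo/cman -f values.yaml -f values-logs.yaml -n cman
cman_log_values
```

Helm merges several values files from left to right, so the override only replaces the keys it names.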

Should log information and the configuration be stored persistently? The container log already contains the standard output of the CMAN and shows the start, configuration and accesses very nicely. You could store additional trace files in persistent volumes, ideally on a shared file system such as NFS. Please specify an existing storageClass for this, e.g. oci-bv for block volumes in the Oracle Cloud or rook-ceph-block – if available. You can then also access the log information from outside. The same applies to the CMAN configuration: the configuration is normally “shot in” via a so-called ConfigMap each time the CMAN is started. If a persistent configuration already exists in a volume, this is used. The ConfigMap is filled via the following section in the values.yaml file, which contains a large part of a cman.ora file:

## the initial configuration is copied to a ConfigMap.

## The NAME of the cman configuration is auto-generated, please do not specify that here.

initialConfig: |

  (configuration=

    (address=(protocol=tcp)(host=0.0.0.0)(port=1521))

    (parameter_list =

      (registration_invited_nodes=*)

      (aso_authentication_filter=off)

      (connection_statistics=yes)

      (remote_admin=on)

      (max_connections=256)

      (idle_timeout=0)

      (inbound_connect_timeout=0)

      (log_level=user)

      (session_timeout=0)

      (outbound_connect_timeout=0)

      (max_gateway_processes=16)

      (min_gateway_processes=2)

      (trace_timestamp=on)

      (trace_filelen=1000)

      (trace_fileno=5)

      (trace_level=off)

      (max_cmctl_sessions=4)

      (event_group=init_and_term,memory_ops)

    )

    (rule_list=

      (rule=

        (src=*)(dst=*)(srv=*)(act=accept)

        (action_list=(aut=off)(moct=0)(mct=0)(mit=0)(conn_stats=on))

      )

    )

  )

The only thing missing in this configuration section is the name of the CMAN. This is because it consists of the name of the network service and the namespace, among other things, and is put together for you by the helm chart. However, this also means that there can only be one CMAN per CMAN container with exactly one internal port 1521. If you want to have several CMANs, you simply need several containers. You can keep port 1521 everywhere, each container gets its own IP address and there is no overlap.

You can also change the ConfigMap created during installation (kubectl edit configmap … ) and add further routing rules as required or try to activate the REST service yourself. What you should not change is the IP address 0.0.0.0 on which CMAN is listening. The entry 0.0.0.0 makes CMAN accept connections on any address of the container. A container under Kubernetes receives its own internal IP address, which is dynamic and not reachable from outside by network clients, so it is not a useful value here. To restrict the listener you would have to enter the IP address of your load balancers there, but I think we are already secure and isolated enough as it is.
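As an illustration of such additional rules, here is a small sketch that prints rule entries in the format used in the rule_list above. The network `10.10.0.0/16` is a hypothetical client range, and the second rule is my assumption of how access for cmctl to the `cmon` service could be kept open once the catch-all rule is removed; please check the rule syntax against the CMAN documentation before using it.

```shell
# Prints one cman.ora rule. Arguments: source, destination, service, action.
cman_rule() {
  printf '(rule=(src=%s)(dst=%s)(srv=%s)(act=%s))\n' "$1" "$2" "$3" "$4"
}

# Hypothetical policy: only clients from 10.10.0.0/16 may reach the service chronin.
cman_rule '10.10.0.0/16' '*' 'chronin' 'accept'
# Assumption: keep the administrative cmon service reachable for cmctl.
cman_rule '*' '*' 'cmon' 'accept'
```

The printed lines would replace the single accept-all rule inside `(rule_list= … )`; a subsequent reload makes CMAN pick them up.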

Have you now adapted your values.yaml file to your needs? Then we can start the installation! This is done by a helm install call similar to this one:

$ helm install my-cman myorarepo/cman --values values.yaml -n cman

NAME: my-cman

LAST DEPLOYED: Fri Dec 6 15:15:51 2024

NAMESPACE: cman

STATUS: deployed

REVISION: 1

TEST SUITE: None

The installation takes place in the namespace cman created earlier. A pod, a service, a ConfigMap and other resources should become visible there quite quickly:

$ kubectl get configmap -n cman

NAME DATA AGE

my-cman-config 4 3m40s

$ kubectl get service -n cman

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

my-cman-svc LoadBalancer 10.106.92.132 10.10.2.247 1521:30663/TCP 4m16s

$ kubectl get pod -n cman

NAME READY STATUS RESTARTS AGE

my-cman-cman-6c7d9945d-bd92t 1/1 Running 0 5m6s

And with a bit of luck, your CMAN will start and you can start registering databases. You can see this in the standard log output of the CMAN container:

$ kubectl logs pod/my-cman-cman-6c7d9945d-bd92t -n cman

Defaulted container "app" out of: app, init-config (init)

12-06-2024 15:15:53 UTC : : Creating /tmp/orod.log

sudo: unable to send audit message: Operation not permitted

sudo: unable to send audit message: Operation not permitted

12-06-2024 15:15:53 UTC : : Using the user defined cman.ora file=[/scripts/cman.ora]

12-06-2024 15:15:53 UTC : : Copying CMAN file to /u01/app/oracle/product/23ai/client_1/network/admin

12-06-2024 15:15:55 UTC : : Starting CMAN

CMCTL for Linux: Version 23.0.0.0.0 - for Oracle Cloud and Engineered Systems on 06-DEC-2024 15:15:55

Copyright (c) 1996, 2024, Oracle. All rights reserved.

Current instance CMAN_my-cman-svc.cman.svc.cluster.local is not yet started

Connecting to (DESCRIPTION=(address=(protocol=tcp)(host=0.0.0.0)(port=1521)))

Starting Oracle Connection Manager instance CMAN_my-cman-svc.cman.svc.cluster.local. Please wait...

CMAN for Linux: Version 23.0.0.0.0 - for Oracle Cloud and Engineered Systems

Status of the Instance

----------------------

Instance name cman_my-cman-svc.cman.svc.cluster.local

Version CMAN for Linux: Version 23.0.0.0.0 - for Oracle Cloud and Engineered Systems

Start date 06-DEC-2024 15:15:56

Uptime 0 days 0 hr. 0 min. 9 sec

Num of gateways started 2

Average Load level 0

Log Level USER

Trace Level OFF

Instance Config file /u01/app/oracle/product/23ai/client_1/network/admin/cman.ora

Instance Log directory /u01/app/oracle/diag/netcman/my-cman-cman-6c7d9945d-bd92t/cman_my-cman-svc.cman.svc.cluster.local/alert

Instance Trace directory /u01/app/oracle/diag/netcman/my-cman-cman-6c7d9945d-bd92t/cman_my-cman-svc.cman.svc.cluster.local/trace

The command completed successfully.

12-06-2024 15:16:05 UTC : : Reloading CMAN

CMCTL for Linux: Version 23.0.0.0.0 - for Oracle Cloud and Engineered Systems on 06-DEC-2024 15:16:05

Copyright (c) 1996, 2024, Oracle. All rights reserved.

Current instance CMAN_my-cman-svc.cman.svc.cluster.local is already started

Connecting to (DESCRIPTION=(address=(protocol=tcp)(host=0.0.0.0)(port=1521)))

The command completed successfully.

12-06-2024 15:16:05 UTC : : Checking CMAN Status

CMCTL for Linux: Version 23.0.0.0.0 - for Oracle Cloud and Engineered Systems on 06-DEC-2024 15:16:05

Copyright (c) 1996, 2024, Oracle. All rights reserved.

Current instance CMAN_my-cman-svc.cman.svc.cluster.local is already started

Connecting to (DESCRIPTION=(address=(protocol=tcp)(host=0.0.0.0)(port=1521)))

Services Summary...

Proxy service "cmgw" has 1 instance(s).

Instance "cman", status READY, has 2 handler(s) for this service...

Handler(s):

"cmgw001" established:0 refused:0 current:0 max:256 state:ready

<machine: localhost, pid: 41>

(ADDRESS=(PROTOCOL=ipc)(KEY=#41.1)(KEYPATH=/var/tmp/.oracle_5432100))

"cmgw000" established:0 refused:0 current:0 max:256 state:ready

<machine: localhost, pid: 39>

(ADDRESS=(PROTOCOL=ipc)(KEY=#39.1)(KEYPATH=/var/tmp/.oracle_5432100))

Service "cmon" has 1 instance(s).

Instance "cman", status READY, has 1 handler(s) for this service...

Handler(s):

"cmon" established:1 refused:0 current:1 max:4 state:ready

<machine: localhost, pid: 33>

(ADDRESS=(PROTOCOL=ipc)(KEY=#33.1)(KEYPATH=/var/tmp/.oracle_5432100))

The command completed successfully.

12-06-2024 15:16:05 UTC : : cman [CMAN_my-cman-svc.cman.svc.cluster.local] started sucessfully

12-06-2024 15:16:05 UTC : : ################################################

12-06-2024 15:16:05 UTC : : CONNECTION MANAGER IS READY TO USE!

12-06-2024 15:16:05 UTC : : ################################################

12-06-2024 15:16:05 UTC : : cman started sucessfully

12-06-2024 15:15:53 UTC : : Using the user defined cman.ora file=[/scripts/cman.ora]

12-06-2024 15:15:53 UTC : : Copying CMAN file to /u01/app/oracle/product/23ai/client_1/network/admin

12-06-2024 15:15:55 UTC : : Starting CMAN

12-06-2024 15:16:05 UTC : : Reloading CMAN

12-06-2024 15:16:05 UTC : : Checking CMAN Status

12-06-2024 15:16:05 UTC : : cman [CMAN_my-cman-svc.cman.svc.cluster.local] started sucessfully

12-06-2024 15:16:05 UTC : : ################################################

12-06-2024 15:16:05 UTC : : CONNECTION MANAGER IS READY TO USE!

12-06-2024 15:16:05 UTC : : ################################################

12-06-2024 15:16:05 UTC : : cman started sucessfully

Take a look around the container first. Connect to a shell and look for directories or reload the configuration once to test it. For this we need the name of the pod, which we obtained earlier with kubectl get pod -n cman, and the following kubectl call:

$ kubectl exec -it my-cman-cman-6c7d9945d-bd92t -n cman -- /bin/bash

Defaulted container "app" out of: app, init-config (init)

[oracle@my-cman-cman-6c7d9945d-bd92t ~]$

You are now inside the container. A call to lsnrctl status shows you that there is currently only the cman service without registered databases.

And you can also see the directory in the container where the logs are stored. The ORACLE_HOME is always set to /u01/app/oracle/product/23ai/client_1 .

$ lsnrctl status

LSNRCTL for Linux: Version 23.0.0.0.0 - for Oracle Cloud and Engineered Systems on 06-DEC-2024 15:32:10

Copyright (c) 1991, 2024, Oracle. All rights reserved.

Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST=0.0.0.0)(PORT=1521)))

STATUS of the LISTENER

------------------------

Alias cman_my-cman-svc.cman.svc.cluster.local

Version TNSLSNR for Linux: Version 23.0.0.0.0 - for Oracle Cloud and Engineered Systems

Start Date 06-DEC-2024 15:15:56

Uptime 0 days 0 hr. 16 min. 14 sec

Trace Level off

Security OFF

SNMP OFF

Listener Parameter File /u01/app/oracle/product/23ai/client_1/network/admin/cman.ora

Listener Log File /u01/app/oracle/diag/netcman/my-cman-cman-6c7d9945d-bd92t/cman_my-cman-svc.cman.svc.cluster.local/alert/log.xml

Listening Endpoints Summary...

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=0.0.0.0)(PORT=1521)))

Services Summary...

Proxy service "cmgw" has 1 instance(s).

Instance "cman", status READY, has 2 handler(s) for this service...

Service "cmon" has 1 instance(s).

Instance "cman", status READY, has 1 handler(s) for this service...

The command completed successfully

[oracle@my-cman-cman-6c7d9945d-bd92t ~]$ echo $ORACLE_HOME

/u01/app/oracle/product/23ai/client_1

To test this, have the configuration of the CMAN read in again using the corresponding cmctl commands. Please note the name of the CMAN created, which you have already learned from the lsnrctl status command:

[oracle@my-cman-cman-6c7d9945d-bd92t ~]$ cmctl

CMCTL for Linux: Version 23.0.0.0.0 - for Oracle Cloud and Engineered Systems on 06-DEC-2024 15:36:08

Copyright (c) 1996, 2024, Oracle. All rights reserved.

Welcome to CMCTL, type "help" for information.

CMCTL:CMAN_my-cman-cman-6c7d9945d-bd92t> admin cman_my-cman-svc.cman.svc.cluster.local

Current instance cman_my-cman-svc.cman.svc.cluster.local is already started

Connections refer to (DESCRIPTION=(address=(protocol=tcp)(host=0.0.0.0)(port=1521))).

The command completed successfully.

CMCTL:cman_my-cman-svc.cman.svc.cluster.local> reload

The command completed successfully.

CMCTL:cman_my-cman-svc.cman.svc.cluster.local> exit

[oracle@my-cman-cman-6c7d9945d-bd92t ~]$ exit
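The same reload can presumably be triggered without an interactive shell. The following sketch wraps it in a small function; it assumes that cmctl is on the PATH of a non-interactive `kubectl exec` and that the `-c` option of cmctl selects the instance, as described in the cmctl syntax. The container name `app` is taken from the kubectl output above. Setting `KUBECTL=echo` gives a dry run that only prints the arguments.

```shell
# Runs "cmctl reload" in the CMAN container without an interactive shell.
# Arguments: namespace, pod name, CMAN instance name.
# KUBECTL can be overridden (e.g. KUBECTL=echo) for a dry run.
cman_reload() {
  "${KUBECTL:-kubectl}" exec "$2" -n "$1" -c app -- cmctl reload -c "$3"
}

# Dry run: print the kubectl arguments instead of executing them.
KUBECTL=echo cman_reload cman my-cman-cman-6c7d9945d-bd92t \
  cman_my-cman-svc.cman.svc.cluster.local
```

Without the `KUBECTL=echo` prefix, the function calls the real kubectl against your current cluster context.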

One last test: register a database with CMAN by setting its REMOTE_LISTENER parameter to the IP address and port of the CMAN container or its load balancer. If the database service appears in CMAN, you have actually already won, provided that the host name of the registered database can be resolved from CMAN, i.e. in the opposite direction to the registration. For this to work, the LOCAL_LISTENER parameter of the database to be addressed must contain an address that CMAN can reach. An entry such as “localhost:1521”, for example, would be completely unsuitable.

In order to register a database with CMAN for testing purposes, I first had to find out which load balancer address was assigned to CMAN and enter this in the REMOTE_LISTENER parameter of my database. My CMAN service is of the LoadBalancer type, so it was given an external IP address (that of the load balancer) of 10.10.2.247:

$ kubectl get service -n cman

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

my-cman-svc LoadBalancer 10.106.92.132 10.10.2.247 1521:30663/TCP 2d18h

Then I connected to my database or a PDB of my choice (called chronin in my case) as SYS user, entered resolvable local and remote addresses in the database configuration and registered the database with both CMAN and the local listener:

SQL> alter system set remote_listener='10.10.2.247:1521' sid='*' scope=both;

System altered.

SQL> alter system set local_listener='10.244.2.130:1521' sid='*' scope=both;

System altered.

SQL> alter system register ;

System altered.
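If you need these statements for several databases, a small helper can generate them. This is only a sketch: the function name is made up, and the check simply rejects the obviously unsuitable localhost and loopback values for LOCAL_LISTENER mentioned above; it cannot verify that CMAN really reaches the address.

```shell
# Prints the registration statements for a CMAN and a database address.
# Arguments: CMAN host:port, database listener host:port.
cman_registration_sql() {
  case "$2" in
    localhost*|127.*)
      echo "local_listener '$2' is not reachable from CMAN" >&2
      return 1
      ;;
  esac
  cat <<EOF
alter system set remote_listener='$1' sid='*' scope=both;
alter system set local_listener='$2' sid='*' scope=both;
alter system register;
EOF
}

# Addresses from the example above; the output can be pasted into sqlplus.
cman_registration_sql 10.10.2.247:1521 10.244.2.130:1521
```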

Then I opened a shell for the CMAN container in my test environment again and checked whether the database registration was successful:

$ kubectl exec -ti my-cman-cman-6c7d9945d-bd92t -n cman -- /bin/bash

Defaulted container "app" out of: app, init-config (init)

$ lsnrctl status

LSNRCTL for Linux: Version 23.0.0.0.0 - for Oracle Cloud and Engineered Systems on 10-DEC-2024 10:58:33

Copyright (c) 1991, 2024, Oracle. All rights reserved.

Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST=0.0.0.0)(PORT=1521)))

STATUS of the LISTENER

------------------------

Alias cman_my-cman-svc.cman.svc.cluster.local

Version TNSLSNR for Linux: Version 23.0.0.0.0 - for Oracle Cloud and Engineered Systems

Start Date 09-DEC-2024 12:34:36

Uptime 0 days 22 hr. 23 min. 57 sec

Trace Level admin

Security OFF

SNMP OFF

Listener Parameter File /u01/app/oracle/product/23ai/client_1/network/admin/cman.ora

Listener Log File /u01/app/oracle/diag/netcman/my-cman-cman-6c7d9945d-tw7jr/cman_my-cman-svc.cman.svc.cluster.local/alert/log.xml

Listener Trace File /u01/app/oracle/diag/netcman/my-cman-cman-6c7d9945d-tw7jr/cman_my-cman-svc.cman.svc.cluster.local/trace/cman_my-cman-svc_tnslsnr_206_139977477528512.trc

Listening Endpoints Summary...

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=0.0.0.0)(PORT=1521)))

Services Summary...

Proxy service "cmgw" has 1 instance(s).

Instance "cman", status READY, has 2 handler(s) for this service...

Service "28da2fd09e0c0fcbe0638202f40a6fbf" has 1 instance(s).

Instance "ORCL1", status READY, has 1 handler(s) for this service...

Service "chronin" has 1 instance(s).

Instance "ORCL1", status READY, has 1 handler(s) for this service...

Service "cmon" has 1 instance(s).

Instance "cman", status READY, has 1 handler(s) for this service...

The command completed successfully

Here you can see that the database service of my PDB chronin has been successfully registered. CMAN now recognizes the remote database service. A cmctl command “show services” lists further details, e.g. the hopefully correct host name / IP address behind the example service chronin and its status ready, not blocked:

$ cmctl

CMCTL for Linux: Version 23.0.0.0.0 - for Oracle Cloud and Engineered Systems on 10-DEC-2024 11:23:25

Copyright (c) 1996, 2024, Oracle. All rights reserved.

Welcome to CMCTL, type "help" for information.

CMCTL> admin cman_my-cman-svc.cman.svc.cluster.local

Current instance cman_my-cman-svc.cman.svc.cluster.local is already started

Connections refer to (DESCRIPTION=(address=(protocol=tcp)(host=0.0.0.0)(port=1521))).

The command completed successfully.

CMCTL:cman_my-cman-svc.cman.svc.cluster.local> show services

Services Summary...

Proxy service "cmgw" has 1 instance(s).

Instance "cman", status READY, has 2 handler(s) for this service...

Handler(s):

"cmgw001" established:0 refused:0 current:0 max:256 state:ready

<machine: localhost, pid: 42>

(ADDRESS=(PROTOCOL=ipc)(KEY=#42.1)(KEYPATH=/var/tmp/.oracle_5432100))

"cmgw000" established:1 refused:0 current:0 max:256 state:ready

<machine: localhost, pid: 40>

(ADDRESS=(PROTOCOL=ipc)(KEY=#40.1)(KEYPATH=/var/tmp/.oracle_5432100))

Service "28da2fd09e0c0fcbe0638202f40a6fbf" has 1 instance(s).

Instance "ORCL1", status READY, has 1 handler(s) for this service...

Handler(s):

"DEDICATED" established:1 refused:0 state:ready

REMOTE SERVER

(DESCRIPTION=(CONNECT_DATA=(SERVICE_NAME=))(ADDRESS=(PROTOCOL=tcp)(HOST=10.244.2.130)(PORT=1521)))

Service "chronin" has 1 instance(s).

Instance "ORCL1", status READY, has 1 handler(s) for this service...

Handler(s):

"DEDICATED" established:1 refused:0 state:ready

REMOTE SERVER

(DESCRIPTION=(CONNECT_DATA=(SERVICE_NAME=))(ADDRESS=(PROTOCOL=tcp)(HOST=10.244.2.130)(PORT=1521)))

Service "cmon" has 1 instance(s).

Instance "cman", status READY, has 1 handler(s) for this service...

Handler(s):

"cmon" established:5 refused:0 current:1 max:4 state:ready

<machine: localhost, pid: 34>

(ADDRESS=(PROTOCOL=ipc)(KEY=#34.1)(KEYPATH=/var/tmp/.oracle_5432100))

The command completed successfully.

A client connect with sqlplus to the load balancer address and the registered service chronin should now work:

$ sqlplus system@//10.10.2.247:1521/chronin

SQL*Plus: Release 21.0.0.0.0 - Production on Tue Dec 10 10:49:25 2024

Version 21.16.0.0.0

Copyright (c) 1982, 2022, Oracle. All rights reserved.

Enter password:

Last Successful login time: Mon Dec 09 2024 16:43:07 +00:00

Connected to:

Oracle Database 21c Enterprise Edition Release 21.0.0.0.0 - Production

Version 21.3.0.0.0

SQL>
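Clients that work with tnsnames.ora instead of the short connect syntax can address CMAN in the same way. The following sketch prints a matching entry; the alias `chronin_cman` is a hypothetical name, while address and service are taken from the example above.

```shell
# Prints a tnsnames.ora entry. Arguments: alias, CMAN host, port, service name.
cman_tns_entry() {
  cat <<EOF
$1 =
  (DESCRIPTION=
    (ADDRESS=(PROTOCOL=tcp)(HOST=$2)(PORT=$3))
    (CONNECT_DATA=(SERVICE_NAME=$4))
  )
EOF
}

# Append the output to your tnsnames.ora, then connect with: sqlplus system@chronin_cman
cman_tns_entry chronin_cman 10.10.2.247 1521 chronin
```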

If the connect also works in your environment: congratulations! You have successfully reached the end of this blog.

If this does not work for you, here are a few hints: please check whether the registered service is using a correct host name and whether the listener on your database host has accepted the registration. A “show services” command in cmctl will show the registered services with their hopefully correct connect strings and the listener.log on your database host will report errors when registering the local service. A service registered in CMAN but not registered with the local listener is marked as blocked by the cmctl command “show services”. However, there can be several reasons for blocked services, e.g. a protocol not permitted by the configuration is being used (tcp instead of tcps) and other possibilities.
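To spot blocked services quickly in a long “show services” listing, a small filter can help. This sketch assumes that the output marks such instances with the text `status BLOCKED` in the place where the listings above show `status READY`; the two sample services fed into it here are made-up input for demonstration only.

```shell
# Reads "show services" output on stdin and prints the names of services
# that have an instance reported as BLOCKED (assumed output format).
blocked_services() {
  awk '
    /^[ \t]*(Proxy s|S)ervice "/ { split($0, parts, "\""); svc = parts[2] }
    /status BLOCKED/             { print svc }
  '
}

# Hypothetical sample input; in practice, pipe the cmctl output into the filter.
printf '%s\n' \
  'Service "chronin" has 1 instance(s).' \
  '  Instance "ORCL1", status BLOCKED, has 1 handler(s) for this service...' \
  'Service "cmon" has 1 instance(s).' \
  '  Instance "cman", status READY, has 1 handler(s) for this service...' \
  | blocked_services
```

An empty result means that no instance was reported as blocked, which narrows the search down to name resolution or the local listener registration.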

Conclusion and outlook

A seemingly simple container like CMAN has two hurdles to overcome: its special network configuration and the fact that it is not stateless. Despite its small size, it must be treated as carefully as a database system, i.e. it must be protected against failure and restarted as rarely as possible. It must not develop into an SPOF (single point of failure) due to a configuration that is too small, neither under Kubernetes nor anywhere else. For remote maintenance of the CMAN, a functional REST service access would also be desirable in the container. But the container runs under Kubernetes and fulfills its purpose in the mix of the “database satellites” ORDS, GoldenGate, Observability Exporter and Co. Increased latency in the standard Kubernetes network was actually not noticeable in our tests, despite the overlay network. Further helm charts for other Oracle components will follow in other blogs.

And now, as always: have fun testing and trying it out!

Links used in this text:

Documentation of Oracle Connection Manager 23ai

Blog about VM-based setup of Connection Manager by Sinan Petrus Toma

Connection Manager 23ai container on container-registry.oracle.com

Container build scripts for Connection Manager on github.com

Helm Chart source code for Connection Manager on github.com