Enterprises can now deploy services such as Identity Management, Logging, custom DNS, Internet of Things, and streaming media (audio and video) behind the Oracle Cloud Infrastructure (OCI) network load balancing service, benefiting from its low latency, scale, and reliability. This blog focuses on the custom DNS use case. Read more about the flexible network load balancer in the announcement blog post.

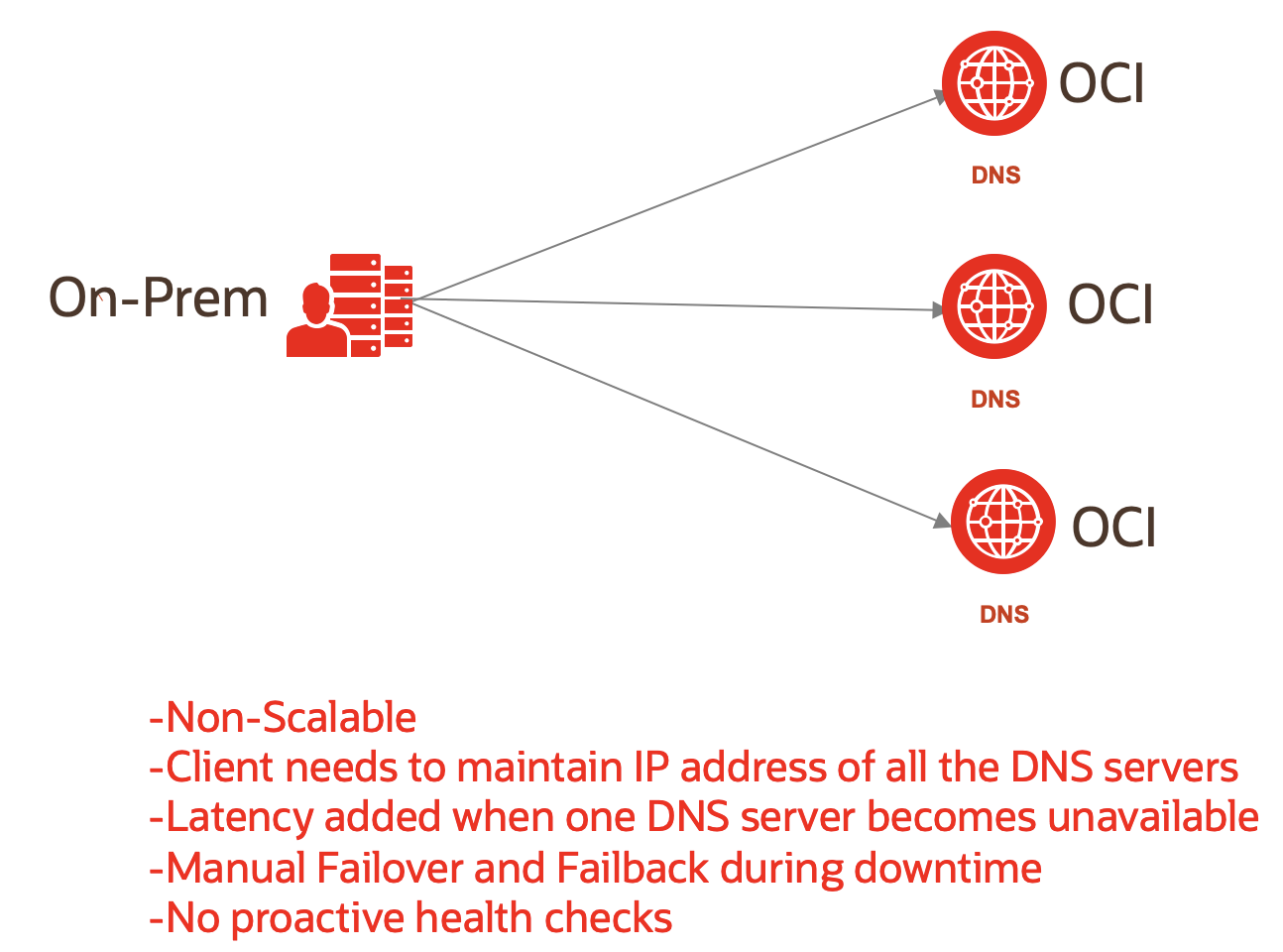

DNS redundancy is a fail-safe or backup mechanism for DNS outages that result from configuration errors, infrastructure failure, or a DDoS attack. A single dedicated DNS server might not be sufficient in such scenarios, and deploying multiple DNS servers in different networks reduces the risk of DNS unavailability. However, this configuration can cause service interruptions during server upgrades and increases the cost and operational complexity of your application deployment. Maintaining these redundant custom DNS servers might not be the most cost-effective option for most enterprises.

How our flexible load balancer can help

OCI’s flexible network load balancer now offers a single frontend for your applications that rely on the TCP and UDP protocols. A single frontend is cost effective and simplifies deployment and operations.

You can now use the flexible network load balancer to load balance DNS traffic and simplify your network architecture by eliminating the need for redundant DNS servers for ingesting DNS traffic. You no longer need to maintain a fleet of DNS servers to manage DNS traffic within Oracle Cloud in the same region or different regions. This consolidation can simplify your architecture, reduce your costs, and increase your scalability. UDP support on the network load balancer helps centralize your DNS service infrastructure to achieve operational efficiencies, such as scaling, managing downtime or upgrades, and sharing one fixed IP address externally. You can also add the TCP protocol on the same listener if your DNS service requires it.

We don’t support configuring OCI’s private DNS endpoint as a backend to the flexible network load balancer. This configuration only works for custom DNS service running on virtual machine or bare metal instances.

TCP and UDP support on the network load balancer is a fully managed solution. In situations like DNS where you need support for both TCP and UDP on the same port, you can set up a multiprotocol backend set and a multiprotocol listener.

Use case

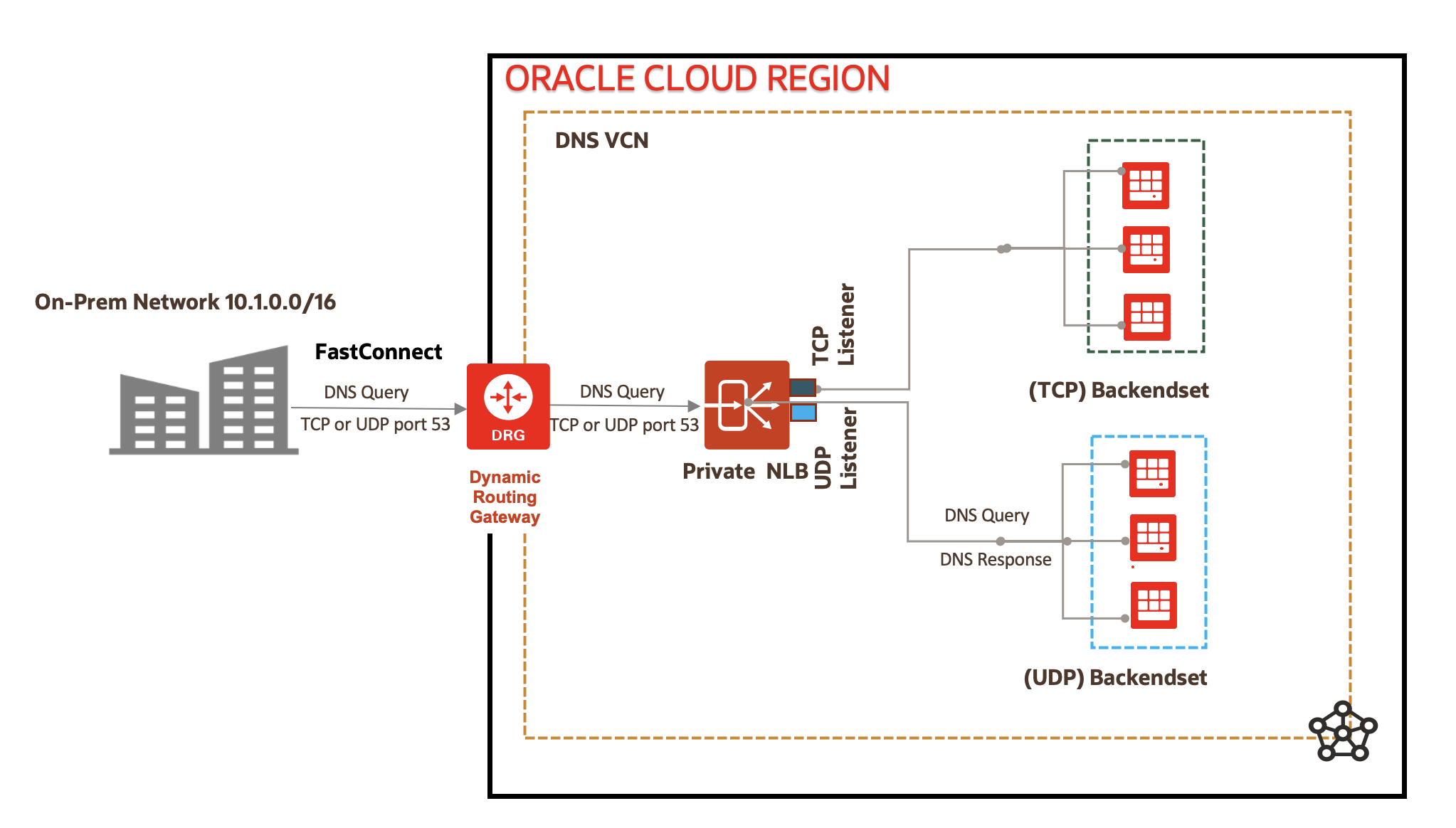

Imagine a scenario where you’re trying to reach the network load balancer from an on-premises network. The on-premises client specifies the IP address of the private network load balancer as the DNS server in its /etc/resolv.conf file and no longer needs to list the IP addresses of the individual DNS servers.

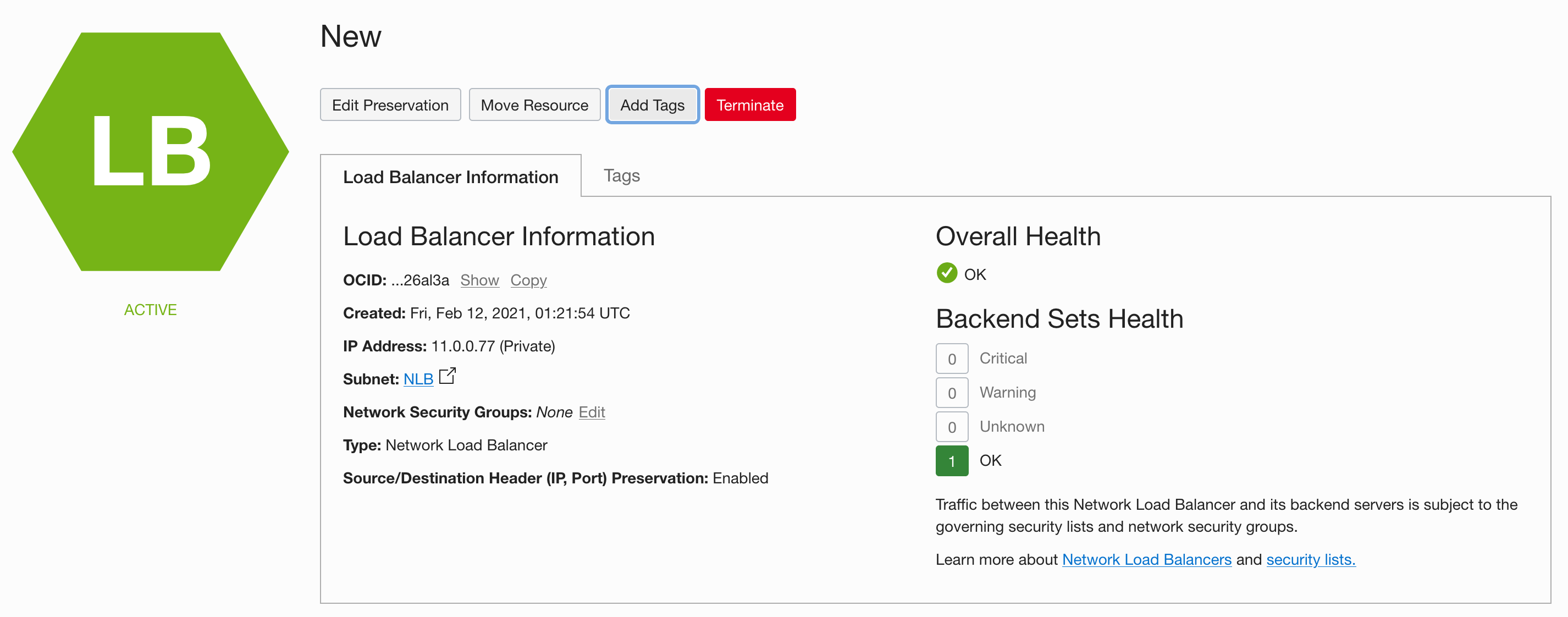

In this example, the network load balancer has an IP address of 11.0.0.77 and a TCP/UDP listener, which listens on port 53 for DNS queries. A TCP/UDP backend set contains multiple DNS backends behind the network load balancer.

What happens when you send a DNS query to the network load balancer?

- The DNS client sends a DNS query to the network load balancer.
- The network load balancer has a TCP_UDP listener, which listens on port 53 for both TCP and UDP traffic. It forwards the DNS requests to a backend set whose traffic routing protocol is also TCP_UDP on port 53.
- The backend DNS server resolves the DNS request by looking up the name in its hosts file and sends a DNS response.
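The client side of this workflow can be sketched in Python. This is a minimal illustration, not part of the OCI tooling: it builds a standard DNS query for an A record and shows the one framing difference between the two transports that the TCP_UDP listener accepts on port 53 (DNS over TCP prefixes each message with a 2-byte length). The address 11.0.0.77 comes from the example above; the function names and the default transaction ID are assumptions made for illustration.

```python
import socket
import struct

NLB_IP = "11.0.0.77"  # load balancer address from the example above
DNS_PORT = 53


def build_query(hostname: str, txid: int = 0x1234) -> bytes:
    """Build a minimal DNS query for an A record (standard DNS wire format)."""
    # Header: ID, flags (recursion desired), QDCOUNT=1, ANCOUNT=NSCOUNT=ARCOUNT=0
    header = struct.pack("!HHHHHH", txid, 0x0100, 1, 0, 0, 0)
    # QNAME: each label is length-prefixed; a zero byte terminates the name
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in hostname.rstrip(".").split(".")
    ) + b"\x00"
    # QTYPE=1 (A), QCLASS=1 (IN)
    return header + qname + struct.pack("!HH", 1, 1)


def frame_for_tcp(message: bytes) -> bytes:
    """DNS over TCP prefixes each message with its length as 2 bytes."""
    return struct.pack("!H", len(message)) + message


def _recv_exact(sock: socket.socket, count: int) -> bytes:
    """Read exactly `count` bytes from a TCP socket."""
    data = b""
    while len(data) < count:
        chunk = sock.recv(count - len(data))
        if not chunk:
            raise ConnectionError("connection closed before the full DNS message arrived")
        data += chunk
    return data


def query_udp(message: bytes, server: str = NLB_IP, timeout: float = 2.0) -> bytes:
    """Send the query as a single UDP datagram and return the raw response."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(message, (server, DNS_PORT))
        data, _ = sock.recvfrom(4096)
        return data


def query_tcp(message: bytes, server: str = NLB_IP, timeout: float = 2.0) -> bytes:
    """Send the same query over TCP, using the 2-byte length framing."""
    with socket.create_connection((server, DNS_PORT), timeout=timeout) as sock:
        sock.sendall(frame_for_tcp(message))
        (length,) = struct.unpack("!H", _recv_exact(sock, 2))
        return _recv_exact(sock, length)
```

From a client that can reach the load balancer, `query_udp(build_query(name))` and `query_tcp(build_query(name))` would both target the same IP address and port; the load balancer then forwards each request to a DNS backend in the backend set.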

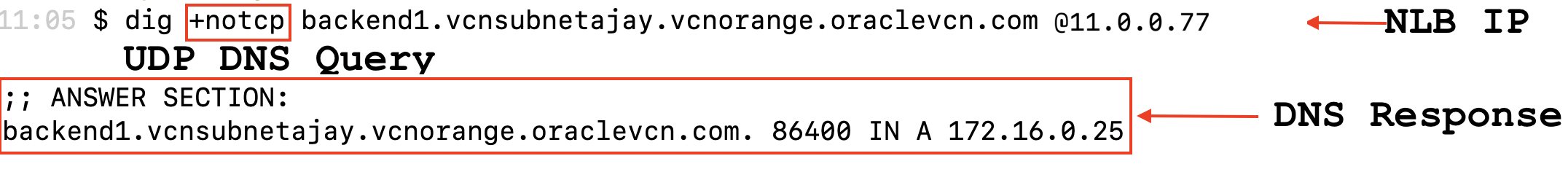

The following output shows how the network load balancer IP address is passed as the DNS server IP address in the dig query. The DNS query is a UDP (notcp) request. The output illustrates how the network load balancer, acting as the DNS server, returned a DNS response containing the resolved IP address.
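To make the content of such a response concrete, here is a small Python sketch that extracts the IPv4 addresses from the answer section of a raw DNS response, which is the same information dig prints in its answer section. It is an illustration under simplifying assumptions: it only reads A records and does not validate the header flags or handle truncated messages. The function names are hypothetical.

```python
import socket
import struct
from typing import List


def skip_name(msg: bytes, offset: int) -> int:
    """Return the offset just past a (possibly compressed) domain name."""
    while True:
        length = msg[offset]
        if length == 0:
            # A zero-length root label ends the name
            return offset + 1
        if length & 0xC0 == 0xC0:
            # A compression pointer takes 2 bytes and always ends the name
            return offset + 2
        offset += 1 + length


def parse_a_records(msg: bytes) -> List[str]:
    """Return the IPv4 addresses found in the answer section of a DNS response."""
    _txid, _flags, qdcount, ancount, _ns, _ar = struct.unpack("!HHHHHH", msg[:12])
    offset = 12
    for _ in range(qdcount):
        # Each question is a name followed by QTYPE and QCLASS (4 bytes)
        offset = skip_name(msg, offset) + 4
    addresses = []
    for _ in range(ancount):
        offset = skip_name(msg, offset)
        rtype, rclass, _ttl, rdlength = struct.unpack("!HHIH", msg[offset:offset + 10])
        offset += 10
        rdata = msg[offset:offset + rdlength]
        offset += rdlength
        if rtype == 1 and rclass == 1 and rdlength == 4:
            addresses.append(socket.inet_ntoa(rdata))
    return addresses
```

The same parser works regardless of which backend answered, because the client only ever sees the load balancer's address as its DNS server.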

While UDP DNS traffic is connectionless, the network load balancer maintains UDP flow state based on a 5-tuple hash: src-ip, src-port, dst-ip, dst-port, and protocol. This behavior ensures that packets sent in the same context are consistently forwarded to the same backend. The flow is considered active as long as traffic is flowing and until the idle timeout is reached. When the timeout threshold is reached, the load balancer forgets the affinity, and the next incoming UDP packet is treated as a new flow and load balanced to a backend again.
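The affinity behavior can be modeled with a toy flow table in Python. This sketch is not how OCI implements the network load balancer; it only illustrates the idea of hashing the 5-tuple to pick a backend and remembering that choice until the flow has been idle for longer than the timeout. The class name, the backend names, the SHA-256 hash, and the 120-second timeout used in the usage note are all assumptions chosen for illustration.

```python
import hashlib
from typing import Dict, List, Tuple

# src-ip, src-port, dst-ip, dst-port, protocol
FiveTuple = Tuple[str, int, str, int, str]


class FlowTable:
    """Toy model of 5-tuple flow affinity with an idle timeout."""

    def __init__(self, backends: List[str], idle_timeout: float) -> None:
        self.backends = backends
        self.idle_timeout = idle_timeout
        # flow -> (chosen backend, time the flow was last seen)
        self.flows: Dict[FiveTuple, Tuple[str, float]] = {}

    def _pick_backend(self, flow: FiveTuple) -> str:
        # Hash the 5-tuple and map it onto the current backend list
        digest = hashlib.sha256(repr(flow).encode()).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.backends)
        return self.backends[index]

    def route(self, flow: FiveTuple, now: float) -> str:
        entry = self.flows.get(flow)
        if entry is not None and now - entry[1] < self.idle_timeout:
            # Active flow: keep the remembered backend
            backend = entry[0]
        else:
            # New or expired flow: balance it again
            backend = self._pick_backend(flow)
        # Every packet refreshes the last-seen time of its flow
        self.flows[flow] = (backend, now)
        return backend
```

For example, with `FlowTable(["dns-1", "dns-2", "dns-3"], idle_timeout=120.0)`, repeated calls to `route` for the same 5-tuple return the same backend while the gap between packets stays under 120 seconds. Once the flow has been idle for 120 seconds or more, the next packet is hashed again against whatever backends are currently in the list.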

Conclusion

In this article, we looked at how you can use the flexible network load balancer as a centralized DNS server. We went through the workflow of what happens when DNS traffic hits the network load balancer and what the output of a DNS query looks like. The ability to flexibly load balance layer 4 DNS traffic to particular regions and endpoints gives you more control, which is useful for high-traffic applications. A great way to test this process yourself is with a 30-day free trial of Oracle Cloud Infrastructure, which includes US$300 in credit and our Always Free services.