Oracle’s public cloud embraces open source technologies and the communities that support them. With the strong shift to cloud native technologies and DevOps methodologies, organizations want a high-performance, high-reliability cloud that avoids cloud lock-in and allows them to run what they want, whether or not it’s built by the cloud provider. One of the open source technologies that Oracle Cloud customers are seeing value in is Rook, a popular open source storage technology.

What’s Rook?

Rook is an open source, cloud native storage orchestrator for Kubernetes. It turns distributed storage systems into storage services that manage, scale, and heal themselves. It automates storage administrator tasks such as deployment, bootstrapping, configuration, provisioning, scaling, upgrading, migration, disaster recovery, monitoring, and resource management. And Rook uses Kubernetes to deliver its cloud native container management, scheduling, and orchestration services.

Rook features include:

- Automated resource management

- Storage cluster hyperscaling or hyperconverging

- Efficient data distribution and replication

- Provisioning of file, block, and object storage with multiple storage providers

- Management of open source storage technologies

- Elastic storage in your data center

- Open source software released under Apache 2.0

- Optimization of workloads on commodity hardware

A key benefit of Rook is its support for multiple database and file system options, including:

- Ceph

- CockroachDB

- EdgeFS

- Cassandra

- Minio

- NFS

- Yugabyte DB

This capability provides a common framework for several different storage solution use cases on Kubernetes with a consistent experience.

This blog post focuses on setting up and managing Ceph with Rook on the Container Engine for Kubernetes service of Oracle Cloud. This service helps you deploy, manage, and scale Kubernetes clusters in the cloud. With Container Engine for Kubernetes, organizations can build dynamic containerized applications that integrate seamlessly with all the services available in Oracle Cloud.

Why Ceph Distributed Storage Cluster?

Ceph is a popular open source storage platform that provides high performance, reliability, and scalability. The Ceph distributed storage system provides an interface for object, block, and file storage.

Components of Ceph include:

- Ceph Object Storage Daemons (OSDs), which handle the data store, data replication, and recovery. A Ceph cluster needs at least two Ceph OSD servers based on Oracle Linux.

- Ceph Monitor (ceph-mon), which monitors the cluster state, OSD map, and CRUSH map.

- Ceph Metadata Server (ceph-mds), which is needed to use Ceph as a file system.

You can find more details in the Ceph documentation.

Rook enables Ceph storage systems to run on Kubernetes using Kubernetes primitives. With Ceph running in the Kubernetes cluster, applications can mount block devices and file systems managed by Rook, or use the S3/Swift API for object storage. For more information, see the Rook documentation on Ceph storage.

The Rook operator automates configuration of storage components and monitors the cluster to ensure that the storage remains available and healthy. The following image shows how Ceph Rook integrates with Kubernetes:

Source: https://rook.io/docs/rook/v1.2/ceph-storage.html

Getting Started

First, let’s explore the components needed to build and deploy a Ceph cluster with Rook in Container Engine for Kubernetes.

- A running Container Engine for Kubernetes cluster.

- A desktop (cloud VM or on premises) with the Oracle Cloud Infrastructure CLI (oci) and kubectl command-line tools installed and configured.

- CIDR/ports for Kubernetes pods and worker nodes open in the local virtual cloud network (VCN) security list.

- Kubernetes version 1.14.8 or later.

- A node pool with two or more worker nodes. Three worker nodes are recommended for the configuration in this post.

- Because Rook Ceph clusters use local storage for pool objects, production environments must have local storage devices attached to the Container Engine for Kubernetes worker nodes. Before setting things up, create extra block volumes and attach them to the worker nodes. You can also use worker nodes with local NVMe SSD storage provided through Compute DenseIO shapes.
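As a rough preflight for the checklist above, the following shell sketch confirms that the command-line tools are on the PATH and counts the worker nodes that report Ready. The function names (check_tools, count_ready_nodes) are illustrative helpers, not part of any Oracle or Rook tooling, and the node count assumes the default column layout of `kubectl get nodes --no-headers`, where the second column is STATUS.

```shell
#!/bin/sh
# Preflight sketch. Function names are hypothetical helpers for illustration.

# Succeeds only if every named tool is found on the PATH.
check_tools() {
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing tool: $tool" >&2
      return 1
    fi
  done
}

# Reads `kubectl get nodes --no-headers` output on stdin and prints the
# number of nodes whose STATUS column is exactly "Ready".
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Example use against a live cluster (not run here):
#   check_tools oci kubectl && kubectl get nodes --no-headers | count_ready_nodes
```

Nodes that are cordoned show a status such as Ready,SchedulingDisabled and are deliberately not counted by this sketch.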

Deployment Process

To deploy on Container Engine for Kubernetes, review the Ceph Storage Quickstart and follow these steps.

Create the Cluster

- Clone the rook.git repo and deploy the Rook operator. You can also deploy the Rook operator with the Rook Helm Chart.

      $ git clone https://github.com/rook/rook.git
      $ cd rook/cluster/examples/kubernetes/ceph
      $ kubectl create -f common.yaml
      $ kubectl create -f operator.yaml

- Edit the cluster-test.yaml file and change useAllDevices from false to true.

This option indicates whether OSDs automatically consume all devices found on nodes in the cluster. When set to true, all devices are used except those that already have partitions or a local file system. Use this option with care so that you don’t lose data on devices attached to your Container Engine for Kubernetes worker nodes. For examples and settings, see the Ceph Cluster CRD page in the documentation.

- Run the following command:

      $ kubectl create -f cluster-test.yaml

- Verify that the Rook Ceph pods, specifically the operator pod, have a status of Running.

      $ kubectl -n rook-ceph get pod

-

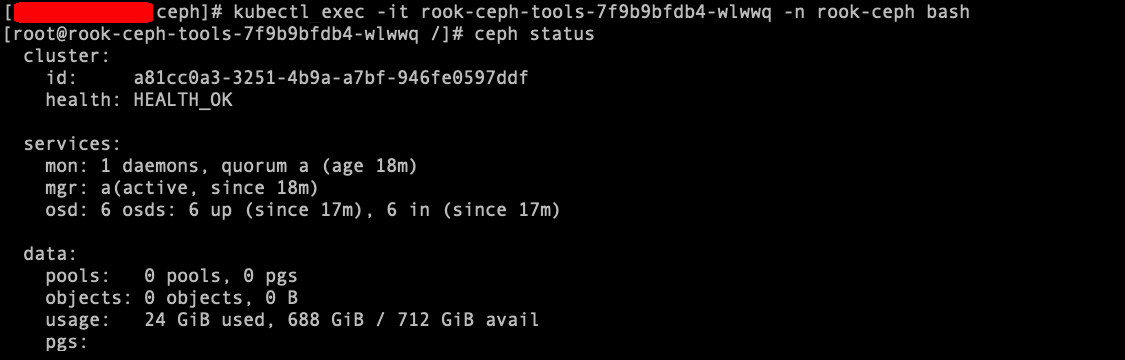

Deploy the Rook toolbox, connect into it, and run the ceph status command to verify that the cluster is in a healthy state:

$ kubectl apply -f toolbox.yaml $ kubectl exec -it rook-ceph-tools-POD_NAME -n rook-ceph bash
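The manual edit and the pod check in the steps above can be sketched as two small shell helpers. The function names are hypothetical, and the check assumes the default column layout of `kubectl -n rook-ceph get pod --no-headers`, where the third column is STATUS. It also treats Completed as acceptable, on the assumption that short-lived job pods (such as OSD preparation jobs) finish and stay listed.

```shell
#!/bin/sh
# Sketch of two helpers for the cluster-creation steps. Names are hypothetical.

# Rewrites "useAllDevices: false" to "useAllDevices: true" in the given file,
# leaving every other line untouched.
enable_all_devices() {
  file="$1"
  tmp="$file.tmp.$$"
  sed 's/^\([[:space:]]*useAllDevices:[[:space:]]*\)false/\1true/' "$file" > "$tmp" &&
    mv "$tmp" "$file"
}

# Reads `kubectl -n rook-ceph get pod --no-headers` output on stdin.
# Succeeds if at least one pod is listed and every pod is Running or Completed.
all_pods_ready() {
  awk '
    { seen = 1 }
    $3 != "Running" && $3 != "Completed" { bad = 1 }
    END { exit (seen && !bad) ? 0 : 1 }
  '
}

# Example use against a live cluster (not run here):
#   enable_all_devices cluster-test.yaml
#   kubectl create -f cluster-test.yaml
#   kubectl -n rook-ceph get pod --no-headers | all_pods_ready && echo "pods ready"
```

Review the edited file before you apply it, because useAllDevices set to true lets OSDs claim every unpartitioned device on the worker nodes.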

Create the Block Storage

Next, you create the block storage that the applications consume. Rook provisions the following types of storage:

- Block: Block storage for pods

- Object: An object store that’s accessible inside or outside the Kubernetes cluster

- Shared filesystem: A file system shared across multiple pods

Before Rook can provision storage, you must create a StorageClass and a CephBlockPool. These resources let Kubernetes interoperate with Rook when provisioning persistent volumes. As explained in the Ceph Block documentation, the following example requires at least three nodes with at least one OSD each, because the pool keeps three replicas and places each one on a different host. This example shows how to create block storage for a multitier web application. You can use either the CSI RBD or the Flex driver storage class, but this post uses the CSI RBD driver, which is the preferred driver for Kubernetes v1.13 and later.
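To make the replication requirement concrete, here is a back-of-the-envelope sketch in shell. It assumes a replicated pool stores one full copy of the data per replica, so usable capacity is roughly raw capacity divided by the replica count, ignoring Ceph's own overhead. The function names and the 50 GB volume size are made up for illustration.

```shell
#!/bin/sh
# Rough sizing helpers for a replicated pool. Names and numbers are illustrative.

# Succeeds when there are at least as many OSD hosts as replicas, which is
# what a host-level failure domain needs to place each copy on its own node.
enough_hosts() {
  hosts="$1"
  size="$2"
  [ "$hosts" -ge "$size" ]
}

# Prints approximate usable capacity in GB: raw capacity / replica count.
usable_gb() {
  echo $(( $1 / $2 ))
}

# Three worker nodes, each with one hypothetical 50 GB block volume,
# and a pool with three replicas:
#   enough_hosts 3 3 && usable_gb 150 3    # prints 50
```

With only two worker nodes, enough_hosts 2 3 fails, which matches the recommendation of three worker nodes for this configuration.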

- Save the following StorageClass definition as storageclass.yaml:

      apiVersion: ceph.rook.io/v1
      kind: CephBlockPool
      metadata:
        name: replicapool
        namespace: rook-ceph
      spec:
        failureDomain: host
        replicated:
          size: 3
      ---
      apiVersion: storage.k8s.io/v1
      kind: StorageClass
      metadata:
        name: rook-ceph-block
      # Change "rook-ceph" provisioner prefix to match the operator namespace if needed
      provisioner: rook-ceph.rbd.csi.ceph.com
      parameters:
        # clusterID is the namespace where the rook cluster is running
        clusterID: rook-ceph
        # Ceph pool into which the RBD image shall be created
        pool: replicapool
        # RBD image format. Defaults to "2".
        imageFormat: "2"
        # RBD image features. Available for imageFormat: "2". CSI RBD currently supports only `layering` feature.
        imageFeatures: layering
        # The secrets contain Ceph admin credentials.
        csi.storage.k8s.io/provisioner-secret-name: rook-csi-rbd-provisioner
        csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
        csi.storage.k8s.io/node-stage-secret-name: rook-csi-rbd-node
        csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph
        # Specify the filesystem type of the volume. If not specified, csi-provisioner
        # will set default as `ext4`.
        csi.storage.k8s.io/fstype: xfs
      # Delete the rbd volume when a PVC is deleted
      reclaimPolicy: Delete

- Create the storage class:

      $ kubectl create -f storageclass.yaml

After you create the storage class, test the Rook operator deployment by creating a Ceph block storage volume and attaching it to other pods to be consumed. To make the test easier, use the WordPress example available in the Rook cloned repo (~/rook/cluster/examples/kubernetes). This example creates a sample app to consume the block storage provisioned by Rook with the WordPress and MySQL apps.

- From the cluster/examples/kubernetes folder, start MySQL and WordPress:

      $ kubectl create -f mysql.yaml
      $ kubectl create -f wordpress.yaml

- Check the WordPress public page by using the new Load Balancer public IP address provisioned in the preceding step.
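Applications consume the new storage class through a PersistentVolumeClaim. As a minimal sketch of what such a claim could look like, the shell function below writes a manifest that requests a volume from the rook-ceph-block storage class defined earlier. The claim name (demo-block-claim), the 1Gi size, and the function name are placeholders chosen for illustration and are not taken from the Rook sample files.

```shell
#!/bin/sh
# Writes a sample PersistentVolumeClaim manifest to the given path.
# The claim name and requested size are hypothetical placeholders.
write_pvc_manifest() {
  out="$1"
  cat > "$out" <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-block-claim
spec:
  storageClassName: rook-ceph-block
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
EOF
}

# Example use against a live cluster (not run here):
#   write_pvc_manifest demo-pvc.yaml
#   kubectl create -f demo-pvc.yaml
#   kubectl get pvc demo-block-claim
```

If provisioning works, the claim should move to the Bound status once Rook has created the backing RBD image.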

Verify the Cluster Status

To finish, connect to the toolbox tools pod, verify the Ceph cluster status again, and see the new pool and objects that were created with the sample applications.

    $ kubectl exec -it rook-ceph-tools-POD_NAME -n rook-ceph bash
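Instead of copying the toolbox pod name by hand, you can look it up by label. The sketch below assumes the toolbox pod carries the label app=rook-ceph-tools, as in the Rook sample toolbox.yaml, and the helper names (toolbox_pod, ceph_in_toolbox) are hypothetical.

```shell
#!/bin/sh
# Helpers for running ceph commands in the Rook toolbox pod.
# Assumes the toolbox pod is labeled app=rook-ceph-tools; names are hypothetical.

# Prints the name of the first toolbox pod in the rook-ceph namespace.
toolbox_pod() {
  kubectl -n rook-ceph get pod -l app=rook-ceph-tools \
    -o jsonpath='{.items[0].metadata.name}'
}

# Runs `ceph <args>` inside the toolbox pod without opening a shell.
ceph_in_toolbox() {
  kubectl -n rook-ceph exec "$(toolbox_pod)" -- ceph "$@"
}

# Example use against a live cluster (not run here):
#   ceph_in_toolbox status         # overall cluster health
#   ceph_in_toolbox osd pool ls    # should list replicapool
#   ceph_in_toolbox df             # per-pool usage after the sample apps start
```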

Congratulations! A Ceph cluster with Rook is now running in Container Engine for Kubernetes, and WordPress and MySQL pods are using the block volumes provisioned by Rook.

Conclusion

Combining Container Engine for Kubernetes with the Rook storage framework capabilities gives you a robust and scalable storage platform that’s production ready and easy to deploy. For more information, see the Container Engine for Kubernetes documentation and rook.io. If you want to experience Rook on Container Engine for Kubernetes for yourself, sign up for an Oracle Cloud Infrastructure account and start testing today!