Enterprises want to migrate their existing workloads to the cloud to gain performance, elasticity, and agility for their applications. However, refactoring those applications as cloud native can be expensive and time consuming. This post shows you how to leverage several Oracle Cloud Infrastructure services to run WebLogic domains for your existing Java EE applications in the cloud and gain these benefits without rewriting the apps.

There are several ways of running WebLogic in Oracle Cloud Infrastructure, from taking a DIY approach to using the Oracle Java Cloud Service. However, running WebLogic on Kubernetes offers a balance among the level of automation, portability, and the ability to customize multiple domains. Creating and managing multiple domains in a Kubernetes cluster is simplified by the use of the WebLogic Kubernetes Operator, an open source tool that bridges the gap between the WebLogic administrative tools and modern solutions for management and monitoring applications, such as ELK Stack (Elastic Stack), Prometheus, and Grafana.

Architecture Overview

This end-to-end solution for running WebLogic domains that follow the Maximum Availability Architecture guidelines uses the following Oracle Cloud Infrastructure services:

- Container Engine for Kubernetes: Although the operator supports any generic Kubernetes cluster, in this post we use the Container Engine for Kubernetes (sometimes abbreviated OKE). The Container Engine for Kubernetes cluster has three worker nodes spread out on different physical infrastructure, so that the WebLogic clusters themselves have the highest availability.

- File Storage: To further comply with best practices for running WebLogic domains, the domain configuration files are stored on shared storage that is accessible from all WebLogic servers in the cluster, on File Storage. This setup offers the following advantages: you don’t need to rebuild Docker images for changes in the domain configuration, backup is faster and centralized, and logs are stored by default on persistent storage.

- Load Balancing: By default, the WebLogic servers (admin or clustered managed servers) created by the operator are not exposed outside the Container Engine for Kubernetes cluster, so to expose an application to the outside world, we use the Load Balancing service.

- Registry: Optionally, the Docker images can be stored in a private Oracle Cloud Infrastructure Registry repository.

Before You Start

Building the environment requires the following utilities to manage the infrastructure and the cluster:

- Oracle Cloud Infrastructure CLI: https://docs.cloud.oracle.com/iaas/Content/API/SDKDocs/cliinstall.htm

- Kubectl: https://kubernetes.io/docs/tasks/tools/install-kubectl/

- Docker: https://docs.docker.com/install/ and a Docker Hub account

- Helm: https://github.com/helm/helm

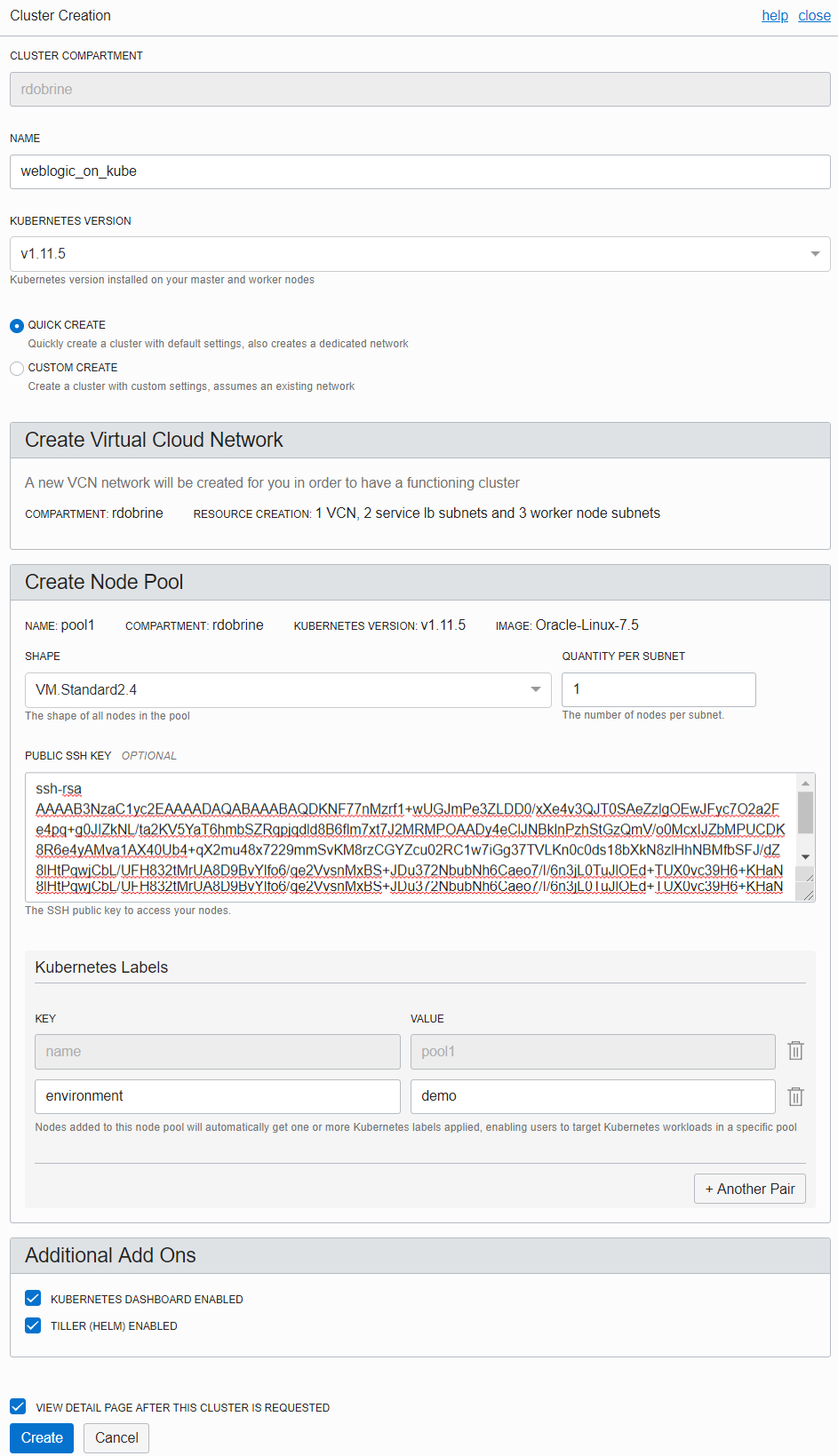

Step 1: Create the Kubernetes Cluster

Before creating a new managed Kubernetes cluster, ensure that the correct Identity and Access Management (IAM) policy is set up and that you have an existing virtual cloud network (VCN) to support your cluster. The Container Engine for Kubernetes documentation describes these requirements in detail.

The Oracle Cloud Infrastructure Console also has a Quick Create option that automatically creates a new VCN to support the cluster. To use this option, log in to the Console and follow these steps:

- In the navigation menu, select Developer Services and then select Container Clusters (OKE).

- Click Create Cluster.

- Enter a name for the cluster.

- Select the Kubernetes version.

- Select Quick Create.

- Create a node pool. Select the shape of each worker node in the cluster and the number of nodes in each subnet. For demanding workloads, Container Engine for Kubernetes lets you select bare metal instances or even GPU instances on which to run your containers.

- Accept the default (enabled) for the add-ons, Tiller (Helm) and Kubernetes Dashboard.

- Click Create.

- After the cluster is created, click Access Kubeconfig and follow the instructions for pointing the kubectl utility to the newly created cluster.

- You can then confirm the configuration by running kubectl cluster-info or kubectl config view.
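Beyond kubectl cluster-info, it can help to confirm that all worker nodes have registered and report Ready before moving on. The helper below is a minimal sketch, not an OKE or kubectl feature: the function name count_ready_nodes is made up for this example, and it assumes that kubectl already points at the new cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: counts the nodes whose STATUS column is exactly
# "Ready" in the output of `kubectl get nodes`. Nodes reporting NotReady or
# Ready,SchedulingDisabled are not counted.
count_ready_nodes() {
  kubectl get nodes --no-headers |
    awk '$2 == "Ready" { n++ } END { print n + 0 }'
}
```

For the three-node cluster described in this post, calling count_ready_nodes should print 3 once every worker node has joined.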

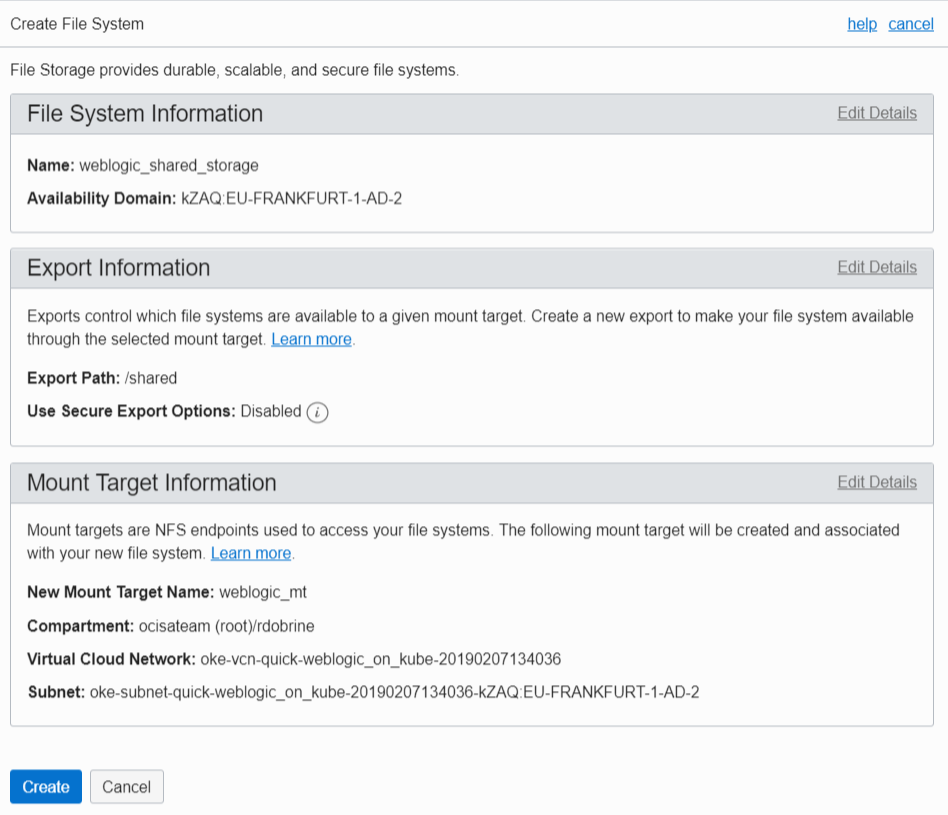

Step 2: Create and Configure the File Storage Service

Back in the Console, create and configure File Storage as follows:

- In the navigation menu, select File Storage.

- Click Create File System.

- Click Edit Details and enter the name of the file system, the export path, and the name of the mount target. Select the same VCN as the cluster and one of the subnets where the worker nodes are running. When you do this, you don’t have to set up additional VCN routing and security to have the file system reachable from the worker nodes, although you can do that if you require better isolation.

Step 3: Install the WebLogic Kubernetes Operator on Container Engine for Kubernetes

The WebLogic Kubernetes Operator is installed with a Helm chart. It also requires a Kubernetes secret for authenticating to Docker Hub so that the operator image can be pulled.

- Create the secret as follows:

      kubectl create secret docker-registry dockerhub-secret \
        --docker-server=docker.io \
        --docker-username='<your_docker_user>' \
        --docker-password='<your_docker_pass>' \
        --docker-email='<docker_email_address>'

  Alternatively, you can pull the operator and WebLogic images from Docker Hub and then push them to your private registry running in Oracle Cloud Infrastructure.

- Clone the operator Git repository on your workstation.

- Edit the weblogic-kubernetes-operator/kubernetes/charts/weblogic-operator/values.yaml file, or a copy of it such as the custom_values.yaml file used in the install command below, and specify the custom values for the operator.

  We recommend that you install the operator in its own namespace and that you use a separate service account. Container Engine for Kubernetes comes with a preconfigured service account for Tiller.

- Before running Helm, ensure that Tiller matches the version of your local Helm:

      helm init --upgrade --service-account tiller

- Install the operator in the namespace (weblogic-operator-namespace in this example):

      [opc@workvm weblogic-kubernetes-operator]$ helm install kubernetes/charts/weblogic-operator \
        --name weblogic-operator \
        --namespace weblogic-operator-namespace \
        --values kubernetes/charts/weblogic-operator/custom_values.yaml

  If the installation is successful, a new custom resource definition that defines the WebLogic domains is created. You can check it by running kubectl get crd and helm status weblogic-operator.
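The custom values file passed to helm install only needs to contain the settings that differ from the chart defaults. As a rough sketch, the script below writes such a file for the setup described in this step. The service account name weblogic-operator-sa is hypothetical, and the key names (serviceAccount, domainNamespaces, imagePullSecrets) are assumed from the chart's values.yaml, so check them against the version you cloned.

```shell
#!/usr/bin/env bash
# All names below are examples; adjust them to your environment.
OPERATOR_SA="weblogic-operator-sa"   # hypothetical service account name
DOMAIN_NS="domain1"                  # namespace the operator should manage
PULL_SECRET="dockerhub-secret"       # Docker Hub secret created earlier in this step
VALUES_FILE="custom_values.yaml"     # written to the current directory

# Write only the overrides; everything else falls back to the chart defaults.
# Key names are an assumption based on the chart's values.yaml.
cat > "$VALUES_FILE" <<EOF
serviceAccount: "${OPERATOR_SA}"
domainNamespaces:
  - "${DOMAIN_NS}"
imagePullSecrets:
  - name: "${PULL_SECRET}"
EOF
```

The operator namespace and the service account need to exist before helm install runs (for example, kubectl create namespace weblogic-operator-namespace followed by kubectl create serviceaccount -n weblogic-operator-namespace weblogic-operator-sa). Because pods can reference image pull secrets only in their own namespace, the Docker Hub secret also has to be created in the operator's namespace.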

Step 4: Create a New WebLogic Domain on Container Engine for Kubernetes

Start by creating a new namespace for the domain. In this example, it is domain1. The operator includes several scripts that make it easy to generate manifests for creating the Kubernetes resources. We’ll use some of these scripts in the following sequence:

- Create a secret to store the WebLogic domain credentials:

      weblogic-kubernetes-operator/kubernetes/samples/scripts/create-weblogic-domain-credentials/create-weblogic-credentials.sh \
        -u weblogic -p '<some_password>' -d domain1 -n domain1 -s domain1-weblogic-credential

- Create a persistent volume and a persistent volume claim to make the shared file system accessible from the pods.

  Edit the /home/opc/weblogic-kubernetes-operator/kubernetes/samples/scripts/create-weblogic-domain-pv-pvc/create-pv-pvc-inputs.yaml file and set the parameters to point the persistent volume to the mount target and the export path of the file system. For this example, the values are as follows:

      namespace: domain1
      weblogicDomainStorageType: NFS
      weblogicDomainStorageNFSServer: 10.0.96.4
      weblogicDomainStoragePath: /shared

  Save the file, and from the same directory, run the following script. The manifest files are created in the output directory, and the -e flag also creates the persistent volume and persistent volume claim resources in Kubernetes.

      ./create-pv-pvc.sh -i create-pv-pvc-inputs.yaml -o ./pv-pvc-output -e

  Check that the persistent volume and the persistent volume claim are created and have the Bound status by running kubectl get pv (persistent volumes are cluster-scoped, so no namespace is needed) and kubectl get pvc -n domain1.

- Create a WebLogic domain. The domain configuration files are stored on the shared storage.

  Edit the /home/opc/weblogic-kubernetes-operator/kubernetes/samples/scripts/create-weblogic-domain/domain-home-on-pv/create-domain-inputs.yaml file and set the properties of your new domain, such as the admin server port, the admin server name, and the number of managed servers in the cluster.

  Then, in the same directory, run the script with the -e flag to directly create the resources in Kubernetes:

      ./create-domain.sh -i create-domain-inputs.yaml -o ./create-domain-output -e

  This script generates the necessary manifests and also creates the domain resources in Kubernetes. You can verify that the domain has been successfully created and started by checking the domain resource (kubectl get domains -n domain1) and the state of the WebLogic servers, each running in its own pod (kubectl get pods -n domain1).

You now have a running WebLogic domain in the cloud, on shared storage.
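The servers can take a few minutes to start, so a single kubectl get pods call may show pods that are not ready yet. The following helper is a small sketch for checking progress from a script; the name count_running_pods is invented for this example, and it relies only on the standard column layout of kubectl get pods.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints how many pods in a namespace have STATUS
# "Running" and a READY column in which all containers are ready (1/1, 2/2, ...).
count_running_pods() {
  local namespace="$1"
  kubectl get pods -n "$namespace" --no-headers |
    awk '{ split($2, r, "/"); if (r[1] == r[2] && $3 == "Running") n++ }
         END { print n + 0 }'
}
```

Call count_running_pods domain1 repeatedly until the number matches the admin server plus the managed servers you configured.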

Step 5: Manage the New Domain with the WebLogic Operator

The operator allows you to perform the following tasks:

- Modify the domain configuration by using the typical WLST scripts, the WebLogic Deploy Tooling, or the Configuration Overrides.

- Manage lifecycle operations, such as WebLogic server start, stop, and restarts, and WebLogic cluster scaling operations. You can do this by using REST calls, kubectl, or autoscaling with the use of WLDF policies.
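Scaling through kubectl does not have to be interactive. As a sketch, the helper below sets the replica count with a JSON patch so that it can run from a script. The function name scale_wls_cluster is hypothetical, and the patch path assumes that the domain resource keeps its clusters in a spec.clusters list and that the cluster to scale sits at the given zero-based position.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sets the replica count of one cluster entry in a
# domain resource. Assumes the cluster lives at spec.clusters[<index>].
# The JSON Patch "add" operation is used because it sets the field whether
# or not replicas is already present on that entry.
scale_wls_cluster() {
  local domain="$1" namespace="$2" index="$3" replicas="$4"
  local patch
  patch=$(printf '[{"op":"add","path":"/spec/clusters/%d/replicas","value":%d}]' \
    "$index" "$replicas")
  kubectl patch domain "$domain" -n "$namespace" --type=json -p "$patch"
}
```

With the names used in this post, scale_wls_cluster domain1 domain1 0 3 would request three managed servers for the first cluster in the list.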

For example, to start new managed servers in your domain, edit the domain resource and set the replicas field of the cluster to the desired number of servers:

    kubectl edit domain domain1 -n domain1

    - clusterName: cluster-1
      replicas: 3

Step 6: Expose Java EE Applications with Load Balancing

Let’s see how a sample application can be deployed and exposed to end users over the internet.

Deploy the application by using the WebLogic Scripting Tool (WLST) or, if you prefer a web interface, use the WebLogic Administration console, which is not exposed externally by default. You could create a NodePort service to make the console accessible from the internet, but ensure that you understand the security implications of this change.

After the application is deployed, let’s check the services that are available on the domain’s namespace:

    [opc@workvm weblogic-kubernetes-operator]$ kubectl get services -n domain1
    NAME                        TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)              AGE
    domain1-admin-server        ClusterIP   None           <none>        30012/TCP,7001/TCP   4d
    domain1-cluster-cluster-1   ClusterIP   10.96.57.119   <none>        8001/TCP             1h
    domain1-managed-server1     ClusterIP   None           <none>        8001/TCP             4d
    domain1-managed-server2     ClusterIP   None           <none>        8001/TCP             4d

To expose the sample application, let’s create a service of type LoadBalancer. The Oracle Cloud Infrastructure provisioner then creates a new load balancer in the Container Engine for Kubernetes VCN.

    cat << EOF | kubectl apply -f -
    apiVersion: v1
    kind: Service
    metadata:
      name: domain1-cluster1-lb-ext
      namespace: domain1
      labels:
        app: domain1-cluster1
      annotations:
        service.beta.kubernetes.io/oci-load-balancer-shape: "100Mbps"
        service.beta.kubernetes.io/oci-load-balancer-backend-protocol: "HTTP"
        service.beta.kubernetes.io/oci-load-balancer-tls-secret: "ssl-certificate-secret"
        service.beta.kubernetes.io/oci-load-balancer-ssl-ports: "443"
    spec:
      type: LoadBalancer
      ports:
      - name: https
        port: 443
        targetPort: 8001
      selector:
        weblogic.clusterName: cluster-1
        weblogic.domainUID: domain1
    EOF
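The manifest references a secret named ssl-certificate-secret, which is expected to exist in the same namespace as the service so that the HTTPS listener on port 443 can be configured. For a test setup, one option is a self-signed certificate. The sketch below assumes that openssl is installed on your workstation; the function name create_lb_tls_secret and the common name you pass in are placeholders for this example, and a production deployment would use a certificate issued by a real certificate authority.

```shell
#!/usr/bin/env bash
# Hypothetical helper: generates a self-signed certificate (testing only)
# and stores it as a Kubernetes TLS secret.
create_lb_tls_secret() {
  local namespace="$1" secret_name="$2" common_name="$3"
  local workdir rc
  workdir=$(mktemp -d) || return 1

  # Self-signed certificate, valid for 365 days, no passphrase on the key.
  openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
    -keyout "$workdir/tls.key" -out "$workdir/tls.crt" \
    -subj "/CN=${common_name}" || { rm -rf "$workdir"; return 1; }

  kubectl create secret tls "$secret_name" -n "$namespace" \
    --key "$workdir/tls.key" --cert "$workdir/tls.crt"
  rc=$?

  rm -rf "$workdir"   # do not leave the private key on disk
  return "$rc"
}
```

For the service above, the call would be create_lb_tls_secret domain1 ssl-certificate-secret followed by the host name that clients will use. Create the secret before applying the service manifest.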

Checking the services again, we can retrieve the public IP address of the new load balancer:

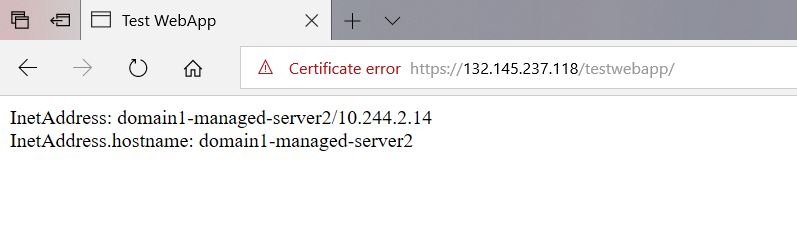

    [opc@workvm weblogic-kubernetes-operator]$ kubectl get services -n domain1
    NAME                        TYPE           CLUSTER-IP      EXTERNAL-IP       PORT(S)              AGE
    domain1-admin-server        ClusterIP      None            <none>            30012/TCP,7001/TCP   4d
    domain1-cluster-cluster-1   ClusterIP      10.96.57.119    <none>            8001/TCP             1h
    domain1-managed-server1     ClusterIP      None            <none>            8001/TCP             4d
    domain1-managed-server2     ClusterIP      None            <none>            8001/TCP             4d
    domain1-cluster1-lb-ext     LoadBalancer   10.96.154.210   132.145.237.118   443:30862/TCP        4d
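If you want to pick up the address from a script instead of reading it from the table, kubectl can print just that field with a JSONPath expression. The wrapper below is a minimal sketch, and its name lb_external_ip is made up for this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the external IP address that Kubernetes reports
# for a LoadBalancer service. The output is empty while the load balancer is
# still being provisioned.
lb_external_ip() {
  local service="$1" namespace="$2"
  kubectl get service "$service" -n "$namespace" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}
```

A quick smoke test could then be curl -k "https://$(lb_external_ip domain1-cluster1-lb-ext domain1)/" followed by your application's context path, where -k is needed only if the certificate is self-signed.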

Accessing the load balancer's public IP address with a browser shows the sample app, which displays the IP address and hostname of the managed server serving it.

Considerations

Container Engine for Kubernetes brings the benefit of integrating with other Oracle Cloud Infrastructure services. For example, instead of creating the file system and then referencing it from the operator scripts, you could create your persistent volume by using the Oracle Cloud Infrastructure provisioner, as described in this blog post: Using File Storage Service with Container Engine for Kubernetes.

If you plan to use an Oracle Cloud Infrastructure load balancer as described in this post, note that at the time this post was published, the public IP address of the load balancer could not be reserved. As a result, every time you re-create the LoadBalancer service in Kubernetes, you get a new public IP address.