We’re pleased to announce that response caching is available in API Gateway!

APIs allow you to share information with partners and can open the doors to new opportunities. The most successful APIs are responsive and scale to meet demand. With increased load, backend systems can struggle to scale. Even if the backend systems can handle the volume, applying a cache lowers the latency of the API, which gives consumers a better experience.

Use case

Say that we need to answer the question, “Who are our top 10 customers?” Previously, this process was manual: a spreadsheet was e-mailed to interested parties. The IT department came up with a way to automate this process and started to create a dashboard for the executives. Fortunately, they realized early that members of the field-sales team also need this information and anticipated further consumers in the future. So, the IT department created an API that powers the executive dashboard and any other approved clients.

In the early days, this solution worked, but as clients were added, the API began to encounter performance issues. Calculating the top 10 customers can be compute-intensive, especially if multiple backend systems coordinate through an integration layer, or if the backend systems can't deliver responses at the scale needed to support the clients. Growth in API usage can also be a concern as the backend systems struggle to keep up with the demand.

In this example, the “top 10” doesn’t change rapidly. For example, if we get the list of the top 10 customers in the morning, another request a few minutes or hours later doesn’t need the response to be recalculated. This trade-off is often described as eventual consistency, a concept related to the CAP theorem: we give up a reasonable amount of consistency in exchange for performance.
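The trade-off can be made concrete as a maximum acceptable age for a cached answer. The sketch below is purely illustrative: `is_fresh`, `max_age_seconds`, and the one-hour tolerance are hypothetical names and values chosen for this example, not API Gateway settings.

```python
def is_fresh(cached_at: float, now: float, max_age_seconds: float) -> bool:
    """Return True if a response cached at `cached_at` is still young enough to reuse."""
    return (now - cached_at) <= max_age_seconds


# Assumed tolerance: a "top 10" list may be up to one hour old.
ONE_HOUR = 3600.0

# The list was computed at t = 0 (say, 09:00).
reuse_at_0915 = is_fresh(cached_at=0.0, now=15 * 60.0, max_age_seconds=ONE_HOUR)
reuse_at_1030 = is_fresh(cached_at=0.0, now=90 * 60.0, max_age_seconds=ONE_HOUR)
```

With these assumed numbers, a request at 09:15 can reuse the morning's list, while a request at 10:30 falls outside the tolerance and would trigger a recalculation.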

This use case is a great candidate for caching, so the team uses the caching capability in API Gateway to improve the performance and scalability and reduce the latency of the API.

Feature architecture and benefits

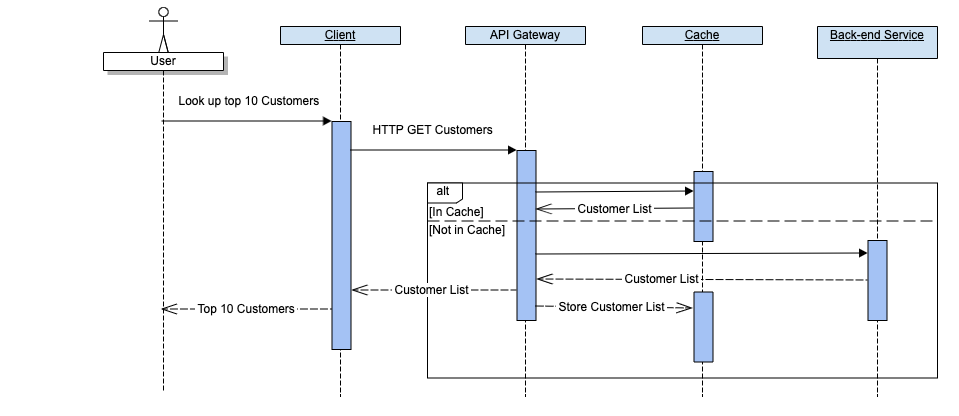

The graphic above shows the interaction sequence. For an API with caching enabled, the gateway can retrieve the response directly from the cache instead of invoking the backend service. If the response isn’t in the cache, the gateway invokes the backend service and stores the response in the cache for subsequent access.

The sequence highlights that when the response is in the cache, the gateway can avoid invoking backend services and serve the request much faster. This change results in a performance and scalability increase for the API.
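The hit-or-miss sequence can be sketched in a few lines of Python. This is a minimal in-memory illustration of the general read-through pattern, not how API Gateway is implemented. The `ResponseCache` class, the `top_customers_backend` function, the cache key, and the 300-second time-to-live are all assumptions made for the example.

```python
import time
from typing import Any, Callable, Dict, Tuple


class ResponseCache:
    """Toy read-through cache with one time-to-live (TTL) for all entries."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        # Maps a cache key to (time stored, cached response).
        self._entries: Dict[str, Tuple[float, Any]] = {}
        self.hits = 0
        self.misses = 0

    def get_or_invoke(self, key: str, backend: Callable[[], Any]) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        # Cache hit: the entry exists and has not expired, so skip the backend.
        if entry is not None and now - entry[0] <= self._ttl:
            self.hits += 1
            return entry[1]
        # Cache miss: invoke the backend and store the response for later requests.
        self.misses += 1
        response = backend()
        self._entries[key] = (now, response)
        return response


backend_calls = 0


def top_customers_backend() -> list:
    """Hypothetical stand-in for the expensive 'top 10 customers' calculation."""
    global backend_calls
    backend_calls += 1
    return ["customer-%d" % i for i in range(1, 11)]


cache = ResponseCache(ttl_seconds=300)  # assumed TTL of five minutes
first = cache.get_or_invoke("GET /top-customers", top_customers_backend)
second = cache.get_or_invoke("GET /top-customers", top_customers_backend)
```

In this sketch the first request is a miss that invokes the backend, and the second request is answered from the cache, so the backend runs only once for the two calls.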

With response caching, you can scale your workloads and achieve lower latency for your APIs. This capability benefits APIs that require a high degree of computation, such as connecting to multiple backend systems or performing expensive calculations.

Learn more

Discover API Management in Oracle Cloud native. Learn more about cloud native API Management and response caching in our documentation.