A common strategy for migrating applications to the cloud is to lift and shift them. Application resources can often be moved without significant architectural changes by rehosting the virtual machines (VMs) that contain them, which means recreating each VM on the target platform using the same virtual disks as the source platform. Oracle Cloud Infrastructure (OCI) is a cloud platform that provides various compute options, giving it the ability to support nearly any workload.

When rehosting existing VMs, not all OCI Compute options can be used in every situation. Understanding the current configuration and workload helps determine which options are preferred and which don’t apply.

The following key questions can help you understand an existing VM in preparation to rehost it:

- How are data disks configured on the VM? Is one large disk used, or are there multiple disks? Are multiple disks striped?
- How is the VM accessed?
- Are there any compute, network, or storage performance requirements for the VM?
- Is this the first attempt to migrate this type of VM?

Knowing the answers to these questions helps determine the preparation tasks needed to ensure that the virtual machine runs as expected on OCI.

Preparation overview

The preparation tasks covered in this post are collected from various documentation sites and from scenarios encountered during customer migrations. The goal is to provide enough information for a VM to be rehosted on OCI and either run as expected on the first try or be easy to troubleshoot. The tasks fall into the following categories:

- Storage: Boot volume, block devices, iSCSI initiator
- Network: Network configuration, OS firewall, remote service dependency
- Performance: Paravirtualization
- Troubleshooting: Local accounts, OS and kernel updates, Console access

The following table shows each preparation task, the operating system it pertains to, and a brief overview:

| Task | Operating system | Overview |
| --- | --- | --- |
| Boot volume | Linux | Consolidate files required to boot |
| Block devices | Linux | Reference consistent device names and set remote disk options |
| iSCSI initiator | Windows and Linux | Install tools for iSCSI block volume attachment |
| Network configuration | Windows and Linux | Remove hard-coded MAC and static IP addresses |
| OS firewall | Windows and Linux | Update firewall rules for interface and network location changes |
| Remote service dependency | Windows and Linux | Ability to boot independently |
| Paravirtualization | Windows and Linux | Validate support and install drivers |
| Local user accounts | Windows and Linux | Ability to log in without network connectivity |
| OS and kernel updates | Windows and Linux | Hardware support and security patches |
| Console access | Linux | Output boot message to the Console |

Preparation tasks

The following tasks give guidance when preparing to rehost VMs on OCI. The exact steps and commands vary based on the operating system version.

Single boot volume

For VMs that use the Logical Volume Manager (LVM), which is built on the device-mapper framework, the data of the root (/) filesystem can easily come to span multiple block devices over the VM’s lifetime. Ensuring that the root filesystem doesn’t span multiple devices prevents unexpected launch behavior and a complicated, non-standard boot configuration.

A VM that has expanded to multiple devices over time might have lsblk output similar to the following example. The vg_main-lv_root volume spans xvdb2, xvde, and xvdf.

    # lsblk
    NAME                  MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    xvdb                  202:16   0   60G  0 disk
    ├─xvdb1               202:17   0  500M  0 part /boot
    └─xvdb2               202:18   0 19.5G  0 part
      ├─vg_main-lv_root   249:0    0 55.5G  0 lvm  /
      └─vg_main-lv_swap   249:1    0    4G  0 lvm  [SWAP]
    xvdc                  202:32   0  100G  0 disk /mnt/data01
    xvdd                  202:48   0  200G  0 disk /mnt/data02
    xvde                  202:64   0   20G  0 disk
    └─vg_main-lv_root     249:0    0 55.5G  0 lvm  /
    xvdf                  202:80   0   20G  0 disk
    └─vg_main-lv_root     249:0    0 55.5G  0 lvm  /

You can consolidate all the data to one device by increasing the original disk size, using the pvmove command to evacuate data in the logical volume to the new space, and using vgreduce to remove the spanned device from the volume group. The process is similar to Migrate SAN LUNs (LVM) with the pvmove command.
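The consolidation steps can be sketched as a short shell function. This is a minimal sketch, not a tested procedure. It assumes the names from the lsblk example (vg_main, /dev/xvdb2, /dev/xvde, /dev/xvdf) and assumes that the xvdb2 partition has already been grown to fill the enlarged disk. The pvresize step is added so that LVM sees the new space. The run helper and the consolidate_root_vg function are hypothetical names; by default the helper only prints each command.

```shell
#!/bin/sh
# Dry run by default: print each command instead of executing it.
# Set DRY_RUN=0 to execute for real (as root, after taking a backup).
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

# consolidate_root_vg VG TARGET_PV SPANNED_PV...
# Moves all extents from each spanned physical volume onto TARGET_PV,
# then removes the emptied physical volume from the volume group.
consolidate_root_vg() {
    vg="$1"
    target="$2"
    shift 2
    # Make LVM aware of the extra space on the already-grown partition.
    run pvresize "$target"
    for pv in "$@"; do
        run pvmove "$pv" "$target"
        run vgreduce "$vg" "$pv"
    done
}
```

For the example above, the call would be consolidate_root_vg vg_main /dev/xvdb2 /dev/xvde /dev/xvdf. Review the printed commands before setting DRY_RUN=0.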

Block devices

Block devices are one of the major components that require configuration changes. Block volumes are attached to Compute instances either through iSCSI or with an attachment type that matches the instance’s launch mode. Regardless of the attachment type, validate the entries in /etc/fstab. The OCI Block Volume documentation includes a section on fstab options.

Device names

Because the order in which Linux operating systems detect devices can change with each boot, referencing devices by UUID or LVM name in /etc/fstab gives more consistent mount behavior.

The following /etc/fstab file needs updating. The devices for the /data01 and /data02 mount points reference device names.

    # cat /etc/fstab
    /dev/mapper/vg_ol65000-lv_root / ext4 defaults 1 1
    UUID=8100e2df-2f65-4b3b-8bf0-481203b85126 /boot ext4 defaults 1 2
    /dev/mapper/vg_ol65000-lv_swap swap swap defaults 0 0
    tmpfs /dev/shm tmpfs defaults 0 0
    devpts /dev/pts devpts gid=5,mode=620 0 0
    sysfs /sys sysfs defaults 0 0
    proc /proc proc defaults 0 0
    /dev/sdb1 /data01 ext4 defaults 0 0
    /dev/sdc1 /data02 ext4 defaults 0 0

To fix this issue, use the blkid command to find each device’s UUID, and update the mount entry in /etc/fstab.

    # blkid | grep sd
    /dev/sda1: UUID="8100e2df-2f65-4b3b-8bf0-481203b85126" TYPE="ext4"
    /dev/sda2: UUID="DkrMxH-eFHe-sPcK-N5DZ-4DYR-Nfmr-fQ4ezl" TYPE="LVM2_member"
    /dev/sdb1: UUID="ca501b9d-cdce-46a5-af8c-65176b47090b" TYPE="ext4"
    /dev/sdc1: UUID="a045aece-5078-4304-a000-14affcae9ca5" TYPE="ext4"
    # cat /etc/fstab
    /dev/mapper/vg_ol65000-lv_root / ext4 defaults 1 1
    UUID=8100e2df-2f65-4b3b-8bf0-481203b85126 /boot ext4 defaults 1 2
    /dev/mapper/vg_ol65000-lv_swap swap swap defaults 0 0
    tmpfs /dev/shm tmpfs defaults 0 0
    devpts /dev/pts devpts gid=5,mode=620 0 0
    sysfs /sys sysfs defaults 0 0
    proc /proc proc defaults 0 0
    UUID=ca501b9d-cdce-46a5-af8c-65176b47090b /data01 ext4 defaults 0 0
    UUID=a045aece-5078-4304-a000-14affcae9ca5 /data02 ext4 defaults 0 0
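When a VM has many data disks, the lookup and substitution can be scripted. The following is a minimal sketch that reads fstab text on standard input and writes a converted copy to standard output, so it never edits /etc/fstab in place. The function names lookup_uuid and fstab_to_uuid are hypothetical, and the sketch assumes that device names start with /dev/sd or /dev/xvd.

```shell
#!/bin/sh
# Look up a filesystem UUID. blkid usually needs root to probe devices.
lookup_uuid() {
    blkid -s UUID -o value "$1"
}

# Read fstab text on stdin and write a copy on stdout in which plain
# /dev/sdX or /dev/xvdX sources are replaced by UUID=... sources.
# Lines whose UUID can't be found are passed through unchanged.
fstab_to_uuid() {
    while IFS= read -r line || [ -n "$line" ]; do
        case "$line" in
            /dev/sd*|/dev/xvd*)
                dev=${line%%[[:space:]]*}   # first field: the device path
                rest=${line#"$dev"}         # everything after the device
                uuid=$(lookup_uuid "$dev") || uuid=""
                if [ -n "$uuid" ]; then
                    printf 'UUID=%s%s\n' "$uuid" "$rest"
                else
                    printf '%s\n' "$line"
                fi
                ;;
            *)
                printf '%s\n' "$line"
                ;;
        esac
    done
}
```

A cautious way to use it is to run fstab_to_uuid < /etc/fstab > /tmp/fstab.new, compare the two files with diff, and copy the new file into place only after reviewing the changes.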

Mount options

During the initial launch of the VM, only the boot volume can be attached. You can add more block volumes after the instance is running. Extra block devices listed in /etc/fstab that don’t use the nofail mount option can prevent the host from booting successfully. Also, volumes mounted by iSCSI must include the _netdev mount option to indicate to the mount process that it needs to configure the networking before attempting a mount operation.

Building on the UUID example, the following entries add the nofail option, plus _netdev for iSCSI attachment, to each non-boot volume.

    # cat /etc/fstab
    /dev/mapper/vg_ol65000-lv_root / ext4 defaults 1 1
    UUID=8100e2df-2f65-4b3b-8bf0-481203b85126 /boot ext4 defaults 1 2
    /dev/mapper/vg_ol65000-lv_swap swap swap defaults 0 0
    tmpfs /dev/shm tmpfs defaults 0 0
    devpts /dev/pts devpts gid=5,mode=620 0 0
    sysfs /sys sysfs defaults 0 0
    proc /proc proc defaults 0 0
    UUID=ca501b9d-cdce-46a5-af8c-65176b47090b /data01 ext4 defaults,_netdev,nofail 0 0
    UUID=a045aece-5078-4304-a000-14affcae9ca5 /data02 ext4 defaults,_netdev,nofail 0 0
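Before exporting the VM, it can help to list the mount points that would still block booting. The following minimal sketch prints every fstab mount point that lacks the nofail option. The function name is hypothetical. The sketch skips /, anything under /boot, swap, and the pseudo-filesystems that appear in the example, which might not cover every configuration.

```shell
#!/bin/sh
# list_mounts_without_nofail [FSTAB]
# Print the mount point of each data filesystem entry that doesn't
# include the nofail option. Defaults to /etc/fstab.
list_mounts_without_nofail() {
    fstab="${1:-/etc/fstab}"
    awk '
        # Skip blank lines and comments.
        NF == 0 || $1 ~ /^#/ { next }
        # Skip entries that belong to the boot volume, and swap.
        $2 == "/" || $2 ~ /^\/boot/ || $3 == "swap" { next }
        # Skip pseudo-filesystems.
        $3 ~ /^(tmpfs|devpts|sysfs|proc)$/ { next }
        {
            n = split($4, opts, ",")
            found = 0
            for (i = 1; i <= n; i++) if (opts[i] == "nofail") found = 1
            if (!found) print $2
        }
    ' "$fstab"
}
```

An empty result means that every remaining entry already carries nofail. The check doesn't verify _netdev, which applies only to volumes attached through iSCSI.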

iSCSI initiator

You can attach non-boot block volumes to VMs running in OCI in two ways: with an emulated or paravirtualized attachment (matching the launch mode), or through iSCSI. I/O performance can improve when using iSCSI attachment over the other attachment options, but iSCSI needs extra configuration. You can skip this task if you don’t plan to attach OCI block volumes using iSCSI.

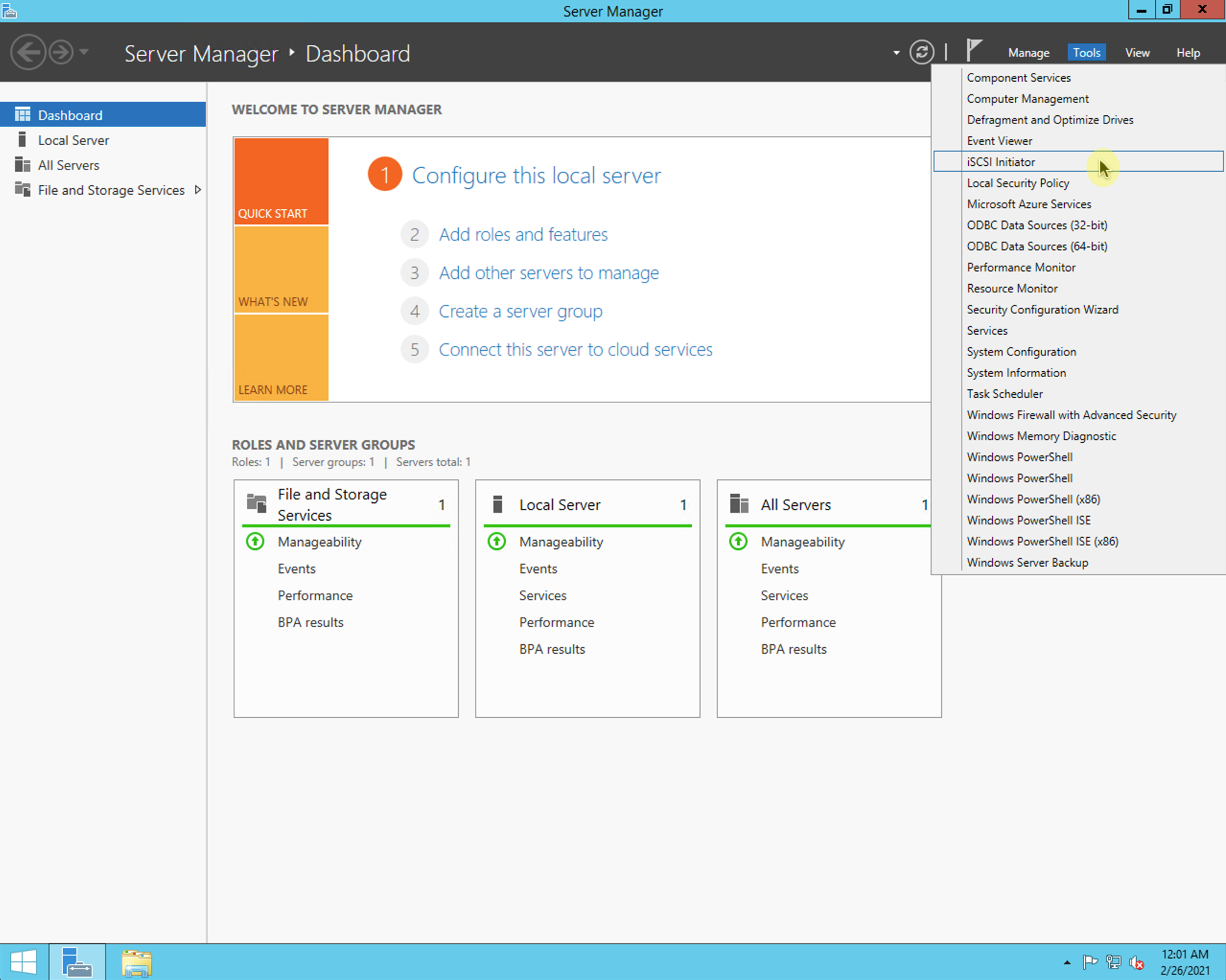

Windows iSCSI

The MSiSCSI Initiator Service is configured using Server Manager.

Linux iSCSI

To add iSCSI support to Oracle Linux 6 hosts, run the following command:

yum install iscsi-initiator-utils

For Linux-based hosts, configure a few recommended iSCSI Initiator Parameters.
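With the initiator tools installed, an iSCSI attachment is typically completed with a few iscsiadm commands after the instance is running. The following minimal sketch prints those commands for a given target. It assumes that you have the IQN and IP address of the volume attachment, and that the target listens on the standard iSCSI port 3260. The run helper and the iscsi_attach function are hypothetical names, and the helper only prints commands unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Dry run by default: print each command instead of executing it.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

# iscsi_attach IQN IP
# Register the target, make it reconnect at boot, and log in.
iscsi_attach() {
    iqn="$1"
    portal="$2:3260"
    run iscsiadm -m node -o new -T "$iqn" -p "$portal"
    run iscsiadm -m node -o update -T "$iqn" -n node.startup -v automatic
    run iscsiadm -m node -T "$iqn" -p "$portal" -l
}
```

Call the function as iscsi_attach followed by the IQN and the IP address of the attachment, and review the printed commands before running them as root.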

Network configuration

Networking is another significant component that requires configuration changes. Depending on how the VM’s existing network is configured, you might need to update multiple settings. When you rehost a VM on OCI, the OCI Networking service provides the instance with a virtual network interface card (VNIC) with the appropriate settings for the attached subnet. The VNIC brings a new MAC address, and DHCP supplies the IP address and DNS resolvers. Any statically configured settings can cause unexpected network behavior or prevent network access completely.

Ensure that no hard-coded MAC addresses, static IP addresses, or static DNS settings are used on the VM.

For Linux VMs, you might need to remove references to MAC addresses and rename network configuration scripts. In Oracle Linux 6, this process includes the persistent networking files in /etc/udev/rules.d/ and the scripts in /etc/sysconfig/network-scripts/. You can control consistent network device naming behavior by editing the GRUB kernel command line to include net.ifnames=0 and biosdevname=0.
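To find the settings that need attention, you can scan the interface scripts for hard-coded values. The following minimal sketch prints each matching line with its file name and line number. The function name is hypothetical, the list of keys is an assumption about which settings are commonly hard-coded, and the loopback script is skipped because its static address is expected.

```shell
#!/bin/sh
# find_static_net_settings [DIR]
# Print lines in ifcfg-* scripts that pin a MAC address, IP address,
# gateway, or DNS server. Defaults to the Oracle Linux 6 location.
find_static_net_settings() {
    dir="${1:-/etc/sysconfig/network-scripts}"
    for f in "$dir"/ifcfg-*; do
        [ -f "$f" ] || continue
        case "$f" in
            */ifcfg-lo) continue ;;   # loopback keeps its static address
        esac
        grep -HnE '^[[:space:]]*(HWADDR|MACADDR|IPADDR|NETMASK|GATEWAY|DNS[0-9]*)=' "$f" || true
    done
}
```

No output means that none of the listed keys were found. On Oracle Linux 6, the persistent naming rules are commonly kept in /etc/udev/rules.d/70-persistent-net.rules, which this sketch doesn't inspect.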

Like secondary block volumes, secondary VNICs can be attached only after the instance is running.

OS firewall

The network changes associated with rehosting can affect firewalls configured at the operating system level. The Windows Network Location Awareness (NLA) service is addressed in the custom image documentation. The default Windows NLA service settings view OCI VNICs as attached to a public network. Sometimes, this setting can affect firewall rules that only allow protocols, like RDP, for private or domain locations. For Linux, more complicated firewalld multi-zone configurations or iptables rule definitions can be affected by the interface name changes.

Remote service dependency

Ensure that any remote service dependencies that the VM has don’t prevent booting when they’re unreachable. For example, configure any persistent NFS mounts to use the nofail option, similar to secondary block volumes. Any applications that start at boot but require remote services to run can be temporarily configured for manual startup and reverted after the instance is functionally validated.

Paravirtualization

OCI provides two launch modes when rehosting VMs: emulated and paravirtualized. Most VMs can launch in emulated mode as-is, while paravirtualized mode requires extra software for Windows and most Linux hosts. The primary benefit of paravirtualization is much higher disk I/O performance.

Windows paravirtualization

Paravirtualization for Windows is provided by the Oracle virtio Drivers for Windows, which are available for all Windows versions that are supported as Compute instances. To download and install the virtio drivers, follow the instructions in Chapter 5 of the Oracle Linux KVM user guide.

Linux paravirtualization

The virtio kernel drivers provide paravirtualization for Linux-based operating systems. The Linux kernel includes support for virtio drivers, starting with version 2.6.25. The OCI documentation for custom Linux images contains a list of supported operating systems tested on OCI. As noted in the documentation, not all operating systems have been tested, so they might not be listed.

Some operating systems already have virtio drivers added to the kernel. To check whether an Oracle Linux 6 host includes virtio drivers in its initramfs, run the following command:

lsinitrd | grep virtio
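Reading the grep output by eye is easy to get wrong, so the check can be turned into a list of what is missing. The following minimal sketch reads an lsinitrd listing on standard input and prints each driver that doesn’t appear as a module file. The function name is hypothetical, and the driver list is an assumption based on the block, network, PCI, and SCSI modules. A driver that is compiled into the kernel rather than built as a module would be reported as missing even though it’s available.

```shell
#!/bin/sh
# missing_virtio_drivers
# Read an lsinitrd listing on stdin and print the name of each
# expected virtio module that isn't present in the listing.
missing_virtio_drivers() {
    initrd_listing=$(cat)
    for drv in virtio_blk virtio_net virtio_pci virtio_scsi; do
        case "$initrd_listing" in
            *"/${drv}.ko"*) ;;        # module file found
            *) echo "$drv" ;;         # not found in the initramfs
        esac
    done
}
```

A typical invocation is lsinitrd | missing_virtio_drivers. No output means that all four modules were found in the initramfs of the running kernel.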

Before adding virtio drivers, update the kernel to the newest available for the operating system and reboot. To add virtio drivers to Oracle Linux 6 hosts, run the following command, replacing the initramfs file name with the one that matches your installed kernel version:

dracut --add-drivers 'virtio virtio_blk virtio_net virtio_pci virtio_ring virtio_scsi virtio_console' -f /boot/initramfs-3.8.13-16.2.1.el6uek.x86_64.img

Local user accounts

Sometimes, a rehosted VM launches successfully to a running state but isn’t accessible by SSH or RDP. Having a local user account that can log in without relying on network services like SSH, DNS, or Active Directory helps you investigate the instance. Local user accounts are especially handy when working with a VM from a source that hasn’t previously been rehosted on OCI. Slight variations in network settings or virtual disks can make a VM appear to have failed on OCI when it only needs a small configuration update.

Local user accounts are beneficial for VM types that haven’t previously been rehosted to OCI.

OS and kernel updates

Updating VMs to the most recent software simplifies troubleshooting and provides a known base for working with Oracle support if you need assistance. For Linux, running the latest available kernel can fix hardware compatibility issues, such as running Oracle Linux 6 on X7 hardware.

Console access

OCI provides access to malfunctioning instances through an instance console connection. Instance console connections are graphical (VNC) or textual (serial), and you can use them with local user accounts to troubleshoot a malfunctioning instance. Textual console output is handy for Linux hosts that haven’t previously been rehosted to OCI.

Windows console

Instance console connections to a Windows host through VNC don’t need extra configuration and work by default.

Linux console

A VNC instance console connection doesn’t require extra configuration for Linux-based hosts but might not provide enough information. For boot failures, like a kernel panic, the output can quickly scroll past the VNC display window. To get the full textual output of the Linux boot process, configure the boot loader to forward output to a serial device. For details on how to enable a serial console on Linux hosts, see the documentation.
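After changing the boot loader, you can confirm that the kernel was actually started with a serial console. The following minimal sketch checks a kernel command line for a console=ttyS0 argument. The function name is hypothetical, and the sketch assumes that the first serial port (ttyS0) is the one used, often written as console=ttyS0,115200. Follow the OCI documentation for the exact value.

```shell
#!/bin/sh
# has_serial_console [CMDLINE_FILE]
# Return 0 if the kernel command line sends console output to ttyS0.
# Defaults to the command line of the running kernel.
has_serial_console() {
    cmdline_file="${1:-/proc/cmdline}"
    for arg in $(cat "$cmdline_file"); do
        case "$arg" in
            console=ttyS0|console=ttyS0,*) return 0 ;;
        esac
    done
    return 1
}
```

For example, has_serial_console && echo "serial console enabled" reports on the running kernel. Because the function reads /proc/cmdline by default, run it after rebooting into the updated boot configuration.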

Final thoughts

Although rehosting a virtual machine doesn’t require significant architectural changes, configuration updates are needed to ensure that the VM operates as expected when moved to OCI. Storage and networking likely involve the most work but are the most critical components for running on Oracle Cloud Infrastructure. To achieve the best performance, add paravirtualization support. If you’re working on rehosting for the first time, having an available local user can help with troubleshooting through an instance console connection. Hopefully, the preparation tasks covered here help you on your journey to the cloud.