Oracle and WEKA are proud to announce the availability of WEKA on Oracle Cloud Infrastructure (OCI). Both companies have been working together to validate WEKA can run on OCI bare metal instances to deliver the highest level of performance that customers demand for their critical workloads. Along with performance, we’ve also validated transparent storage tiering for WEKA by using S3-compatible OCI Object Storage as a low-cost tier. This option enables customers to run at petabyte scale in a high-performance file system by using local NVMe SSDs for hot data and object storage for warm or cold data.

Oracle’s second-generation cloud infrastructure provides a high-performance computing (HPC) bare metal Compute shape (BM.Optimized3.36) with 100-Gbps RDMA over Converged Ethernet (RoCE v2) and 3.8 TB of local NVMe SSD. A 100-Gbps cluster network is instrumental in delivering file system IO throughput at terabytes-per-second scale.

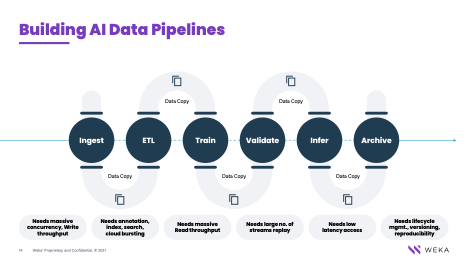

The WEKA data platform provides significant benefits, including easy data access, to various workloads such as Electronic Design Automation (EDA), AI and ML, life sciences, and financial analysis. Traditionally, each step in these workloads' pipelines required you to copy data to an optimized location: first to ingest it quickly, then to perform some type of extract, transform, load (ETL) function, then to run analysis, training, and inference, and finally to send the data to a data lake for archive or other analysis.

WEKA accelerates this process by delivering performance for large and small IO concurrently. No step in the data pipeline requires copying data, which improves the time-to-value of the data.

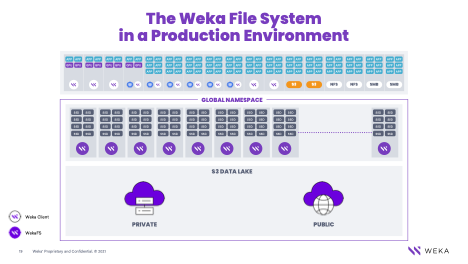

OCI provides compute infrastructure that can be deployed as virtual machine (VM) or bare metal instances. OCI offers many different Compute shapes, enabling customers to choose the best configuration for their workloads. The Compute shapes that are ideal for running WEKA provide 100-Gbps RDMA networking, two 50-Gbps network connections, and local NVMe SSDs that retain data across reboots. WEKA scales into the exabyte range by using OCI Object Storage as a transparent tier. When you write data into WEKA, it always appears in the location where it was written, even if the data has been tiered off to Object Storage. This global namespace capability allows you to have your performant storage and your data lake in one system, reducing complexity and easing data pipeline management.

Architectures

WEKA can be deployed on OCI in the following configurations:

-

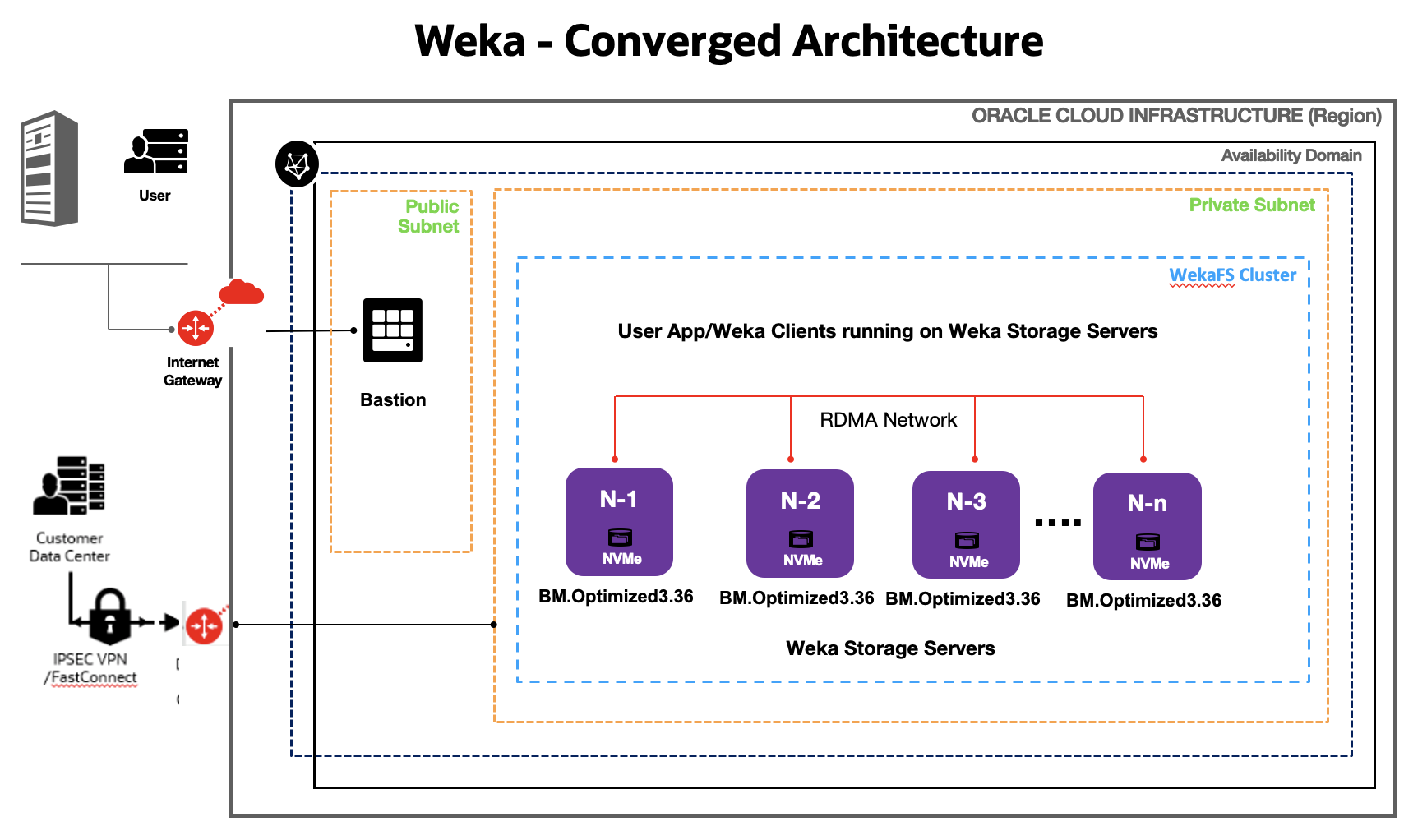

Converged Weka deployment: User application and Weka client deployed on Weka storage servers

-

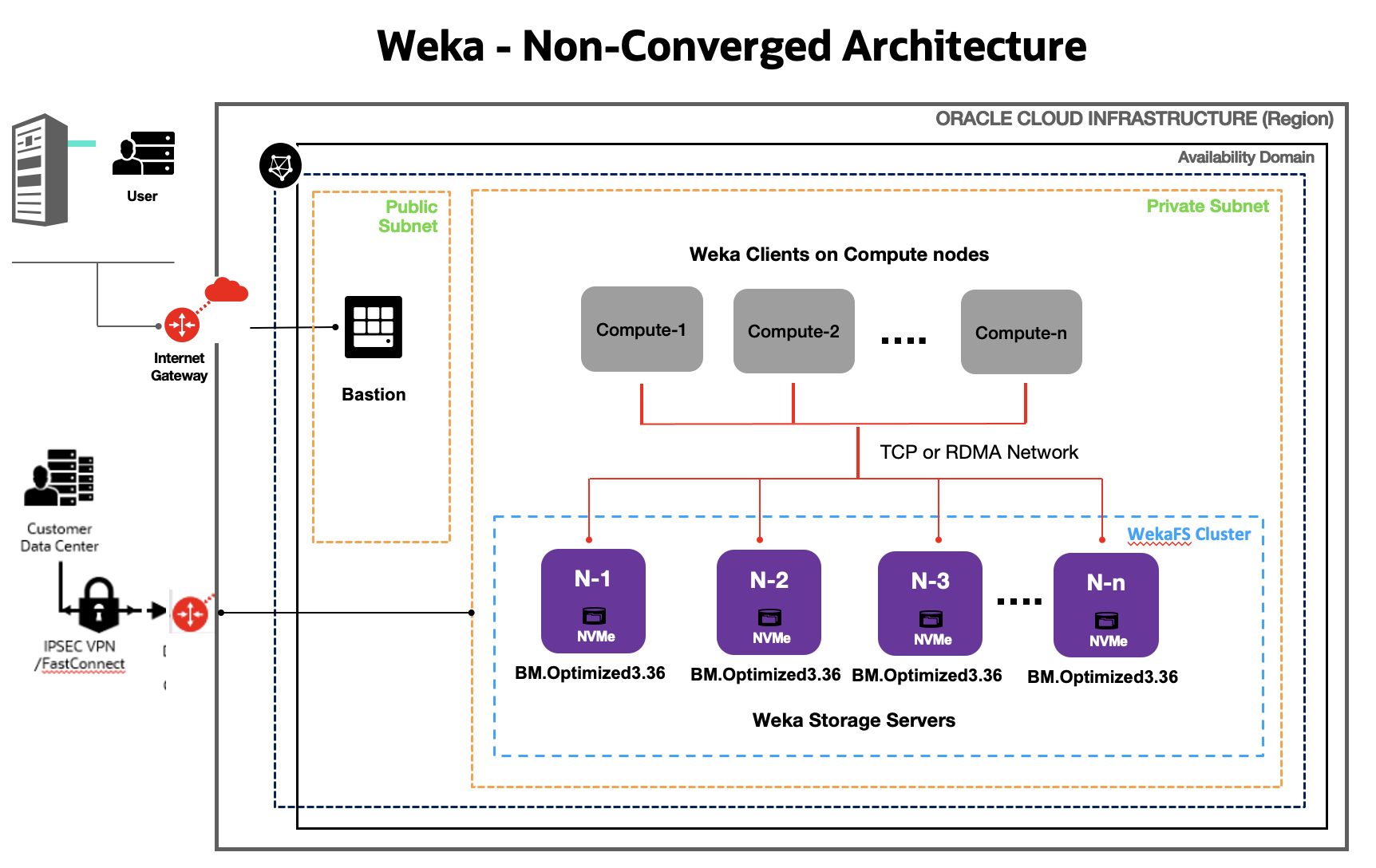

Non-converged Weka deployment: User application and Weka client deployed on separate servers

Figure 1: Converged Weka deployment

Figure 2: Non-converged Weka deployment

Benchmark

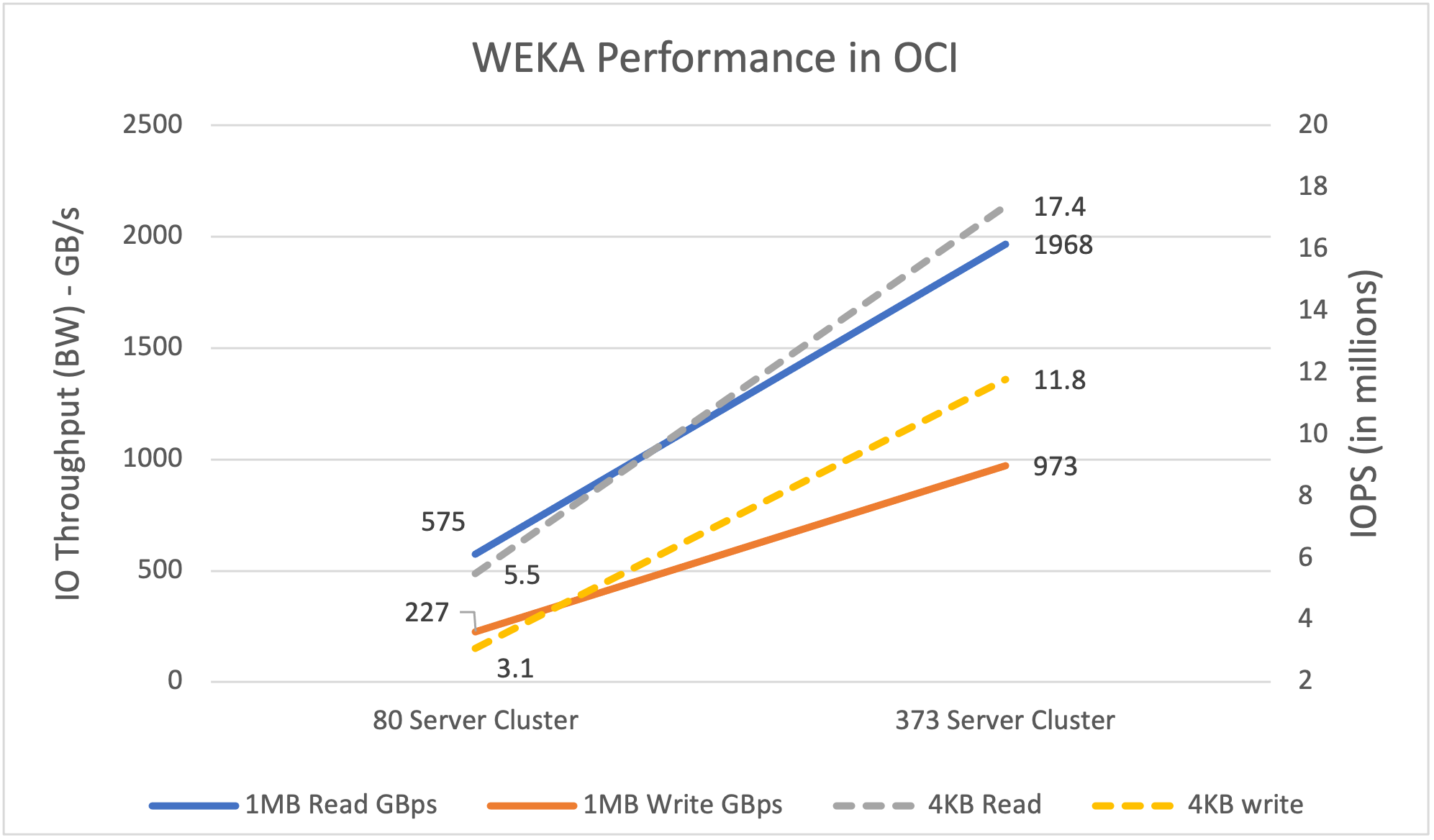

To test WEKA on OCI, we used two cluster sizes: 80 servers and 373 servers. Each server was a BM.Optimized3.36 bare metal Compute shape with 36 physical cores, 512 GB of RAM, an RDMA-enabled 100-Gbps Ethernet network port, and a single 3.84-TB NVMe drive. WEKA was configured to use six physical cores on each server, leaving 30 physical cores available for user workloads and applications. Performance testing was done in a converged manner: the benchmarking workload ran on the spare CPU cores of the same servers that hosted the WEKA storage.
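To make the cluster sizing concrete, the following sketch totals the cores and raw NVMe capacity implied by the per-server figures above. The function and key names are illustrative, and the capacity is raw drive capacity only; usable WEKA capacity after data protection is not stated in this post and is not modeled here.

```python
# Per-server figures come from the benchmark description above.
CORES_PER_SERVER = 36
WEKA_CORES_PER_SERVER = 6
NVME_TB_PER_SERVER = 3.84


def cluster_resources(servers: int) -> dict:
    """Aggregate core counts and raw NVMe capacity for a converged cluster."""
    app_cores_per_server = CORES_PER_SERVER - WEKA_CORES_PER_SERVER
    return {
        "servers": servers,
        "weka_cores": servers * WEKA_CORES_PER_SERVER,
        "app_cores": servers * app_cores_per_server,
        # Raw capacity only; protection overhead is not modeled.
        "raw_nvme_tb": round(servers * NVME_TB_PER_SERVER, 2),
    }


if __name__ == "__main__":
    for size in (80, 373):
        print(cluster_resources(size))
```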

Flexible IO (Fio) was used to generate IO activity on the cluster, with 1-MB blocks to simulate large block workloads and 4-KB blocks to simulate small block workloads. On both clusters, latencies were excellent, with read latencies as low as 108 μs and write latencies as low as 89 μs.
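The exact Fio job parameters used in the benchmark are not given in this post. As a rough sketch of how such a test matrix might be expressed, the following builds Fio command lines for the two block sizes. Only the 1-MB and 4-KB block sizes come from the text; the mount point `/mnt/weka`, the sequential and random access patterns, the IO engine, queue depth, job count, file size, and runtime are all assumptions chosen for illustration.

```python
import shlex


def fio_command(name, rw, block_size, directory="/mnt/weka", runtime_s=60):
    """Build a fio argument list; every value except block_size is an assumption."""
    return [
        "fio",
        f"--name={name}",
        f"--directory={directory}",  # hypothetical WEKA mount point
        f"--rw={rw}",
        f"--bs={block_size}",
        "--direct=1",                # bypass the page cache
        "--ioengine=libaio",         # assumed IO engine
        "--iodepth=16",              # assumed queue depth
        "--numjobs=8",               # assumed parallel jobs per host
        "--size=10g",                # assumed per-job file size
        "--time_based",
        f"--runtime={runtime_s}",
        "--group_reporting",
    ]


# Large block (1 MB) and small block (4 KB) workloads, reads and writes.
JOBS = [
    ("large-read", "read", "1m"),
    ("large-write", "write", "1m"),
    ("small-read", "randread", "4k"),
    ("small-write", "randwrite", "4k"),
]

if __name__ == "__main__":
    for name, rw, bs in JOBS:
        print(shlex.join(fio_command(name, rw, bs)))
```

The script only prints the commands, so it can be reviewed and adjusted before anything is run against a cluster.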

On the 80-server cluster, large block (1 MB) read throughput was 575 GB/s, averaging 7.19 GB/s per server, and write throughput was 227 GB/s. On the 373-server cluster, large block read throughput was 1.968 TB/s and write throughput was 937 GB/s.

For small block (4 KB) workloads, the 80-server cluster delivered 5.5 million read IOPS and 3.1 million write IOPS. The 373-server cluster delivered 17.4 million read IOPS and 11.8 million write IOPS.

| Cluster size | Read BW (1 MB) | Write BW (1 MB) | Read IOPS (4 KB) | Write IOPS (4 KB) |
| --- | --- | --- | --- | --- |
| 80-server cluster | 575 GB/s | 227 GB/s | 5.5 million | 3.1 million |
| 373-server cluster | 1.968 TB/s | 973 GB/s | 17.4 million | 11.8 million |

Table 1: Fio benchmark performance

Graph 1: IO throughput and IOPS performance
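One way to compare the two cluster sizes is to normalize the aggregate results per server. The sketch below does this for read throughput and IOPS using the figures in Table 1, and assumes decimal units (1 TB/s = 1,000 GB/s). The dictionary layout and function names are illustrative and not part of the benchmark tooling.

```python
# Aggregate results from Table 1; 1.968 TB/s is taken as 1968 GB/s (decimal units).
RESULTS = {
    80: {"read_gb_s": 575.0, "read_iops": 5.5e6, "write_iops": 3.1e6},
    373: {"read_gb_s": 1968.0, "read_iops": 17.4e6, "write_iops": 11.8e6},
}


def per_server(servers: int, metric: str) -> float:
    """Average contribution of one server to an aggregate metric."""
    return RESULTS[servers][metric] / servers


def scaling_ratio(metric: str, small: int = 80, large: int = 373) -> float:
    """Per-server result on the large cluster relative to the small one."""
    return per_server(large, metric) / per_server(small, metric)


if __name__ == "__main__":
    for metric in ("read_gb_s", "read_iops", "write_iops"):
        print(
            f"{metric}: "
            f"{per_server(80, metric):,.2f} per server at 80 servers, "
            f"{per_server(373, metric):,.2f} per server at 373 servers, "
            f"ratio {scaling_ratio(metric):.2f}"
        )
```

For read throughput at 80 servers this reproduces the 7.19 GB/s per-server average quoted above.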

The performance that WEKA and OCI can provide to customer workloads is fantastic. With the rise of AI and HPC workloads across various industries, this combination of performance and scale, along with the elasticity that OCI provides, allows you to successfully host modern EDA, life sciences, financial analysis, and even more traditional enterprise workloads on OCI.

Next steps

To learn more about how to deploy WEKA on Oracle Cloud Infrastructure or to run a proof of concept, contact Pinkesh Valdria or ask your Oracle Sales Account team to engage the HPC Storage team.