Oracle Cloud Infrastructure (OCI) preemptible instances allow you to run periodic and short-term workloads at a reduced cost. As a tradeoff, OCI can reclaim these instances when the capacity is needed elsewhere. Oracle Container Engine for Kubernetes (OKE) now supports preemptible instances in your Kubernetes clusters, so you can run your fault-tolerant containerized workloads on preemptible worker nodes.

What are preemptible instances?

Preemptible virtual machines (VMs) are OCI’s equivalent to spot instances. They offer a 50% discount on on-demand VM instance pricing, irrespective of shape or region. Fault-tolerant and interruptible workloads, such as daily builds, automated tests, extract, transform, load (ETL) and batch jobs, and big data analytics, can take advantage of these less expensive instances with lower availability guarantees.

Preemptible VMs use spare compute capacity available in OCI, typically for a short duration when customers scale down their usage temporarily or are in a ramp-up phase. OCI offers this spare capacity to customers to meet their short-term or burstable needs. The only difference between an on-demand VM instance and a preemptible VM instance is that OCI can interrupt a preemptible VM instance with two minutes’ notice when it needs the capacity back.

Fortunately, with simple modifications, modern cloud native applications can be fault-tolerant and resilient to interruptions. This opportunistic preemptible compute capacity allows customers to run highly optimized workloads that aren’t otherwise possible. These benefits make interruptions an acceptable trade-off for many workloads.

Preemptible instances are offered on all OCI VM shapes (except Dedicated VM) and are available in all regions. For more information, see the documentation about preemptible instances.

OKE and preemptible nodes

OKE simplifies the operations of enterprise-grade Kubernetes at scale. It reduces the time, cost, and effort needed to manage the complexities of the Kubernetes infrastructure. It lets you deploy Kubernetes clusters and ensure reliable operations for both the control plane and the worker nodes with automatic scaling, upgrades, and security patching. Kubernetes is a natural fit for running fault-tolerant workloads because it’s designed to handle ephemeral infrastructure. The Kubernetes scheduler, one of the core components of the Kubernetes control plane, assigns workloads to available worker nodes. If a node is deleted, pods managed by a controller such as a Deployment are recreated and scheduled to another available worker node.

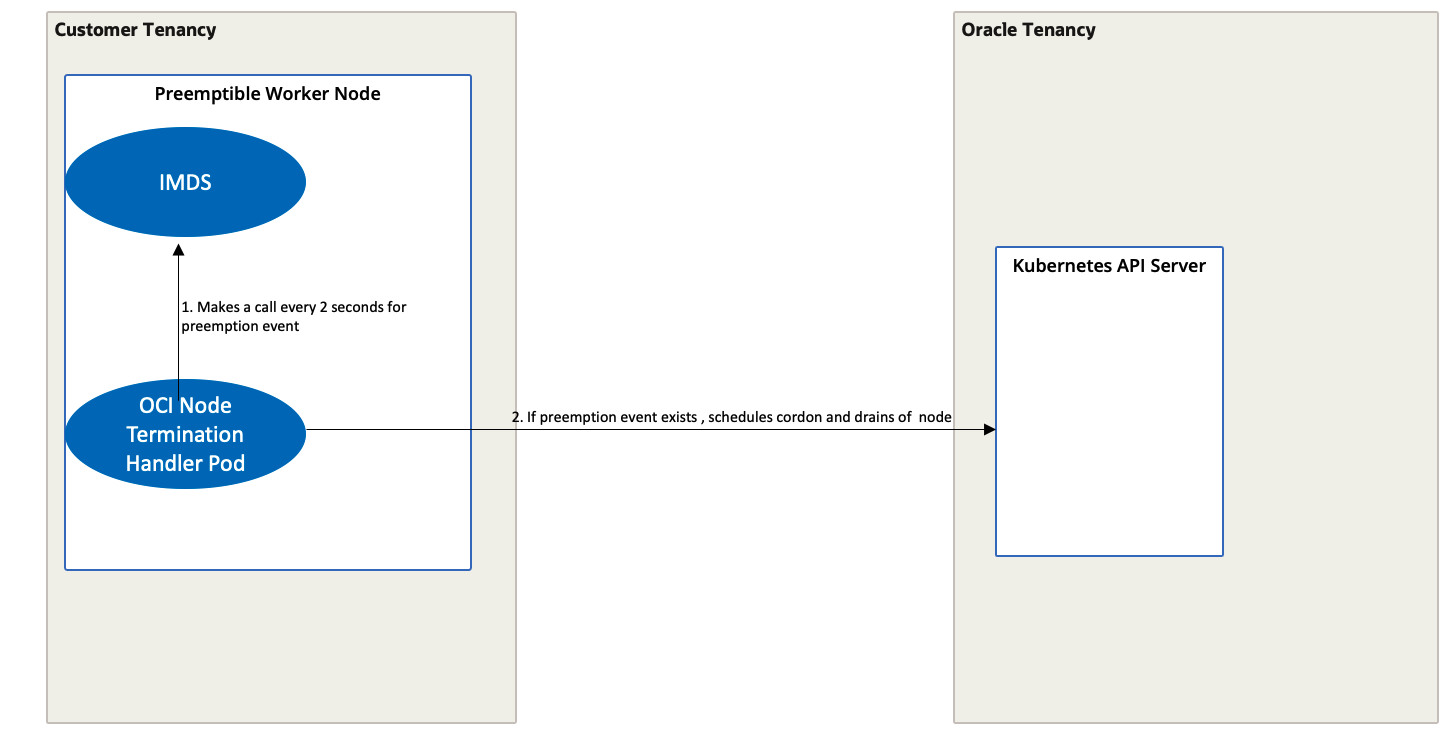

OKE automatically deploys an OCI node termination handler to each preemptible instance in your cluster, so you don’t have to build and deploy that automation yourself. The termination handler runs as a DaemonSet on each preemptible instance and polls the Instance Metadata Service (IMDS) every two seconds to detect a preemption event on the preemptible node. When a preemption event is detected, the node is terminated two minutes after the event was created.

The OCI node termination handler then communicates with the Kubernetes API Server using the OKE cordon and drain feature to cordon the preemptible node to prevent the kube-scheduler from placing new pods onto that node. It then drains the preemptible node to safely evict pods, ensuring that the pod’s containers terminate gracefully and perform any necessary cleanup.
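Because the drain has to finish inside the two-minute preemption window, it can help to bound how long a pod takes to shut down. The following manifest is a minimal sketch, not an OKE requirement: the pod name, the 60-second grace period, and the `preStop` command are illustrative assumptions, while `terminationGracePeriodSeconds` and `lifecycle.preStop` are standard Kubernetes pod fields.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: graceful-worker              # hypothetical name, for illustration only
spec:
  # Upper bound on shutdown time after eviction. The value is an assumption,
  # chosen to stay well under the two-minute preemption notice.
  terminationGracePeriodSeconds: 60
  containers:
  - name: worker
    image: nginx:latest
    lifecycle:
      preStop:
        exec:
          # Runs before the container receives SIGTERM. The sleep is a
          # placeholder for application-specific cleanup.
          command: ["/bin/sh", "-c", "sleep 10"]
  tolerations:
  - key: oci.oraclecloud.com/oke-is-preemptible
    operator: Exists
    effect: NoSchedule
```

If cleanup routinely takes longer than the grace period, the container is killed when the period expires, so long-running jobs are better served by checkpointing their progress.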

How do I schedule my pods to use preemptible nodes?

Preemptible nodes are automatically created with both a Kubernetes label and a taint to control placement of your workloads. A Kubernetes label enables you to use node affinity, which constrains the nodes your pod can be scheduled on based on node labels. While node affinity is a property of pods that attracts them to a set of nodes, taints are the opposite: They allow a node to repel a set of pods. Taints ensure that pods aren’t scheduled to run on a certain node type. Only pods that have a toleration matching the taint can be scheduled on the node type. Critical pods that run on your OKE clusters, such as CoreDNS, automatically have the matching toleration for the preemptible node taint so that they can be scheduled on these nodes.
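As a rough illustration of where the label and taint live, the following excerpt sketches how a preemptible node might appear in the Kubernetes API. The node name is hypothetical, and the `NoSchedule` effect is an assumption inferred from the tolerations used later in this post. Inspect a real node in your cluster to confirm the actual values.

```yaml
apiVersion: v1
kind: Node
metadata:
  name: example-preemptible-node     # hypothetical name, for illustration only
  labels:
    # The label that node affinity rules and nodeSelector match against.
    oci.oraclecloud.com/oke-is-preemptible: "true"
spec:
  taints:
  # The taint that repels pods lacking a matching toleration.
  - key: oci.oraclecloud.com/oke-is-preemptible
    effect: NoSchedule               # assumed effect, inferred from the tolerations in this post
```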

Preemptible nodes are created with the following Kubernetes label:

    oci.oraclecloud.com/oke-is-preemptible=true

Preemptible nodes are created with the following Kubernetes taint:

    oci.oraclecloud.com/oke-is-preemptible

Run on preemptible nodes with an on-demand node as backup

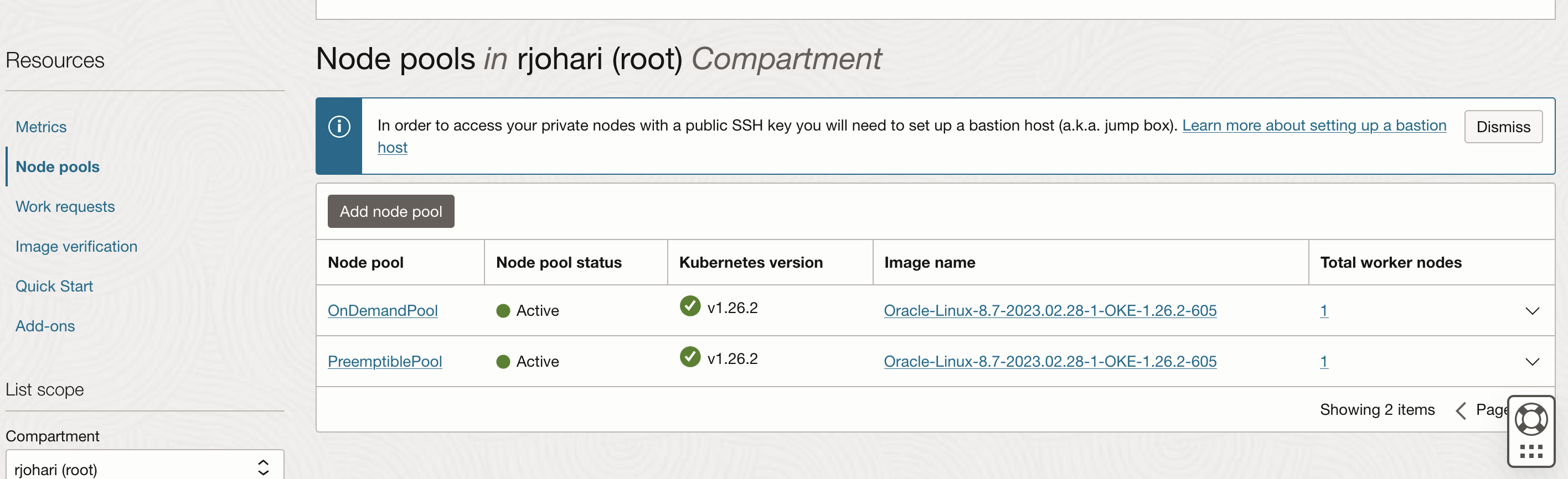

This tutorial deploys a pod with a preference for a preemptible node. If a preemptible instance is unavailable, the pod uses a regular on-demand node as a backup. The tutorial has two node pools with managed nodes. One node pool has a managed node with on-demand capacity, and the other node pool has a managed node with preemptible capacity.

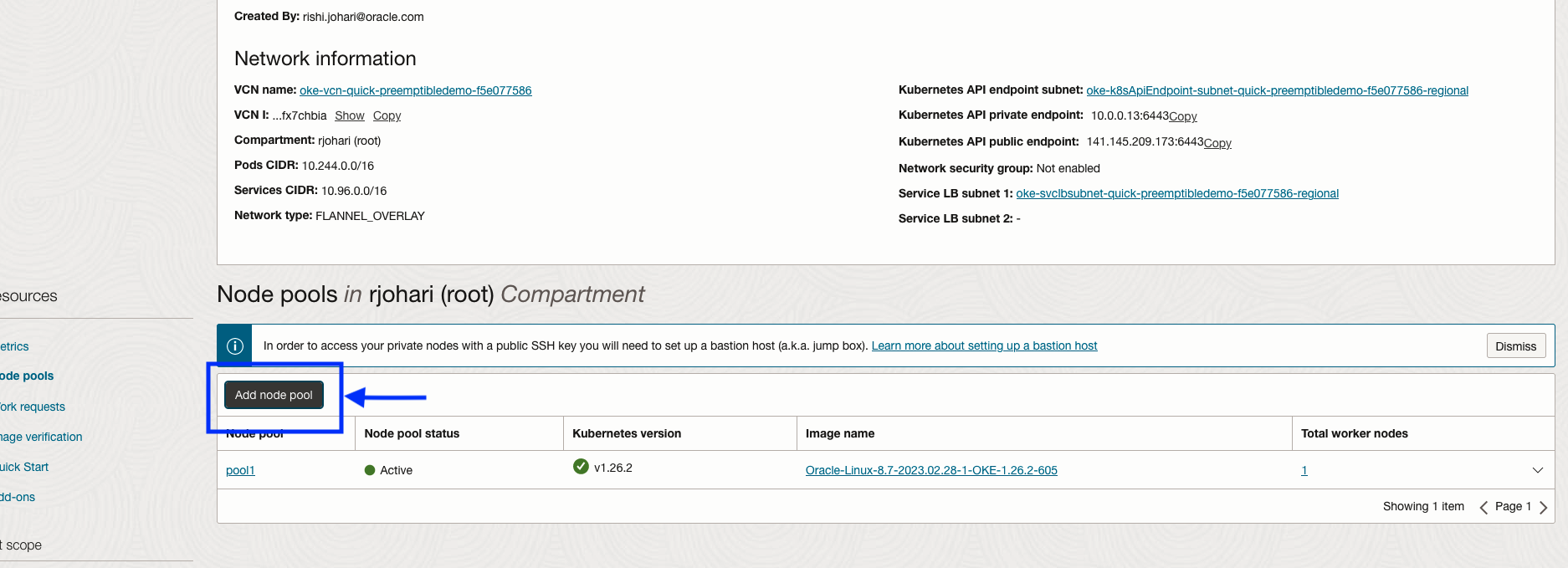

This tutorial requires an OKE cluster. To get started with OKE cluster creation, see Using the Console to create a Cluster with Default Settings in the Quick Create workflow. When creating your cluster, keep all the defaults but reduce the node count from 3 to 1.

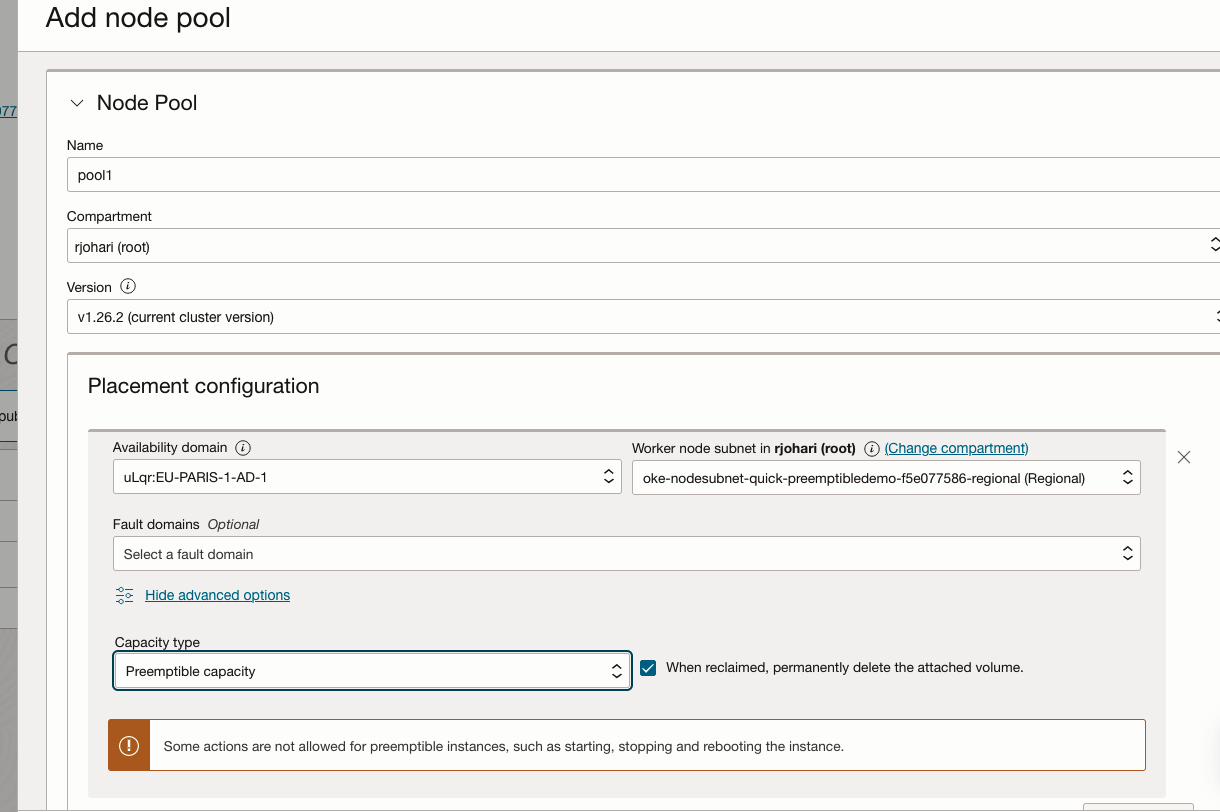

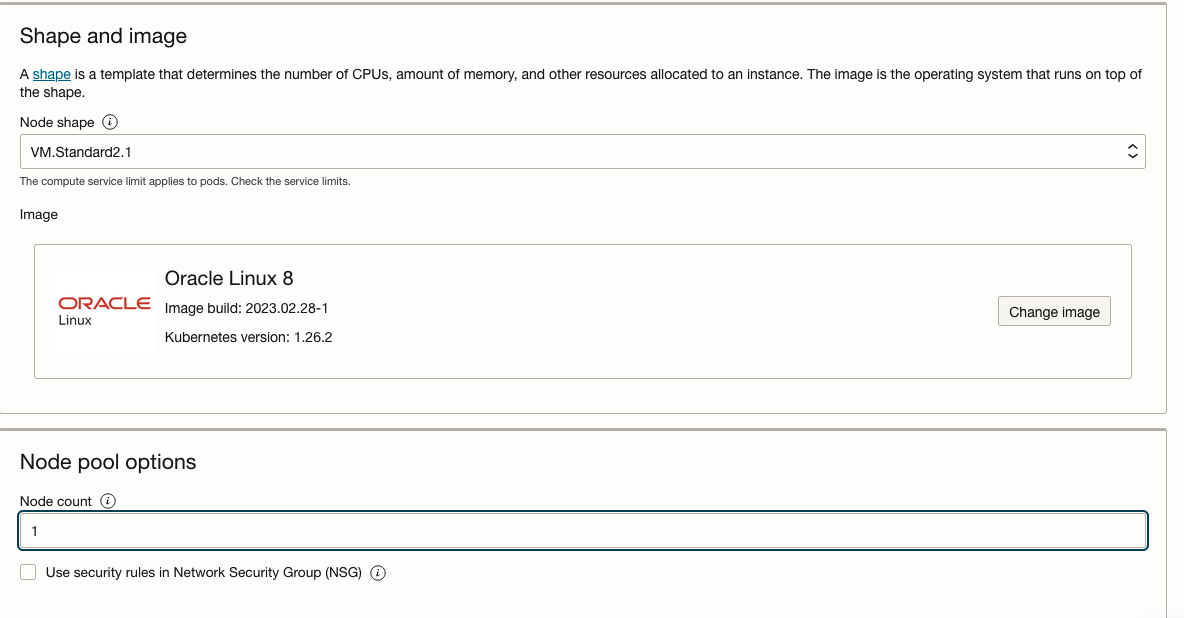

Create a node pool of one VM standard 1.1 node and choose preemptible capacity. You create this additional node pool in the OKE cluster from the prerequisite step.

Create another node pool with preemptible capacity. Keep the default settings but change the following fields:

- Choose any availability domain.
- Choose the worker node subnet you created from the Quick Create workflow.
- Choose preemptible capacity. You can leave “When reclaimed, permanently delete the attached volume” checked.
- Choose shape VM.Standard2.1.
- Reduce the number of nodes from 3 to 1.

Click Add to create the node pool for the preemptible instance. When complete, you can see two node pools with one node each in your cluster.

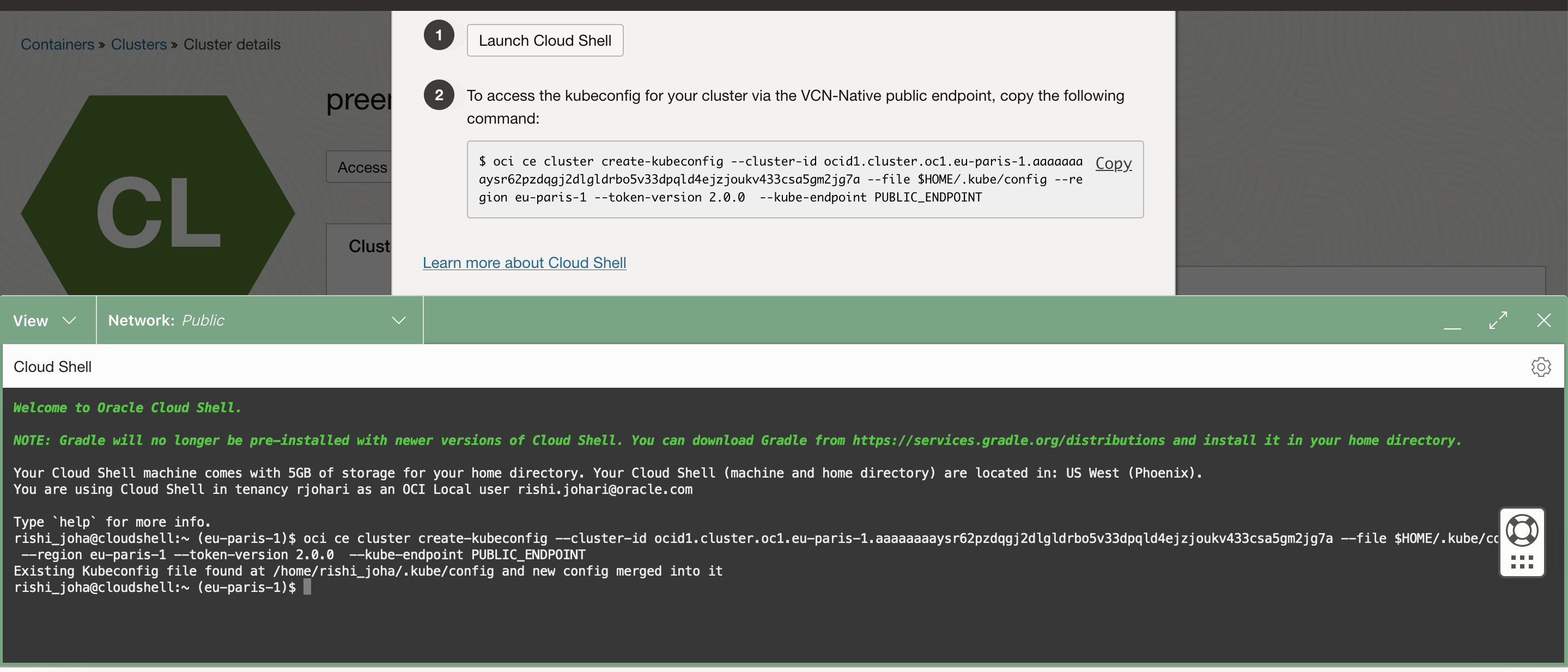

Access your cluster using OCI Cloud Shell. In Cluster details, click Access Cluster, and then select Launch Cloud Shell.

Run the provided OCI CLI command in Cloud Shell to create the kubeconfig to access your cluster.

Create a manifest file for a pod that prefers to be scheduled on the preemptible instance in your Kubernetes cluster:

    vi preemptibleworkload.yaml

Then enter the following information in the manifest file. This manifest creates a strong preference to schedule the pod on the preemptible instance with a weight of 100. To learn more, see Assigning Pods to Nodes.

    apiVersion: v1
    kind: Pod
    metadata:
      name: preemptible-node-affinity
    spec:
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            preference:
              matchExpressions:
              - key: oci.oraclecloud.com/oke-is-preemptible
                operator: In
                values:
                - "true"
      containers:
      - name: with-node-affinity
        image: registry.k8s.io/pause:2.0
      tolerations:
      - key: oci.oraclecloud.com/oke-is-preemptible
        operator: Exists
        effect: "NoSchedule"

Deploy the manifest file with the following command:

    kubectl create -f preemptibleworkload.yaml

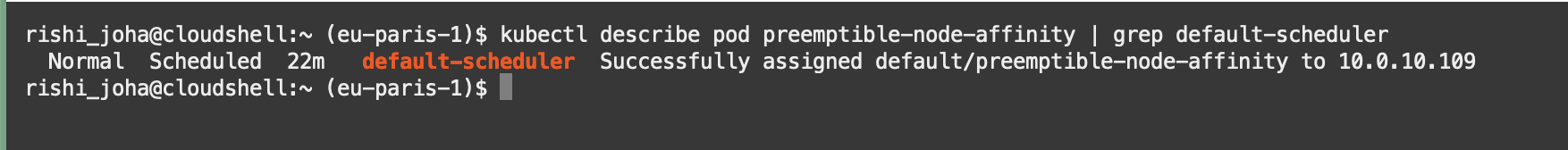

Note the private IP address of the preemptible instance in the OCI Compute console. You can then confirm that the pod was scheduled to that instance by running the following command:

    kubectl describe pod preemptible-node-affinity | grep default-scheduler

Within the output, you can see the pod running on the preemptible instance.

With node affinity, your pod prefers a preemptible instance whenever one is available and falls back to an on-demand node otherwise.
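A bare pod like the one in this tutorial is not recreated after its node is reclaimed and drained. A controller such as a Deployment creates replacement pods, which the scheduler then places using the same preference. The following manifest is a minimal sketch of that idea: the Deployment name, the `app` label, the replica count, and the container are illustrative assumptions, while the affinity and toleration are taken from the tutorial manifest.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: preemptible-preferred        # hypothetical name, for illustration only
spec:
  replicas: 3                        # illustrative replica count
  selector:
    matchLabels:
      app: preemptible-preferred
  template:
    metadata:
      labels:
        app: preemptible-preferred
    spec:
      affinity:
        nodeAffinity:
          # Soft preference: use preemptible nodes when available,
          # otherwise fall back to any other schedulable node.
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            preference:
              matchExpressions:
              - key: oci.oraclecloud.com/oke-is-preemptible
                operator: In
                values:
                - "true"
      containers:
      - name: web
        image: nginx:latest
      tolerations:
      # Allows, but does not force, placement on tainted preemptible nodes.
      - key: oci.oraclecloud.com/oke-is-preemptible
        operator: Exists
        effect: NoSchedule
```

When a preemptible node is drained, the ReplicaSet behind the Deployment creates replacement pods, and those pods land on the on-demand node if no preemptible capacity is left.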

Run diverse workloads in a single Kubernetes cluster

Some customers run diverse workloads on a single OKE cluster. They prefer this option over creating multiple clusters because it eases the operational burden of managing multiple Kubernetes clusters. In this case, you might opt to run fault-tolerant workloads alongside stateful workloads on the same cluster.

In this scenario, we want the fault-tolerant workloads to run only on preemptible instances, while the stateful workloads need a guarantee of node availability, so they can run only on on-demand instances. The pod specification for the fault-tolerant workload therefore needs both the toleration and a node selector for the Kubernetes label to ensure that it runs only on a preemptible instance.

A pod specification can look like the following example:

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80
      nodeSelector:
        oci.oraclecloud.com/oke-is-preemptible: "true"
      tolerations:
      - key: oci.oraclecloud.com/oke-is-preemptible
        operator: Exists
        effect: "NoSchedule"

When running a pod that needs to be scheduled to a node running on-demand capacity in the same cluster, you can have a pod specification without a toleration for the preemptible taint. You need a nodeSelector only if you want the pod to be placed on a specific on-demand capacity instance type, such as a flexible instance, as seen in the following code block:

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80
      nodeSelector:
        node.kubernetes.io/instance-type: "VM.Standard.E3.Flex"

Conclusion

Preemptible instances offer a predictable saving to rein in costs as your cloud infrastructure footprint grows. The tradeoff of using this capacity type is that OCI can reclaim the instances at any time, which means only fault-tolerant workloads are suitable to run on preemptible instances. Kubernetes is a natural fit for these instances because it’s designed to handle ephemeral infrastructure, enabling you to run fault-tolerant workloads using Container Engine for Kubernetes and save on costs.

For more information on the concepts in this blog post and Oracle Cloud Infrastructure, see the following resources: