We recently worked with a customer looking to deploy a managed Kubernetes cluster using Oracle Container Engine for Kubernetes (OKE). The cluster used the native pod networking (NPN) option and had its resources spread across two compartments: network resources were deployed to one compartment while the cluster, node pool, and instance resources were deployed to another. The customer ran into challenges deploying this topology into their tenancy and reached out to us for help. While the OKE documentation is comprehensive, pulling all the appropriate pieces together for this particular but common scenario can be challenging.

This blog curates the various, disparate documentation sources into an approach that customers with similar requirements can follow. The target reader is an Oracle Cloud Infrastructure (OCI) tenancy administrator looking to implement a similar approach. The steps detailed were used to solve this customer’s problem and unblock their deployment. We believe that readers can use the prescriptive guidance provided to properly configure their deployments.

Background

Oracle Container Engine for Kubernetes (OKE) is a managed container service that has been a prime destination for OCI workloads for over half a decade. OKE provides a Cloud Native Computing Foundation (CNCF)-certified Kubernetes environment tailored to hosting your microservices or deploying custom or packaged applications in a highly available, managed fashion. Over the last year, OKE has introduced a number of improvements, including the ability to create clusters with native pod networking. This capability allows OKE users to make Kubernetes pods directly routable within their virtual cloud networks (VCNs) and apply OCI networking capabilities like security lists, network security groups (NSGs), and VCN flow logs directly to their pods. This capability is also advantageous to anyone moving to OKE from competitive cloud platforms where a native container network interface (CNI) is the default, reducing the need to rearchitect when lifting and shifting their deployments.

OCI has continued to refine and extend its best-practices landing zone reference architecture for a secure, scalable, and manageable tenancy design that fosters proper segregation of duties. This reference architecture is structured to comply with the cloud provider benchmarks laid out by the Center for Internet Security (CIS) and is a starting point for most of our customers. A key aspect of the landing zone, and something that we see with most of our customers, is the separation of network resources from compute resources into separate OCI compartments.

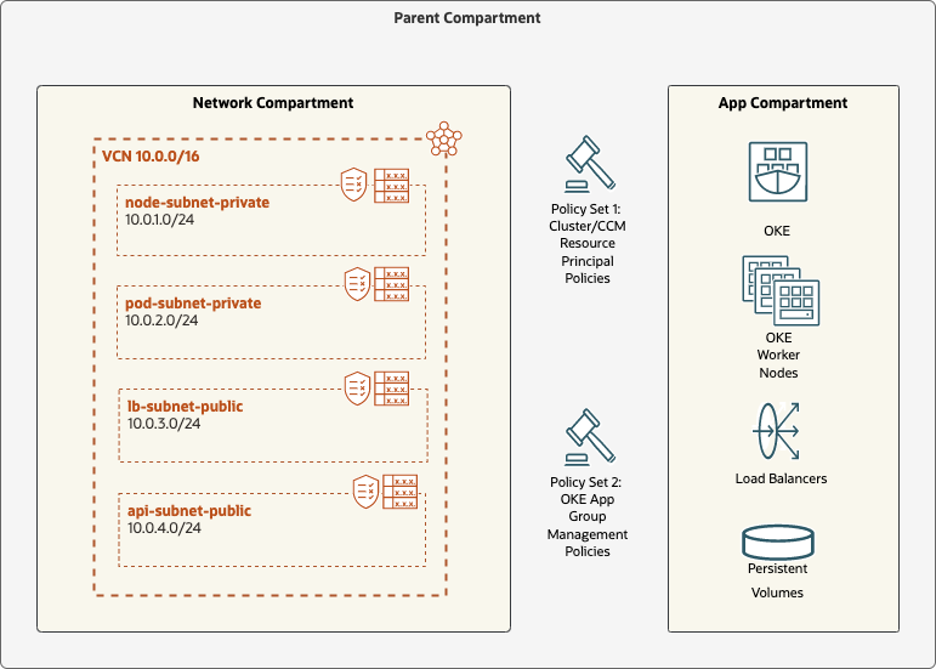

In the scenario covered in this blog, a customer has distinct network and application compartments. An application administrator needs the ability to deploy and manage an OKE cluster in the application compartment while using network resources, such as VCNs, network security groups, and routing tables, precreated in the network compartment by the network administrators. The application administrator can’t create more network resources directly, while the OKE cluster itself has the ability to allocate whatever resources are necessary to ensure proper operation, such as private IP addresses for pods.

The following graphic visually depicts the reference setup that was tested:

The following steps describe how each portion of the environment is set up, which pages of the documentation are relevant to review, and any relevant highlights of the setup.

Step 1: Compartment setup

In our test setup, we modeled the environment with a common parent compartment called split-network-test containing two child compartments: network and app. In our example, the policies depicted in the first image were placed in the split-network-test compartment, though they can exist in any compartment above the app and network compartments in the hierarchy. For details on how to create an OCI compartment, see Creating an Oracle Cloud Infrastructure Compartment.
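To make the placement rule concrete, here is a minimal sketch in Python that models the compartment tree from this post as a plain dictionary and lists the compartments that sit above both app and network. The `PARENTS` mapping and the function names are hypothetical helpers for illustration only; they are not part of any OCI SDK.

```python
# Hypothetical model of the compartment tree used in this post.
# Each key is a compartment name; each value is its parent (None = top of our model).
PARENTS = {
    "split-network-test": None,
    "network": "split-network-test",
    "app": "split-network-test",
}


def ancestors(compartment, parents=PARENTS):
    """Return the compartments above `compartment`, nearest first."""
    chain = []
    current = parents[compartment]
    while current is not None:
        chain.append(current)
        current = parents[current]
    return chain


def valid_policy_locations(targets, parents=PARENTS):
    """Return the compartments that sit above every target compartment."""
    common = None
    for target in targets:
        above = ancestors(target, parents)
        common = above if common is None else [c for c in common if c in above]
    return common or []


print(valid_policy_locations(["app", "network"]))
```

In this model the only candidate is split-network-test, which matches where we placed the policies in our test setup.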

Step 2: VCN setup in the network compartment

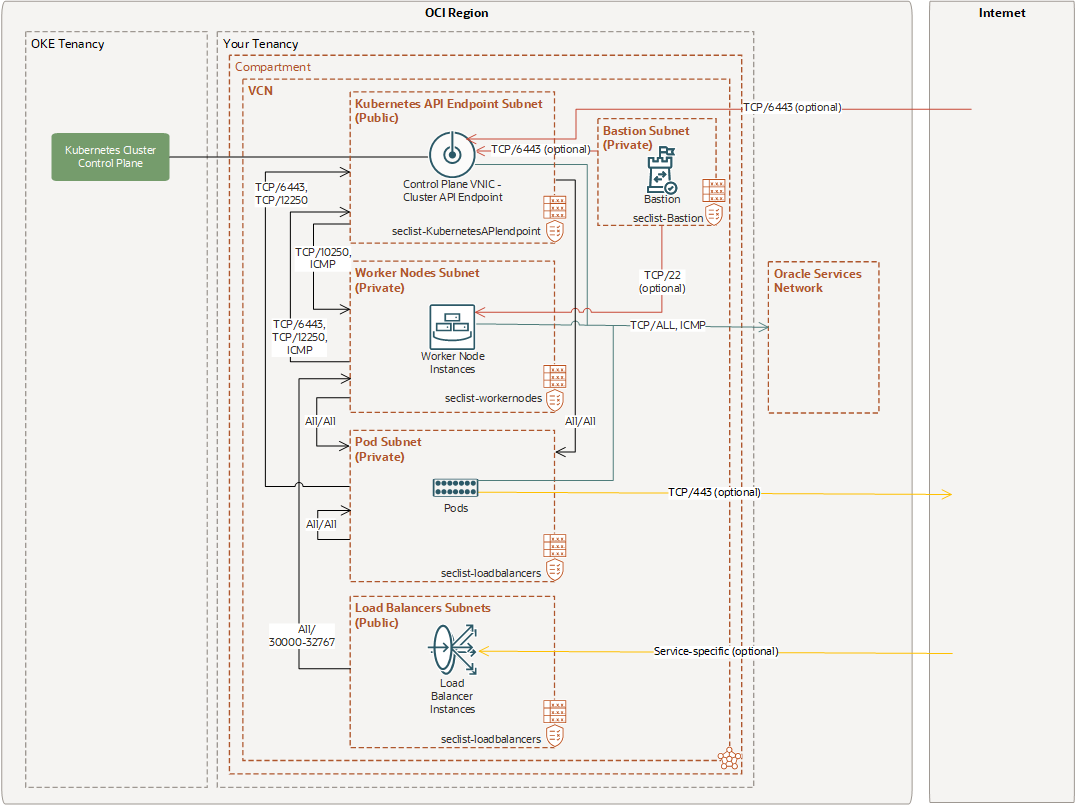

Correctly configuring your VCN is crucial for a properly functioning OKE cluster and is especially important for native pod networking (NPN) configurations because so much more of the VCN surface area is exposed to OKE for interaction. All the details are described in the network configuration documentation. In setting up this architecture, we found the example network configurations particularly helpful. Four reference configurations are provided, covering the four permutations of Flannel versus NPN CNIs and public versus private API endpoints. In our setup, we followed the steps for Example 3, which can be visualized in the following graphic.

Carefully note all the routing rules and security list rules described in this scenario. You must implement them precisely either with security lists or network security groups. The bastion subnet and rules are optional. In our setup, we used the public load balancer subnet as a bastion subnet with the relevant rules, such as SSH ingress on port 22.
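Because NPN pods draw their addresses from a VCN subnet, it can help to sanity-check a subnet plan before creating anything. The following is a minimal sketch using Python's standard `ipaddress` module. The CIDR blocks and subnet names are illustrative assumptions, not values taken from this post or from the customer's environment, so substitute your own.

```python
import ipaddress

# Illustrative values only (assumptions, not from this post).
VCN_CIDR = "10.0.0.0/16"
SUBNETS = {
    "api-endpoint": "10.0.0.0/29",
    "worker-nodes": "10.0.1.0/24",
    "load-balancers": "10.0.2.0/24",
    "pods": "10.0.32.0/19",
}


def check_subnets(vcn_cidr, subnets):
    """Return a list of problems: subnets outside the VCN or overlapping each other."""
    vcn = ipaddress.ip_network(vcn_cidr)
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in subnets.items()}
    problems = []
    for name, net in nets.items():
        if not net.subnet_of(vcn):
            problems.append(f"{name} ({net}) is outside the VCN {vcn}")
    names = sorted(nets)
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if nets[first].overlaps(nets[second]):
                problems.append(f"{first} overlaps {second}")
    return problems


print(check_subnets(VCN_CIDR, SUBNETS))
# Total addresses in the pod subnet; a rough upper bound on pod IPs.
print(ipaddress.ip_network(SUBNETS["pods"]).num_addresses)
```

An empty list means the plan is internally consistent. It says nothing about whether the routing and security rules are correct, which still need to follow the documented example.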

Step 3: Policy setup in parent compartment

The tricky part for many customers is correctly authoring the appropriate policies. When splitting OKE across a network and an application compartment, it is not always obvious which policies need to apply to which compartments. The following setup worked for us, and we include references to the corresponding OKE documentation. Both policies were placed in a parent compartment common to both the app and network compartments.

NPN resource principal policy

When working with NPN, specific resource principal policies are required for the cloud controller manager (CCM) to interact with the VCN. Those policies are described in the documentation as Additional IAM Policy when a Cluster and its Node Pools are in Different Compartments. We found that these policies are also required in the case that a cluster and node pools are in the same compartment, but the network is in a different compartment.

Allow any-user to manage instances in compartment split-network-test where all { request.principal.type = 'cluster' }

Allow any-user to use private-ips in compartment split-network-test where all { request.principal.type = 'cluster' }

Allow any-user to use network-security-groups in compartment split-network-test where all { request.principal.type = 'cluster' }

Our policy statements are a hybrid of the examples in the documentation. While we liked the stronger control of locking down permissions to a given compartment, instead of the entire tenancy, we didn’t feel the need to lock the resource principal down to an individual cluster OCID. We used request.principal.type instead of request.principal.id. The danger of using a specific OCID is that you constantly need to update the policy as your cluster footprint changes, and forgetting to update the policy leads to failures that may be difficult to debug. We observed this malady when debugging the customer’s environment.

These policies are specific in that they permit an OCI resource type, a cluster running within your tenancy, to manage certain resources in each compartment. Our customer saw the “Allow any-user…” prefix and became concerned that this policy is broadly permissive. However, the where clause in the second portion of each statement limits that permission to a specific set of automated resources: principals whose type is cluster.
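As a sketch of how these statements could be kept consistent across environments, the following Python snippet generates the three NPN statements for a given compartment name and checks that every any-user statement carries the cluster condition. The helper names are hypothetical, and the snippet only builds and inspects strings; it does not call OCI.

```python
# Verb/resource pairs taken from the three statements shown above.
NPN_GRANTS = [
    ("manage", "instances"),
    ("use", "private-ips"),
    ("use", "network-security-groups"),
]

# Condition that narrows any-user down to OKE cluster resource principals.
CLUSTER_CONDITION = "where all { request.principal.type = 'cluster' }"


def npn_cluster_statements(compartment):
    """Build the NPN resource principal statements for one compartment."""
    return [
        f"Allow any-user to {verb} {resource} in compartment {compartment} {CLUSTER_CONDITION}"
        for verb, resource in NPN_GRANTS
    ]


def is_scoped_to_clusters(statement):
    """True unless the statement grants to any-user without the cluster condition."""
    if "any-user" not in statement:
        return True
    return "request.principal.type = 'cluster'" in statement


statements = npn_cluster_statements("split-network-test")
for statement in statements:
    print(statement)
print(all(is_scoped_to_clusters(s) for s in statements))
```

A check like `is_scoped_to_clusters` is a simple string test rather than a policy parser, so treat it as a review aid, not as validation of the policy syntax.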

Nonadministrative group policy

You will also need to set policies to allow an OKE administrator to create, update, and manage clusters without needing full administrator permissions or the ability to create network resources. A full treatment of this topic is covered in the Policy Configuration for Cluster Creation and Deployment section of the documentation. While comprehensive, the documentation doesn’t contain a clear example of how to apply these policies to the split-compartment model.

We applied the following policy statements, which were successful in the described scenario:

Allow group app-group to read all-resources in compartment app

Allow group app-group to manage cluster-family in compartment app

Allow group app-group to manage instance-family in compartment app

Allow group app-group to manage volume-family in compartment app

Allow group app-group to read all-resources in compartment network

Allow group app-group to use virtual-network-family in compartment network

Allow group app-group to use network-security-groups in compartment network

Allow group app-group to use vnics in compartment network

Allow group app-group to use subnets in compartment network

Allow group app-group to use private-ips in compartment network

Allow group app-group to use public-ips in compartment network

The group app-group contains the OKE management users. This group is given permission to control instance, cluster, and volume resources in the app compartment, while only using preallocated resources in the network compartment.

Other use cases, such as using OCI Vault or the File Storage Service, potentially require more policy statements. We tested with a simple setup that used compute resources and persistent volumes.
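The group statements above follow a regular pattern, so they can be generated rather than typed by hand. The following Python sketch builds them from the group and compartment names and confirms that nothing in the network compartment is granted at the manage level. The function and constant names are hypothetical, and the snippet produces strings only; applying them to a tenancy is left to your usual tooling.

```python
# Grants in the app compartment, as listed above.
APP_GRANTS = [
    ("read", "all-resources"),
    ("manage", "cluster-family"),
    ("manage", "instance-family"),
    ("manage", "volume-family"),
]

# Grants in the network compartment: read and use only, never manage.
NETWORK_GRANTS = [
    ("read", "all-resources"),
    ("use", "virtual-network-family"),
    ("use", "network-security-groups"),
    ("use", "vnics"),
    ("use", "subnets"),
    ("use", "private-ips"),
    ("use", "public-ips"),
]


def group_statements(group, app_compartment, network_compartment):
    """Build the nonadministrative group statements for the split-compartment model."""
    statements = [
        f"Allow group {group} to {verb} {resource} in compartment {app_compartment}"
        for verb, resource in APP_GRANTS
    ]
    statements += [
        f"Allow group {group} to {verb} {resource} in compartment {network_compartment}"
        for verb, resource in NETWORK_GRANTS
    ]
    # dict.fromkeys drops accidental duplicates while preserving order.
    return list(dict.fromkeys(statements))


policy = group_statements("app-group", "app", "network")
for line in policy:
    print(line)

# The group should never be able to manage anything in the network compartment.
network_lines = [s for s in policy if s.endswith("in compartment network")]
print(all(" to manage " not in s for s in network_lines))
```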

Step 4: Creating the OKE cluster in the app compartment

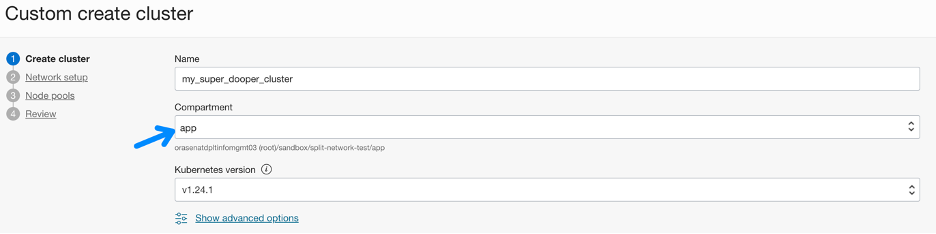

After you complete steps 1–3, step 4 is easy. When creating an OKE cluster in the Oracle Cloud Console, follow the Custom create flow.

Your cluster goes into the app compartment:

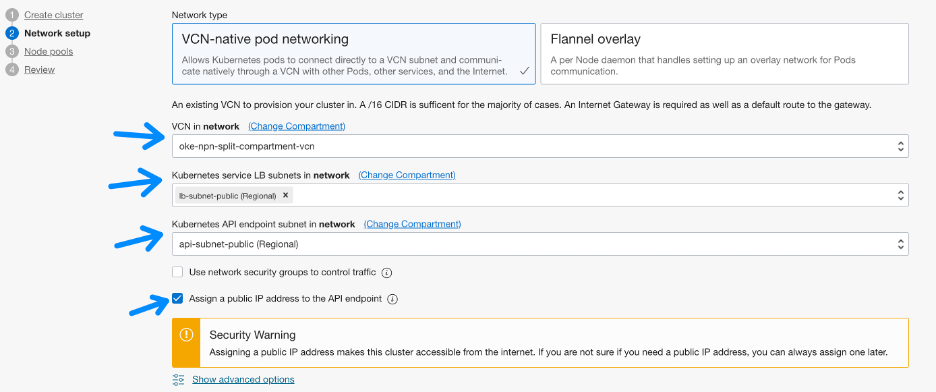

On the second page, click the Change Compartment link three times to switch to the network compartment and select the correct subnet.

If you’re creating a cluster with a public endpoint, the “Assign a public IP address to the API endpoint” selector is optional, but if you don’t check it, your cluster fails because the CCM looks for a public endpoint to configure the cluster. The cluster creation error you get is difficult to correlate with this cause. So, if you are placing your API endpoint in a public subnet, don’t forget to select this checkbox.

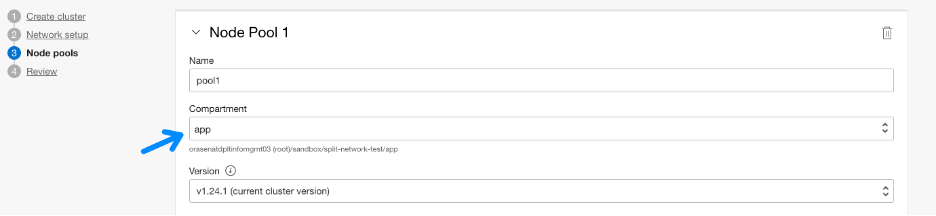

On the third page, your node pools stay in your app compartment:

However, the placement configuration and pod communication sections require you to click the Change Compartment link, switch to your network compartment, and select the appropriate subnets that you created in Step 2.

On the final review page, approve the cluster creation and that’s it! Within a few minutes, you have a fully functioning cluster to start deploying your workloads to.

Conclusion

Deploying an OKE cluster with native pod networking into a CIS-compliant Oracle Cloud Infrastructure landing zone can be tricky. We hope that, by following the steps in this blog, you can avoid some of the pitfalls that others have faced when getting this production-grade configuration up and running.