The financial services sector is a rapid adopter of high-performance computing (HPC), a market projected to reach $56 billion by 2028. Over half of this industry, encompassing banking and fintech, is choosing HPC to enhance operational efficiency, reliability, and decision-making through advanced analytics, artificial intelligence (AI) and machine learning (ML), and the Monte Carlo method. However, financial institutions face significant cost challenges with HPC technologies.

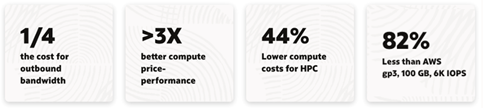

In collaboration with technology partners such as NVIDIA, Oracle Cloud Infrastructure (OCI) addresses these challenges by significantly enhancing HPC grid performance. The first STAC-A2 Benchmark test in OCI with NVIDIA’s advanced GPU technology demonstrates unparalleled price performance in the market. Four results position OCI at the forefront of cost-effective HPC solutions in the financial services sector: a 25% reduction in outbound bandwidth costs, a more than threefold improvement in compute price performance, storage pricing 82% below Amazon Web Services (AWS) gp3 (100 GB, 6K IOPS), and a 44% decrease in HPC compute costs.

How the cloud solves the HPC challenge

Today’s financial institutions navigate a complex landscape, balancing traditional market pressures like regulatory compliance and uncertain markets with emerging challenges, such as new competitors and evolving customer expectations. While user-friendly, compute-intensive platforms aren’t new, the current computational demands are unprecedented, driven by volatile markets, changing customer behaviors, heightened competition, the surge in available data, and the need for rapid decision-making.

The integration of AI, and now generative AI, offers refined insights in areas like lending, capital markets, market risk, and portfolio pricing and optimization, thus balancing profitability with risk management and compliance excellence. Given the challenge of computational power required for AI, the new generation of HPC is a breakthrough for financial institutions, capable of powering demanding applications like the Monte Carlo method on demand at economically viable costs. A critical tool in financial analysis, Monte Carlo methods enable institutions to simulate millions of scenarios, stress-test portfolios, and manage a broad spectrum of financial risks more effectively.
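To make the scenario-simulation idea concrete, here is a minimal sketch of a Monte Carlo value at risk estimate for a single position. It is illustrative only: the function name `simulate_var`, the lognormal (geometric Brownian motion) return model, the 252-day year, and every parameter value are assumptions, not details from the benchmark or from any institution's risk engine.

```python
import math
import random


def simulate_var(position_value, mu, sigma, horizon_days, n_scenarios,
                 confidence=0.99, seed=42):
    """Estimate value at risk by simulating lognormal price scenarios."""
    rng = random.Random(seed)
    dt = horizon_days / 252.0  # fraction of a trading year (assumed convention)
    losses = []
    for _ in range(n_scenarios):
        z = rng.gauss(0.0, 1.0)
        # One-step geometric Brownian motion growth factor over the horizon.
        growth = math.exp((mu - 0.5 * sigma ** 2) * dt
                          + sigma * math.sqrt(dt) * z)
        losses.append(position_value * (1.0 - growth))
    losses.sort()
    # VaR is the loss at the chosen quantile of the simulated loss distribution.
    index = min(int(confidence * n_scenarios), n_scenarios - 1)
    return losses[index]


if __name__ == "__main__":
    var_99 = simulate_var(1_000_000, mu=0.05, sigma=0.25,
                          horizon_days=10, n_scenarios=100_000)
    print(f"10-day 99% VaR: {var_99:,.0f} USD")
```

Each scenario is independent of the others, which is why this kind of workload scales well when more compute capacity is available on demand.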

To apply these advantages, financial institutions need access to HPC capabilities on a cloud platform with a flexible deployment model (on-premises, hybrid, or public cloud) that preserves the ability to consume higher capacity on demand. According to a 2022 McKinsey survey, only 13% of financial services leaders have half of their IT footprint in the cloud, but over 50% plan to shift at least half of their workloads to the cloud in the next five years, starting with their high-performance analytic platform-as-a-service (APaaS) engines. A public cloud that offers unmatched flexibility, scalability, and cost-efficiency allows institutions not only to meet peak demands in the short term but also to adapt to changing business needs and to support a coexistence strategy with a hybrid deployment model, which is inevitably part of the transformation to the public cloud.

OCI delivers this kind of flexible deployment model. For example, a large investment bank, known for its significant market influence and high computing demands, deployed the OCI platform to power its Monte Carlo model for market risk analysis and demonstrated exceptional performance and economic efficiency. The bank initially chose OCI for its cost-effectiveness, but the platform’s security-focused design proved crucial, streamlining the complex onboarding and certification process typical in the financial industry. OCI’s standout feature was its performance: a 54% improvement in processing large Monte Carlo loads, which supports the STAC benchmarking findings in production and indicates that OCI outperforms other cloud providers in speed and efficiency.

The client was also pleased with Oracle’s flexible and customer-centered approach and emphasized that dedicated resources and expertise were pivotal to this successful deployment. Use cases like these position OCI not only as the best platform for HPC in support of Monte Carlo methods, AI and ML, and generative AI, but also as the leading comprehensive solution provider, including people, technology, processes, and best practices for finance.

OCI’s computational power

OCI offers the industry’s most powerful HPC solutions with ultra-high-speed NVIDIA ConnectX RDMA network connectivity. The integrated OCI solutions based on the OCI bare metal BM.GPU4.8 shape can scale to tens of thousands of NVIDIA GPUs, while the NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration at every scale for AI, data analytics, and HPC to tackle the world’s toughest computing challenges. The combined solution also offers a portfolio of other bare metal instances with various CPU and GPU configurations, including NVIDIA H100 and NVIDIA A100 GPUs. This makes it an ideal platform for training generative AI, including conversational applications and diffusion models, and for computer vision, natural language processing, recommendation systems, and more.

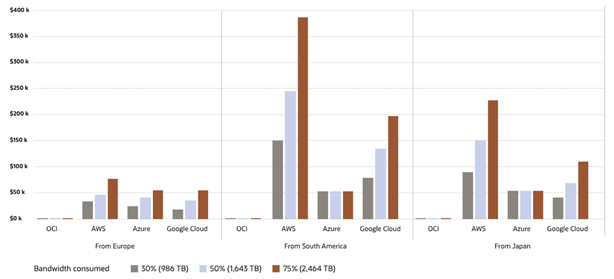

Technical differentiators and pure performance are important, but the delivered performance per dollar is what ultimately matters. OCI is a leader in price-performance compared to other cloud providers, partly because of low list pricing for infrastructure services, commitment-based discounts, and the Oracle Support Rewards program. OCI also provides flexibility with universal cloud credits and no longer-term commitments to specific hardware to get favorable pricing (except for A100 and H100 GPUs). Most OCI security services are free of charge because Oracle is committed to protecting customer data and does not want cost to be a consideration when it comes to security. Finally, OCI doesn’t charge for data egress over a private dedicated connection with FastConnect or between availability domains in a region, which can be a significant and unpredictable cost from other cloud providers.

STAC-A2 shows OCI differentiators

STAC-A2 is the technology benchmark standard based on financial market risk analysis. Designed by quantitative analysts and technologists from some of the world’s largest banks, STAC-A2 reports the performance, scaling, quality, and resource efficiency of any technology stack that can handle the workload. The benchmark is a Monte Carlo estimation of Heston-based Greeks for a path-dependent, multi-asset option with early exercise. The workload serves as a proxy for price discovery; for market risk calculations such as sensitivity Greeks, profit and loss, and value at risk (VaR); and for counterparty credit risk (CCR) workloads such as credit valuation adjustment (CVA) and the margin calculations that financial institutions perform for trading and risk management.
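As a rough illustration of what "Monte Carlo estimation of Heston-based Greeks" involves, the sketch below prices a single-asset European call under Heston dynamics and estimates delta by bumping the spot price. This is a deliberate simplification and not the STAC-A2 workload, which uses a path-dependent, multi-asset option with early exercise. The function names, the Euler full-truncation scheme, and all parameter values are assumptions chosen for readability.

```python
import math
import random


def heston_call_price(s0, k, r, t, v0, kappa, theta, xi, rho,
                      n_paths=2000, n_steps=50, seed=7):
    """Price a European call under Heston dynamics (Euler, full truncation)."""
    rng = random.Random(seed)  # fixed seed: same random numbers on every call
    dt = t / n_steps
    sqrt_dt = math.sqrt(dt)
    rho_comp = math.sqrt(1.0 - rho * rho)
    payoff_sum = 0.0
    for _ in range(n_paths):
        s, v = s0, v0
        for _ in range(n_steps):
            z1 = rng.gauss(0.0, 1.0)
            z2 = rho * z1 + rho_comp * rng.gauss(0.0, 1.0)  # correlated shock
            v_pos = max(v, 0.0)  # truncate negative variance before using it
            s *= math.exp((r - 0.5 * v_pos) * dt
                          + math.sqrt(v_pos) * sqrt_dt * z1)
            v += kappa * (theta - v_pos) * dt + xi * math.sqrt(v_pos) * sqrt_dt * z2
        payoff_sum += max(s - k, 0.0)
    return math.exp(-r * t) * payoff_sum / n_paths


def bump_delta(s0, bump=0.01, **params):
    """Central-difference delta: reprice with the spot bumped up and down."""
    up = heston_call_price(s0 * (1.0 + bump), **params)
    down = heston_call_price(s0 * (1.0 - bump), **params)
    return (up - down) / (2.0 * s0 * bump)


if __name__ == "__main__":
    params = dict(k=100.0, r=0.02, t=1.0, v0=0.04, kappa=1.5,
                  theta=0.04, xi=0.3, rho=-0.7)
    print("price:", heston_call_price(100.0, **params))
    print("delta:", bump_delta(100.0, **params))
```

Reusing the same seed for the bumped and unbumped runs keeps the random paths identical, so the difference reflects the change in spot rather than sampling noise. Every Greek computed this way needs at least one extra full repricing, which is one reason the workload is so compute-intensive.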

The core of STAC-A2 is a set of algorithms to compute Greeks on a particular type of option using mathematical methods to approximate theoretical values. STAC-A2 speed benchmarks require the implementation to report the elapsed time (tcomplete – tsubmit) for component operations and the end-to-end GREEKS Operation. These tests involve five repeated runs of a given operation without restarting the STAC-A2 Implementation. So, each operation has one “cold” run and four “warm” runs, the latter of which benefit from preallocated memory and other efficiencies. Whether a cold run or warm run is more realistic depends on the use case one has in mind. A cold run simulates a deployment situation in which a risk engine starts in response to a request. A warm run simulates a case in which an engine is already running, with sufficient memory allocated to handle the request.
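The cold and warm distinction can be sketched with a small timing harness. This is not the STAC-A2 harness: `time_runs` and `ToyEngine` are hypothetical names, and the toy engine merely imitates an implementation that allocates memory on its first request and reuses it afterward.

```python
import time


def time_runs(operation, n_runs=5):
    """Time repeated runs in one process; run 0 is cold, the rest are warm."""
    elapsed = []
    for _ in range(n_runs):
        t_submit = time.perf_counter()
        operation()
        t_complete = time.perf_counter()
        elapsed.append(t_complete - t_submit)
    warm = elapsed[1:]
    return {"cold": elapsed[0],
            "warm_mean": sum(warm) / len(warm),
            "all": elapsed}


class ToyEngine:
    """Hypothetical engine that allocates its work buffer on first use only."""

    def __init__(self, size):
        self.size = size
        self.buffer = None

    def run(self):
        if self.buffer is None:       # cold path: pay the allocation cost once
            self.buffer = [0.0] * self.size
        for i in range(self.size):    # warm runs reuse the existing buffer
            self.buffer[i] = i * 0.5
        return sum(self.buffer)


if __name__ == "__main__":
    engine = ToyEngine(200_000)
    result = time_runs(engine.run)
    print(f"cold: {result['cold']:.4f} s, warm mean: {result['warm_mean']:.4f} s")
```

Because the engine object survives between runs, only the first measurement includes setup work, which mirrors the one cold run and four warm runs described above.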

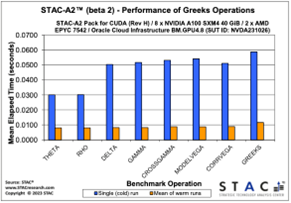

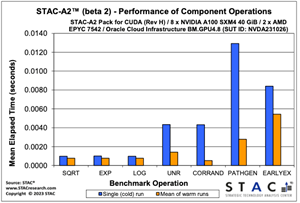

Figures 3 and 4 plot the mean elapsed time for each benchmark to give some insight into the relative time consumption of each operation. Figure 4 covers only the component operations, while Figure 3 covers each individual Greek and the GREEKS operation, which generates all the Greeks. The elapsed time for the GREEKS operation might be less than the sum of the elapsed times for the individual Greeks because certain interim algorithm results can be reused for multiple Greeks.

The STAC-A2 specifications cover over 200 test results, which were produced on OCI HPC systems using an NVIDIA-authored STAC pack on OCI GPU hardware on October 27, 2023. The OCI NVIDIA-based STAC-A2 results include the following highlights:

- Compared to all publicly reported solutions to date, this solution set several records, including the best price performance among all cloud solutions (48,404 options per USD) over one hour, three days, and one year of continuous use (STAC-A2.β2.HPORTFOLIO.PRICE_PERF.[BURST | PERIODIC | CONTINUOUS])

- Compared to the most recently audited, single-server cloud-based solution (INTC221006b), this solution delivered the following results:

  - 3.2-times, 3.2-times, and 2.0-times price-performance advantages (in options per USD) for one hour, three days, and one year of continuous use (STAC-A2.β2.HPORTFOLIO.PRICE_PERF.[BURST | PERIODIC | CONTINUOUS])

  - 14.6-times the throughput (options per second) (STAC-A2.β2.HPORTFOLIO.SPEED)

  - 6.8-times and 7.8-times the speed in cold and warm runs of the baseline Greeks benchmarks (STAC-A2.β2.GREEKS.TIME.COLD|WARM)

  - 9.0-times and 4.3-times the speed in cold and warm runs of the large Greeks benchmark (STAC-A2.β2.GREEKS.10-100K.1260.TIME.COLD|WARM)

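Typically, Monte Carlo studies analyze hundreds of thousands of scenarios, so most of the work runs on an engine that has already started and allocated its memory. As a result, the STAC-A2 warm benchmarks are probably more indicative of Monte Carlo method performance.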

Architectures and configurations

HPC architectures exhibit diverse structures and configurations, typically comprising the following fundamental components:

- Compute: Compute encompasses both central processing units (CPU) and graphics processing units (GPU). The compute element forms the core processing power responsible for executing complex calculations and tasks.

- Data storage: Involving both input and output, data storage in HPC systems can take various forms, including block storage, object storage, distributed file systems, and databases. This component plays a critical role in efficiently managing and accessing vast datasets associated with high-performance computing.

- Orchestration: This component involves a system for orchestrating, scheduling, managing, and monitoring jobs within the HPC environment. It ensures the efficient allocation of resources, coordination of tasks, and monitoring of overall system performance to optimize the processing of computational workloads.

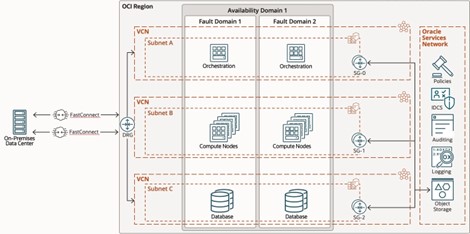

Figure 5 shows a sample architecture of how a customer runs a market risk Monte Carlo simulation on OCI. After each market close, the latest securities data is loaded into the databases. The orchestration software then prompts dozens, hundreds, or thousands of compute nodes, depending on the type of workload, to start simulations. It’s not uncommon to have thousands of cores work on a particular simulation. Each compute node stores results on attached block volumes and sends data back to the on-premises environment.

In this architecture example, the workload can easily be broken down into multiple parallel tasks, and each compute node has its own task to analyze with minimal dependency on other compute nodes. Other workloads can’t be easily split into parallel tasks and require a cluster of compute nodes. NVIDIA GPUs are key for parallel processing, which usually applies when rows of data can be operated on independently.
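The independent-task pattern described above can be sketched in a few lines. The example is a toy under stated assumptions: `simulate_chunk` and `run_grid` are hypothetical names, the payoff and its parameters are invented, and a local thread pool stands in for the orchestration software that would dispatch tasks to separate compute nodes on a real grid.

```python
import math
import random
from concurrent.futures import ThreadPoolExecutor


def simulate_chunk(task):
    """Run one independent block of scenarios; needs no data from other tasks."""
    seed, n_scenarios = task
    rng = random.Random(seed)  # per-task seed keeps the random streams separate
    total = 0.0
    for _ in range(n_scenarios):
        # Toy payoff: call on a lognormal terminal price (assumed parameters).
        s_t = 100.0 * math.exp(-0.02 + 0.2 * rng.gauss(0.0, 1.0))
        total += max(s_t - 100.0, 0.0)
    return total, n_scenarios


def run_grid(total_scenarios, n_tasks, max_workers=4):
    """Split scenarios into tasks, run them concurrently, combine the sums."""
    base, extra = divmod(total_scenarios, n_tasks)
    tasks = [(seed, base + (1 if seed < extra else 0))
             for seed in range(n_tasks)]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(simulate_chunk, tasks))
    payoff_sum = sum(r[0] for r in results)
    count = sum(r[1] for r in results)
    return payoff_sum / count, count


if __name__ == "__main__":
    mean_payoff, count = run_grid(100_000, n_tasks=16)
    print(f"mean payoff over {count} scenarios: {mean_payoff:.3f}")
```

Each task returns only a partial sum and a count, so the final aggregation is cheap and the tasks never exchange data. Workloads that lack this property are the ones that need a tightly coupled cluster.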

| Part # | Description | Quantity | Instances | Unit price (USD) | Monthly cost (USD) |
|---|---|---|---|---|---|
| Compute VM | | | | | |
| B93113 | Compute – Standard – E4 – OCPU (OCPU per hour) | 16 | 6,250 | 0.025 | 1,860,000.00 |
| B93114 | Compute – Standard – E4 – Memory (GB per hour) | 256 | 6,250 | 0.0015 | 1,785,600.00 |
| Boot Volume | | | | | |
| B91961 | Storage – Block Volume – Storage (GB storage capacity per month) | 250 | 6,250 | 0.0255 | 39,843.75 |
| B91962 | Storage – Block Volume – Performance (Perf units/GB/month) | 2,500 | 6,250 | 0.0017 | 26,562.50 |
| Monthly total | | | | | 3,712,006.25 |

Table 1: Compute grid bill of materials (BOM) for 100,000 cores on OCI
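The arithmetic behind Table 1 can be reproduced with a short script. Two inputs are assumptions inferred from the table's totals rather than stated in it: hourly items are billed for a 744-hour month (31 days of 24 hours), and the block storage row reproduces only with a rate of 0.0255 USD per GB per month. Unit prices are treated as USD.

```python
HOURS_PER_MONTH = 744  # assumption: 31 days x 24 hours, inferred from the totals
INSTANCES = 6250

# (part number, quantity per instance, unit price in USD, billed hourly?)
LINE_ITEMS = [
    ("B93113", 16, 0.025, True),      # OCPU per hour
    ("B93114", 256, 0.0015, True),    # memory GB per hour
    ("B91961", 250, 0.0255, False),   # block storage GB per month (assumed rate)
    ("B91962", 2500, 0.0017, False),  # performance units per GB per month
]


def monthly_cost(quantity, unit_price, hourly):
    """Monthly cost in USD for one line item across all instances."""
    hours = HOURS_PER_MONTH if hourly else 1
    return quantity * INSTANCES * unit_price * hours


def bill_of_materials():
    """Return the cost per part number plus the grand total, rounded to cents."""
    costs = {part: round(monthly_cost(qty, price, hourly), 2)
             for part, qty, price, hourly in LINE_ITEMS}
    costs["total"] = round(sum(costs.values()), 2)
    return costs


if __name__ == "__main__":
    for part, cost in bill_of_materials().items():
        print(f"{part:>8}: {cost:>14,.2f} USD")
```

With 16 OCPUs on each of 6,250 instances, the grid comes to 100,000 OCPUs, and compute and memory account for nearly all of the monthly total.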

Conclusion

OCI’s partnership with NVIDIA marks a significant advancement in high-performance computing for the financial sector. This powerful alliance delivers an unmatched blend of technological innovation and cost-efficiency, significantly outperforming competitors in price-performance ratio. Ideal for compute-intensive applications like Monte Carlo methods, OCI provides a future-ready solution that meets and exceeds the evolving computational demands of financial institutions, helping ensure that they stay at the forefront of innovation and efficiency in a rapidly changing digital landscape. To learn more, see: OCI with NVIDIA A100 Core GPUs for HPC and AI sets risk calculations records in financial services.