We are excited to announce the release of on-demand node cycling for Oracle Container Engine for Kubernetes (OKE). This feature simplifies updating managed worker nodes in OKE clusters, a previously time-consuming task that required you to rotate nodes manually or build your own tooling. Now you can easily upgrade nodes to new Kubernetes and host OS versions, and also roll out changes to other node pool properties, such as SSH keys, boot volume size, and custom cloud-init scripts.

Node pools

Within a Kubernetes environment, work is scheduled onto worker nodes: physical or virtual machines that provide the resources needed to run containers. Groups of nodes, called node pools, have a set of common properties that every worker node in the pool inherits. Examples of these properties include:

- Kubernetes version
- Host image
- Compute shape
- Node metadata, including custom cloud-init scripts and public SSH keys

For more information about node pool properties, see Modifying Node Pool and Worker Node Properties.

Clusters can have multiple node pools, each with its own set of properties, to support workloads with different requirements. For example, you might create one pool with GPU shapes for a high-performance computing (HPC) use case and another with Arm-based shapes for better price-performance.

You upgrade the worker nodes in your cluster by modifying the Kubernetes version and host image properties of your node pools. Keeping these two properties current as new versions are released is important for maintaining compliance and receiving the latest feature updates.

The challenge of updating

Updating the properties of a node pool is easy, but the changes apply only to worker nodes created afterward; existing nodes keep their old properties. To bring them up to date, you must either update a fleet of nodes by hand or write your own scripts, which adds recurring operational toil. That toil might look trivial in theory, but many users have told us that in practice it is anything but.
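A minimal Python model makes the gap concrete. It is a sketch, not OKE's implementation: each node takes a snapshot of the pool's properties when it launches, so a later edit to the pool leaves running nodes unchanged. The `NodePool`, `Node`, `NodeProperties`, and `stale_nodes` names and the property fields are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field, replace


@dataclass(frozen=True)
class NodeProperties:
    """Hypothetical subset of the properties a node inherits from its pool."""
    kubernetes_version: str
    image: str
    ssh_public_key: str


@dataclass
class Node:
    name: str
    # Snapshot of the pool's properties at the moment this node launched.
    properties: NodeProperties


@dataclass
class NodePool:
    properties: NodeProperties
    nodes: list[Node] = field(default_factory=list)

    def launch_node(self, name: str) -> Node:
        # New nodes always inherit the pool's *current* properties.
        node = Node(name, self.properties)
        self.nodes.append(node)
        return node

    def update_properties(self, **changes: str) -> None:
        # Editing the pool changes only what future nodes inherit;
        # nodes that are already running keep their snapshot.
        self.properties = replace(self.properties, **changes)


def stale_nodes(pool: NodePool) -> list[str]:
    """Names of nodes whose properties no longer match the pool's."""
    return [n.name for n in pool.nodes if n.properties != pool.properties]


pool = NodePool(NodeProperties("1.24.1", "image-a", "key-1"))
for i in range(3):
    pool.launch_node(f"node-{i}")

pool.update_properties(kubernetes_version="1.25.4")
print(stale_nodes(pool))  # ['node-0', 'node-1', 'node-2']
```

Every node launched before the edit is reported as stale; only nodes launched afterward pick up the new version, which is exactly the drift that replacing nodes has to clean up.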

Imagine you receive a new patched and compliant host OS image monthly, or even more often, and your security team expects you to update all your nodes with it. You have hundreds of nodes across several node pools, and each node already has workloads running on it. First, you need to cordon those nodes to prevent the cluster control plane from scheduling new work onto them. Next, you need to drain the existing workloads off those nodes in a manner that respects your pod disruption budgets and any other Kubernetes mechanisms you use to keep your application available. During that time, you need to ensure that other nodes in your cluster have enough spare capacity for these workloads to be rescheduled onto, either by temporarily scaling up the number of nodes in your cluster or by limiting how many nodes you make unavailable at once. After the workloads have been drained off a node and rescheduled elsewhere, you can delete that node and replace it with one running the new OS version. Now imagine doing this for every node in every cluster in every region every month. Simplifying this activity has a material impact on the lives of platform teams and cluster operators, and with on-demand node cycling, the update process becomes trivial.
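Before draining nodes by hand, you have to confirm that the rest of the pool can absorb their workloads. The sketch below does this as an aggregate check on CPU requests only; it is a deliberate simplification that ignores bin-packing, memory, affinity rules, and whether each pod fits on a particular node. The `NodeUsage` type, the `can_drain` function, and the node figures are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class NodeUsage:
    name: str
    allocatable_mcpu: int  # CPU the node can offer to pods, in millicores
    requested_mcpu: int    # CPU already requested by pods on the node


def can_drain(nodes: list[NodeUsage], to_drain: set[str]) -> bool:
    """Aggregate check: can the remaining nodes absorb the drained pods' CPU requests?

    Simplification: spare capacity is treated as one shared pool, so this does
    not check whether each individual pod fits on an individual node.
    """
    displaced = sum(n.requested_mcpu for n in nodes if n.name in to_drain)
    spare = sum(
        n.allocatable_mcpu - n.requested_mcpu
        for n in nodes
        if n.name not in to_drain
    )
    return displaced <= spare


nodes = [
    NodeUsage("node-0", 4000, 3000),
    NodeUsage("node-1", 4000, 2500),
    NodeUsage("node-2", 4000, 1000),
]
print(can_drain(nodes, {"node-0"}))            # True: 3000 <= 1500 + 3000
print(can_drain(nodes, {"node-0", "node-1"}))  # False: 5500 > 3000
```

Draining one node at a time fits here, but taking two out together does not, which is the trade-off between temporarily adding nodes and limiting how many are unavailable at once.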

On-demand node cycling

With the on-demand node cycling feature, you can now trigger the replacement of all existing nodes in a node pool with nodes running the updated properties. You modify node pool properties the same way you always have, and then cycle the nodes in the pool. The update and cycling operations are decoupled, so you can still apply updates to the nodes in your node pool with your own approach if you prefer.

After you choose to cycle your node pool, OKE automatically cordons, drains, and deletes the existing worker nodes in the pool according to the cordon and drain options specified for the node pool, while respecting any PodDisruptionBudgets or other safeguards you configured to maintain the availability of your application.
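Respecting a PodDisruptionBudget comes down to one rule: an eviction is allowed only while the number of currently healthy pods exceeds the budget's minimum. The sketch below applies that rule to a single application on one node. It is illustrative only, not how OKE or `kubectl drain` is implemented: `Budget` and `drain` are hypothetical, and it assumes that an evicted pod either comes back healthy on another node before the next eviction or does not come back at all. A real drain retries rejected evictions rather than stopping.

```python
from dataclasses import dataclass


@dataclass
class Budget:
    """Simplified PodDisruptionBudget for one application (hypothetical type)."""
    min_available: int
    healthy: int  # pods of this application that are currently running and ready

    def disruptions_allowed(self) -> int:
        # Kubernetes' rule: currently healthy pods minus the desired minimum.
        return max(0, self.healthy - self.min_available)


def drain(pods_on_node: int, budget: Budget, replacement_ready: bool) -> int:
    """Evict one application's pods from a node, one at a time, within budget.

    If replacement_ready is True, each evicted pod is assumed to become healthy
    on another node before the next eviction (a simplification). Returns the
    number of pods the budget prevented from being evicted.
    """
    remaining = pods_on_node
    while remaining > 0 and budget.disruptions_allowed() > 0:
        budget.healthy -= 1          # the evicted pod stops counting as healthy
        remaining -= 1
        if replacement_ready:
            budget.healthy += 1      # its replacement is healthy elsewhere
    return remaining


# Three replicas, two of them on this node, and a budget of minAvailable=2.
print(drain(2, Budget(min_available=2, healthy=3), replacement_ready=False))  # 1
print(drain(2, Budget(min_available=2, healthy=3), replacement_ready=True))   # 0
```

Without healthy replacements, only one pod can leave before the budget blocks further evictions, which is why spare capacity elsewhere in the cluster matters during a drain.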

To ensure that your cluster has room for workloads to move to, you can specify the number of extra nodes to allow temporarily during the update operation (maxSurge) and the number of nodes allowed to be unavailable during the operation (maxUnavailable). maxSurge helps prevent downtime during your upgrade by keeping enough nodes available to avoid a service disruption. maxUnavailable helps keep costs low by avoiding the need to add nodes to the pool temporarily.
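Because both settings accept either an integer or a percentage, a percentage has to be turned into a node count for the current pool size. The sketch below assumes OKE rounds the way Kubernetes Deployments do (maxSurge rounds up, maxUnavailable rounds down); this post does not say how OKE rounds, so treat that as an assumption. The function names are hypothetical, and the sketch simply rejects the case where both values resolve to zero, since no node could then be replaced.

```python
from __future__ import annotations


def resolve(value: int | str, node_count: int, round_up: bool) -> int:
    """Convert an integer or a percentage string such as "25%" into a node count."""
    if isinstance(value, int):
        return value
    if not value.endswith("%"):
        raise ValueError(f"expected an integer or a percentage, got {value!r}")
    scaled = node_count * int(value[:-1])
    # Integer ceiling or floor division avoids floating-point rounding surprises.
    return -(-scaled // 100) if round_up else scaled // 100


def resolve_rollout(
    max_surge: int | str, max_unavailable: int | str, node_count: int
) -> tuple[int, int]:
    # Assumption: rounding follows Kubernetes Deployments, where maxSurge
    # rounds up and maxUnavailable rounds down.
    surge = resolve(max_surge, node_count, round_up=True)
    unavailable = resolve(max_unavailable, node_count, round_up=False)
    if surge == 0 and unavailable == 0:
        # Neither adding nor removing a node would be allowed, so no progress.
        raise ValueError("maxSurge and maxUnavailable cannot both resolve to 0")
    return surge, unavailable


print(resolve_rollout("25%", "25%", 10))  # (3, 2)
print(resolve_rollout(1, 0, 10))          # (1, 0)
```

For a 10-node pool, 25% resolves to 3 surge nodes (2.5 rounded up) and 2 unavailable nodes (2.5 rounded down).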

When new worker nodes are started in the existing node pool, they have the updated properties you specified. At the end of the update operation, the number of nodes in the node pool returns to the number specified by the node pool’s node count property shown in the Console.
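How the two limits bound the pool during a cycle can be shown with a small simulation. It assumes new nodes are ready as soon as they launch and that every drain succeeds instantly, so it models only the node counts, not OKE's actual scheduling. `simulate_cycle` is a hypothetical name; it returns the (old, new) node counts after each launch or deletion.

```python
from __future__ import annotations


def simulate_cycle(
    node_count: int, max_surge: int, max_unavailable: int
) -> list[tuple[int, int]]:
    """Replace every old node with a new one; return (old, new) after each step.

    Simplifications: new nodes are ready the moment they launch, and old nodes
    are deleted instantly (their drain is assumed to succeed).
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = node_count, 0
    history = [(old, new)]
    while old > 0 or new < node_count:
        # Surge: launch new nodes while the total stays within node_count + max_surge.
        while new < node_count and old + new < node_count + max_surge:
            new += 1
            history.append((old, new))
        # Delete old nodes while the total stays at least node_count - max_unavailable.
        while old > 0 and old + new - 1 >= node_count - max_unavailable:
            old -= 1
            history.append((old, new))
    return history


print(simulate_cycle(3, max_surge=1, max_unavailable=0))
# [(3, 0), (3, 1), (2, 1), (2, 2), (1, 2), (1, 3), (0, 3)]
```

With maxSurge = 1 and maxUnavailable = 0, the pool alternates between three and four nodes and ends with three new nodes, matching the configured node count. With maxSurge = 0 and maxUnavailable = 1 instead, it dips to two nodes and never exceeds three, trading availability headroom for cost.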

Node pool cycling in action

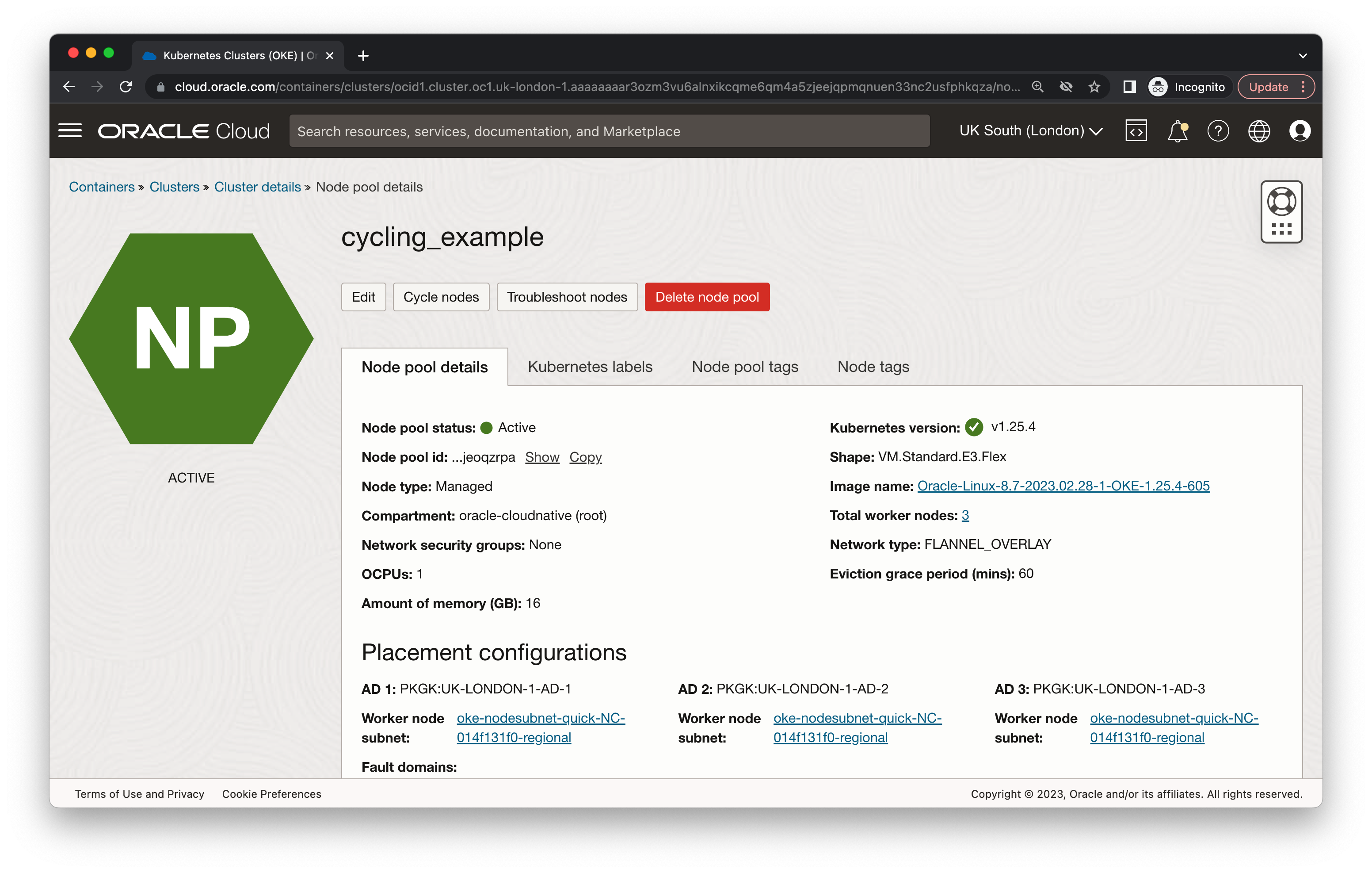

In this example, the node pool details page of a cluster shows a node pool with the Kubernetes version property set to 1.24.1. The page indicates that the node pool should be upgraded to the newest supported Kubernetes version.

The page also lists the nodes in the node pool, all of which are running Kubernetes 1.24.1.

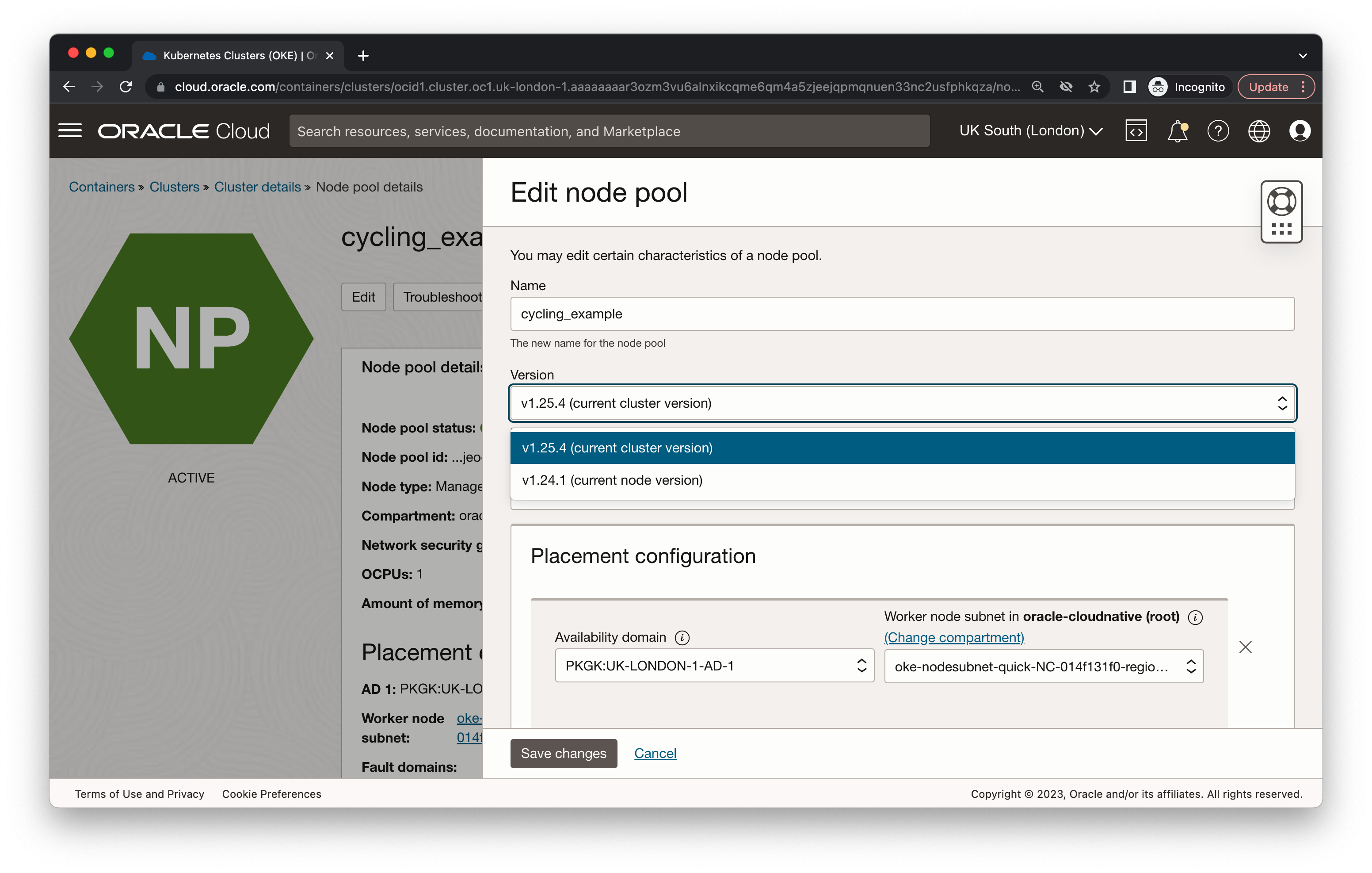

At the top of the page, click Edit to open a panel for configuring the node pool's properties. Update the Kubernetes version property to the newest available version, 1.25.4, and then click Save changes.

The node pool properties refresh with the updated Kubernetes version; when the update operation completes, the new version appears in your node pool details. On the same page, click Cycle nodes to open a dialog that starts the cycling operation.

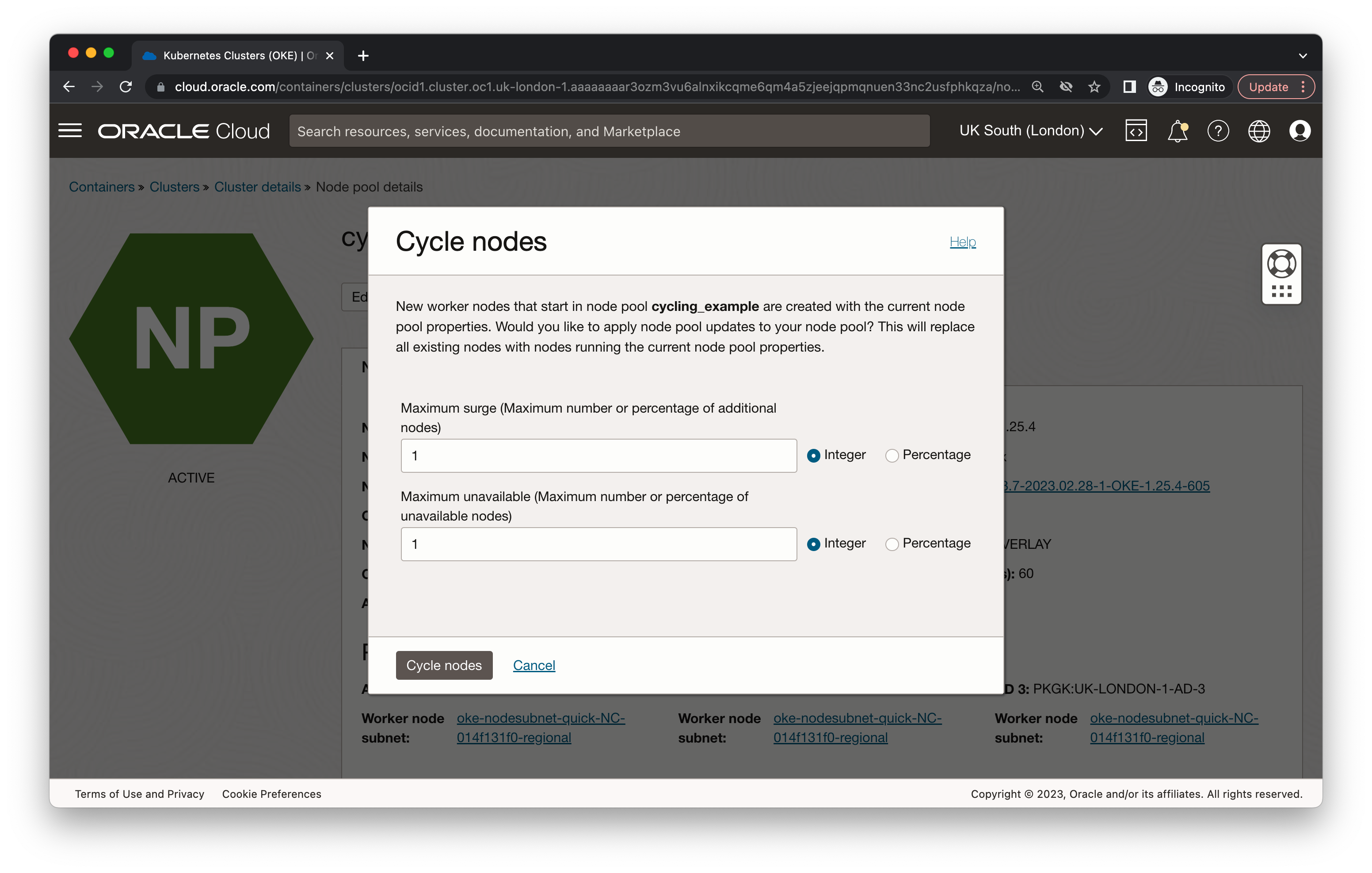

In the Cycle nodes dialog, you can specify integer or percentage values for the maximum number of nodes to add to the pool (maxSurge) and the maximum number of nodes that can be unavailable at the same time (maxUnavailable) during the cycling operation. If you leave both values blank, maxSurge is set to 1 and maxUnavailable is set to 0. Click Cycle nodes to begin the operation.
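The dialog's input rules can be mirrored in a small client-side parser. This is a hypothetical helper, not part of the Console or any OKE tool: a blank field takes the documented default (maxSurge 1, maxUnavailable 0), and anything else must be a whole number or a whole-number percentage. Whether OKE accepts other formats, such as fractional percentages, is not something this sketch tries to settle.

```python
from __future__ import annotations

import re

# Accepts a non-negative whole number ("2") or a whole-number percentage ("25%").
_VALUE = re.compile(r"\d+%?")


def parse_field(raw: str, default: int) -> int | str:
    """Parse one dialog field: blank means the default; otherwise int or percentage."""
    text = raw.strip()
    if not text:
        return default
    if not _VALUE.fullmatch(text):
        raise ValueError(f"not an integer or percentage: {raw!r}")
    # Percentages stay as strings; they are resolved against the pool size later.
    return text if text.endswith("%") else int(text)


def parse_cycling_input(
    max_surge: str = "", max_unavailable: str = ""
) -> tuple[int | str, int | str]:
    # Blank fields fall back to the dialog defaults: maxSurge 1, maxUnavailable 0.
    return parse_field(max_surge, 1), parse_field(max_unavailable, 0)


print(parse_cycling_input())              # (1, 0)
print(parse_cycling_input("25%", ""))     # ('25%', 0)
print(parse_cycling_input(" 2 ", "10%"))  # (2, '10%')
```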

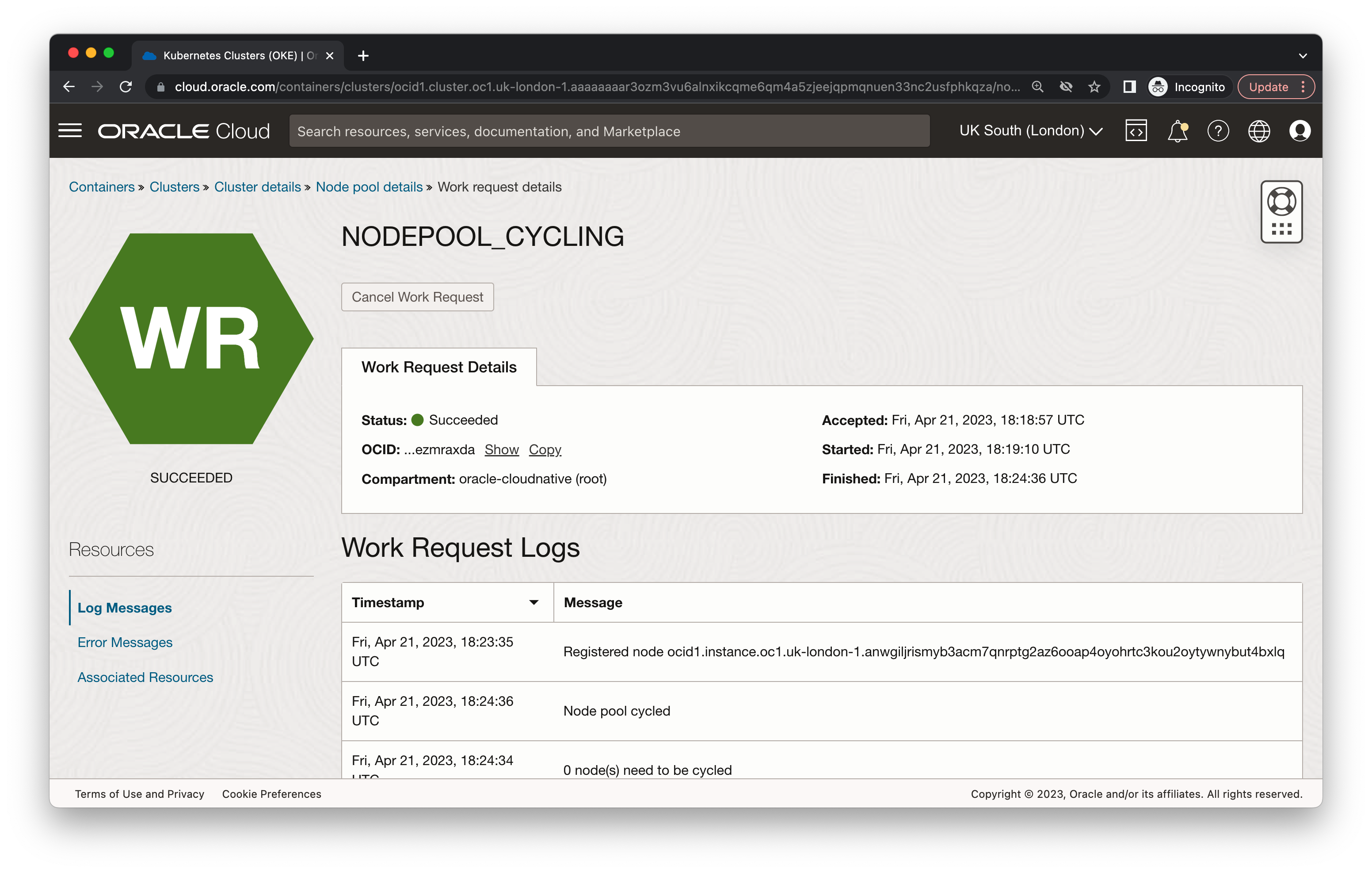

When you trigger the cycling, the status of the node pool changes to UPDATING. To track the work request, scroll to the bottom of the page and click the NODEPOOL_CYCLING operation. The NODEPOOL_UPDATE operation that you created earlier to update the Kubernetes version property also appears there. The NODEPOOL_CYCLING work request opens in a detailed view, where you can follow the operation's progress through its logs.
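If you script around cycling instead of watching the Console, the same tracking reduces to polling the work request until it reaches a final state. The sketch below keeps the polling loop independent of any SDK: `get_status` is a hypothetical caller-supplied function (for example, a wrapper around whatever SDK or CLI call you use to read the work request), and the set of terminal states is an assumption to check against the work request API reference.

```python
import time
from typing import Callable

# Assumed terminal states; confirm the exact set in the work request API reference.
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "CANCELED"}


def wait_for_work_request(
    get_status: Callable[[], str],
    poll_seconds: float = 30.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll get_status() until it reports a terminal state, then return that state.

    get_status is a hypothetical, caller-supplied function. The timeout counts
    requested sleep time only, not time spent inside get_status().
    """
    waited = 0.0
    while True:
        status = get_status()
        if status in TERMINAL_STATES:
            return status
        if waited >= timeout_seconds:
            raise TimeoutError(f"work request still {status} after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds


# Stand-in for a real status lookup; sleep is stubbed out so this runs instantly.
statuses = iter(["ACCEPTED", "IN_PROGRESS", "IN_PROGRESS", "SUCCEEDED"])
print(wait_for_work_request(lambda: next(statuses), sleep=lambda _: None))  # SUCCEEDED
```

Injecting both the status lookup and the sleep function keeps the loop testable without a live cluster.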

You can also watch the node pool itself to see the existing nodes replaced with nodes running the newer Kubernetes version.

When the work request is complete and all the existing nodes have been replaced, its status is marked as Succeeded.

All your nodes are now ready and running the updated Kubernetes version.

When do I cycle nodes?

You might want to update the properties of your node pools and apply those properties to the nodes in the pool for the following reasons:

- New node shape: A team finds that its application requirements have changed and its workloads now need a larger shape to meet performance goals. The cluster administrator updates the node pool shape and uses on-demand node cycling to roll out the change.
- New Kubernetes version: Kubernetes releases new minor versions three times a year and patch versions monthly. Customers who want the new features delivered in a minor version, or the patches delivered in a patch version, need a mechanism to apply those updates to their fleet of nodes.
- Common vulnerabilities and exposures (CVE) remediation: A cluster administrator wants to update the image of their worker nodes to address a CVE found in the existing host OS image. After updating the image property, they can cycle the node pool to replace the existing nodes with instances running the new image.
- Key rotation: Applications are often subject to key rotation requirements. A cluster administrator who needs to update the SSH keys for worker nodes in their cluster can update the node pool property with a new SSH key. After updating the property, they can cycle the node pool to create instances with the new public key on each node.

Conclusion

The on-demand node cycling feature makes it easy to keep your nodes up to date and secure by replacing the existing nodes in a node pool with new nodes running updated properties. It automates updating a fleet of nodes, a previously time-consuming task that required you to develop your own solutions. The same workflow applies when you change other node pool properties, such as SSH keys, boot volume size, or custom cloud-init scripts.

To learn more, see the following resources: