Containerized applications and Kubernetes adoption in production are on the rise, and we certainly see that trend across Oracle Cloud Infrastructure (OCI) customers. One of the main considerations for a Kubernetes cluster administrator is maintaining the availability and security of the cluster, and specifically of the traffic path into the cluster that reaches the relevant microservices. A load balancer like OCI Flexible Load Balancing for Kubernetes services (type: LoadBalancer) is highly available and has been the standard way to expose a service to the internet. The load balancer helps to distribute a set of workloads over a set of resources and provides generic networking services to direct network traffic to multiple worker nodes in the cluster. The flexible load balancer acts as a proxy between the client and the application running in the Oracle Container Engine for Kubernetes (OKE) cluster. Incoming application traffic to the flexible load balancer is distributed across multiple worker nodes.

We recently added support for another type of load balancer in OKE: the flexible network load balancer. The network load balancer is a non-proxy load balancing solution that performs pass-through load balancing of layer 3 and layer 4 workloads: transmission control protocol (TCP), user datagram protocol (UDP), and internet control message protocol (ICMP). This load balancing solution is ideal for latency-sensitive workloads, such as real-time streaming, VoIP, internet of things, and trading platforms. The network load balancer operates at the connection level and balances incoming client connections to healthy backend servers based on IP protocol data.

Advantages of using a network load balancer

The network load balancer is designed to handle tens of millions of requests per second while maintaining high throughput at ultra-low latency, with no effort on your part. It can scale up or down based on client traffic with no minimum or maximum bandwidth configuration requirement and provides the benefits of high availability and low latency. By providing this multiplexing capability, a network load balancer becomes an essential building block of a scalable service.

As a Kubernetes cluster administrator, you’re tasked with configuring a firewall to inspect every packet that enters the OKE cluster and block any malicious traffic. The HTTP X-headers that come from the OCI flexible load balancer aren’t available in the network packets when the mode is set to transmission control protocol (TCP). The network load balancer acts as a bump-in-the-wire layer 3 transparent load balancer that doesn’t modify the packet characteristics and preserves the client source and destination IP header information. With the source and destination IP preserved, you can allow access to the applications that are behind the internet-facing load balancer.
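As a rough illustration of what a preserved source IP makes possible, the following Python sketch checks a client address against an allowlist of CIDR ranges. The ranges and the function name `is_allowed` are hypothetical placeholders, not part of any OCI or Kubernetes API; in practice this kind of rule usually lives in a firewall, security list, or network security group rather than in application code.

```python
import ipaddress

# Hypothetical allowlist of client CIDRs (documentation ranges); replace with your own.
ALLOWED_CIDRS = [
    ipaddress.ip_network(cidr)
    for cidr in ("203.0.113.0/24", "198.51.100.0/24")
]


def is_allowed(client_ip: str) -> bool:
    """Return True if the preserved client source IP falls inside an allowed CIDR."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in network for network in ALLOWED_CIDRS)


print(is_allowed("203.0.113.25"))  # address inside 203.0.113.0/24
print(is_allowed("192.0.2.10"))    # address outside both ranges
```

A check like this is only meaningful when the address it sees is the real client address, which is the property that the pass-through network load balancer provides.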

The OCI network load balancer is a non-proxy solution that performs pass-through load balancing of OSI layer 3 and layer 4 (TCP/UDP/ICMP) workloads and preserves client header information. It operates at the connection level and balances incoming client connections to healthy OKE worker nodes. The load balancing policy uses a hashing algorithm to distribute incoming traffic. The default distribution policy is based on a 5-tuple hash of the source IP address and port, the destination IP address and port, and the IP protocol. This 5-tuple hash policy provides session affinity within a given TCP or UDP session, where packets in the same session are directed to the same backend server behind the network load balancer. You can use a 3-tuple (source IP, destination IP, and protocol) or 2-tuple (source and destination IPs) load balancing policy to provide session affinity beyond the lifetime of a given session.
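To make the difference between the tuple policies concrete, here is a minimal Python sketch of hash-based backend selection. It is not the network load balancer's actual algorithm: SHA-256 is a stand-in for whatever hash the service uses, and the names `Packet`, `hash_key`, and `pick_backend` are invented for illustration.

```python
import hashlib
from typing import NamedTuple, Sequence


class Packet(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str


def hash_key(pkt: Packet, policy: int) -> tuple:
    """Select the packet fields that feed the hash for a 5-, 3-, or 2-tuple policy."""
    if policy == 5:
        return (pkt.src_ip, pkt.src_port, pkt.dst_ip, pkt.dst_port, pkt.protocol)
    if policy == 3:
        return (pkt.src_ip, pkt.dst_ip, pkt.protocol)
    if policy == 2:
        return (pkt.src_ip, pkt.dst_ip)
    raise ValueError("policy must be 2, 3, or 5")


def pick_backend(pkt: Packet, backends: Sequence[str], policy: int = 5) -> str:
    """Map a packet to a backend by hashing the fields chosen by the policy."""
    digest = hashlib.sha256(repr(hash_key(pkt, policy)).encode()).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


backends = ["worker-1", "worker-2", "worker-3"]  # hypothetical worker nodes
# Two TCP sessions from the same client, differing only in source port.
first = Packet("203.0.113.25", 40001, "192.0.2.10", 80, "TCP")
second = Packet("203.0.113.25", 40002, "192.0.2.10", 80, "TCP")

# Under the 3-tuple policy the source port is ignored, so both sessions
# always map to the same backend.
print(pick_backend(first, backends, 3) == pick_backend(second, backends, 3))
```

Under the 5-tuple policy the source port is part of the key, so the two sessions can land on different backends, while every packet within one session still hashes to the same backend.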

Creating a network load balancer service

For applications where you want to preserve a client IP address, you can add the annotation oci.oraclecloud.com/load-balancer-type: "nlb" to your service definition.

To create a service that’s backed by a network load balancer, specify annotations in the metadata section of the service manifest:

    apiVersion: v1
    kind: Service
    metadata:
      name: my-nginx-svc
      labels:
        app: nginx
      annotations:
        oci.oraclecloud.com/load-balancer-type: "nlb"
    spec:
      type: LoadBalancer
      ports:
      - port: 80
      selector:
        app: nginx

As a security best practice, Oracle recommends specifying the network security rules for the load balancer as part of a network security group (NSG) or the subnet security list.

To associate a network security group to the network load balancer, add the following annotation to your service definition:

    oci.oraclecloud.com/oci-network-security-groups: "ocid1.networksecuritygroup.oc1.xxxx"

Configuring external traffic policy

The Kubernetes service field externalTrafficPolicy determines whether the client IP is preserved. Let’s review the two modes, or architectures, in which you can use the network load balancer and the benefits of each.

The two modes for the external traffic policy are Cluster (the default) and Local. Cluster mode obscures the client source IP and can cause a second hop to another node, but it has good overall load-spreading. Local mode preserves the source IP address in the header of the packet all the way to the application pod.

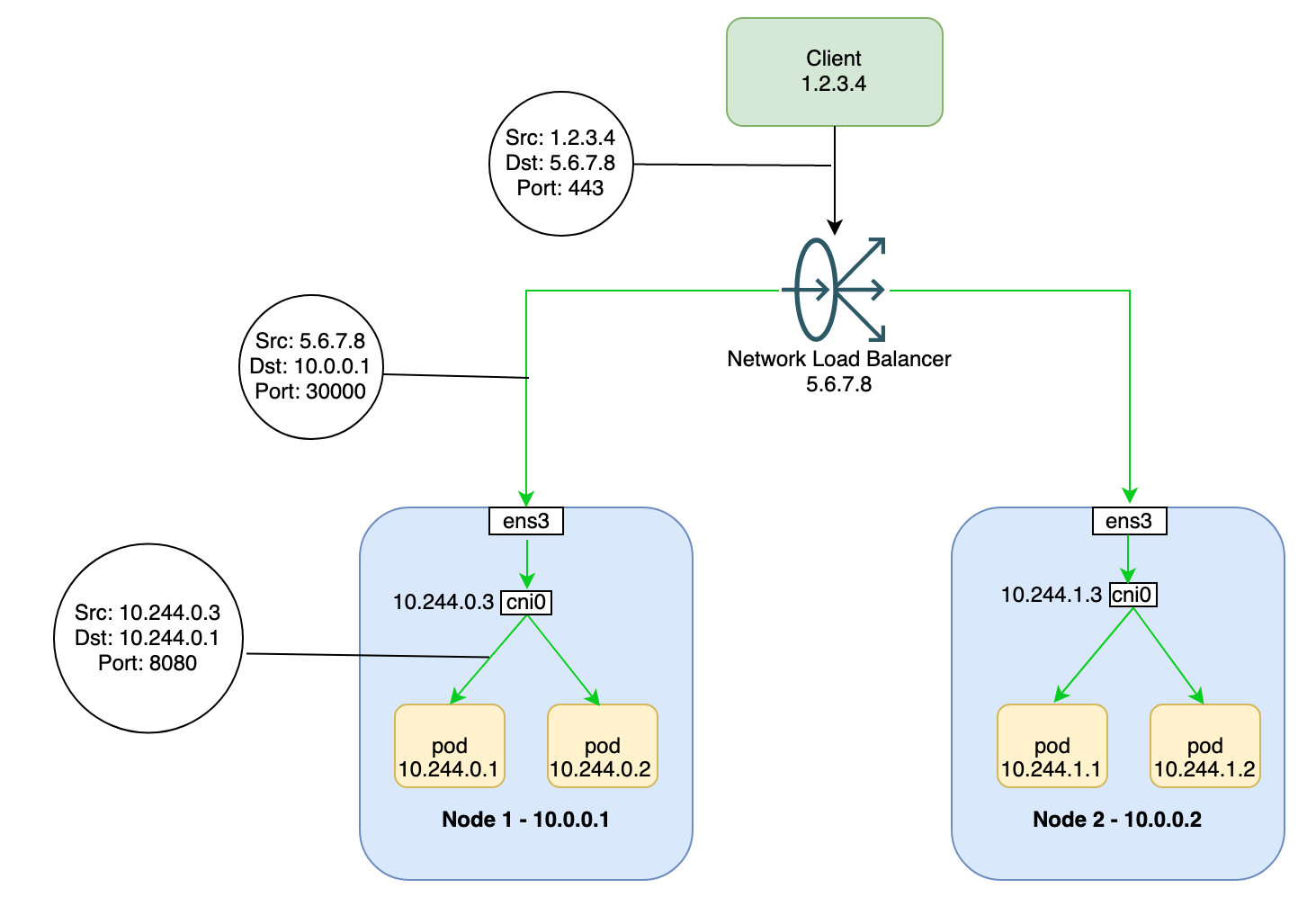

externalTrafficPolicy: Cluster

Cluster is the default external traffic policy for Kubernetes services. The assumption is that you always want to route traffic to all pods running a service with equal distribution and that preserving a client IP address isn’t required. It operates by opening a random port from the range 30000–32767 on all the worker nodes of the cluster, regardless of whether a pod on that node can handle traffic for that service. Kubernetes forwards any traffic sent to any worker on that port to one of the pods of that service.

This mode often results in less churn of backends in the cluster because it doesn’t depend on the state of the pods in the cluster. Any request can be sent to any node, and Kubernetes handles getting it to the right place. It results in good load-spreading from the network load balancer across worker nodes.

When traffic reaches a Kubernetes node, the node handles it the same way, regardless of the type of load balancer. The network load balancer isn’t aware of which nodes in the cluster are running pods for its service. On a regional cluster, the load is spread across all nodes in all availability domains for the cluster’s region.

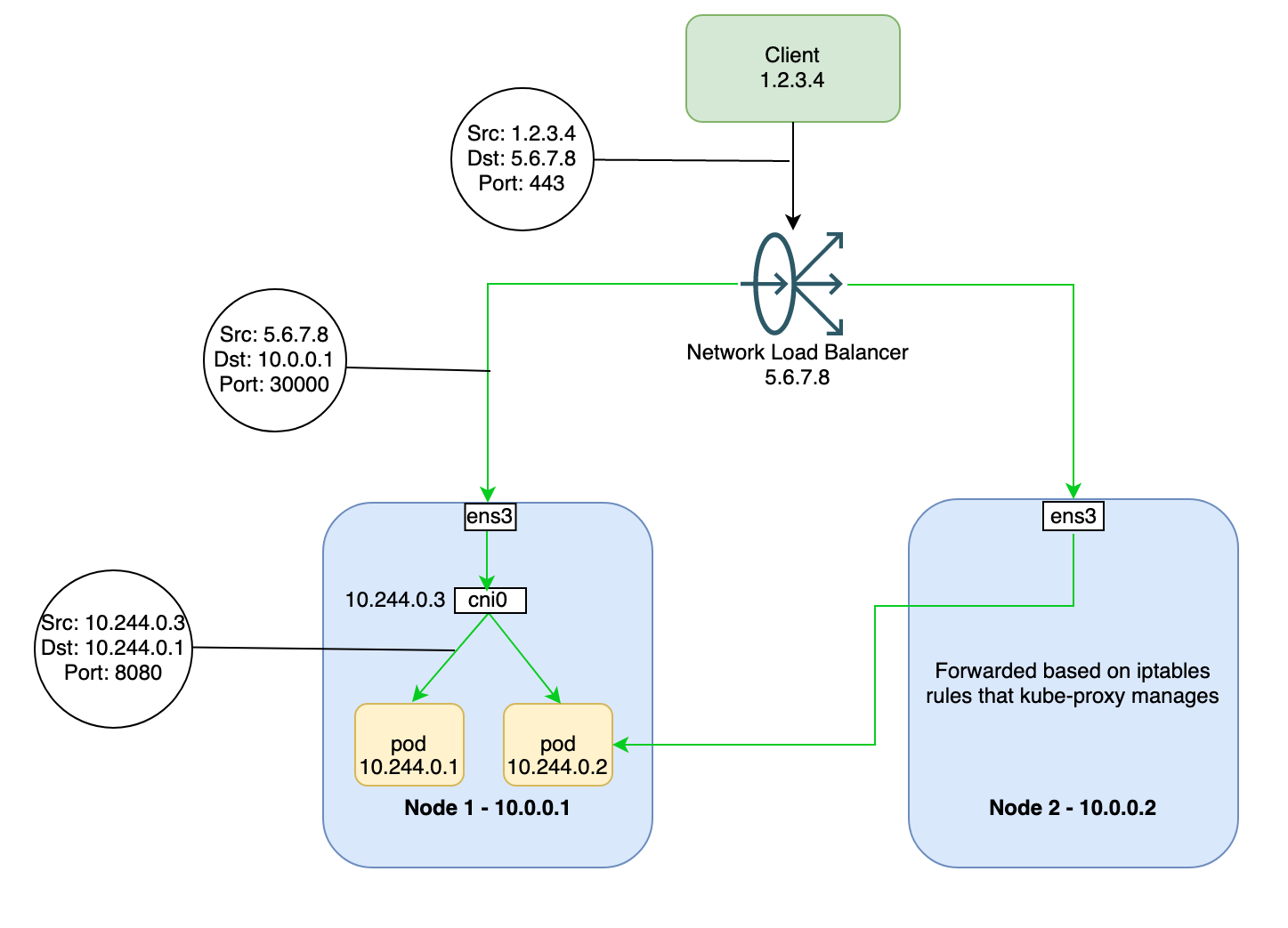

When traffic is routed to a node, the node routes the traffic to a pod, which can run on the same node or a different node. The node forwards the traffic to a randomly chosen pod by using the iptables rules that kube-proxy manages on the node.

In this mode, traffic is load-balanced across all nodes in the cluster, even nodes not running a relevant pod. Because every node passes the network load balancer’s backend health checks, a request might hit a node that doesn’t have the right pod on it and then need to be sent to another node, which results in an extra network hop.
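A small Python calculation can show how often that extra hop happens. This is a simplified model, not a measurement: it assumes the network load balancer spreads requests evenly across nodes and that each node picks a pod uniformly at random from all pods of the service. The node names, pod counts, and the function `extra_hop_fraction` are hypothetical.

```python
from fractions import Fraction


def extra_hop_fraction(pods_per_node: dict) -> Fraction:
    """Fraction of requests forwarded to a pod on a different node (Cluster mode model)."""
    total_pods = sum(pods_per_node.values())
    node_count = len(pods_per_node)
    fraction = Fraction(0)
    for local_pods in pods_per_node.values():
        # Chance of landing on this node, times the chance that the randomly
        # chosen pod is not one of the pods running locally.
        fraction += Fraction(1, node_count) * (1 - Fraction(local_pods, total_pods))
    return fraction


# Hypothetical placement: three worker nodes, three pods, one node without a pod.
placement = {"node-a": 2, "node-b": 1, "node-c": 0}
print(extra_hop_fraction(placement))  # 2/3
```

Under these assumptions, two out of three requests take the second hop, even though each pod still receives an equal share of the traffic.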

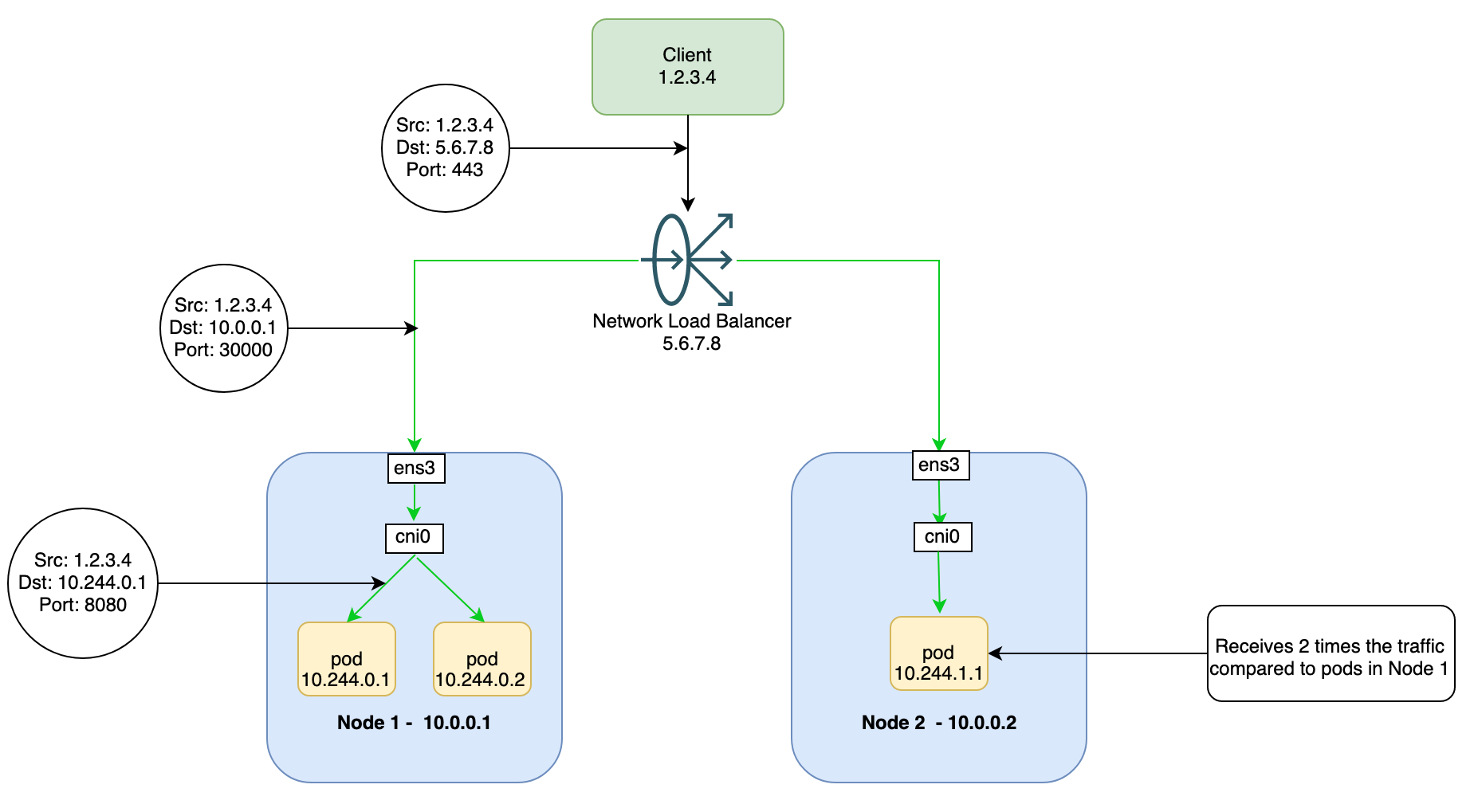

externalTrafficPolicy: Local

If you want to preserve the client IP address all the way to the application pod, specify externalTrafficPolicy: Local in the service specification. With this external traffic policy, the proxy rules are configured on a specific NodePort (30000–32767) only for pods that exist on the same node (local), instead of for every pod of a service regardless of where it was placed. This mode prevents the extra network hop of Cluster mode, but your traffic can become imbalanced if it isn’t configured properly.

With the network load balancer, we need to add every Kubernetes node as a backend, but we can depend on the network load balancer’s health checking capabilities to send traffic only to backends where the corresponding NodePort is responsive and that have the right pod running on them.

With this architecture, any ingress traffic must land on nodes that are running the corresponding pods for that service, or the traffic is dropped. Because the network load balancer isn’t aware of the pod placement in your Kubernetes cluster, it assumes that each backend (Kubernetes node) receives an equal distribution of traffic. As shown in the diagram, this assumption can lead to some pods of an application receiving more traffic than other pods.
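The imbalance is easy to quantify with a short Python sketch. It is a simplified model that assumes the network load balancer sends an equal share of traffic to every node that passes the health check (that is, every node with at least one pod) and that each node splits its share evenly among its local pods. The node names, pod counts, and the function `per_pod_share` are hypothetical.

```python
from fractions import Fraction


def per_pod_share(pods_per_node: dict) -> dict:
    """Share of total traffic received by each pod on each healthy node (Local mode model)."""
    # Nodes without a local pod fail the health check and receive no traffic.
    healthy = {node: pods for node, pods in pods_per_node.items() if pods > 0}
    if not healthy:
        raise ValueError("no node is running a pod for this service")
    node_share = Fraction(1, len(healthy))
    return {node: node_share / pods for node, pods in healthy.items()}


# Hypothetical placement: two pods on node-a, one on node-b, none on node-c.
placement = {"node-a": 2, "node-b": 1, "node-c": 0}
for node, share in per_pod_share(placement).items():
    print(node, share)
```

In this placement, each pod on node-a receives 1/4 of the traffic while the single pod on node-b receives 1/2. Spreading pods evenly across nodes, for example with pod anti-affinity or topology spread constraints, is one way to reduce the skew.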

To preserve a client IP, the worker nodes must accept traffic from the internet or the CIDRs where clients reside. Ensure that the ingress security rules for the worker nodes have a rule to allow traffic from the appropriate CIDRs.

    ...
    spec:
      type: LoadBalancer
      externalTrafficPolicy: Local
    ...

Exposing user datagram protocol applications in OKE

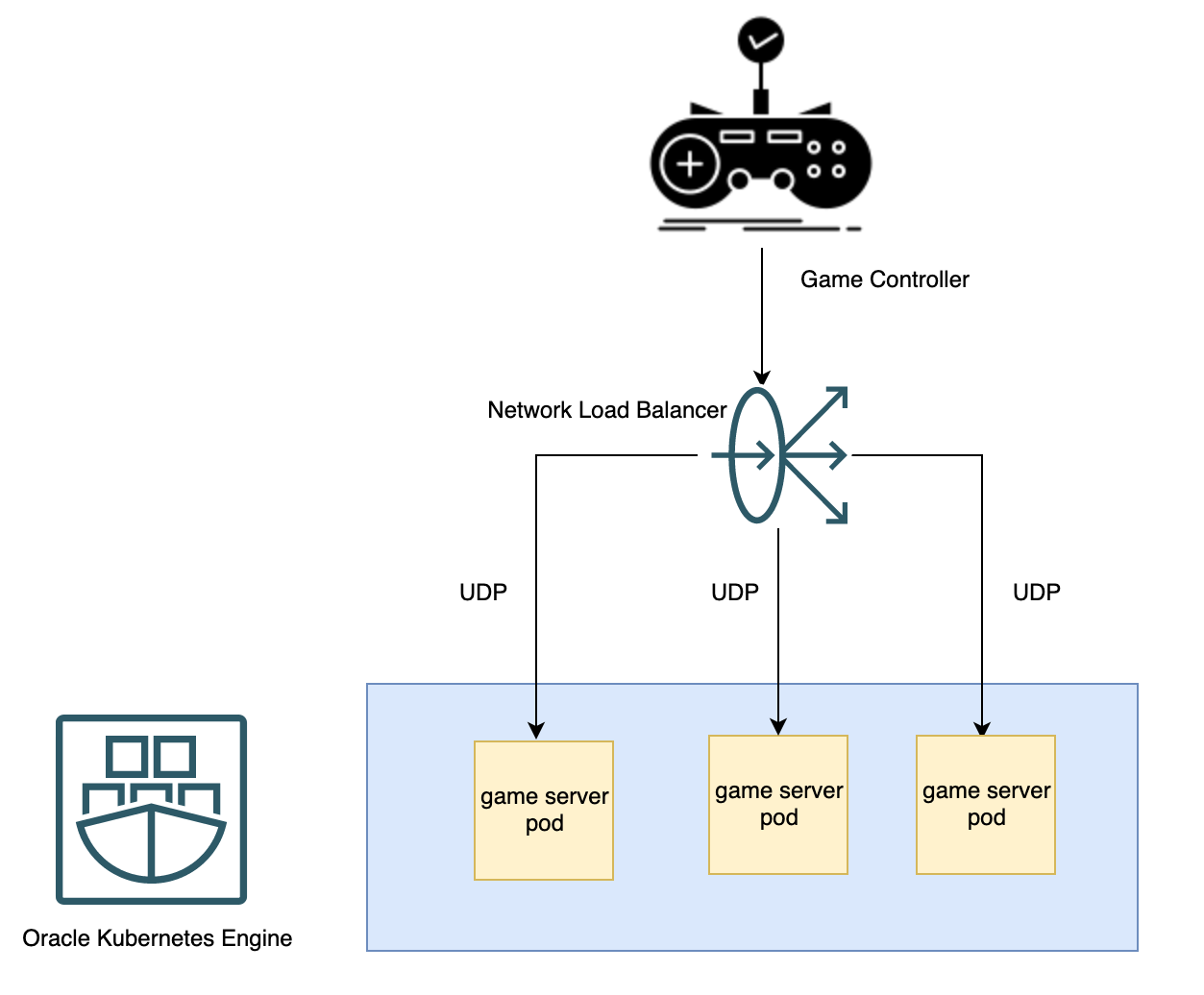

You often need to run non-HTTP based services inside Kubernetes. User datagram protocol (UDP) is a low-latency protocol that’s ideal for workloads such as real-time streaming, online gaming, and internet of things (IoT). You no longer need to maintain a fleet of proxy servers to ingest UDP traffic, and you can now use the same load balancer for both TCP and UDP traffic. The OCI flexible network load balancer enables you to deploy and scale any TCP or UDP application in Oracle Container Engine for Kubernetes. Together, UDP, Kubernetes, and network load balancers give customers the ability to improve their agility while also meeting their low latency requirements.

For example, a gaming enterprise wants to deploy a UDP-based game server using a network load balancer. The architecture used to deploy is composed of the following components:

- An Oracle Container Engine for Kubernetes (OKE) cluster and a node pool of Oracle Linux instances powered by Arm-based Oracle processors.
- A UDP game server deployed on OKE and exposed through a service backed by a network load balancer.
- A UDP health probe included in the game server and used as a liveness probe.
- A network load balancer provisioned with a UDP listener associated with a single backend set. This backend set is configured to register its backends and perform health checks on them using the UDP protocol.
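The UDP health probe in this architecture could look roughly like the following Python sketch: a responder that answers a known request payload with a known response payload, plus a client-side check. The payloads `b"ping"` and `b"pong"` and the function names are hypothetical; a real deployment would use whatever request and response data the backend set's health check is configured with.

```python
import socket
import threading

# Hypothetical probe payloads; match them to your health check configuration.
PROBE_REQUEST = b"ping"
PROBE_RESPONSE = b"pong"


def serve_probe(sock: socket.socket, stop: threading.Event) -> None:
    """Answer UDP health probes on an already bound socket until stop is set."""
    sock.settimeout(0.2)
    while not stop.is_set():
        try:
            data, addr = sock.recvfrom(1024)
        except socket.timeout:
            continue
        if data == PROBE_REQUEST:
            sock.sendto(PROBE_RESPONSE, addr)


def check_probe(host: str, port: int, timeout: float = 1.0) -> bool:
    """Send one probe and report whether the expected response came back."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as client:
        client.settimeout(timeout)
        client.sendto(PROBE_REQUEST, (host, port))
        try:
            data, _ = client.recvfrom(1024)
        except socket.timeout:
            return False
        return data == PROBE_RESPONSE


def run_demo() -> bool:
    """Start a responder on a free local port, probe it once, then shut it down."""
    server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    server.bind(("127.0.0.1", 0))
    port = server.getsockname()[1]
    stop = threading.Event()
    worker = threading.Thread(target=serve_probe, args=(server, stop), daemon=True)
    worker.start()
    try:
        return check_probe("127.0.0.1", port)
    finally:
        stop.set()
        worker.join()
        server.close()


print(run_demo())
```

Because UDP is connectionless, a probe like this is the only signal that the game server is actually listening, which is why the health check relies on a request and response exchange rather than a connection attempt.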

When OKE provisions a network load balancer for a Kubernetes service of type LoadBalancer, you can define the type of traffic accepted by the listener by specifying the protocol on which the listener accepts connection requests. If you don’t explicitly specify a protocol, TCP is used as the default value.

    spec:
      type: LoadBalancer
      ports:
      - port: 80
        protocol: UDP

Conclusion

Load balancing is essential to keeping your mission-critical Kubernetes clusters operational and secure at scale. While many load balancers like OCI flexible load balancers and flexible network load balancers are effective at managing many of these risks for you, configuring your Kubernetes environment correctly is important to best take advantage of the features that these load balancers offer.

For more information, see Defining Kubernetes Services of Type LoadBalancer and OCI Network Load Balancing in the OCI documentation. We want you to experience these new features and all the enterprise-grade capabilities that Oracle Cloud Infrastructure offers. Create your Always Free account today and try the load balancer options with our US$300 free credit.