The Oracle Cloud Infrastructure (OCI) Network Load Balancer service has gained widespread adoption since its introduction. A network load balancer is a critical component in network architecture: it distributes incoming client traffic across multiple backend servers or resources, optimizing their capacity utilization and ensuring the reliability and high availability of the backend service.

Since its inception, OCI Network Load Balancer has supported backends in its local region. The backends can be in the same virtual cloud network (VCN) as the network load balancer or in a different VCN connected to the network load balancer's VCN through local peering gateways (LPGs). Today, we’re excited to announce that we have expanded support for network load balancer backends to anywhere the network load balancer can reach through an OCI dynamic routing gateway (DRG), including the following scenarios:

- Cross-VCN connectivity: A network load balancer and its backends in different VCNs can now connect through a DRG, eliminating the need for LPGs in network designs that use OCI network load balancers.

- Cross-region support: Network load balancers can now have backends in remote regions that are reachable through the DRG attached to the network load balancer's VCN and a remote peering connection (RPC).

- On-premises backends: Network load balancers can now have backends in an on-premises network connected to a DRG through FastConnect virtual circuits or IPsec tunnels.

These enhancements effectively allow network load balancer backends to be deployed in any network behind a DRG. There is no practical limit on how far away the backends can be, as long as the network load balancer can reach them through a DRG. This added flexibility simplifies customer network designs with OCI network load balancers and extends their use to more diverse scenarios.

The following sections of this blog post explain how to add network load balancer backends that take advantage of these enhancements and describe the newly enabled design scenarios, with examples that showcase the essential network configurations.

Adding network load balancer backends with IP addresses

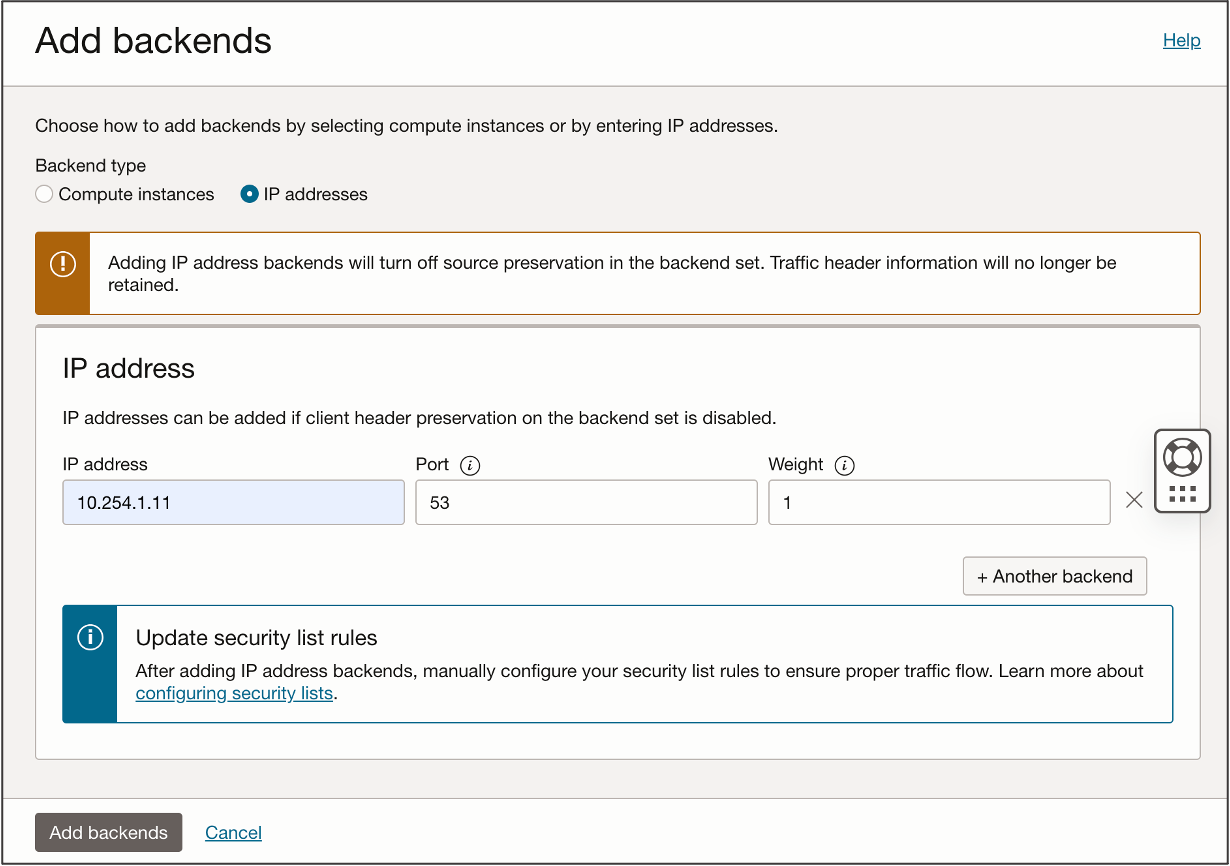

When customers add a new backend to a network load balancer backend set, they can either select an OCI Compute instance directly or specify its IP address. For a remote backend that the network load balancer reaches through a DRG, such as an on-premises server or an instance in a remote VCN, only the second method is available: the backend must be added by its IP address.

The following sample Console screen shows how to add a remote backend to a network load balancer backend set. In this example, the backend type is set to IP address, and the IP address of the remote instance is entered.

Source Preservation must be disabled on a backend set before you can add an IP-address-based backend to it. When you add such a backend in the Oracle Cloud Console, you see a reminder message similar to the example. If the backend set has Source Preservation enabled, the IP address backend type is unavailable, and you must first disable Source Preservation for the backend set. With Source Preservation disabled, the network load balancer replaces the client's source IP address with its own private IP address before forwarding the traffic to its backends. As a result, backends see the network load balancer's private IP address as the source of the traffic. If your design requires the backends to know the original client source IP address, contact your Oracle account team for alternative solutions.
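To make the two rules above concrete, here is a minimal in-memory sketch. It is not the OCI SDK or API: `BackendSet`, `add_ip_backend`, and `source_ip_seen_by_backend` are hypothetical names, and the addresses are made up. It only encodes the behavior described in the previous paragraph: IP-address-based backends are rejected while Source Preservation is on, and with it off the backend sees the network load balancer's private IP as the source.

```python
from dataclasses import dataclass, field


@dataclass
class Backend:
    ip_address: str
    port: int


@dataclass
class BackendSet:
    """Hypothetical in-memory model of a backend set (not the OCI SDK)."""
    name: str
    preserve_source: bool
    backends: list[Backend] = field(default_factory=list)

    def add_ip_backend(self, ip_address: str, port: int) -> Backend:
        # IP-address-based backends are rejected while Source Preservation is on.
        if self.preserve_source:
            raise ValueError(
                f"disable Source Preservation on backend set {self.name!r} "
                "before adding IP-address-based backends"
            )
        backend = Backend(ip_address, port)
        self.backends.append(backend)
        return backend


def source_ip_seen_by_backend(client_ip: str, nlb_private_ip: str,
                              preserve_source: bool) -> str:
    # With Source Preservation disabled, the network load balancer rewrites the
    # client source IP to its own private IP before forwarding.
    return client_ip if preserve_source else nlb_private_ip


# Example with hypothetical addresses: a remote backend reached through a DRG.
backend_set = BackendSet("remote-backends", preserve_source=False)
backend_set.add_ip_backend("10.20.1.10", 80)
print(source_ip_seen_by_backend("203.0.113.7", "10.0.1.5", preserve_source=False))
```

Running it prints `10.0.1.5`, the network load balancer's (hypothetical) private IP, which is what the backend would log as the client.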

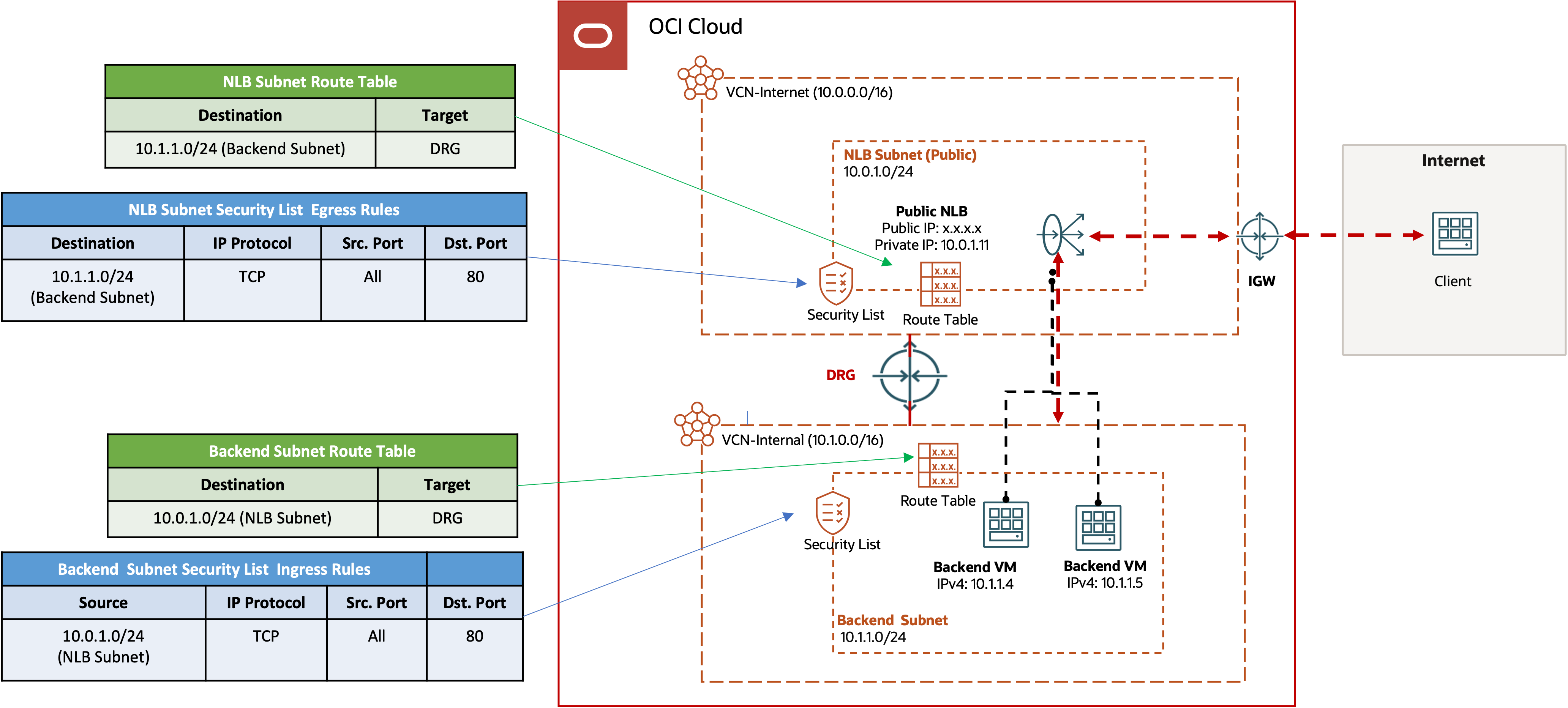

OCI network load balancers with backends in a different VCN via DRG

Many customers strategically choose to deploy OCI network load balancers and their backends in separate VCNs. For example, they can deploy public network load balancers in a centralized internet-facing VCN while keeping the backend instances in internal-only VCNs. Previously, this design required connecting the network load balancer and backend VCNs through LPGs, which prevented customers from taking advantage of the simplified inter-VCN connectivity that a DRG provides. As the virtual network scales with more VCNs, network load balancers, and backend sets, a local peering design can become complex or even a bottleneck for further scaling. The latest enhancement removes this obstacle, allowing customers to streamline their network design by using a DRG for all inter-VCN connectivity, including connectivity between network load balancer VCNs and backend VCNs.

The following diagram illustrates a public network load balancer setup with the backends residing in a separate internal VCN connected by a DRG. It highlights essential routing and security configurations on both network load balancer and backend sides, crucial for IP routing and permitting health check traffic between them.

For routing, the network load balancer subnet’s VCN route table must have a route to the backend subnet (or its VCN) with the DRG as the next-hop target. Conversely, the backend subnet’s route table needs a route to the network load balancer subnet (or its VCN), again with the DRG as the next-hop target. On the DRG, both VCN attachments can use the autogenerated DRG route table for VCN attachments, which enables dynamic routing between them.
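The symmetric routing requirement can be checked with a small longest-prefix-match lookup. This is a sketch under stated assumptions: the CIDRs, the `"DRG"` and `"InternetGateway"` target labels, and the default route for the public subnet are all hypothetical, and the lookup only mimics how a VCN route table picks the most specific matching rule.

```python
import ipaddress
from typing import Optional

# Hypothetical CIDRs for illustration only.
NLB_SUBNET = "10.0.1.0/24"        # network load balancer subnet in the hub VCN
BACKEND_VCN = "10.20.0.0/16"      # internal-only backend VCN

# VCN route tables: destination CIDR -> next-hop target.
nlb_subnet_route_table = {
    BACKEND_VCN: "DRG",
    "0.0.0.0/0": "InternetGateway",  # assumed default route for a public subnet
}
backend_subnet_route_table = {
    NLB_SUBNET: "DRG",
}


def next_hop(route_table: dict[str, str], dest_ip: str) -> Optional[str]:
    """Return the target of the most specific (longest-prefix) matching route."""
    addr = ipaddress.ip_address(dest_ip)
    best = None
    for cidr, target in route_table.items():
        network = ipaddress.ip_network(cidr)
        if addr in network and (best is None or network.prefixlen > best[0].prefixlen):
            best = (network, target)
    return best[1] if best else None


print(next_hop(nlb_subnet_route_table, "10.20.1.10"))    # DRG
print(next_hop(backend_subnet_route_table, "10.0.1.5"))  # DRG
```

Both lookups resolve to the DRG, which is the point of the paragraph above: each side needs its own route toward the other, or health check responses have no path back.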

Regarding security, because the network load balancer initiates the health check by sending health check requests to the backends, the network load balancer subnet needs an explicit egress rule in its security list to permit health check requests to the backends. Alternatively, if you apply security policies with a network security group (NSG) on the network load balancer instead of the subnet security list, add the same egress rule to the NSG. If the egress rule is stateful, no explicit ingress rule is needed to permit the health check responses from the backends.

Similarly, the security list of the backend subnet or the NSG of the backends must have an explicit ingress rule to permit health check requests from the network load balancer. If this ingress rule is stateful, no explicit egress rule is needed to permit the health check responses to return to the network load balancer. In this example, the health check runs on TCP port 80. So, the network load balancer subnet has a stateful egress rule that permits traffic to the backend subnet on TCP destination port 80, and the backend subnet has a stateful ingress rule that permits traffic from the network load balancer subnet to TCP destination port 80. Both the network load balancer and backend subnets also need appropriate security policies to permit the production traffic that the network load balancer distributes to the backends.
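The following sketch models the two stateful rules from this example. `Rule`, `matching_rule`, and `health_check_permitted_statefully` are hypothetical helpers, not an OCI API, and the subnet CIDRs are made up. The model deliberately covers only the stateful case: it returns `True` when both sides permit the request and both matching rules are stateful, so the responses need no explicit return rules.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    """Hypothetical security rule. cidr is the remote side: the source for
    ingress rules and the destination for egress rules."""
    direction: str   # "ingress" or "egress"
    cidr: str
    protocol: str    # "tcp" or "udp"
    dst_port: int
    stateful: bool = True


def matching_rule(rules, direction: str, remote_ip: str,
                  protocol: str, dst_port: int) -> Optional[Rule]:
    addr = ipaddress.ip_address(remote_ip)
    for rule in rules:
        if (rule.direction == direction and rule.protocol == protocol
                and rule.dst_port == dst_port
                and addr in ipaddress.ip_network(rule.cidr)):
            return rule
    return None


def health_check_permitted_statefully(nlb_rules, backend_rules, nlb_ip: str,
                                      backend_ip: str, port: int) -> bool:
    """True if the TCP health check request is permitted on both sides and both
    matching rules are stateful, so responses need no explicit return rules.
    Explicit stateless return rules are not modeled here."""
    egress = matching_rule(nlb_rules, "egress", backend_ip, "tcp", port)
    ingress = matching_rule(backend_rules, "ingress", nlb_ip, "tcp", port)
    return (egress is not None and ingress is not None
            and egress.stateful and ingress.stateful)


# Rules from the example, with hypothetical subnet CIDRs.
nlb_subnet_rules = [Rule("egress", "10.20.1.0/24", "tcp", 80)]
backend_subnet_rules = [Rule("ingress", "10.0.1.0/24", "tcp", 80)]
print(health_check_permitted_statefully(
    nlb_subnet_rules, backend_subnet_rules, "10.0.1.5", "10.20.1.10", 80))
```

Dropping either rule, or marking one of them `stateful=False`, makes the check return `False`, mirroring why both the egress and the ingress side must be configured.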

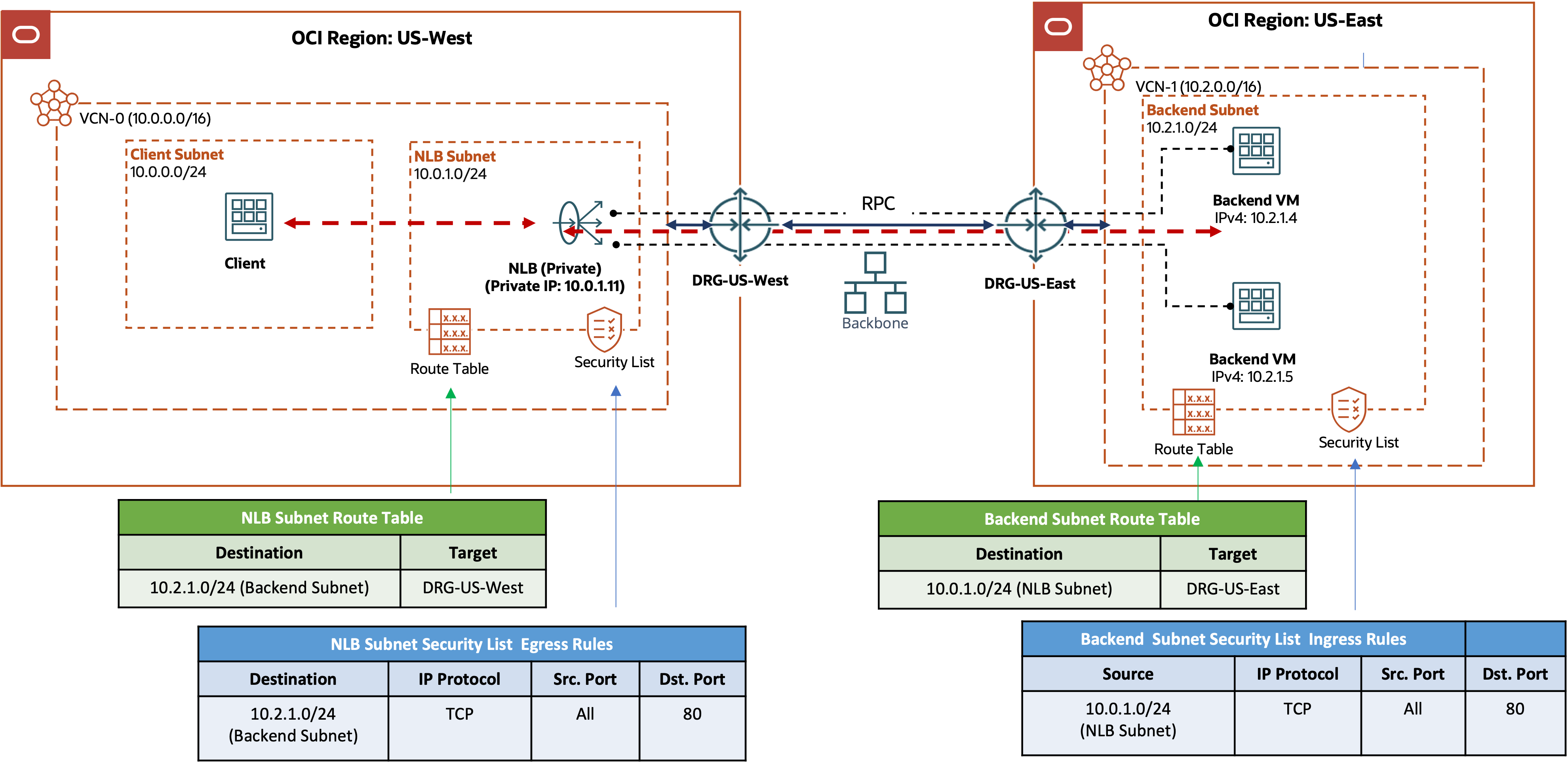

OCI network load balancers with backends in a remote region

OCI network load balancers now support backends deployed in a remote region. This flexibility can unlock some creative network designs. For instance, customers can deploy a subset of the backends in a remote region to cover local instance or network failures.

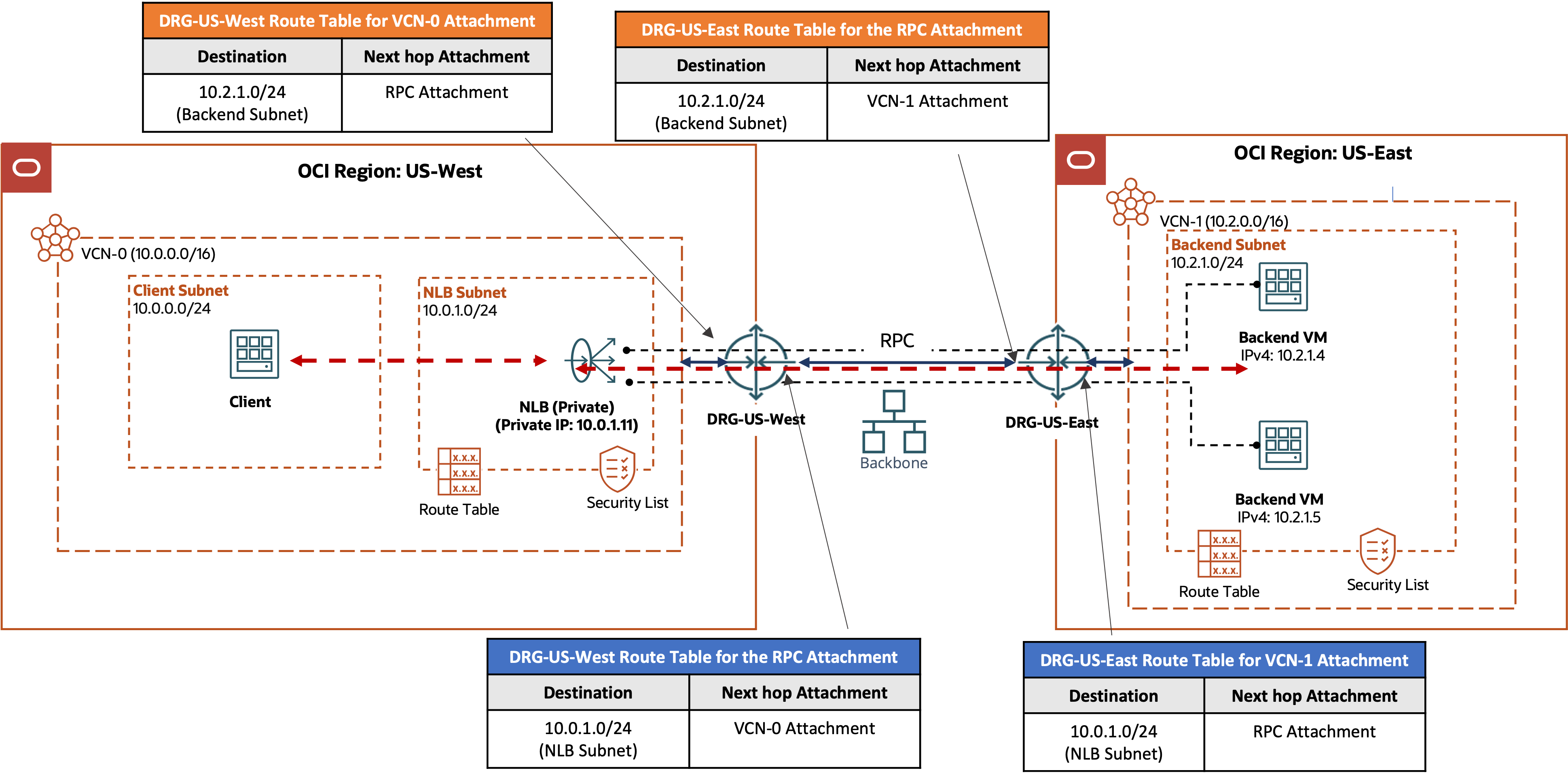

The following example shows a network load balancer in the OCI US-West region with backends in the remote US-East region. The health check for the backend set runs on TCP port 80. The VCN routing and security list requirements discussed in the previous scenario (backends in a different VCN through a DRG) apply here too. You also need to connect the DRGs in the two regions with a remote peering connection (RPC). If you use the autogenerated DRG route tables for the VCN and RPC attachments as is, the VCN routes in each region are automatically propagated to the other region and imported into the DRG route tables for the VCN attachments. If you changed the import distribution policies of the autogenerated DRG route tables, or you use your own DRG route tables for the VCN or RPC attachments, ensure that your DRG route tables have the required import distribution policies so that the DRG route tables for the VCN attachments import routes from the RPC attachments, and vice versa.
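A simplified way to reason about import distribution policies is to treat each DRG route table as a set of attachment types it imports from. The sketch below does that for the US-West DRG. Everything in it is a hypothetical model: the attachment names, the CIDRs, and the type labels are illustrative, and the import sets only approximate the autogenerated tables as we understand them (the VCN-attachment table importing from all attachment types, the RPC-attachment table importing VCN routes only). Check the OCI DRG documentation for the actual defaults.

```python
from dataclasses import dataclass

# Attachment types as plain strings (hypothetical labels for this sketch).
ALL_TYPES = {"VCN", "RPC", "VIRTUAL_CIRCUIT", "IPSEC_TUNNEL"}


@dataclass
class Attachment:
    name: str
    kind: str
    exported_cidrs: list[str]  # routes this attachment contributes to the DRG


@dataclass
class DrgRouteTable:
    name: str
    import_from: set[str]  # simplified import distribution policy: attachment types


def imported_routes(table: DrgRouteTable, attachments: list[Attachment],
                    owner: Attachment) -> dict[str, str]:
    """Routes (CIDR -> next-hop attachment) a DRG route table would import,
    skipping the attachment that uses this table."""
    routes = {}
    for att in attachments:
        if att is not owner and att.kind in table.import_from:
            for cidr in att.exported_cidrs:
                routes[cidr] = att.name
    return routes


# US-West DRG with hypothetical CIDRs: the RPC carries the US-East backend VCN.
nlb_vcn = Attachment("nlb-vcn-attachment", "VCN", ["10.0.0.0/16"])
rpc = Attachment("rpc-to-us-east", "RPC", ["10.20.0.0/16"])
attachments = [nlb_vcn, rpc]

vcn_table = DrgRouteTable("vcn-attachments", ALL_TYPES)  # imports from everything
rpc_table = DrgRouteTable("rpc-attachments", {"VCN"})    # imports VCN routes only
print(imported_routes(vcn_table, attachments, owner=nlb_vcn))
print(imported_routes(rpc_table, attachments, owner=rpc))
```

If you replace `vcn_table` with a custom table whose `import_from` omits `"RPC"`, the route to the remote backend VCN disappears, which is the failure mode the paragraph above warns about.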

OCI network load balancers with on-premises backends

Support for on-premises backends has been a frequently requested enhancement for OCI network load balancers, as customers want to use OCI-managed network load balancers to scale out applications and services hosted in their on-premises networks. A popular use case of this capability is using an OCI network load balancer to front a cluster of on-premises DNS servers, managing capacity and ensuring redundancy.

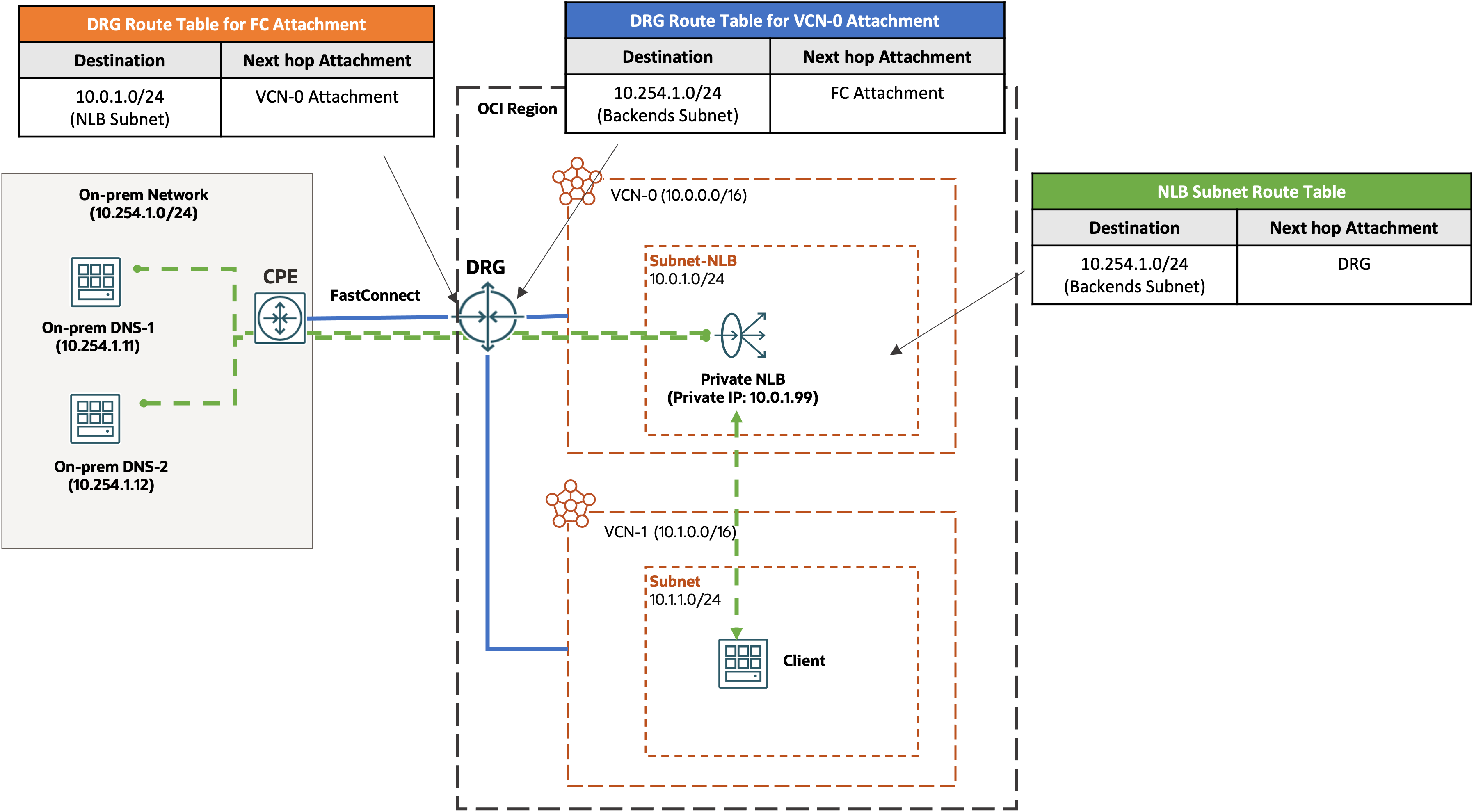

The following diagram illustrates this concept in action. It shows a private DNS setup in which the on-premises DNS servers are the backends of an OCI network load balancer. The on-premises network is connected to the OCI region through FastConnect virtual circuits.

In the routing configuration of our example, the network load balancer subnet’s route table must include a static route for the on-premises backend subnet, with the DRG as the next-hop target.

The diagram also highlights the required routes in the DRG route tables for both the network load balancer VCN attachment and the FastConnect virtual circuit attachment. The route table for the network load balancer VCN attachment needs a route for the on-premises backend subnet with the FastConnect virtual circuit attachment as the next hop, while the route table for the FastConnect virtual circuit attachment needs a route for the network load balancer subnet or VCN CIDR with the network load balancer VCN attachment as the next hop. If you use the autogenerated DRG route tables for these two attachments with their default import distribution policies, the DRG’s dynamic routing populates these routes automatically. If you changed the import distribution policies of these autogenerated route tables or use your own DRG route tables, ensure that they have the appropriate import distribution policies to import routes from each other; for example, the FastConnect attachment route table must import routes from the network load balancer VCN attachment. Because the DRG learns the on-premises network routes from the customer-premises equipment (CPE) through BGP, you must also ensure that BGP on the CPE is configured to advertise the on-premises backend subnet to the DRG.
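The four requirements in the previous two paragraphs can be written down as a checklist. The sketch below is a hypothetical troubleshooting aid, not an OCI tool: `missing_config`, the target labels (`"DRG"`, `"fastconnect-vc"`, `"nlb-vcn-attachment"`), and all addresses are illustrative. In a real DRG, the on-premises route in the VCN attachment's table is itself learned from the CPE's BGP advertisement; this sketch simply checks each piece independently.

```python
import ipaddress
from typing import Optional


def lookup(route_table: dict[str, str], ip: str) -> Optional[str]:
    """Longest-prefix match: CIDR -> next-hop target."""
    addr = ipaddress.ip_address(ip)
    best = None
    for cidr, target in route_table.items():
        net = ipaddress.ip_network(cidr)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, target)
    return best[1] if best else None


def missing_config(nlb_ip: str, backend_ip: str, nlb_subnet_rt: dict[str, str],
                   drg_vcn_rt: dict[str, str], drg_vc_rt: dict[str, str],
                   cpe_advertised: list[str]) -> list[str]:
    """List the pieces that would break the NLB <-> on-premises backend path."""
    problems = []
    if lookup(nlb_subnet_rt, backend_ip) != "DRG":
        problems.append("NLB subnet route table lacks a route to the backend via the DRG")
    if lookup(drg_vcn_rt, backend_ip) != "fastconnect-vc":
        problems.append("DRG table for the NLB VCN attachment lacks the on-prem route")
    if lookup(drg_vc_rt, nlb_ip) != "nlb-vcn-attachment":
        problems.append("DRG table for the virtual circuit lacks the NLB subnet route")
    backend = ipaddress.ip_address(backend_ip)
    if not any(backend in ipaddress.ip_network(c) for c in cpe_advertised):
        problems.append("CPE does not advertise the backend subnet over BGP")
    return problems


# Hypothetical addressing for the DNS example.
print(missing_config(
    nlb_ip="10.0.1.5",
    backend_ip="172.16.10.53",
    nlb_subnet_rt={"172.16.10.0/24": "DRG"},
    drg_vcn_rt={"172.16.10.0/24": "fastconnect-vc"},
    drg_vc_rt={"10.0.0.0/16": "nlb-vcn-attachment"},
    cpe_advertised=["172.16.10.0/24"],
))
```

With every piece in place the result is an empty list; removing any single route or the BGP advertisement adds exactly one entry describing the gap.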

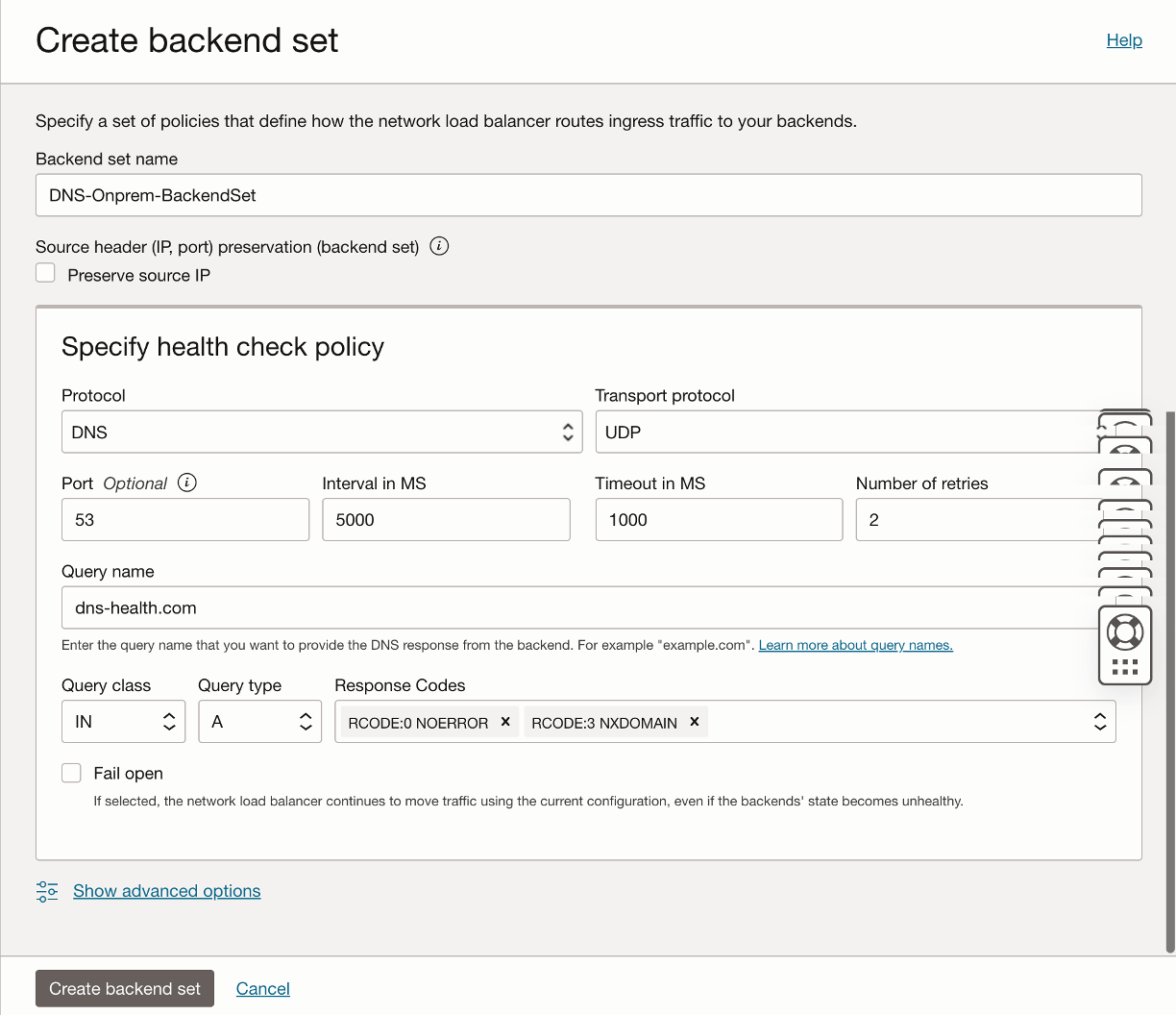

The health check in this example uses the DNS protocol on UDP port 53, as shown in the following figure:

DNS health checks are another newly added capability of OCI Network Load Balancer. My colleague, Tim Sammut, has published a blog post on using OCI Network Load Balancer for DNS redundancy and failover. Read it to learn more about the DNS use case with OCI Network Load Balancer.
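To illustrate what a DNS health check does at the protocol level, here is a minimal probe that sends one DNS query (RFC 1035 wire format) over UDP port 53 and treats a matching NOERROR reply as healthy. This is only a sketch of the general idea: it does not describe how OCI Network Load Balancer implements its checker, the NOERROR-only success criterion is an assumption, and `build_dns_query`, `response_is_healthy`, `dns_health_check`, and the query name are hypothetical.

```python
import random
import socket
import struct


def build_dns_query(name: str, query_id: int, qtype: int = 1) -> bytes:
    """Build a minimal DNS query: 12-byte header plus one question, class IN."""
    # Header fields: ID, flags (RD=1), QDCOUNT=1, ANCOUNT, NSCOUNT, ARCOUNT.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    return header + qname + struct.pack("!HH", qtype, 1)  # QTYPE, QCLASS=IN


def response_is_healthy(response: bytes, query_id: int) -> bool:
    """True if the reply echoes our ID, has QR=1, and RCODE is NOERROR (0)."""
    if len(response) < 12:
        return False
    resp_id, flags = struct.unpack("!HH", response[:4])
    return resp_id == query_id and bool(flags & 0x8000) and (flags & 0x000F) == 0


def dns_health_check(server_ip: str, name: str, timeout: float = 2.0) -> bool:
    """Send one UDP query to port 53; a timeout or socket error counts as unhealthy."""
    query_id = random.randint(0, 0xFFFF)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        try:
            sock.sendto(build_dns_query(name, query_id), (server_ip, 53))
            data, _ = sock.recvfrom(512)
        except OSError:  # includes socket timeouts
            return False
    return response_is_healthy(data, query_id)
```

For example, `dns_health_check("172.16.10.53", "example.internal")` would probe a hypothetical on-premises DNS server; matching on the query ID guards against accepting a stale or unrelated reply.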

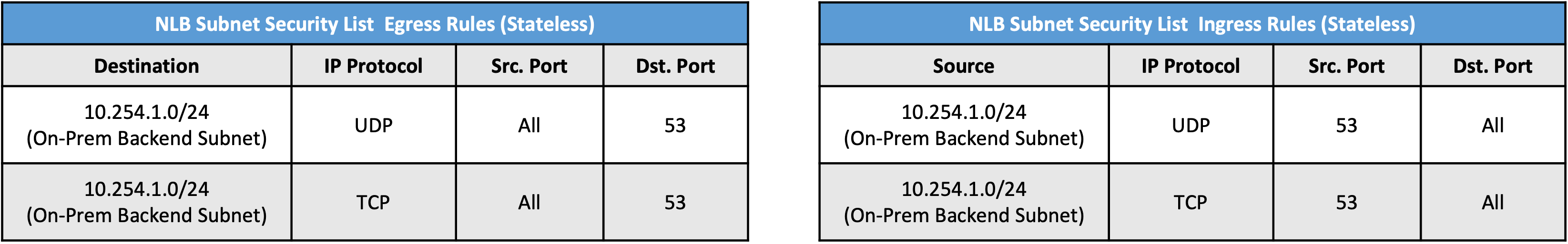

DNS tends to generate extremely high volumes of short-lived connections for queries and responses between clients and DNS servers, reaching tens of thousands or even millions of requests or connections per second (CPS). Using stateful security rules for DNS traffic at such high CPS rates can exhaust the connection tracking resources on the network load balancers or the backend compute hosts that enforce the stateful rules. So, the best practice for DNS or any other service with similarly high CPS rates is to use stateless security rules, which require you to create explicit permit rules for both the ingress and egress directions. The following image shows the suggested stateless security list rules for the network load balancer subnet, or the NSG of the network load balancer, in our example:
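As a rough illustration of why stateless rules must cover both directions, the sketch below checks one DNS query and response as they cross the network load balancer subnet. It is a hypothetical model, not the rule set from the image: `StatelessRule`, `Packet`, `allowed`, the client and backend CIDRs, and the ephemeral ports are all assumptions. Because nothing is tracked, each of the four packets must match its own rule.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class StatelessRule:
    direction: str                  # "ingress" or "egress"
    cidr: str                       # source for ingress, destination for egress
    protocol: str                   # "udp" or "tcp"
    src_port: Optional[int] = None  # None matches any source port
    dst_port: Optional[int] = None  # None matches any destination port


@dataclass(frozen=True)
class Packet:
    direction: str  # as seen from the NLB subnet
    remote_ip: str
    protocol: str
    src_port: int
    dst_port: int


def allowed(rules: list[StatelessRule], pkt: Packet) -> bool:
    """Stateless check: every packet must match a rule in its own direction."""
    addr = ipaddress.ip_address(pkt.remote_ip)
    return any(
        r.direction == pkt.direction
        and r.protocol == pkt.protocol
        and addr in ipaddress.ip_network(r.cidr)
        and r.src_port in (None, pkt.src_port)
        and r.dst_port in (None, pkt.dst_port)
        for r in rules
    )


CLIENTS = "192.168.0.0/16"      # hypothetical DNS client range
BACKENDS = "172.16.10.0/24"     # hypothetical on-premises DNS server subnet

nlb_rules = [
    StatelessRule("ingress", CLIENTS, "udp", dst_port=53),   # queries from clients
    StatelessRule("egress", CLIENTS, "udp", src_port=53),    # replies to clients
    StatelessRule("egress", BACKENDS, "udp", dst_port=53),   # queries to backends
    StatelessRule("ingress", BACKENDS, "udp", src_port=53),  # replies from backends
]

# One query/response exchange as seen at the NLB subnet (ports are illustrative).
flow = [
    Packet("ingress", "192.168.5.20", "udp", 53000, 53),
    Packet("egress", "172.16.10.53", "udp", 40000, 53),
    Packet("ingress", "172.16.10.53", "udp", 53, 40000),
    Packet("egress", "192.168.5.20", "udp", 53, 53000),
]
print(all(allowed(nlb_rules, p) for p in flow))
```

This prints `True`. Removing any one of the four rules blocks the corresponding packet, which a stateful rule would otherwise have permitted implicitly at the cost of a connection tracking entry.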

Conclusion

These enhancements remove long-standing constraints on OCI network load balancer backends, expanding their deployment possibilities and connectivity options. Together with other recently introduced Network Load Balancer features, such as DNS health checks, they enable customers to apply OCI Network Load Balancer to a broader range of use cases while simplifying their network designs on OCI. Stay tuned for further developments and new functionality from our team.

For more information about Oracle Cloud Infrastructure Network Load Balancer and cloud networking, see the following resources: