In 2019, Oracle and Microsoft announced a partnership to enhance cloud interoperability by connecting Oracle Cloud Infrastructure (OCI) and Microsoft Azure through a private, secure, low-latency, high-throughput network: the OCI-Azure Interconnect. This partnership enables enterprises with many on-premises, mission-critical applications that use Oracle and Microsoft technologies to migrate easily to public clouds. This one-of-a-kind connected-clouds alliance, built for our mutual customers and complementary cloud services, opens the door to the swift migration of on-premises applications. Customers can then use a broader range of multicloud solutions to integrate their implementations into a single unified enterprise cloud solution. Today, we have 12 interconnected cloud regions and plan to add more.

Customers frequently ask about the network latency and how much network throughput and bandwidth they can achieve using the OCI-Azure Interconnect. What are the best practices to optimize the network latency using the Interconnect?

This blog post highlights the importance of latency, the best practices to optimize network latency using the OCI-Azure Interconnect, and the impact on performance for various types of Oracle workloads. It also covers intercloud latency across all OCI and Azure interconnected regions. We provide details on what we tested and how we tested it, so that anyone can recreate these tests.

This blog post doesn’t show the OCI-Azure Interconnect configuration steps, but you can follow the configuration steps in the Step-by-Step Guide.

What we tested and how

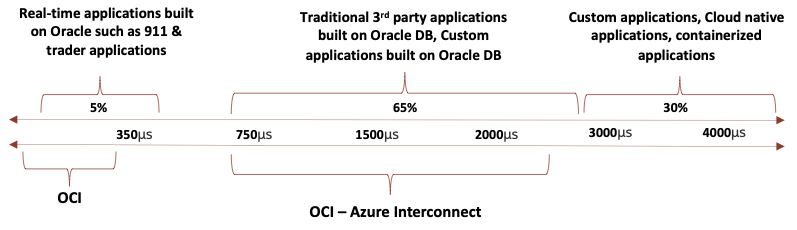

We used the sockperf tool to measure TCP and UDP network latency for database workloads and ICMP ping to measure network latency for other workloads. After working with multiple customers over the last couple of years, we determined that the OCI-Azure Interconnect network latency is sufficient for over 95% of applications built on Oracle databases. Both the TCP and UDP round-trip latency and the ICMP round-trip latency are in the 1–2 ms range for most of the OCI-Azure interconnected regions.

For example, for most Oracle applications, third-party packaged applications, and custom applications, the Interconnect’s latency causes minimal performance degradation. If we compare an average latency of 700 μs (microseconds) with an average latency of 1,300 μs, the drop in application performance is negligible for most Oracle Applications customers and for modern applications built with concurrency in mind on the Oracle database.
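To make the 700 μs versus 1,300 μs comparison concrete, the following sketch estimates the extra network time for a request that issues its database round trips one after another. The round-trip counts are hypothetical inputs, not measurements from these tests, and the model deliberately ignores concurrency, which is what hides most of this cost in modern applications.

```python
def added_latency_ms(round_trips: int,
                     rtt_low_us: float = 700.0,
                     rtt_high_us: float = 1300.0) -> float:
    """Extra time in milliseconds that a request spends on the network when
    the round-trip time rises from rtt_low_us to rtt_high_us.

    Assumes strictly serial round trips (a simplification for illustration).
    """
    if round_trips < 0:
        raise ValueError("round_trips must be non-negative")
    return round_trips * (rtt_high_us - rtt_low_us) / 1000.0


if __name__ == "__main__":
    # Hypothetical request profiles: 1, 10, and 100 serial round trips.
    for trips in (1, 10, 100):
        print(f"{trips:>3} serial round trips: +{added_latency_ms(trips):.1f} ms")
```

Under this simple model, a request with 10 serial round trips gains 6 ms, so the difference matters mainly for very chatty, single-threaded workloads.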

Best practices for the OCI-Azure Interconnect

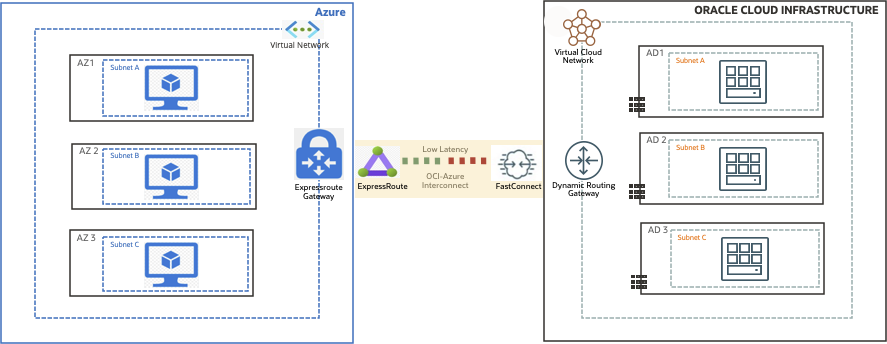

We used the following architecture to validate network latency between a virtual machine (VM) placed in Azure and a VM placed in OCI.

Figure 1: A sample architecture using clouds interconnected in Azure and OCI

The following list gives the best practices for interconnecting OCI with Azure using OCI’s FastConnect virtual circuit and Azure’s ExpressRoute virtual circuit. We applied these best practices to validate the network latency between OCI and Azure VMs. However, the right choices can change based on your application workload and network connectivity requirements.

Interconnect setup

- Create an Azure ExpressRoute circuit with the appropriate port size, based on the throughput required for the application workload. Select “Oracle Cloud FastConnect” as the provider and select the “local” SKU option for the Azure ExpressRoute virtual circuit.

- Create the Azure ExpressRoute Gateway and choose the “ultra-performance” SKU for it.

- Create an OCI FastConnect virtual circuit with the appropriate port size, based on the throughput required for the application workload. Choose “Microsoft Azure: ExpressRoute” as the provider and use the “service key” of the Azure ExpressRoute virtual circuit as the partner service key.

- Enable the FastPath option when adding a connection in the Azure ExpressRoute Gateway.

- Ensure that the port size of the Azure ExpressRoute virtual circuit is the same as the port size of the OCI FastConnect virtual circuit.

- Oracle and Azure build high availability into the physical layer by connecting multiple dark-fiber cables from OCI data centers to Azure data centers.

- You can choose to use multiple virtual circuits (FastConnect ports and ExpressRoute ports) to achieve the required throughput between OCI and Azure.
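The sizing guidance in this list can be sketched as a small calculation. The function names and the throughput figures here are hypothetical and only illustrate the arithmetic. Check the FastConnect and ExpressRoute documentation for the port sizes available in your region.

```python
import math


def circuits_needed(required_gbps: float, port_gbps: float) -> int:
    """Number of equally sized virtual circuits needed to carry required_gbps."""
    if required_gbps <= 0 or port_gbps <= 0:
        raise ValueError("throughput and port size must be positive")
    return math.ceil(required_gbps / port_gbps)


def ports_match(expressroute_gbps: float, fastconnect_gbps: float) -> bool:
    """True when both sides of the Interconnect use the same port size."""
    return math.isclose(expressroute_gbps, fastconnect_gbps)


if __name__ == "__main__":
    # Hypothetical workload: 25 Gbps of required throughput over 10 Gbps ports.
    print(circuits_needed(25, 10))
    print(ports_match(10, 10))
```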

VM setup

- Enable SR-IOV and accelerated networking on the Azure VM.

- Use the Azure proximity group to place the Azure VMs if you’re using more than one VM to deploy the application workload.

- Azure availability zones and OCI availability domains are logical mappings of physical data centers within a cloud region. They vary for every Azure tenant and account and every OCI tenancy. Find the Azure availability zone closest to the OCI availability domain, and place the application VMs and Oracle databases in that Azure availability zone and OCI availability domain.

- Enable hardware-assisted (SR-IOV) networking for the OCI VM.

- Size the VMs appropriately in both Azure and OCI to achieve the required network throughput with the virtual network interface card (VNIC).

- Choose the OCI availability domain closest to the Azure availability zone to place the Oracle database.
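Finding the closest availability zone and availability domain comes down to measuring every pair and keeping the one with the lowest round-trip time. The following is a minimal sketch of that selection step. The helper name is hypothetical, and the sample values are the two Ashburn and US East pairs reported in the results table later in this post. A real survey would include every pair in your own tenancy.

```python
from typing import Dict, Tuple

Pair = Tuple[str, str]  # (Azure availability zone, OCI availability domain)


def closest_pair(rtt_us: Dict[Pair, float]) -> Pair:
    """Return the AZ/AD pair with the lowest measured round-trip time."""
    if not rtt_us:
        raise ValueError("no measurements supplied")
    return min(rtt_us, key=rtt_us.get)


# TCP and UDP RTT in microseconds for two Ashburn / US East pairs.
ashburn = {("AZ3", "AD1"): 691.0, ("AZ1", "AD3"): 1411.0}

if __name__ == "__main__":
    print(closest_pair(ashburn))
```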

Network latency using OCI-Azure Interconnect

We performed two types of tests: sockperf tests to capture TCP and UDP network latency, and ICMP ping tests to capture ping latency. We created a VM with a private CIDR and subnet in each Azure availability zone and each OCI availability domain in cloud regions that have multiple availability zones or availability domains.

We ran each test for five minutes and captured the round-trip (RTT) network latency. We used the 1 Gbps port size of Azure ExpressRoute and the 1 Gbps port size of OCI FastConnect for the test. We used the following VM shapes to test the latency between the OCI VM and Azure VM.

OCI VM configuration

- Operating System: Oracle Linux 7.9

- VM shape: VM.Standard3.Flex (2 OCPU, 32 GB RAM, and 2 Gbps network bandwidth)

Azure VM configuration

- Operating System: Oracle Linux 7.9

- VM shape: D4s_v3 (4 vCPUs and 16 GB RAM)

ICMP ping test

We ran all the ICMP latency tests with a 1-microsecond interval, running continuously for five minutes. After five minutes, we captured the average round-trip latency. We ran the ICMP test between the Azure VM and the OCI VM for each Azure availability zone and OCI availability domain.

The following command is a sample of what we used to capture the network latency:

sudo ping <Private IP Address> -i 0.000001

Syntax:

-i (interval): The amount of time in seconds to wait between ICMP echo requests. Fractional seconds are allowed. The value must be in the range of 0.000001-6000.

Sockperf test

We ran all the TCP and UDP latency tests using the sockperf tool, running continuously for five minutes. After five minutes, we captured the average round-trip latency. We ran the TCP and UDP network latency tests between the Azure VM and the OCI VM for each Azure availability zone and OCI availability domain.

The following commands are samples of what we used to capture the network latency:

- On the server (destination host)

  Syntax: #sudo sockperf sr --tcp -i <IP of Local host> -p <Unused server port>

  Example: #sudo sockperf sr --tcp -i 10.0.5.21 -p 15000

- On the client (source host)

  Syntax: #sockperf ping-pong -i <IP of Destination host> --tcp -t 300 -p <Destination server port> --full-rtt

  Example: #sockperf ping-pong -i 10.0.5.21 --tcp -t 300 -p 15000 --full-rtt
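When you repeat the test across many availability zone and availability domain pairs, it can help to generate the command lines instead of typing them. The following sketch assembles the same server and client commands shown above, using only the flags that appear in those samples. The helper names are hypothetical, and the default of 300 seconds matches the five-minute run.

```python
import shlex


def sockperf_server_cmd(local_ip: str, port: int) -> str:
    """Command line for the sockperf server on the destination host."""
    return shlex.join(
        ["sudo", "sockperf", "sr", "--tcp", "-i", local_ip, "-p", str(port)]
    )


def sockperf_client_cmd(server_ip: str, port: int, seconds: int = 300) -> str:
    """Command line for the sockperf ping-pong client on the source host."""
    return shlex.join(
        ["sockperf", "ping-pong", "-i", server_ip, "--tcp",
         "-t", str(seconds), "-p", str(port), "--full-rtt"]
    )


if __name__ == "__main__":
    print(sockperf_server_cmd("10.0.5.21", 15000))
    print(sockperf_client_cmd("10.0.5.21", 15000))
```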

The following table shows, for each interconnected region, the minimum and maximum round-trip latency in microseconds (μs) measured between an Azure availability zone and an OCI availability domain. The first row for each region is the pair with the lowest latency, and the second row is the pair with the highest.

| Interconnect regions | VMs in Azure availability zone (AZ) and OCI availability domain (AD) | ICMP RTT in μs | TCP and UDP RTT in μs |
| --- | --- | --- | --- |
| OCI Ashburn and Azure US East | Azure AZ3–OCI AD1 | 866 | 691 |
| | Azure AZ1–OCI AD3 | 1,647 | 1,411 |
| OCI London and Azure UK South | Azure AZ2–OCI AD2 | 869 | 787 |
| | Azure AZ1–OCI AD3 | 2,102 | 1,780 |
| OCI Frankfurt and Azure Germany West Central | Azure AZ1–OCI AD1 | 1,457 | 974 |
| | Azure AZ3–OCI AD2 | 1,666 | 1,611 |
| OCI Phoenix and Azure US West3 | Azure AZ3–OCI AD3 | 1,715 | 1,140 |
| | Azure AZ1–OCI AD1 | 2,754 | 2,730 |
| OCI Tokyo and Azure Japan East | Azure AZ2–OCI AD1 | 1,667 | 1,464 |
| | Azure AZ1–OCI AD1 | 2,143 | 1,937 |
| OCI San Jose and Azure US West | Azure AZ1–OCI AD1 | 872 | 815 |
| OCI Toronto and Azure Canada Central | Azure AZ1–OCI AD1 | 1,777 | 1,602 |
| | Azure AZ3–OCI AD1 | 2,001 | 1,643 |
| OCI Amsterdam and Azure West Europe | Azure AZ3–OCI AD1 | 1,640 | 1,554 |
| | Azure AZ1–OCI AD1 | 2,134 | 1,755 |
| OCI Vinhedo and Azure Brazil South | Azure AZ2–OCI AD1 | 1,584 | 1,525 |
| | Azure AZ3–OCI AD1 | 1,949 | 1,667 |
| OCI Seoul and Azure South Korea Central | Azure AZ2–OCI AD1 | 1,045 | 1,146 |
| | Azure AZ3–OCI AD1 | 1,492 | 1,276 |
| OCI Singapore and Azure Southeast Asia | Azure AZ2–OCI AD1 | 1,541 | 1,362 |
| | Azure AZ1–OCI AD1 | 1,836 | 1,696 |
| OCI South Africa Central and Azure South Africa North | Azure AZ2–OCI AD1 | 1,455 | 1,246 |
| | Azure AZ3–OCI AD1 | 2,009 | 1,624 |
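As a quick way to read these results, the following sketch checks the TCP and UDP figures against a 2 ms threshold and computes how much latency a poor availability zone and availability domain pairing adds in each region. The values are copied from the table above. The short region keys, the function names, and the threshold are choices made for this illustration.

```python
# (lowest, highest) TCP and UDP RTT in microseconds per interconnected region,
# copied from the table above and keyed by OCI region for brevity.
TCP_UDP_RTT_US = {
    "Ashburn": (691, 1411),
    "London": (787, 1780),
    "Frankfurt": (974, 1611),
    "Phoenix": (1140, 2730),
    "Tokyo": (1464, 1937),
    "San Jose": (815, 815),  # only one AZ/AD pair was reported
    "Toronto": (1602, 1643),
    "Amsterdam": (1554, 1755),
    "Vinhedo": (1525, 1667),
    "Seoul": (1146, 1276),
    "Singapore": (1362, 1696),
    "South Africa Central": (1246, 1624),
}


def regions_over(threshold_us: float, use_worst: bool = True) -> list:
    """Regions whose worst-case (or best-case) RTT exceeds threshold_us."""
    idx = 1 if use_worst else 0
    return sorted(r for r, v in TCP_UDP_RTT_US.items() if v[idx] > threshold_us)


def placement_penalty_us(region: str) -> int:
    """Extra RTT from choosing the slowest reported pair instead of the fastest."""
    best, worst = TCP_UDP_RTT_US[region]
    return worst - best


if __name__ == "__main__":
    print(regions_over(2000))                   # worst-case pairs above 2 ms
    print(regions_over(2000, use_worst=False))  # best-case pairs above 2 ms
    print(placement_penalty_us("Ashburn"))
```

With the best pairing, every region stays under 2 ms for TCP and UDP. Only the slowest Phoenix pair exceeds it, which is why placement matters.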

Latency numbers can change slightly based on the VM placement in the Azure proximity group, the Azure availability zone, and the OCI availability domain in a particular cloud region, and on whether the network architecture uses hub-spoke transit routing. The mapping between Azure availability zones and OCI availability domains varies with each customer’s Azure account and tenant and OCI tenancy.

Conclusion

The OCI-Azure Interconnect allows enterprise customers to migrate or deploy their Oracle-based workloads to OCI and their Microsoft-centric workloads to Azure without refactoring or rearchitecting the applications. With low-latency, secure, private network connectivity between Oracle Cloud Infrastructure and Azure, customers can run their business-critical workloads without compromising performance or security.

To learn more about the Oracle Interconnect for Azure, see the following resources: