Model serving is the process of deploying a machine learning (ML) model into production, so that it can make predictions on new, unseen data. It involves loading the trained model from a model repository, deploying it to a server and providing an API endpoint for inference service. Model serving is a critical step in the ML pipeline because it enables organizations to operationalize their ML models to solve real-world problems.

When designing the serving architecture, consider the following major concepts:

- Scalability: Scale model serving capability to handle bursts of prediction traffic efficiently.

- Flexibility: Serve multiple models with different ML frameworks and switch between models easily.

- Performance: Infer with low latency for real-time applications and high throughput for offline applications.

Oracle Container Engine for Kubernetes

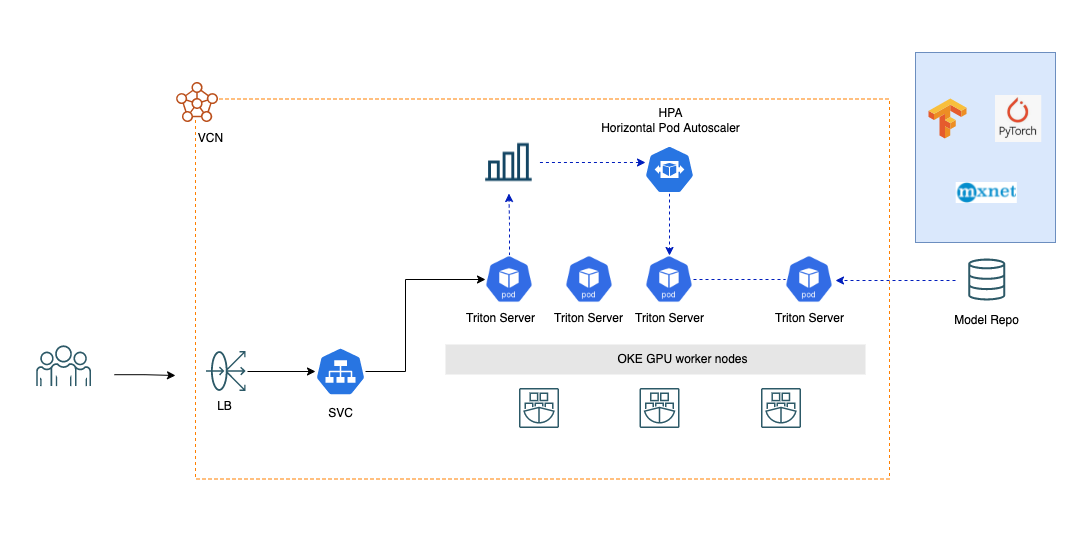

Oracle Container Engine for Kubernetes (OKE) is a managed service that enables you to run Kubernetes clusters on Oracle Cloud Infrastructure (OCI), allowing containerized applications to be run at scale without the complexity of managing Kubernetes infrastructure. OKE also supports heterogenous clusters, so you can create both CPU and GPU compute node pools to run different types of workloads in the same cluster.

All GPU worker nodes provisioned with OKE images or platform images come with the NVIDIA CUDA software stack preinstalled. To deploy an application, such as NVIDIA Triton Inference Server, on a GPU node, just specify the number of GPUs required in the Kubernetes pod specifications.

resources: limits: nvidia.com/gpu: 1

Triton: Simplifying ML Inference

NVIDIA Triton Inference Server is an open-source, platform-agnostic inference serving software for deploying and managing ML models in production environments. Triton Inference Server includes the following key features:

- Multi-framework: Supports all popular ML frameworks, including TensorFlow, PyTorch, TensorRT, ONNX, and more.

- High performance: Supports both CPU and GPU. Triton can use GPUs to accelerate inference, significantly reduce latency, and increase throughput.

- Cost effectiveness: Offers dynamic batching and concurrent model execution features to maximize GPU utilization and improve inference throughput.

Triton Inference Server can be accessed as a pre-built container image from the NVIDIA NGC catalog, so you can quickly deploy it on OKE. For scaling AI into production, global NVIDIA enterprise support for Triton is available with NVIDIA AI Enterprise software. The OCI GU1 instance family, powered by the NVIDIA A10 Tensor Core GPU and available in Bare Metal or VM shape, is an excellent choice for common ML inference workloads that balances price and performance. Triton can access models from cloud object storage or file storage, so you can use OCI Object Storage with S3 Compatibility API or File Storage service to host your model repository.

As inference service demand grows, you can use the horizontal pod autoscaler (HPA) to dynamically adjust the number of Triton Server containers, and the cluster autoscaler to automatically scale OKE worker nodes to meet target latency using real-time metrics provided natively by Triton Inference Sserver.

Get Started Today

Combining the convenience of hosted Kubernetes cluster by of OKE, the wide selection of both CPU and GPU compute instances for worker nodes, and the power of NVIDIA Triton Inference Server, you can easily deploy ML models at scale to deliver high-performing, cost-effective inference services on Oracle Cloud Infrastructure.

For more information, see the following resources:

- Running applications on GPU nodes

- Accelerate your real–time AI inference and graphics–intensive workloads with the NVIDIA Tensor Core GPU

- NVIDIA Triton Inference Server

- NVIDIA CUDA Toolkit

- NVIDIA CUDA-X Deep Learning Libraries

- NVIDIA NGC Catalog

- NVIDIA AI Enterprise

- Kubernetes horizontal pod autoscaling