We’re proud to announce validation of IBM Spectrum Scale Erasure Code Edition (ECE) on Oracle Cloud Infrastructure (OCI). You can run ECE on the OCI DenseIO.E4 Compute shape, which comes with local NVMe SSDs. These SSDs are nonephemeral, meaning no data loss on node reboot, which makes them ideal for low-latency file servers.

ECE provides IBM Spectrum Scale RAID as software, allowing customers to create IBM Spectrum Scale clusters that use scale-out storage. You can realize all the benefits of IBM Spectrum Scale and IBM Spectrum Scale RAID using OCI public cloud. ECE provides the following benefits:

- Reed-Solomon highly fault-tolerant declustered erasure coding, protecting against individual drive failures and node failures
- Disk hospital to identify issues before they become disasters
- End-to-end checksum to identify and correct errors introduced by network and media

Architecture

You can run ECE on OCI in the following architectures:

-

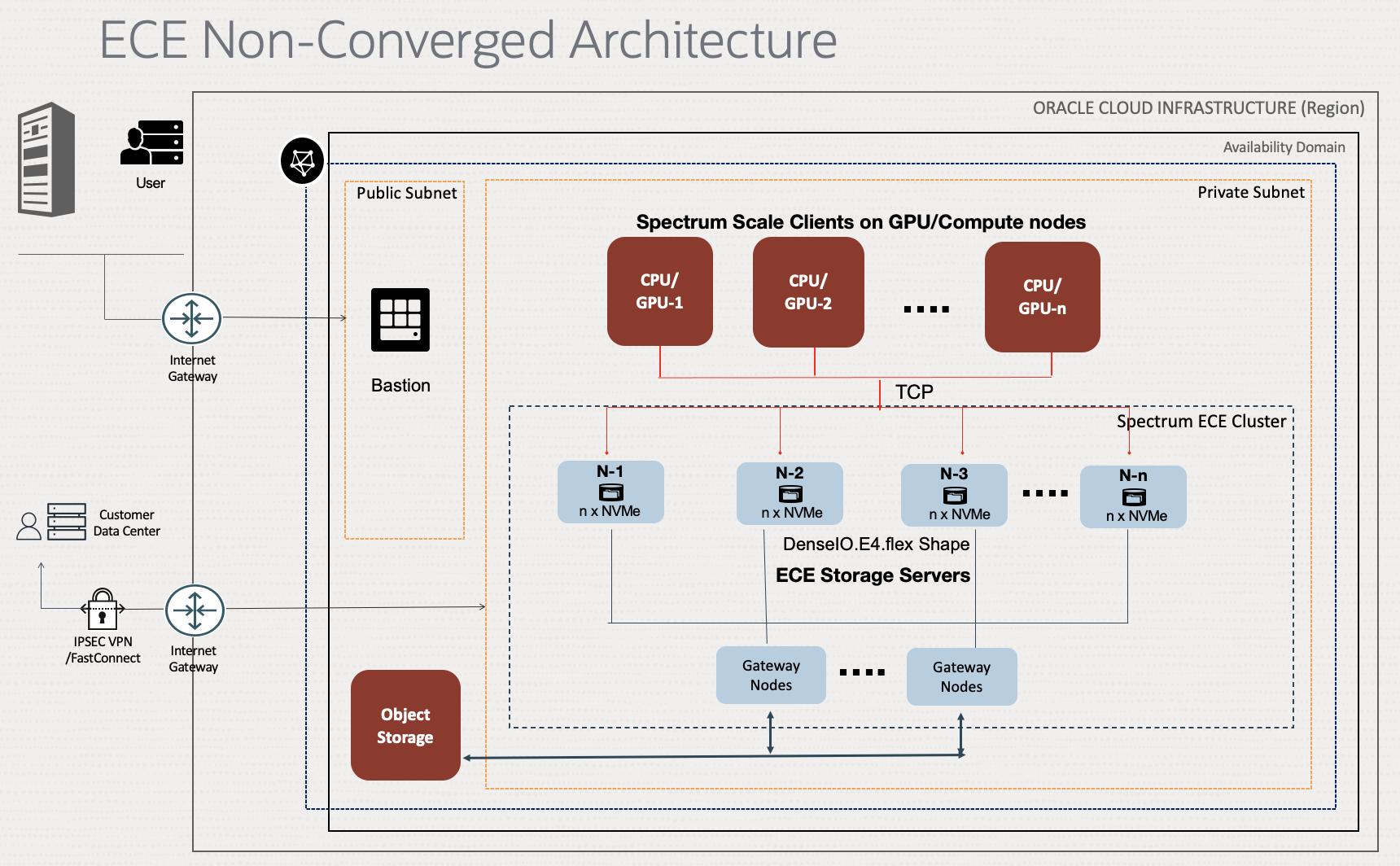

ECE nonconverged architecture: Dedicated ECE file server cluster and customer application with Spectrum Scale clients run on their own Compute nodes.

-

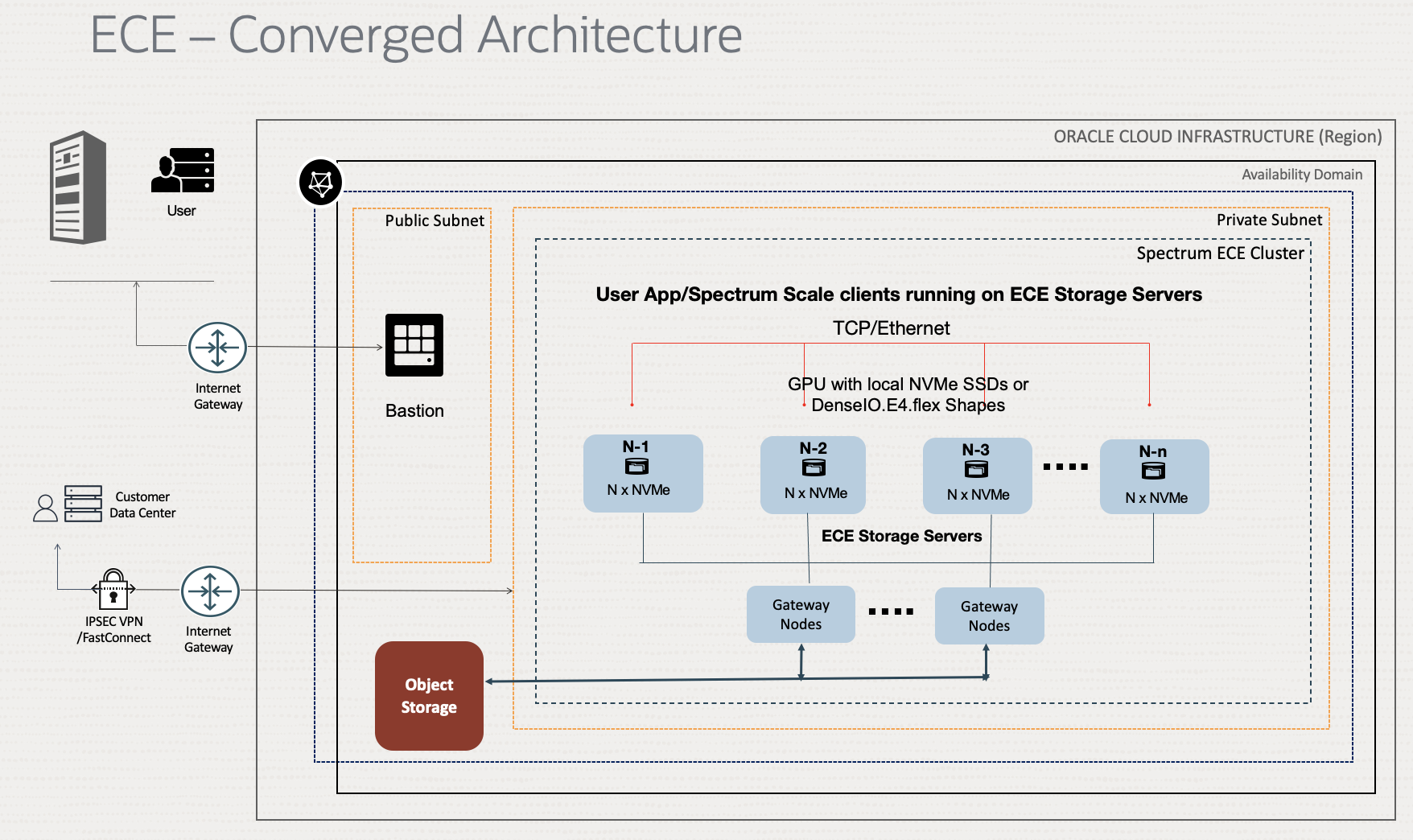

ECE converged architecture: Customer application, ECE client, and ECE file servers run on same set of nodes.

We recommend converged architecture only for vetted workloads with safeguards in place to avoid resource overallocation.


For storage tiering to OCI Object Storage, IBM Spectrum Scale recommends using dedicated Spectrum Scale Gateway nodes.

Sizing

The minimum recommended cluster size for production workloads is six VM.DenseIO.E4.flex nodes, each with two local NVMe SSDs (6.8 TB each), using 4+2p erasure coding. Building 4+2p erasure coding requires a total of 12 disks spread across the six nodes.

IBM Spectrum Scale Erasure Code Edition supports the following erasure codes and replication levels: 8+2p, 8+3p, 4+2p, 4+3p, 3WayReplication, and 4WayReplication.
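As a rough guide to how these options trade capacity for protection, the following sketch computes the share of raw capacity left for data under each code. It assumes the usual reading of the notation, where k+mp means k data strips plus m parity strips per stripe and N-way replication stores N full copies. The function name `ec_efficiency` is ours for illustration and is not part of any IBM tool.

```shell
# Hypothetical helper (not an IBM Spectrum Scale command): print the
# percentage of raw capacity that holds data for a given protection code.
# Assumes "k+mp" = k data strips + m parity strips, and "NWayReplication"
# = N full copies of the data.
ec_efficiency() {
  LC_ALL=C awk -v code="$1" 'BEGIN {
    if (code ~ /^[1-9][0-9]*[+][0-9]+p$/) {
      plus = index(code, "+")
      data = substr(code, 1, plus - 1) + 0
      parity = substr(code, plus + 1) + 0   # "2p" converts to the number 2
      printf "%.1f\n", 100 * data / (data + parity)
    } else if (code ~ /^[1-9][0-9]*WayReplication$/) {
      printf "%.1f\n", 100 / (code + 0)     # leading digits give the copy count
    } else {
      print "unknown code: " code > "/dev/stderr"
      exit 1
    }
  }'
}

# Print the data share for every code listed above.
for code in 8+2p 8+3p 4+2p 4+3p 3WayReplication 4WayReplication; do
  printf '%-16s %s%%\n' "$code" "$(ec_efficiency "$code")"
done
```

Under these assumptions, 4+2p leaves about 66.7% of raw capacity for data and 8+2p leaves 80%, while 3-way replication leaves about 33.3%.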

The following table shows the approximate usable file system storage capacity for 4+2p erasure coding:

| Number of nodes | Compute shape | Total raw storage | Spare capacity | Usable file system capacity (TiB) |
|---|---|---|---|---|
| 6 | VM.DenseIO.E4.flex (16 OCPUs) | Per node: 2 x 6.8 TB = 13.6 TB; per cluster: 81.6 TB | 1 disk (6.4 TB) | 44 |
| 6 | VM.DenseIO.E4.flex (32 OCPUs) | Per node: 4 x 6.8 TB = 27.2 TB; per cluster: 163.2 TB | 1 disk (6.4 TB) | 93 |
| 6 | BM.DenseIO.E4.128 (128 OCPUs) | Per node: 8 x 6.8 TB = 54.4 TB; per cluster: 326.4 TB | 3 disks (6.8 TB each) | 181 |
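The table values can be roughly reproduced from the raw figures. The following sketch subtracts the spare capacity, applies the 4/6 data fraction of 4+2p, and converts TB to TiB. It deliberately ignores metadata and other file system overhead, which is our assumption for why its results land a few percent above the table. The name `usable_tib` is illustrative and not an IBM tool.

```shell
# Illustrative estimate only: usable TiB = (raw TB - spare TB) * k / (k + m),
# converted from TB (10^12 bytes) to TiB (2^40 bytes).
# Ignores metadata and other overhead, so treat the result as an upper bound.
usable_tib() {
  # $1 = raw TB per cluster, $2 = spare TB, $3 = data strips, $4 = parity strips
  LC_ALL=C awk -v raw="$1" -v spare="$2" -v k="$3" -v m="$4" 'BEGIN {
    usable_tb = (raw - spare) * k / (k + m)
    printf "%.1f\n", usable_tb * 1e12 / 1099511627776
  }'
}

usable_tib 81.6  6.4  4 2   # six VM nodes, 16 OCPUs, 1 spare disk
usable_tib 163.2 6.4  4 2   # six VM nodes, 32 OCPUs, 1 spare disk
usable_tib 326.4 20.4 4 2   # six bare metal nodes, 3 spare disks of 6.8 TB
```

With the table's inputs, this estimate gives roughly 45.6, 95.1, and 185.5 TiB, compared with the 44, 93, and 181 TiB reported above.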

Performance

VM.DenseIO.E4 Cluster

On a converged ECE cluster of six nodes (VM.DenseIO.E4.flex with 16 OCPUs) using 4+2p erasure coding, we ran the gpfsperf-mpi tool to measure sequential create, read, and write with a 1-MB block size. We used a 500-GB file size, larger than the 256 GB of RAM on the nodes, to avoid caching effects.

The following table shows the results:

| Operations | Seq IO Throughput (1-MB block) |
|---|---|
| create | 5.7 GB/s |
| write | 5.68 GB/s |
| read | 6.96 GB/s |

BM.DenseIO.E4 Cluster

We also ran the gpfsperf performance benchmark on an ECE cluster of six bare metal file server nodes (BM.DenseIO.E4.128) with 4+2p erasure coding. We used 12 client nodes (VM.Optimized3.flex with 18 OCPUs, 256 GB of RAM, and 40 Gbps of network bandwidth) to generate I/O traffic. We ran the gpfsperf-mpi tool with a 4-MB block size and a 1-TB file size.

The following table shows the results:

| Operations | Seq IO Throughput (4-MB block) |
|---|---|
| create | 26–29 GB/s |
| write | 26–31 GB/s |
| read | 26–32 GB/s |

The following code block shows the performance test setup details:

# install mpich

/usr/lpp/mmfs/bin/mmdsh -N all yum -y install mpich-3.2 mpich-3.2-devel

# compile gpfsperf with mpi support

export PATH=$PATH:/usr/lib64/mpich-3.2/bin/

cd /usr/lpp/mmfs/samples/perf/

make gpfsperf-mpi

# copy to all nodes

scp gpfsperf-mpi nodeX:/usr/lpp/mmfs/samples/perf/

# To run

export PATH=$PATH:/usr/lib64/mpich-3.2/bin/

# create

mpirun -hostfile /root/hosts -np 12 /usr/lpp/mmfs/samples/perf/gpfsperf-mpi create seq -r 1M -n 500G /gpfs/nvme/gpfsperf3.out

# write

mpirun -hostfile /root/hosts -np 12 /usr/lpp/mmfs/samples/perf/gpfsperf-mpi write seq -r 1M -n 500G /gpfs/nvme/gpfsperf3.out

# read

mpirun -hostfile /root/hosts -np 12 /usr/lpp/mmfs/samples/perf/gpfsperf-mpi read seq -r 1M -n 500G /gpfs/nvme/gpfsperf3.out

Deployment

Use the following steps to deploy and configure ECE on OCI.

Prerequisites

- ECE servers provisioned in a private subnet: six VM.DenseIO.E4.flex nodes, each with 16 OCPUs (32 vCPUs), 256 GB of RAM, 16 Gbps of network bandwidth, and two 6.8-TB NVMe SSDs
- A bastion server provisioned in a public subnet, used to run the Ansible playbook that configures ECE
- Passwordless SSH for the root user configured between all ECE servers and the bastion node
- IBM Spectrum Scale ECE software binary downloaded to the bastion node. We used version 5.1.5.0 during our validation.
- Oracle Linux 7.9 with the Red Hat Compatible Kernel (RHCK), not the Unbreakable Enterprise Kernel (UEK). We used kernel version 3.10.0-1160.76.1.0.1.el7.x86_64.
- Version 2.3.2 of the IBM Spectrum Scale Ansible roles repo downloaded to /home/opc/my_project on the bastion node
- Ansible installed on the bastion
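Because the kernel prerequisite is easy to miss on Oracle Linux, a quick preflight check on each ECE server can help. The following is a minimal sketch that assumes UEK kernel release strings contain the substring "uek", while RHCK releases such as the one listed above do not. The helper name `is_rhck` is hypothetical.

```shell
# Hypothetical preflight helper: succeed only if the kernel release string
# does not look like a UEK kernel. Assumes UEK releases contain "uek".
is_rhck() {
  case "$1" in
    *uek*) return 1 ;;
    *)     return 0 ;;
  esac
}

# Check the kernel that is currently running on this node.
kernel="$(uname -r)"
if is_rhck "$kernel"; then
  echo "OK: $kernel does not look like a UEK kernel"
else
  echo "WARNING: $kernel looks like a UEK kernel; boot into RHCK before deploying"
fi
```

You can run the same check from the bastion over SSH against each node before starting the playbook.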

Deployment steps

- Set the environment variable.

export ANSIBLE_ROLES_PATH=/home/opc/my_project/collections/ansible_collections/ibm/spectrum_scale/roles

- Generate the hosts file (/home/opc/my_project/hosts).

# hosts:
[scale_node]
node1 scale_cluster_quorum=true scale_cluster_manager=true
node2 scale_cluster_quorum=true scale_cluster_manager=true
node3 scale_cluster_quorum=true scale_cluster_manager=false
node4 scale_cluster_quorum=false scale_cluster_manager=false
node5 scale_cluster_quorum=false scale_cluster_manager=false
node6 scale_cluster_quorum=false scale_cluster_manager=false
[ece_node]
node1 scale_out_node=true
node2 scale_out_node=true
node3 scale_out_node=true
node4 scale_out_node=true
node5 scale_out_node=true
node6 scale_out_node=true

- Define the recovery group (/home/opc/my_project/group_vars/all.yml).

scale_ece_rg:
  - rg: rg1
    nodeclass: nc1
    servers: node1,node2,node3,node4,node5,node6

- Create the playbook file (/home/opc/my_project/playbook.yml).

# playbook.yml:
---
- hosts: scale_node
  any_errors_fatal: true
  gather_subset:
    - hardware
  vars:
    - scale_version: 5.1.5.0
    - scale_install_localpkg_path: /home/opc/Spectrum_Scale_Erasure_Code-5.1.5.0-x86_64-Linux-install
    - scale_gpfs_path_url: /usr/lpp/mmfs/5.1.5.0/gpfs_rpms
  pre_tasks:
    - include_vars: scale_clusterdefinition.json
  roles:
    - core/precheck
    - core/node
    - core/cluster
    - core/postcheck
- hosts: ece_node
  any_errors_fatal: true
  gather_subset:
    - hardware
  vars:
    - scale_version: 5.1.5.0
    - scale_install_localpkg_path: /home/opc/Spectrum_Scale_Erasure_Code-5.1.5.0-x86_64-Linux-install
    - scale_gnr_url: gpfs_rpms/
  roles:
    - scale_ece/node
    - scale_ece/cluster

- Run the playbook.

cd /home/opc/my_project
sudo bash -c "export ANSIBLE_ROLES_PATH=/home/opc/my_project/collections/ansible_collections/ibm/spectrum_scale/roles ; ansible-playbook -i hosts playbook.yml"

If the playbook fails with “cannot find /usr/lpp/mmfs/bin/mmvdisk,” run the following command and then rerun the playbook.

ssh nodeX "sudo yum install nvme-cli -y ; sudo rpm -ihv /usr/lpp/mmfs/5.1.5.0/gpfs_rpms/gpfs.gnr-5.1.5-0.x86_64.rpm /usr/lpp/mmfs/5.1.5.0/gpfs_rpms/gpfs.gnr.support-scaleout-1.0.0-5.noarch.rpm /usr/lpp/mmfs/5.1.5.0/gpfs_rpms/gpfs.gnr.base-1.0.0-0.x86_64.rpm"

- Create the vdisk set. Run the following commands on one of the ECE nodes.

/usr/lpp/mmfs/bin/mmvdisk vs define --vdisk-set nvme --rg rg1 --set-size 100% --da DA1 --code 4+2p --block-size 1M
/usr/lpp/mmfs/bin/mmvdisk vdiskset create --vdisk-set nvme

- Create the file system and mount it on all nodes. Run the following commands on one of the ECE nodes.

/usr/lpp/mmfs/bin/mmvdisk fs create --file-system nvme --vs nvme
/usr/lpp/mmfs/bin/mmmount all -a
df -h | grep gpfs
nvme 44T 1.5T 42T 4% /gpfs/nvme
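The hosts file used in the deployment steps follows a regular pattern, so for larger clusters you might prefer to generate it. The following sketch mirrors the six-node example: it assumes nodes are named node1 through nodeN, that the first three are quorum nodes, and that the first two are cluster managers. The function name `gen_ece_hosts` and the naming scheme are hypothetical, so adjust them to your own host names and quorum layout.

```shell
# Hypothetical generator for the Ansible inventory used in the steps above.
# Assumes nodes are named node1..nodeN, the first three are quorum nodes,
# and the first two are cluster managers, mirroring the six-node example.
gen_ece_hosts() {
  count="$1"

  echo "[scale_node]"
  i=1
  while [ "$i" -le "$count" ]; do
    quorum=false
    manager=false
    if [ "$i" -le 3 ]; then quorum=true; fi
    if [ "$i" -le 2 ]; then manager=true; fi
    echo "node$i scale_cluster_quorum=$quorum scale_cluster_manager=$manager"
    i=$((i + 1))
  done

  echo "[ece_node]"
  i=1
  while [ "$i" -le "$count" ]; do
    echo "node$i scale_out_node=true"
    i=$((i + 1))
  done
}

# Print the inventory for a six-node cluster.
gen_ece_hosts 6
```

To write the file directly, redirect the output, for example `gen_ece_hosts 6 > /home/opc/my_project/hosts`.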

Summary

ECE on OCI lets you build a parallel file system that uses flash storage for low latency and erasure coding for a higher degree of availability and durability. You can also choose between OCI virtual machine (VM) and bare metal DenseIO.E4 Compute shapes to build file systems based on your performance, storage capacity, and budget requirements.

If you have any questions or need help designing an ECE solution on OCI, you can reach the blog author or ask your assigned sales team to engage the Oracle Cloud Infrastructure HPC and GPU Architects team. For ECE file system features, refer to the IBM Spectrum Scale Erasure Code Edition documentation.

Special thanks to Nikhil Khandelwal from the Spectrum Scale Development Advocacy group at IBM for his Spectrum Scale expertise and for working with the OCI team to get ECE validated on OCI. Nikhil has over 20 years of storage experience and has worked on Spectrum Scale deployments across a wide set of industries with varying workloads.