Modern enterprises require their virtual machine (VM) workloads to run continuously without disruption, yet in hyperscale cloud environments like Oracle Cloud Infrastructure (OCI), maintenance events on compute VMs must happen regularly for security and operational reasons. In this blog post, we review the architecture of OCI VM Live Migration, a capability that removes the customer overhead of dealing with maintenance events.

The following video begins with an overview of the VM components that need to be migrated, then explains in detail how each component is migrated and walks through the phases of the migration. Finally, it discusses critical design tradeoffs around customer availability and performance risk when designing at-scale live migration of customer VMs.

Live migration overview

OCI has a global footprint with 41+ public regions and many dedicated customer regions. At this scale, VM maintenance is under way in more than one region at any given time. OCI implemented live migration as a mechanism to move a running VM from one physical server to another with minimal impact, so that the VM does not even realize it is running on a new server once the migration completes!

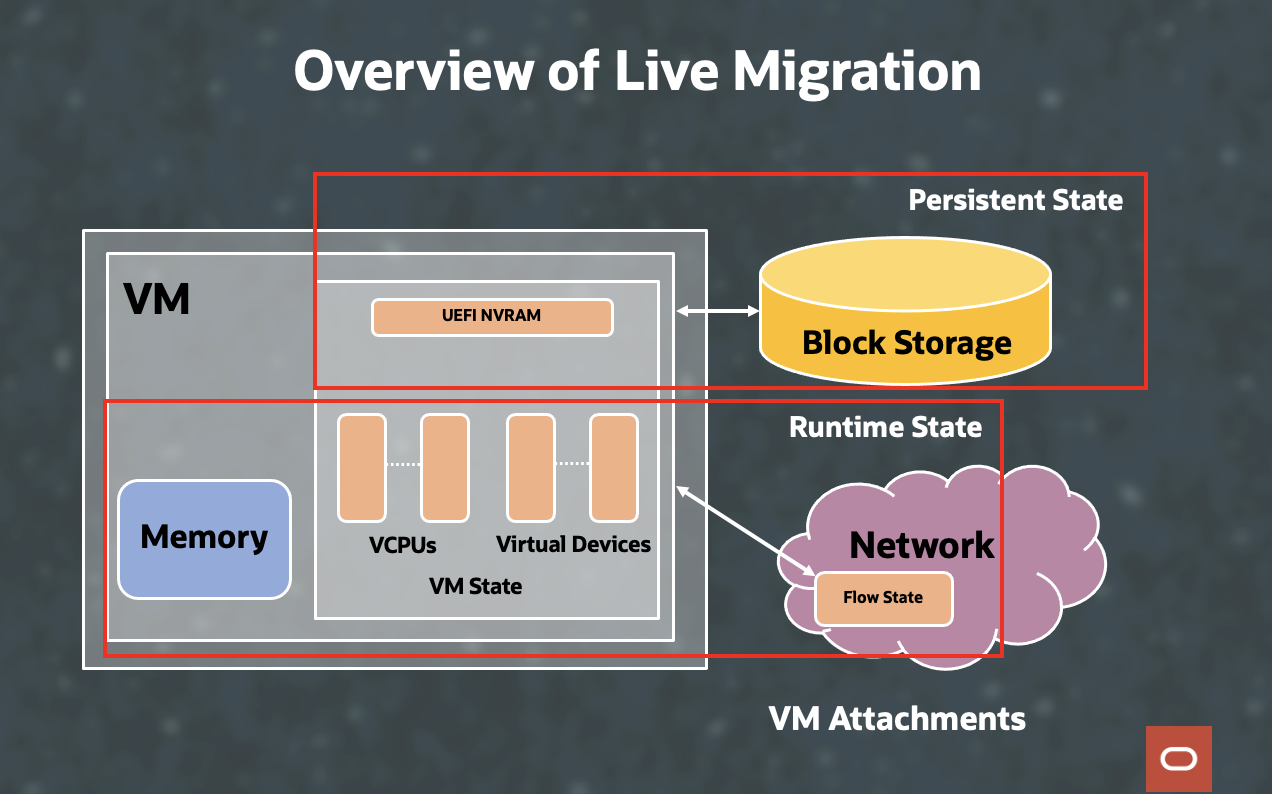

Figure 1: Overview of VM states for live migration

Live migration moves the state of the VM, which consists of the persistent state and the runtime state, as shown in Figure 1. The persistent state includes data on block volumes, the contents of the NVRAM, the VM’s configuration, and how the devices are laid out on the system bus. The runtime state includes the contents of the VM’s memory, the device state of connected devices, and the state stored at the network virtualization layer. When the VM has direct device attachments, such as passthrough network interface cards (NICs), the physical devices also hold runtime state.

VM Runtime State Migration

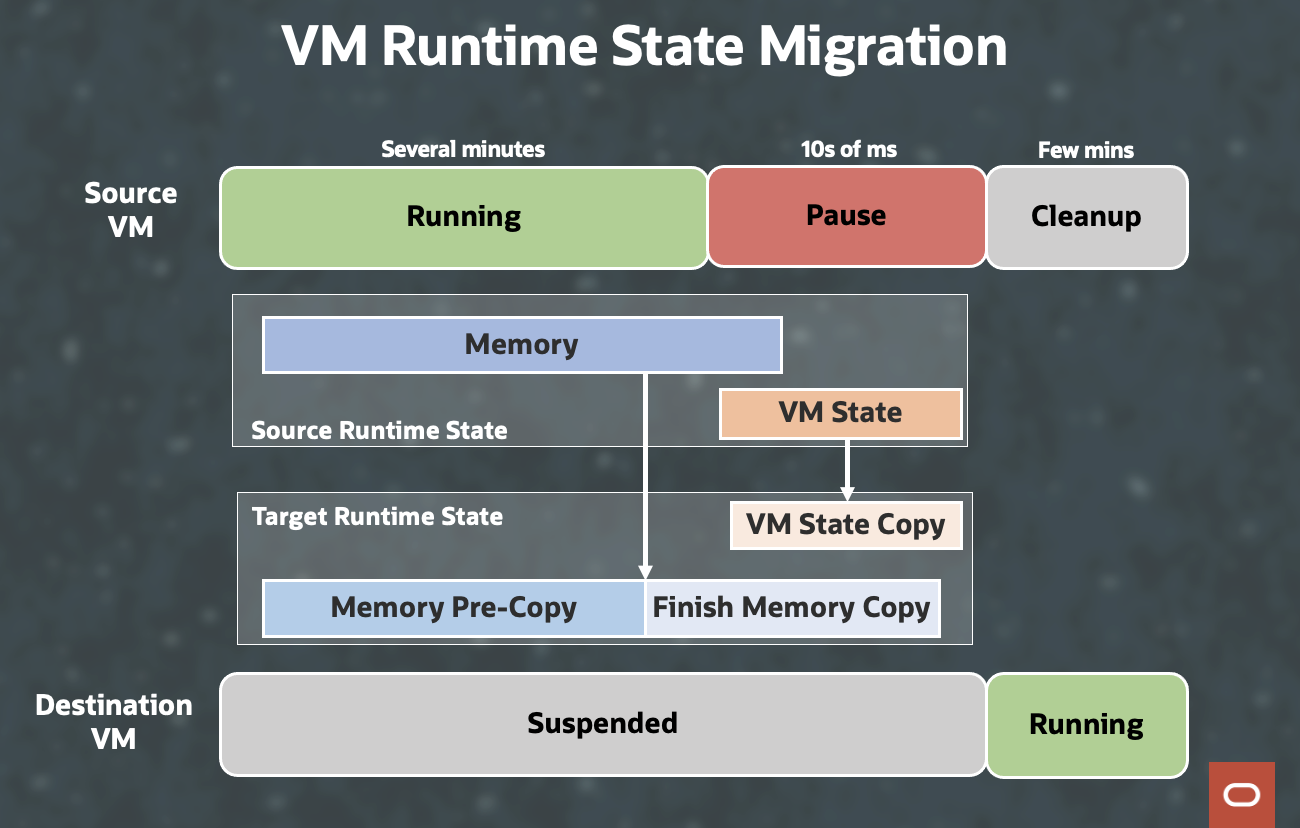

Figure 2: VM runtime state migration

The largest piece of runtime state is the contents of the VM’s memory. Most of it is copied over while the VM is still running. There are two main approaches to migrating memory: pre-copy, which transfers memory pages before the VM switches to the destination, and post-copy, which transfers them afterward. OCI implemented the pre-copy option to limit the VM pause to tens of milliseconds and to focus on availability as a fundamental principle. The video further discusses all the runtime state components shown in Figure 1 and Figure 2.
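To make the pre-copy idea concrete, here is a minimal Python sketch of an iterative pre-copy loop. It is a toy model, not OCI's implementation: the function name `precopy`, the fixed re-dirty percentage, the page-count pause budget, and the round limit are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class PrecopyResult:
    converged: bool          # True if the dirty set shrank below the pause budget
    rounds: int              # number of live copy rounds performed
    pages_sent_live: int     # pages copied while the VM kept running
    pages_sent_paused: int   # pages copied during the final brief pause


def precopy(total_pages, dirty_percent, pause_budget_pages, max_rounds=30):
    """Toy model of iterative pre-copy memory migration.

    Assumption: while a batch of pages is in flight, the running guest
    re-dirties `dirty_percent` percent as many pages as were just sent.
    """
    dirty = total_pages      # round 1 must send every page
    sent_live = 0
    rounds = 0
    while dirty > pause_budget_pages:
        if rounds == max_rounds:
            # Not converging: abort and leave the source VM running.
            return PrecopyResult(False, rounds, sent_live, 0)
        sent_live += dirty
        dirty = min(total_pages, dirty * dirty_percent // 100)
        rounds += 1
    # The remaining dirty set is small: pause the VM and copy the rest.
    return PrecopyResult(True, rounds, sent_live, dirty)


if __name__ == "__main__":
    print(precopy(total_pages=10_000, dirty_percent=20, pause_budget_pages=100))
    print(precopy(total_pages=10_000, dirty_percent=100, pause_budget_pages=100, max_rounds=5))
```

In this model the pause length is governed by `pages_sent_paused`, which is why a guest that dirties memory slowly relative to the copy rate can be paused only briefly, while a guest that dirties memory as fast as it is copied never converges.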

VM Persistent State Migration

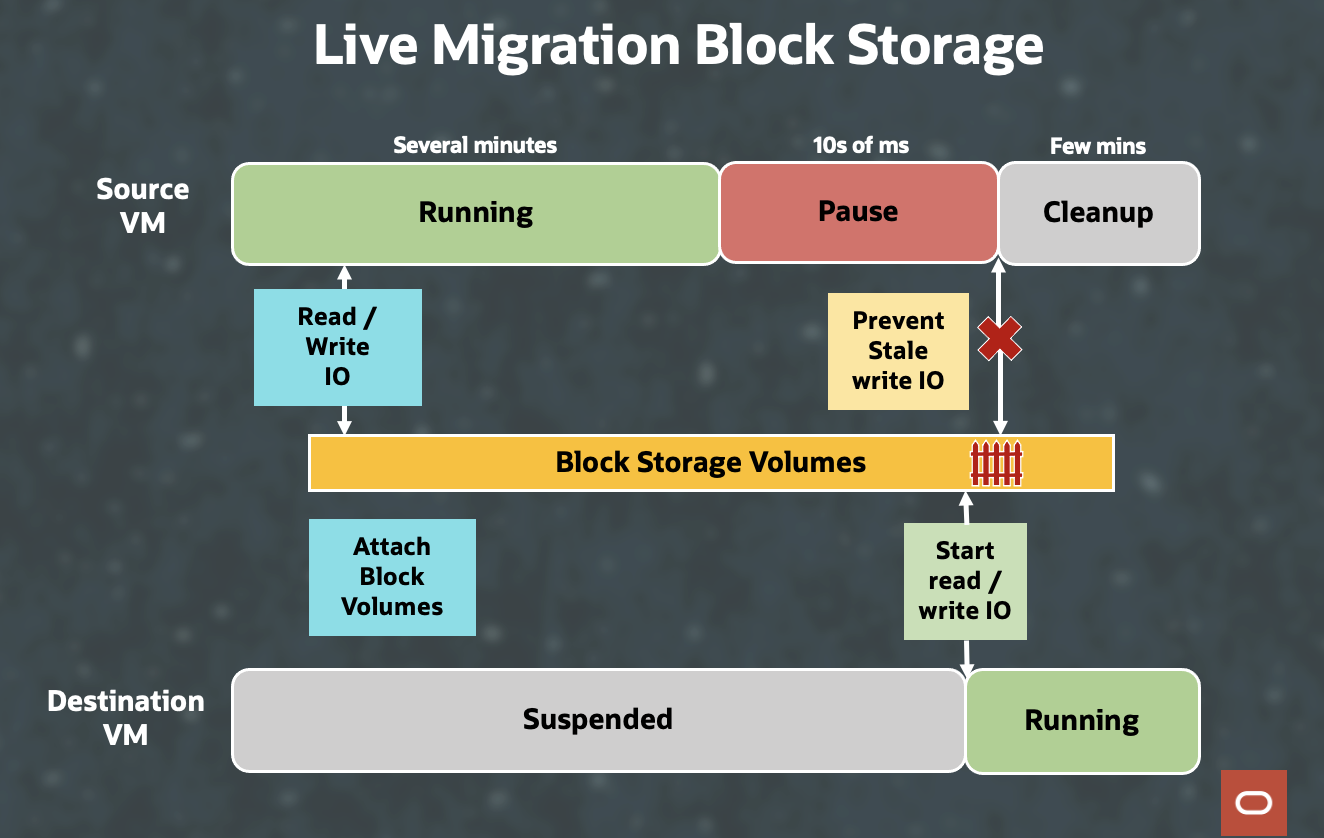

Persistent devices, namely block storage and network, are attached to the destination while the source VM is still running. For block storage migration, remote block storage devices are brought over by attaching them to the destination (that is, the destination VM connects to them and begins using them once it is started). For a period, block devices are attached to both the source and destination VMs. When the destination VM starts, stale write I/Os from the source that were already in flight before the source VM was paused may arrive after write I/Os from the destination. This race condition can cause data corruption. To avoid it, we implement a fencing mechanism: once the block volume accepts a write I/O from the destination VM, any delayed write I/Os from the source VM are dropped.

Figure 3: Block storage migration
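The fencing idea can be sketched in a few lines of Python. This is only an illustration under assumptions: the `FencedVolume` class, the per-attachment epoch number, and the `attach`/`write` methods are hypothetical and do not describe the actual OCI block volume protocol.

```python
class FencedVolume:
    """Toy block volume that drops writes from a superseded attachment."""

    def __init__(self):
        self._next_epoch = 1
        self._fence = 0          # highest epoch that has written so far
        self.blocks = {}         # logical block address -> data

    def attach(self):
        """Return an epoch token; later attachments get larger epochs."""
        epoch = self._next_epoch
        self._next_epoch += 1
        return epoch

    def write(self, epoch, lba, data):
        """Apply the write unless a newer attachment has already written."""
        if epoch < self._fence:
            return False         # stale writer: drop the I/O
        self._fence = epoch      # first write from a newer epoch raises the fence
        self.blocks[lba] = data
        return True


if __name__ == "__main__":
    volume = FencedVolume()
    source = volume.attach()        # source VM attachment
    destination = volume.attach()   # destination attaches while source still runs
    volume.write(source, 0, b"old")         # accepted: no newer writer yet
    volume.write(destination, 0, b"new")    # accepted: raises the fence
    volume.write(source, 0, b"stale")       # dropped: source is now fenced
    print(volume.blocks[0])
```

Both attachments coexist until the destination issues its first write, which matches the window described above in which the volume is attached to both VMs.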

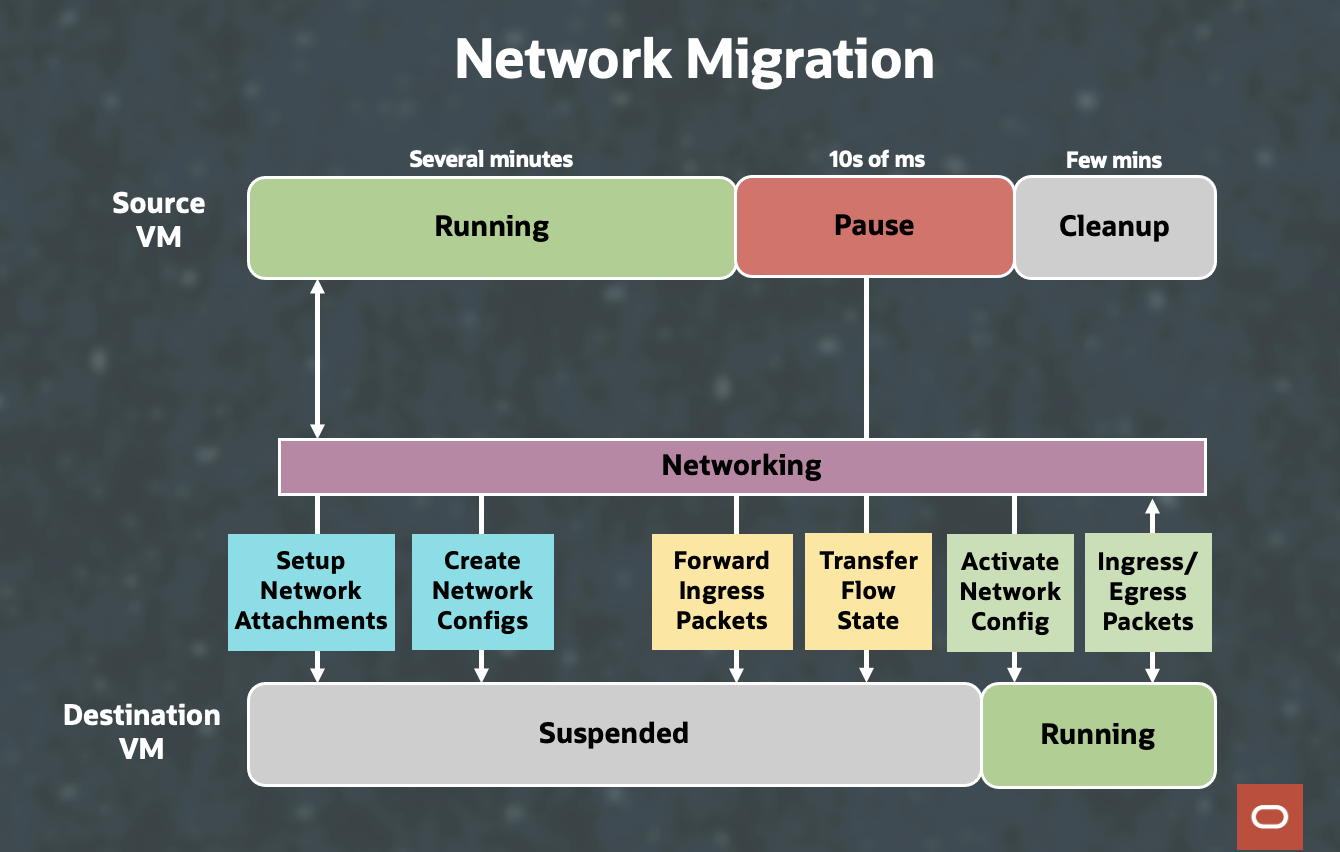

Network migration works differently for paravirtualized devices and SR-IOV passthrough devices. Migrating paravirtualized devices is simpler because the hypervisor can extract and restore their state. Migrating passthrough devices is more challenging because the hypervisor is not in the data path and therefore cannot capture the network state on its own.

OCI uses a new standard known as vhost data path acceleration (vDPA). It splits the data path from the control path: data still passes through directly to the device, while a virtio interface is exposed to the VM. As a result, the hypervisor and the NIC can cooperate to extract and restore network state during live migration.

Figure 4: Network migration

In addition to the interface’s device state on the host, the flow state maintained at the network virtualization layer must also be carried over to the destination. This includes the connection state created by the VCN’s security list functionality to enforce stateful firewall rules. This state is transferred so that established network connections aren’t dropped after migration. To minimize the chance of dropped packets, OCI VCN sends ingress traffic to both the source and the destination VM during the migration process.
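The following Python sketch shows why connection state has to travel with the VM. It is a simplified model, not the VCN implementation: the `Flow` and `ConnTracker` names, the 5-tuple layout, and the example addresses are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    proto: str = "tcp"

    def reversed(self):
        """Return the flow as seen in the opposite direction."""
        return Flow(self.dst_ip, self.dst_port, self.src_ip, self.src_port, self.proto)


class ConnTracker:
    """Toy stateful firewall: replies to tracked egress flows are allowed."""

    def __init__(self, open_ports=()):
        self.open_ports = set(open_ports)   # stateless ingress rules
        self.established = set()            # tracked egress flows

    def record_egress(self, flow):
        self.established.add(flow)

    def permits_ingress(self, packet):
        return (packet.dst_port in self.open_ports
                or packet.reversed() in self.established)

    def export_state(self):
        return frozenset(self.established)

    def import_state(self, flows):
        self.established.update(flows)


if __name__ == "__main__":
    outbound = Flow("10.0.0.5", 40000, "203.0.113.9", 443)
    reply = outbound.reversed()

    source, destination = ConnTracker(), ConnTracker()
    source.record_egress(outbound)

    print(destination.permits_ingress(reply))   # before state transfer
    destination.import_state(source.export_state())
    print(destination.permits_ingress(reply))   # after state transfer
```

Without the `import_state` step, the destination would treat the reply as unsolicited traffic and drop it, which is the failure that transferring flow state prevents.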

OCI transfers migration data over a dedicated migration virtual network interface. Every node reserves network bandwidth exclusively for live migration traffic, so that migrations finish quickly and customers are protected from ‘noisy neighbor’ issues that migration traffic might otherwise cause.

End-to-end migration

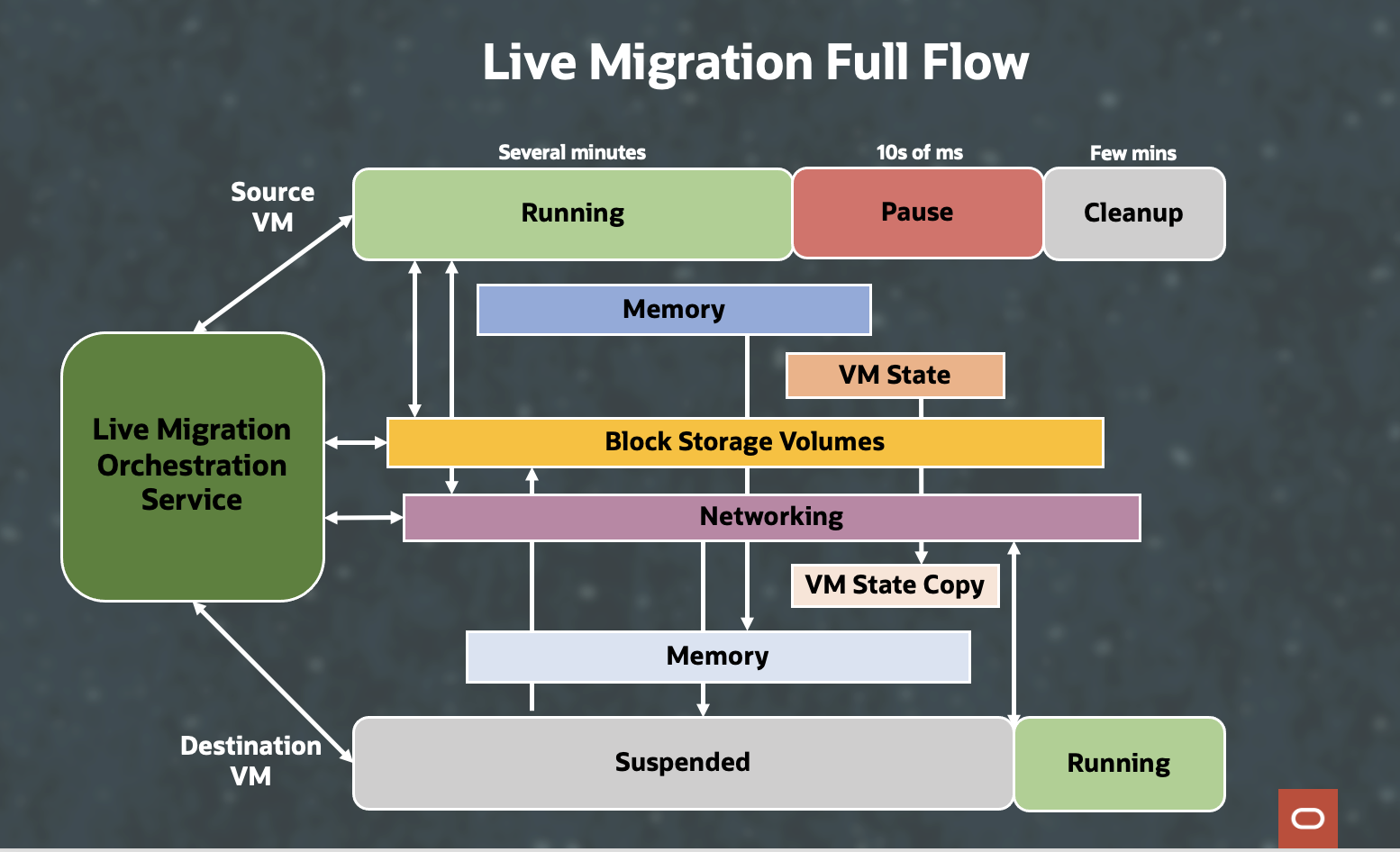

Figure 5: End-to-end migration

Live migration consists of multiple stages that must be carefully coordinated. The OCI VM Live Migration Orchestration (LMO) service drives these stages, such as creating the suspended destination VM with the correct configuration and attaching block volumes and virtual network interfaces.
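One common way to coordinate stages like these is to pair each step with an undo action and unwind completed steps in reverse order on failure. The Python sketch below illustrates that pattern only; the `run_migration` function, the step names, and the log format are hypothetical and are not the LMO service's actual design.

```python
def run_migration(steps, log):
    """Run (name, do, undo) steps in order; on failure, undo completed steps.

    Returns True if every step succeeded, False if the migration was rolled back.
    """
    completed = []
    for name, do, undo in steps:
        try:
            do()
        except Exception as exc:
            log.append(f"{name} failed: {exc}")
            # Unwind in reverse so the source VM is left as it was.
            for done_name, done_undo in reversed(completed):
                done_undo()
                log.append(f"rolled back {done_name}")
            return False
        completed.append((name, undo))
        log.append(f"{name} ok")
    return True


if __name__ == "__main__":
    def noop():
        pass

    def failing_attach():
        raise RuntimeError("destination NIC unavailable")

    log = []
    steps = [
        ("create_suspended_destination_vm", noop, noop),
        ("attach_block_volumes", noop, noop),
        ("attach_virtual_network_interfaces", failing_attach, noop),
    ]
    run_migration(steps, log)
    for line in log:
        print(line)
```

Keeping the undo action next to each step makes it harder to add a stage without also deciding how to back it out.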

Safeguards

Live migration is tricky: a number of complex operations run in parallel, and each one can put availability at risk. OCI implements several safeguards to ensure that a VM’s availability is not affected during migration. We use pre-copy so that a copy of the VM’s memory is sent to the destination ahead of time, as opposed to copying it on the fly. If LMO detects any issue, it can cancel the live migration without the VM noticing. If the migration does not converge within a certain amount of time (for instance, because pages take too long to copy), the process is aborted. It is critical that all of this works in practice. For that reason, a pre-production fault injection system injects faults at every stage of the process during development to ensure that migration is robust.
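A fault injection sweep of this kind can be sketched as a loop that fails each stage in turn and checks an availability invariant afterward. The Python below is a toy harness under assumptions: the stage names, the `migrate` and `sweep` functions, and the dictionary used to model VM state are hypothetical, not OCI's test system.

```python
STAGES = [
    "create_destination_vm",
    "attach_block_volumes",
    "attach_network_interfaces",
    "precopy_memory",
    "switch_over",
]


class InjectedFault(Exception):
    """Raised by the harness to simulate a failure at a chosen stage."""


def migrate(fault_at=None):
    """Toy migration: the source keeps running unless every stage succeeds."""
    vm = {"source_running": True, "destination_running": False}
    try:
        for stage in STAGES:
            if stage == fault_at:
                raise InjectedFault(stage)
        # Only after all stages succeed does execution move to the destination.
        vm["source_running"] = False
        vm["destination_running"] = True
    except InjectedFault:
        pass  # migration cancelled; the source VM was never touched
    return vm


def sweep():
    """Inject a fault at every stage; return the stages that broke availability."""
    broken = []
    for stage in STAGES:
        vm = migrate(fault_at=stage)
        if not vm["source_running"] or vm["destination_running"]:
            broken.append(stage)
    return broken


if __name__ == "__main__":
    print(sweep())
```

The invariant checked here is that after any injected fault the VM is still running on the source and only on the source.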

Conclusion

OCI’s live migration is designed to keep the pause time to tens of milliseconds, so that the running VM does not realize it has been migrated to another physical server.

To achieve successful live migration, OCI prioritizes pre-copy of memory and establishes network and storage attachments while the source VM is still running. Using network bandwidth dedicated to live migration, it completes the vast majority of the state copy without any interruption to the VM.

To prevent any persistent state corruption, the live migration process implements fencing on the block storage to ensure that no accidental writes of old data to the block volumes occur. The network state is also migrated to the destination hypervisor. To coordinate and manage the end-to-end live migration process, we use Live Migration Orchestration to drive the different phases of migration and ensure that the workflow for the migration is recoverable.

All these optimizations built into OCI’s compute make services running on OCI highly available during maintenance events and save customers time and money.

Oracle Cloud Infrastructure Engineering handles the most demanding workloads for enterprise customers that have pushed us to think differently about designing our cloud platform. We have more of these engineering deep dives as part of the First Principles series, hosted by Pradeep Vincent and other experienced engineers at Oracle.

For more information, see the following resources: