Co-authored by David Carne.

In line with the Oracle Cloud Infrastructure (OCI) core value of “Put customers first,” we want to help enterprise customers securely run their mission-critical applications in OCI, without requiring them to be security subject matter experts (SMEs). We intend to bake security features into OCI services and the underlying infrastructure. We focus on solving hard security problems on behalf of our customers, so security becomes simple and prescriptive for them to use.

Cloud security comprises a (very) long list of things to secure. So, how do we think about security in OCI? An iceberg is a good metaphor, where the customer-visible part of OCI security is a small part of the much larger whole. The customer-visible part comprises OCI security features, such as the console, cloud services, and APIs available for securing customer applications and data on the cloud. The much bigger part, under the surface, comprises a multitude of security mechanisms that we deploy, including the following points:

- Securing the globally scaled-out OCI infrastructure, including data center physical security and hardware supply chain security

- Securing the continuous integration and delivery (CI/CD) pipeline that deploys code to production

- Security architecture patterns for distributed systems that make up OCI services

- Security operations and incident response

- Least-privilege operator access

Our job is to take care of the whole of OCI security, and we prioritize foundational security investments that raise the security bar for the whole.

Security isolation and blast radius reduction are key attributes for scaling security in a global cloud infrastructure. Using in-house Oracle hardware security and hardware engineering teams, we’ve designed and built hardware security components to provide greater security to customer applications and OCI cloud services. These components are integrated into all servers in the OCI cloud, and transparently raise the security for all OCI customers, without customers needing to do anything. In this blog post, we present details of two foundational security features that we’ve baked into the OCI infrastructure.

Background: First-generation cloud architecture

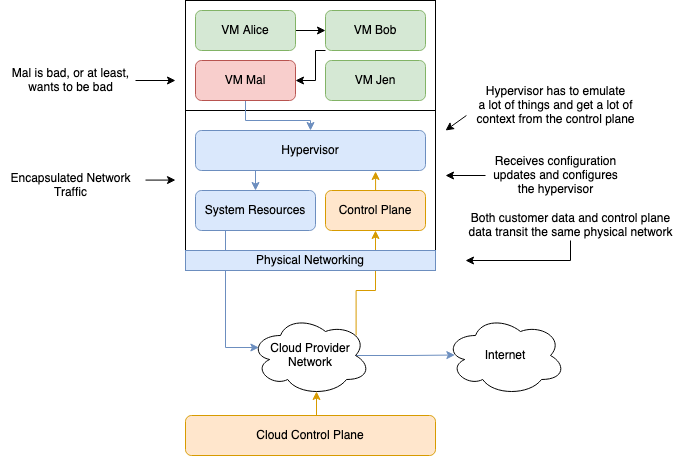

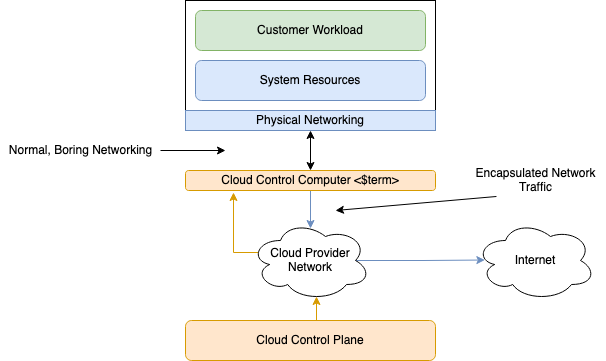

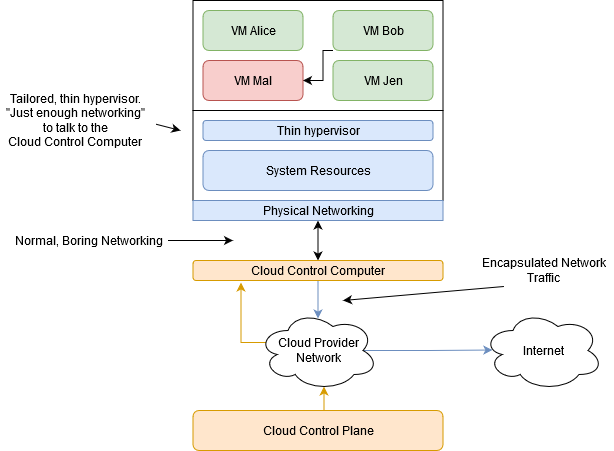

First-generation clouds were built to provide virtual machines (VMs) that could be networked within a software-defined Layer 3 (L3) network, where the hypervisor plays a key role in implementing multi-tenancy security isolation. The hypervisor also implements privileged cloud operations by interacting with the cloud control plane, which orchestrates the operations necessary to implement customer commands. Examples of privileged cloud operations in the hypervisor include virtual networking, such as packet encapsulation, decapsulation, and routing, and block storage processing. Encapsulation and decapsulation of network packets are necessary to provide virtual network semantics over a physical data center network, a key aspect of implementing a software-defined virtual network. The following figure shows the various components of this architecture from first-generation clouds.

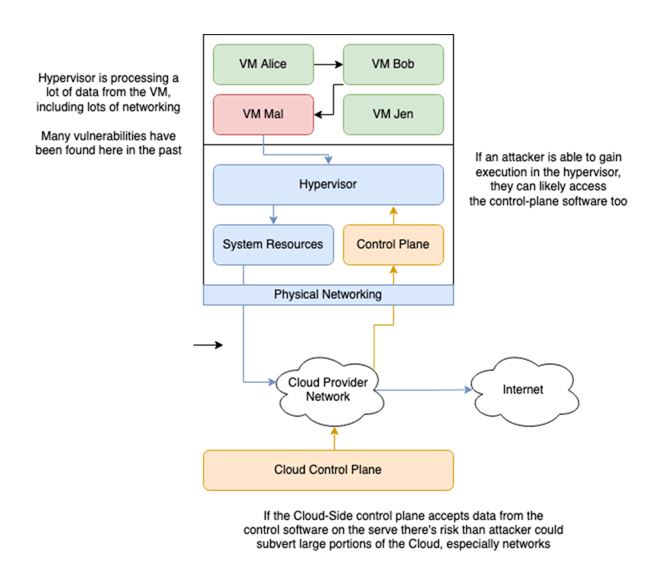

In first-generation clouds, the critically important hypervisor and the control plane component share the server, processor, and storage resources with the untrusted customer VMs. This configuration has a security downside: a hypervisor security vulnerability that a malicious customer VM can exploit has a potentially large blast radius. As shown in the following figure, a malicious VM (VM Mal) could exploit this vulnerability to break multi-tenancy security to access other VMs (VM Alice, VM Bob, and VM Jen) on the same server, or access the cloud control plane to pivot to other customer resources. These security issues keep cloud security architects awake at night.

Second-generation cloud: Oracle Cloud Infrastructure

Oracle Cloud Infrastructure (OCI) was built from the ground up with a second-generation cloud architecture, designed to be resistant to many of the security issues inherent to first-generation clouds. OCI was designed as a bare metal cloud architecture, with the ability to programmatically provision bare metal instances within minutes through the console and APIs. In an OCI bare metal instance, the customer has complete control and single-tenant access to the physical server. Only customer-controlled code runs on the bare metal instance and no Oracle-managed code runs on it.

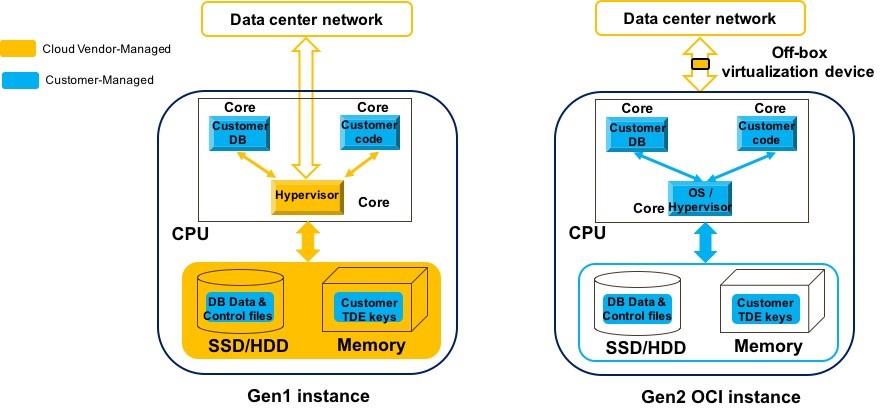

This configuration is illustrated in the following figure, where an OCI bare metal instance is shown on the right and a typical first-generation cloud instance on the left. The customer-managed resources and data are blue, while the cloud provider-managed resources are yellow. In contrast to the Gen 1 instance, which has many provider-managed components in yellow running within the BM instance itself, the Gen 2 OCI instance has none. We describe the engineering innovations that made this happen.

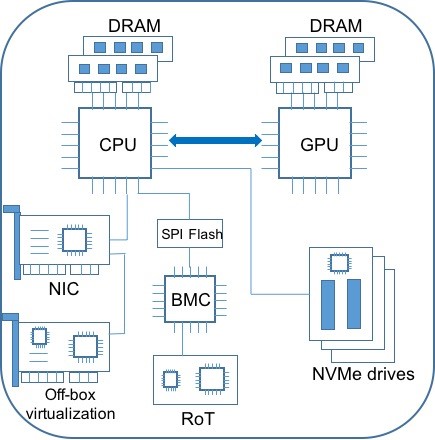

Oracle is an original design manufacturer (ODM) with an in-house hardware development group that designs custom motherboards for OCI servers and develops firmware running on these motherboards, such as BIOS and BMC. We also have a dedicated hardware security group working with the hardware group to build security hardware. To help securely enable the OCI bare metal architecture, these Oracle teams built the following security components incorporated into all OCI servers:

- Root of trust (RoT) hardware: With complete access to the physical server, customers can reconfigure hardware peripherals or modify any firmware to support their workloads. However, this capability introduces a security risk if rogue customers leave persistent firmware malware on the server, such as UEFI BIOS malware and NVMe drive malware. OCI RoT negates this security risk by installing known-good images of all firmware on an OCI server when provisioning between customers.

- Off-box virtualization hardware: The privileged OCI control-plane code runs on dedicated hardware, referred to as off-box virtualization, separate and segregated from the server processor running untrusted customer applications. This component is shown as a yellow box in the Gen 2 OCI instance in the previous figure. The hypervisor is stripped down to basic functionality, such as launching VMs and allocating memory, while all the privileged cloud control plane code is offloaded to the off-box virtualization hardware. This configuration has two security benefits: It reduces the attack surface of the hypervisor, and a hypervisor security issue has a more limited blast radius and doesn’t impact cloud control-plane operations. The off-box virtualization hardware has previously also been referred to as the OCI Cloud Control Computer.

OCI hardware root of trust

In a bare metal cloud architecture, security is a big concern. With unfettered access to the underlying hardware and firmware, customers have immense flexibility to reconfigure hardware or modify firmware. On the flip side, rogue customers could abuse this access to implant a malicious payload into the hardware or firmware of the server and compromise subsequent customers using the same instance. In January 2019, the security research firm Eclypsium demonstrated this issue in a non-OCI cloud offering. In their demonstration, they inserted a firmware implant into the baseboard management controller (BMC) firmware of a bare metal host, returned the bare metal host to the cloud provider, and received it back as a new host with their implant intact. The OCI RoT was built to defend against such threat vectors, and not surprisingly, the Eclypsium issue did not impact OCI.

Multiple storage chips maintain the state on both x86 and Arm servers. Examples include fused ROMs, DRAM, SPI flash for BIOS and BMC firmware, EPROMs on PCIe peripherals such as NICs, and flash memory in NVMe drives. Chips are classified in the following categories:

- Guaranteed immutable: Firmware fused in read-only memory (ROM) and fuses that cannot be physically altered. Oracle’s hardware group audits all third-party hardware that we purchase. We verify the immutability assertions made during manufacture to ensure that an attacker has no opportunity to change or persist in this memory. These components are not susceptible to firmware malware.

- Guaranteed ephemeral: DRAM and processor registers. Data isn’t persistent in these chips. The stored state disappears when the server is powered down. In Oracle servers, we design the power planes to reliably turn off power to server components, independent of the state of software or firmware onboard. To address security threats posed by DRAM-resident malware, the OCI control plane power cycles the servers before assigning them to customers.

- Persistent and mutable: Flash and PROMs, which store firmware, such as BIOS, BMC code, NIC firmware, and GPU firmware in a bare metal server. The customer can modify or update the firmware stored in these storage chips, where the biggest security risk lies. In Oracle, we made security investments to address this risk.
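The three categories above can be read as a simple decision on two properties of a chip: whether it keeps state without power, and whether that state can be altered. The following is a minimal sketch of that reading. The `Chip` fields, the `classify` function, and the handling strings are illustrative names chosen for this example and are not part of any OCI tooling.

```python
from dataclasses import dataclass
from enum import Enum


class StorageClass(Enum):
    IMMUTABLE = "guaranteed immutable"
    EPHEMERAL = "guaranteed ephemeral"
    PERSISTENT_MUTABLE = "persistent and mutable"


@dataclass(frozen=True)
class Chip:
    """Hypothetical description of a storage chip on a server."""
    name: str
    persistent: bool  # keeps its state when power is removed
    writable: bool    # contents can be altered after manufacture


def classify(chip: Chip) -> StorageClass:
    """Map a chip to one of the three categories described in the text."""
    if not chip.persistent:
        return StorageClass.EPHEMERAL
    if not chip.writable:
        return StorageClass.IMMUTABLE
    return StorageClass.PERSISTENT_MUTABLE


# Mitigation per category, paraphrasing the list above.
HANDLING = {
    StorageClass.IMMUTABLE: "audit the vendor's immutability assertions",
    StorageClass.EPHEMERAL: "power cycle the server before assignment",
    StorageClass.PERSISTENT_MUTABLE: "wipe and reprogram known-good firmware",
}
```

For example, `HANDLING[classify(Chip("BIOS SPI flash", persistent=True, writable=True))]` selects the wipe-and-reprogram handling, which is the case the rest of this section addresses.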

Securing persistent and mutable components

To help secure the persistent and mutable components, we could have implemented the following types of security mechanisms:

- Apply existing commodity technologies, such as measured boot like Intel TXT or secure boot to cryptographically verify firmware and establish trust. These approaches are primarily based on UEFI BIOS routines, which have high complexity. Also, many UEFI BIOS security issues have been disclosed in the past few years. Although easier to implement, this option would have placed a higher operational burden on our customers to provide the desired level of security.

- Prevent the customer’s ability to modify firmware through server modifications. OCI customers have asked for flexibility to reconfigure hardware peripherals or update firmware to versions that have been qualified to run their mission-critical applications. Examples include SRIOV settings on network cards to improve custom virtualization workloads and customer-run hypervisors and NVMe storage device features, including customer-configured drive encryption. This option was not aligned with the requirements of OCI’s customers.

- Build a robust and inviolable mechanism to programmatically wipe and reinitialize persistent and mutable state to known-good firmware images. This option also allows us to update firmware to the latest versions, whether to patch security bugs or address operational issues, in all servers across the OCI infrastructure with less effort. For security and operational reasons, we chose this option, and invested in engineering effort to implement it.

Root of Trust (RoT) hardware establishes firmware integrity in all OCI servers. It uses hardware mechanisms to electrically program known-good firmware images in mutable memory chips. It powers on before other server components and initiates the firmware wipe and reinitialization in security-critical server components. The custom hardware is designed, built, and programmed by Oracle and present in all OCI servers. The RoT hardware isn’t visible to or accessible from customer applications or the hypervisor and can only be accessed in an authenticated manner from the OCI control-plane. The following figure shows an OCI bare metal instance with the RoT hardware:

After the server powers on, the RoT is one of the first components to start running. The trusted OCI-programmed firmware on the RoT initiates the following actions:

- The RoT accesses and verifies the known-good image of the BMC or iLOM firmware, obtained from the OCI control-plane. Next, it uses electrical signals on BMC pins to wipe local persistent storage and programs the verified BMC firmware image. Through logic analyzer traces, we have verified some of the security-critical commands, such as “chip erase,” sent by RoT to the server components. The wipe and initialization occur without any dependency on existing BMC firmware and are immune to any malware. Then, the BMC boots to the newly programmed known-good firmware.

- The BMC has direct electrical access to the SPI flash storing the UEFI BIOS and can access this flash without participation from the existing BIOS image. The trusted BMC firmware wipes the SPI flash and programs the known-good BIOS image obtained from the RoT.

- The server processor boots up with the known-good BIOS image. Next, the persistent storage in various server components is wiped clean and known-good firmware images are installed. We accomplish this task across hardware components, such as the network interface cards (NIC), NVMe drives, and the GPU. We use a combination of RoT routines, BIOS routines, and vendor-specific utilities to do this. For example, sometimes the RoT routines use electrical signals to force a hardware component into a bootstrap mode, where trusted boot-code is run from BootROM, performs the wipe, and installs the verified firmware images.

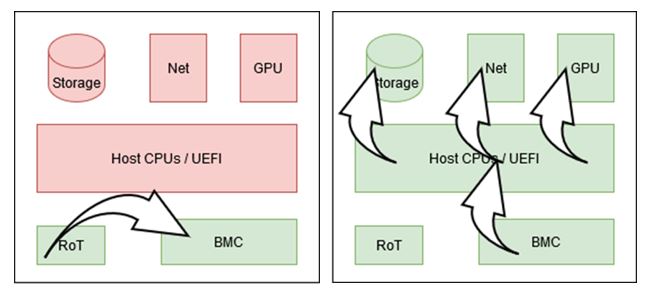

The following figure shows the firmware initialization sequence. At the beginning of the sequence, no components are trusted, shown in red, except the RoT, shown in green. As the initialization continues, the RoT bootstraps the trusted state into the server components. After the host is in a known safe and clean state, our Root of Trust card electrically hardens the server by asserting physical signals that disconnect, lock down, or otherwise restrict our server hardware to operate in a safe mode. If the wipe process fails or reports errors on a server, tickets are cut for manual investigation by the security team, and the server is not assigned to customers until the investigation is completed.
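The ordering and failure handling described above can be sketched in software terms. This is only a simplified model under stated assumptions: the real mechanism is electrical and hardware-driven, and the post does not say how images are verified. Here, verification is assumed to be a SHA-256 digest comparison against a manifest, and `Component`, `Server`, `reprogram`, `initialize`, and the `ORDER` list are hypothetical names.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List


def sha256(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


@dataclass
class Component:
    name: str
    flash: bytes = b""     # current contents, untrusted until reprogrammed
    trusted: bool = False


@dataclass
class Server:
    components: Dict[str, Component]
    assignable: bool = False
    tickets: List[str] = field(default_factory=list)


def reprogram(component: Component, image: bytes, expected_digest: str) -> None:
    """Verify the image, then wipe and program it without consulting old flash."""
    if sha256(image) != expected_digest:
        raise ValueError(f"image for {component.name} failed verification")
    component.flash = b""      # wipe, regardless of what was there before
    component.flash = image    # program the verified image
    component.trusted = True


# Assumed order, following the text: BMC first, then BIOS, then peripherals.
ORDER = ["BMC", "BIOS", "NIC", "NVMe", "GPU"]


def initialize(server: Server, images: Dict[str, bytes], manifest: Dict[str, str]) -> bool:
    """Bootstrap trust component by component; hold the server back on any error."""
    try:
        for name in ORDER:
            reprogram(server.components[name], images[name], manifest[name])
    except (KeyError, ValueError) as err:
        server.tickets.append(f"wipe failed: {err}")  # manual investigation
        server.assignable = False
        return False
    server.assignable = True
    return True
```

The design point the sketch tries to capture is that no step reads or trusts the existing firmware, and that a failure at any stage leaves the server unassignable rather than partially trusted.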

OCI’s wipe mechanism also protects customer data by wiping the host at the end of its lifecycle. The contents of local block volumes are securely erased using NIST-recommended methods.

OCI cloud control computer

The OCI cloud control computer comprises custom-designed hardware that’s directly connected to the OCI server that runs customer applications. All network traffic that customer applications send or receive through the server’s NIC flows through the cloud control computer, which runs OCI control plane code. The cloud control computer is invisible to customers and isn’t accessible from customer applications because of server hardware configurations. As a result, customers don’t see this extra hop in their network path.

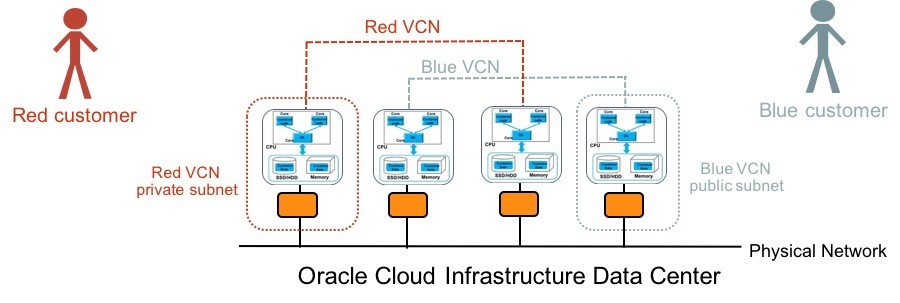

The cloud control computer provides a virtualized network interface card (VNIC), which is attached to customer bare metal or VM instances. The VNICs are grouped into subnets within the customer’s software-defined network, referred to as an OCI virtual cloud network (VCN). The cloud control computer maintains the state of all VNICs in a customer’s VCN and the network rules necessary to route packets between these VNICs.

Each customer VCN is mapped to its own network namespace, providing network isolation for traffic from different VCNs. To implement the separate network namespace, the cloud control computer transparently encapsulates network packets from customer instances with unique namespace identifiers, before sending the traffic over the physical network. Based on the destination IP address, the cloud control computer determines whether a packet is sent to another VNIC in the customer’s VCN, an OCI service exposed through the Oracle Service Network (OSN), or the internet.
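To make the two ideas in this paragraph concrete, the following minimal sketch tags each packet with a namespace identifier and picks a next hop from the destination address. The post does not describe the actual encapsulation format, so the 4-byte header, the function names, and all CIDR ranges used with it are assumptions made for illustration only.

```python
import ipaddress
import struct
from typing import List, Tuple

# Hypothetical header: a 4-byte big-endian namespace identifier per VCN.
HEADER = struct.Struct("!I")


def encapsulate(vcn_id: int, packet: bytes) -> bytes:
    """Prefix the customer packet with its VCN namespace identifier."""
    return HEADER.pack(vcn_id) + packet


def decapsulate(frame: bytes) -> Tuple[int, bytes]:
    """Recover the namespace identifier and the original packet."""
    (vcn_id,) = HEADER.unpack_from(frame)
    return vcn_id, frame[HEADER.size:]


def next_hop(dst: str, vcn_cidr: str, osn_cidrs: List[str]) -> str:
    """Choose a destination class from the destination IP address."""
    addr = ipaddress.ip_address(dst)
    if addr in ipaddress.ip_network(vcn_cidr):
        return "vnic"      # another VNIC in the customer's VCN
    if any(addr in ipaddress.ip_network(cidr) for cidr in osn_cidrs):
        return "osn"       # an OCI service on the Oracle Service Network
    return "internet"
```

Because the identifier travels with every frame on the physical network, two VCNs can use overlapping private address ranges and their traffic still stays in separate namespaces.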

The previous figure shows robust, hardware-enforced network security isolation, provided by the cloud control computer. We have two customers, red and blue, with their own red and blue VCNs. The cloud control computer is indicated by the yellow boxes connected to instances in red and blue VCNs. In terms of security, the cloud control computer does the following jobs:

- Introspects all packets from instances in red and blue VCNs and only allows legitimate traffic into each VCN. If the blue customer tries to spoof a source IP address to reach instances in the red VCN, the cloud control computer detects the spoofing and drops the packets.

- Implements customer-provided inbound and outbound network security ACLs for their VCNs. Any security issue with the customer instances doesn’t impact the ACLs implemented by the cloud control computer.

- Provides robust isolation between the customer VCNs and OCI control-plane network by allowing only white-listed traffic to access the control-plane network.
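The first two checks in the list above can be illustrated with a small admission function. This is a sketch under simplifying assumptions, not a description of OCI’s packet pipeline: `Vnic`, `Rule`, and `admit` are hypothetical names, each VNIC is assumed to have a single bound address, and ACL rules are reduced to a source CIDR and a destination port.

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Vnic:
    vcn: str   # VCN the VNIC belongs to, for example "red" or "blue"
    ip: str    # address bound to the VNIC by the control plane


@dataclass(frozen=True)
class Rule:
    src_cidr: str
    dst_port: int


def admit(sender: Vnic, claimed_src: str, dst: Vnic, dst_port: int,
          ingress_rules: Iterable[Rule]) -> bool:
    """Decide outside the customer instance whether a packet is delivered."""
    # Anti-spoofing: the source must be the address bound to the sending VNIC.
    if claimed_src != sender.ip:
        return False
    # Isolation: traffic never crosses from one customer's VCN into another's.
    if sender.vcn != dst.vcn:
        return False
    # Customer-provided ACL: at least one ingress rule must match.
    src = ipaddress.ip_address(claimed_src)
    return any(
        src in ipaddress.ip_network(rule.src_cidr) and rule.dst_port == dst_port
        for rule in ingress_rules
    )
```

Because the decision runs on hardware separate from the customer instance, a compromised instance can change what it sends but not how the check is evaluated.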

With OCI software running on the OCI cloud control computer, the OCI hypervisor that provides virtual machines for customers requires much less privileged code to run within it. The cloud control computer uses hardware technologies, such as SRIOV, to route packets to customer VMs, and the hypervisor only manages these mappings. This architecture allows the hypervisor to be leaner with a smaller attack surface. When the hypervisor software needs updates for security, we can use Oracle’s Ksplice technology to perform in-place, zero-downtime upgrades, even to the kernel.

Putting it all together

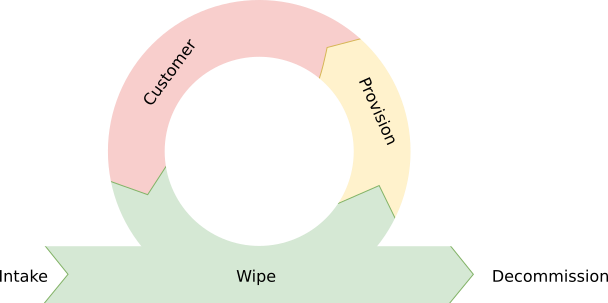

Let’s look at how all these pieces come together. When hardware arrives at an OCI data center, it goes through our comprehensive wipe process. This process ensures that the server is in a pristine state with all mutable storage erased and reinitialized. After we confirm that the hardware is cleaned and ready to go, our systems then allocate capacity either for our customers or our own internal use. Importantly, this process includes reinitializing the cloud control computer that provides OCI’s network virtualization. The system is then locked down through hardware mechanisms and is ready for a customer to use it.

When a customer requests a host from our Console, OCI selects and allocates that host to the customer. Information about resources accessible to the host, such as network or storage virtualization, is provided to the cloud control computer. After the bare metal hardware and cloud control computer are configured and provisioned to match what the customer specified, the OCI control plane instructs the host to boot and begins running the customer workload.

While the customer has the system, the cloud control computer provides the resources the customer expects, and simultaneously protects the customer and OCI from attackers or rogue users of our systems. After the customer is done with a system, we immediately run the wipe process and firmware initialization using the RoT hardware. This helps ensure that customer data is removed from the system and inaccessible even to OCI. The system is then ready for the next customer.

When hardware goes bad, it’s decommissioned. As part of this decommissioning, hardware with customer data, such as drives, is mechanically destroyed within the OCI data center. All the destroyed hardware is tracked through tickets, and the process is reviewed.
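The lifecycle described in this section can be summarized as a small state machine. The following sketch uses hypothetical state and event names, and it assumes that a server cleared after a failed wipe is wiped again before reuse, which the post does not state explicitly.

```python
from enum import Enum, auto


class State(Enum):
    RECEIVED = auto()
    WIPING = auto()
    READY = auto()
    IN_USE = auto()
    QUARANTINED = auto()
    DECOMMISSIONED = auto()


# Allowed transitions; every path into READY goes through WIPING.
TRANSITIONS = {
    (State.RECEIVED, "wipe"): State.WIPING,
    (State.WIPING, "wipe_ok"): State.READY,
    (State.WIPING, "wipe_failed"): State.QUARANTINED,
    (State.READY, "allocate"): State.IN_USE,
    (State.IN_USE, "release"): State.WIPING,
    (State.QUARANTINED, "cleared"): State.WIPING,  # assumption: rewipe after review
}


def step(state: State, event: str) -> State:
    """Advance the lifecycle, rejecting any transition not listed above."""
    if event == "hardware_fault" and state is not State.DECOMMISSIONED:
        return State.DECOMMISSIONED  # failed hardware leaves service
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"{event!r} not allowed in {state.name}") from None
```

The property worth noticing is structural: there is no transition from `IN_USE` or `QUARANTINED` to `READY` that skips the wipe, so a server cannot reach the next customer without being reinitialized.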

Conclusion

Security is a top priority at OCI, and we continue to raise the bar on security for our customers. In this blog post, we presented details of two hardware-based security investments we made and the security benefits they enable for all OCI customers. We hear feedback from our enterprise customers on their needs, and we continue to invest in OCI’s security mechanisms to make security both simple and prescriptive for our customers.
