This blog was written in collaboration with Bhakti Hinduja, senior principal application engineer at Ampere.

Many cloud workflows today use scalable search engines. Elasticsearch is a popular and efficient search engine used in a scale-out configuration. Oracle Cloud Infrastructure (OCI) A1 shapes powered by Arm-based Ampere Altra processors are designed to deliver exceptional performance and energy efficiency for cloud native applications like Elasticsearch. In our testing, OCI A1 shapes demonstrated compelling performance and outstanding energy efficiency when tested against comparable x86 processors on the market.

Elasticsearch is a distributed, open search and analytics engine for all types of data, including textual, numerical, geospatial, structured, and unstructured. It is developed by Elastic and built on Apache Lucene. Companies like Walmart, T-Mobile, Adobe, Cisco, and Facebook use Elasticsearch for use cases ranging from content search to live chat and e-commerce. Elastic provides a prebuilt aarch64 binary that you can download from its website.

How does Elasticsearch work? Raw data, such as geospatial data, logs, or web data, is ingested into Elasticsearch. The data is then formatted, enriched, and indexed for efficient retrieval. After the data is indexed, you can run complex queries against it to retrieve specific summaries. Given the volume of data generated today, optimized indexing and search are critical for extracting meaningful information.

In this blog, we test Elasticsearch on Ampere Altra-based OCI instances against Intel Ice Lake and AMD Milan instances.

Benefits of running Elasticsearch on OCI Ampere A1

OCI A1 shapes, powered by Arm-based Ampere Altra processors, are designed to deliver exceptional performance for cloud native applications like Elasticsearch. They use an innovative architectural design, operate at consistent frequencies, and use single-threaded cores that make applications more resistant to noisy neighbor issues. This setup allows workloads to run predictably with minimal variance under increasing loads.

OCI offers Ampere A1 compute at an attractive price point of $0.01 per core hour with flexible sizing from 1–80 OCPUs and 1–64 GB of memory per core. Ampere Altra processors offer the following benefits:

- Cloud native: Designed for cloud customers, Ampere Altra processors are ideal for cloud native usages, such as Elasticsearch.

- Scalable: With an innovative scale-out architecture, Ampere Altra processors have a high core count with compelling single-threaded performance, combined with consistent frequency for all cores delivering greater performance at socket level.

- Power efficient: Industry-leading energy efficiency allows Ampere Altra processors to hit competitive levels of raw performance, while consuming much lower power than the competition.

Benchmarking configuration

To benchmark Elasticsearch on OCI Ampere A1, we used Rally as the load generator. Rally provides several tracks, which are benchmarking scenarios built on different datasets, such as http_logs (HTTP server log data) and nyc_taxis (taxi rides in New York in 2015). Each track caters to a different application data type. After selecting the track that matches the data type, we choose a challenge, such as append-only or index and append.

We recommend using the latest Elasticsearch prebuilt by Elastic for aarch64. We used Oracle Linux 7.9 on OCI (kernel 5.4) with Elasticsearch 7.17 for our tests. For each of the tests, we used similar client machines as load generators for Elasticsearch. We also recommend using the latest Java development kit (JDK) compiled with GNU Compiler Collection (GCC) 10.2 or newer because newer compilers have made significant progress towards generating optimized code that can improve performance for aarch64 processors. For this test, we used JDK 17, built with GCC 10.2.

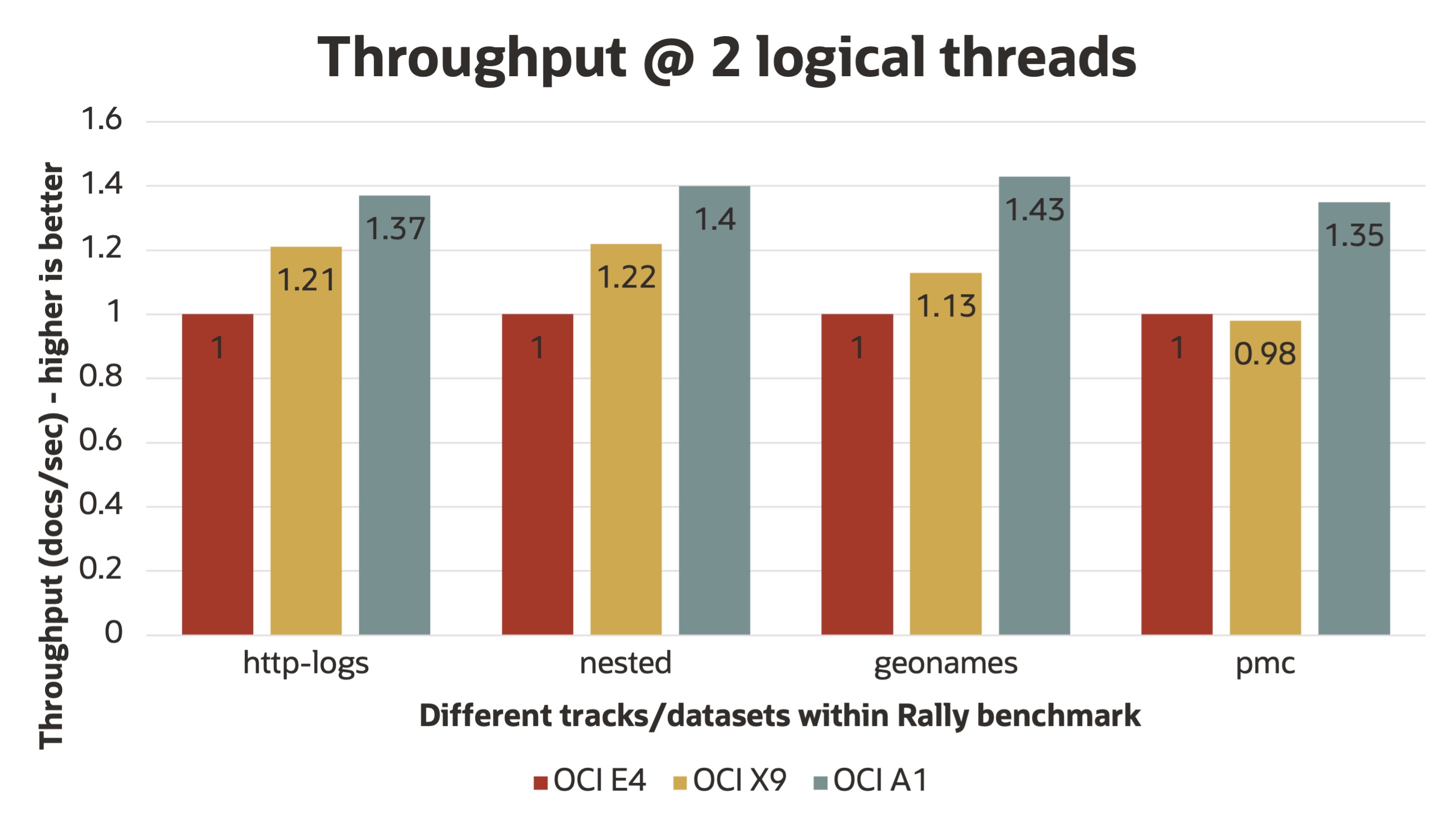

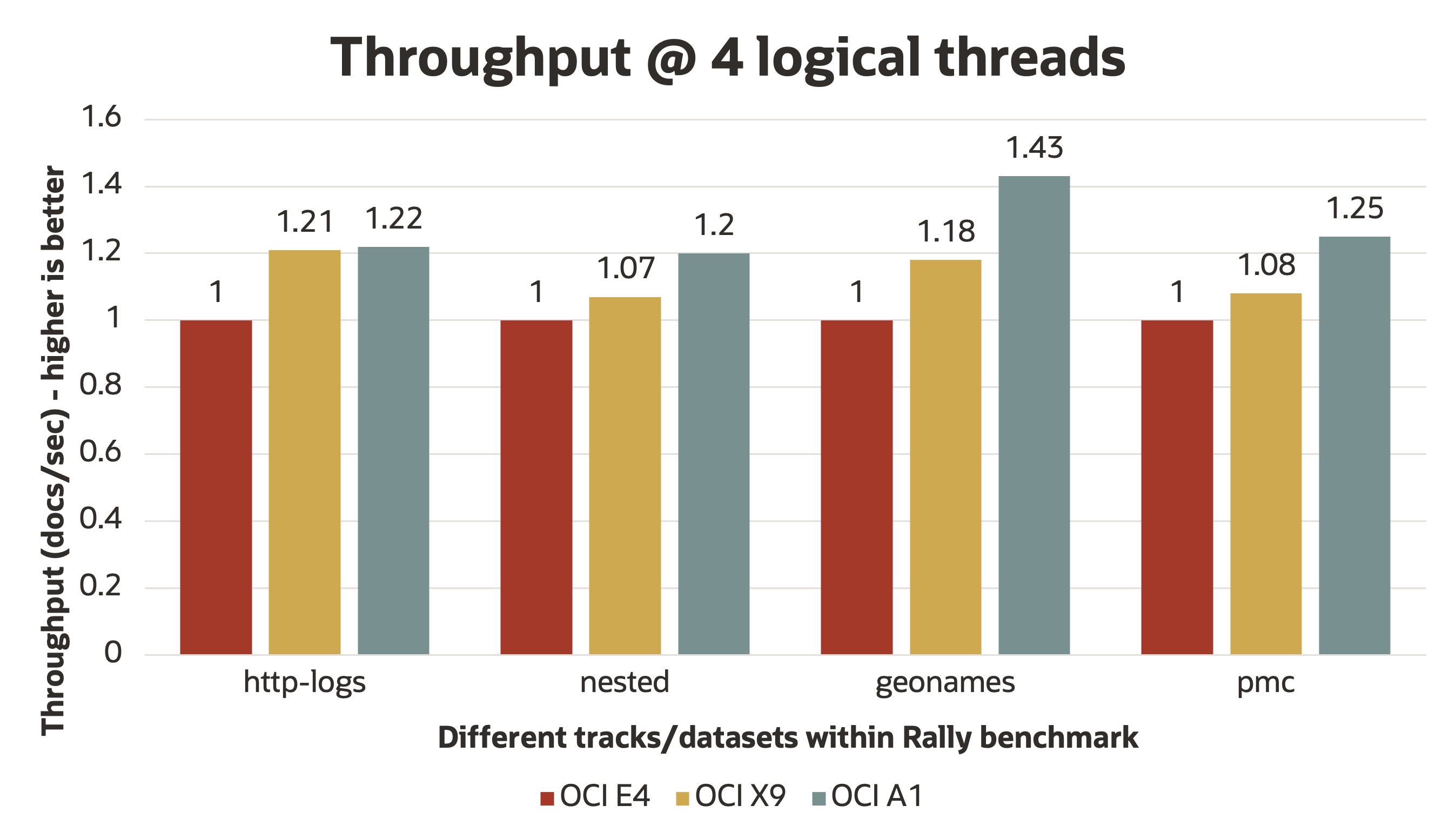

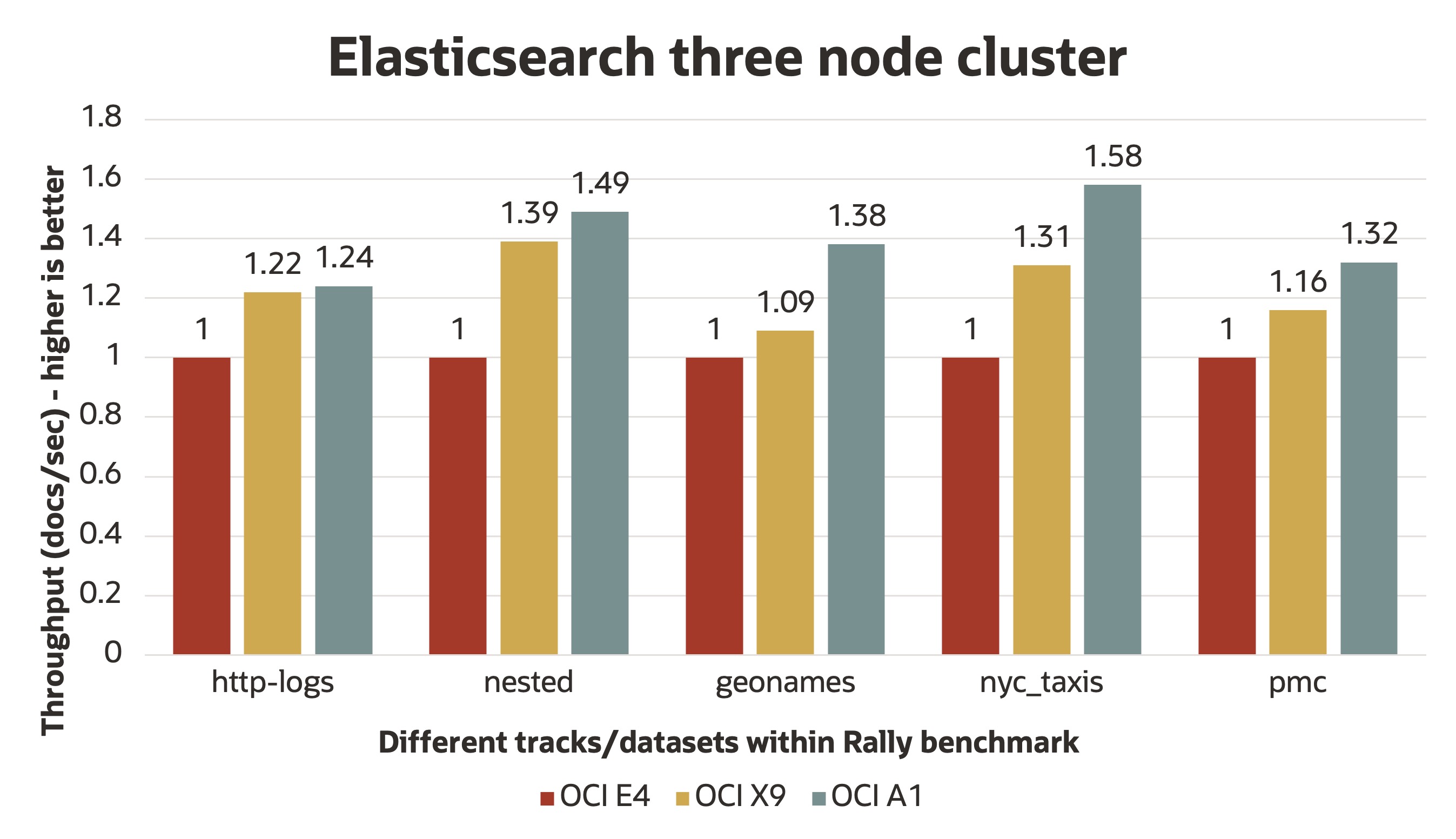

We ran the tests under two system setups. First, we compared a single-node Elasticsearch server on Ampere Altra (OCI VM.Standard.A1), AMD Milan (OCI VM.Standard.E4), and Intel Ice Lake (OCI VM.Standard3 based on X9 compute) virtual machines (VMs) on OCI. Second, we compared a three-node Elasticsearch cluster on Ampere Altra, AMD Milan, and Intel Ice Lake VMs on OCI. For the single-node tests, we used two VM sizes, one with two logical threads and one with four logical threads.

In the tests with two logical threads, the VM had 8 GB of RAM, of which 4 GB was allocated to the Java virtual machine (JVM). In the tests with four logical threads, the VM had 16 GB of RAM, of which 8 GB was allocated to the JVM. For all the tests, we used G1GC as the garbage collector and stored the data on a block volume rated at 75,000 IOPS.
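The memory split above, with half of the VM's RAM given to the JVM heap, could be expressed as JVM options along the following lines. This is a minimal sketch: `heap_opts` is a hypothetical helper, and we assume the heap minimum and maximum were set to the same value, which the original setup does not state.

```shell
#!/bin/sh
# heap_opts is a hypothetical helper, not part of Elasticsearch.
# It prints JVM options that give the heap half of the VM's RAM (in GB)
# and select the G1 garbage collector.
heap_opts() {
    ram_gb=$1
    heap_gb=$((ram_gb / 2))
    # Assumption: minimum and maximum heap are equal so the heap is not resized mid-run.
    printf '%s\n' "-Xms${heap_gb}g" "-Xmx${heap_gb}g" "-XX:+UseG1GC"
}

heap_opts 8    # two-thread VM: 8 GB of RAM, 4 GB heap
heap_opts 16   # four-thread VM: 16 GB of RAM, 8 GB heap
```

On Elasticsearch 7.17, options like these can be placed in a file ending in `.options` under the `config/jvm.options.d/` directory.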

The following code block shows our esrally test command line example:

    esrally race --track=pmc --target-hosts=<private ip of instance on OCI>:9200 --pipeline=benchmark-only --challenge=append-no-conflicts-index-only

We used the following tracks for stressing Elasticsearch. Each track has a different dataset or datatype that it supports.

- http_logs: Contains HTTP server log data

- PMC: Full-text benchmark comprising academic papers from PubMed Central (PMC)

- Nested: Contains nested documents from StackOverflow Q&A

- Geonames: Points of interest from the Geonames geographical database

- nyc_taxis: Taxi rides in New York in the year 2015
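To run every track listed above against the same target, a small loop can generate one esrally command per track. The sketch below only prints the commands. `TARGET_HOST` and its default address are hypothetical placeholders, and the `--challenge` flag is left out because challenge names differ between tracks, so each track would run its default challenge unless you add one.

```shell
#!/bin/sh
# Hypothetical placeholder: replace with the private IP of the Elasticsearch instance.
TARGET_HOST="${TARGET_HOST:-10.0.0.10}"

# rally_commands is a hypothetical helper that prints one esrally command per track.
# No --challenge is given, so Rally would use each track's default challenge.
rally_commands() {
    for track in http_logs pmc nested geonames nyc_taxis; do
        echo "esrally race --track=$track --target-hosts=$TARGET_HOST:9200 --pipeline=benchmark-only"
    done
}

rally_commands
```

Running `esrally list tracks` shows the challenges available for each track, which helps when picking a `--challenge` value comparable to the one used for pmc.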

Each test ran three times. The following report uses the median of the results:
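Taking the median of three runs can be sketched in shell as follows. `median3` is a hypothetical helper, and the throughput values are illustrative only, not measured results.

```shell
#!/bin/sh
# median3 is a hypothetical helper: it prints the middle value of three numbers.
median3() {
    # Sort the three values numerically and keep the second line.
    printf '%s\n' "$1" "$2" "$3" | sort -n | sed -n '2p'
}

# Illustrative throughput values from three runs, not measured results.
median3 1200 1350 1280   # prints 1280
```

Using the median rather than the mean keeps a single unusually fast or slow run from skewing the reported number.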

Figure 1: Single node throughput with two logical threads

Figure 2: Single node throughput with four logical threads

Figure 3: Elasticsearch three-node cluster throughput

Benchmarking conclusions

In Figure 1, we observed up to 43% higher throughput on OCI Ampere A1 VMs compared to AMD Milan VMs and 30% higher compared to Intel Ice Lake VMs on single-node Elasticsearch with two logical threads.

In Figure 2, we observed up to 43% higher throughput on OCI Ampere A1 VMs compared to AMD Milan VMs and 25% higher compared to Intel Ice Lake VMs on single-node Elasticsearch with four logical threads.

We also tested Elasticsearch in a three-node cluster. In Figure 3, we observed up to 58% higher throughput on OCI Ampere A1 VMs compared to AMD Milan VMs and 27% higher compared to Intel Ice Lake VMs.
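For readers reproducing the comparison, a "percent higher" figure is typically the relative difference between two median throughputs, with the comparison platform as the baseline. The sketch below assumes that definition. `pct_gain` is a hypothetical helper, and the inputs are illustrative numbers rather than our measurements.

```shell
#!/bin/sh
# pct_gain is a hypothetical helper: it prints the percentage by which
# throughput $1 exceeds baseline throughput $2, rounded to a whole number.
pct_gain() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.0f\n", (a - b) / b * 100 }'
}

# Illustrative values only: 143 versus a baseline of 100.
pct_gain 143 100   # prints 43
```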

Conclusion

OCI A1 offers best-in-class performance for Elasticsearch. Combined with Oracle Cloud Infrastructure's penny-per-core pricing, it gives Elasticsearch workloads an unmatched price-performance advantage.

For more information, see the following resources: