In recent days, multicloud and migration services from one hyperscaler to another have become more frequent. Customers want to achieve the best results both technically and economically. We recently supported customers who wanted to migrate their services from Microsoft Azure to Oracle Cloud Infrastructure (OCI). Few of their workloads were based on Azure SQL Database, which is a fully managed platform-as-a-service (PaaS) database engine that handles most database management functions, such as upgrading, patching, backups, and monitoring, without user involvement.

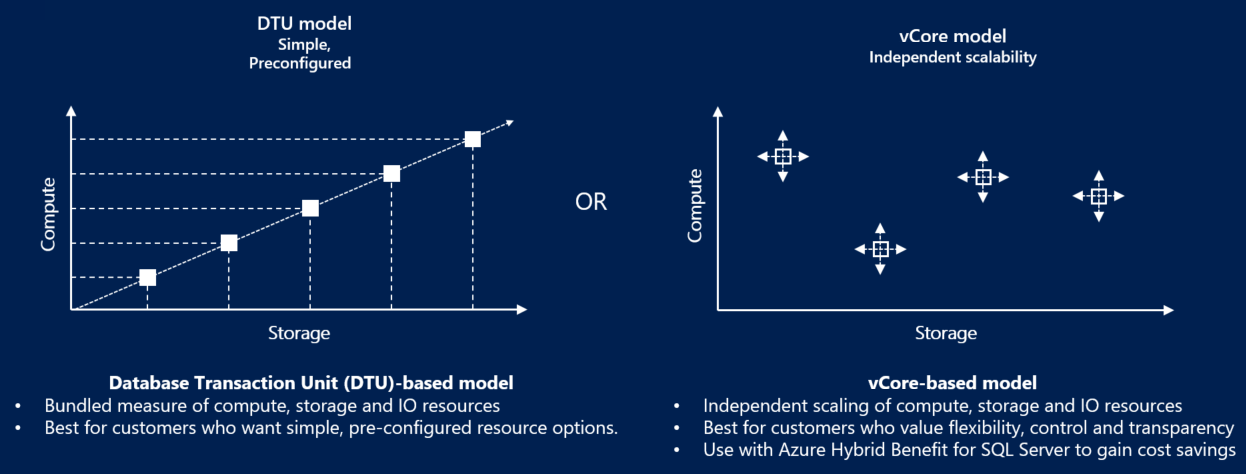

Most of the time, migration services and solutions from one hyperscaler to another means accurately mapping services. Hyperscalers can have many differences, especially in the ways they offer and deliver services to the customers. Specifically for Azure SQL Database, the deployment model is quite important and must be evaluated. You have the following available options in more detail:

-

Virtual core (vCore)-based: This deployment model provides a choice between provisioned or serverless compute tiers.

-

Provisioned compute tier: You choose the exact amount of compute resources that are always provisioned for your workload.

-

Serverless compute tier: You specify the autoscaling of the compute resources over a configurable compute range. The serverless compute tier automatically pauses databases during inactive periods when only storage is billed and automatically resumes databases when activity returns. The vCore unit price per unit of time is lower in the provisioned compute tier than it is in the serverless compute tier.

-

-

Database transaction unit (DTU)-based: This deployment model provides bundled compute and storage resources balanced for common workloads.

In this blog post, we investigate how to migrate Azure SQL Database deployed with the vCore or DTU models. Let’s analyze the two models because it’s critical when evaluating the migration approach to OCI.

vCore model

A virtual core (vCore) represents a logical CPU and offers the option to choose between hardware generations and the hardware physical characteristics, such as the number of cores, the memory, and the storage size. The vCore model gives you flexibility, control, transparency of individual resource consumption, and a straightforward way to translate on-premises workload requirements to the cloud. This model allows you to choose compute, memory, and storage resources based on your workload needs.

In the vCore model for SQL Database, you can choose between the General Purpose, Business Critical, and Hyperscale service tiers. To learn more, see the vCore service tiers.

In the vCore model, cost depends on the choice and usage of the following factors:

-

Service tier

-

Hardware configuration

-

Compute resources (the number of vCores and the amount of memory)

-

Reserved database storage

-

Actual backup storage

DTU model

The DTU model uses a database transaction unit (DTU) to calculate and bundle compute costs. A database transaction unit (DTU) represents a blended measure of CPU, memory, reads, and writes. The DTU model offers a set of preconfigured bundles of compute resources and included storage to drive different levels of application performance. If you prefer the simplicity of a preconfigured bundle and fixed payments each month, the DTU model might be more suitable for your needs.

In the DTU purchasing model, you can choose between the Basic, Standard, and Premium service tiers for Azure SQL Database. To learn more, review the DTU service tiers. Storage is included in the price of the DTU. You can add extra storage in the Standard and Premium tiers.

Resource estimation

When evaluating a migration for existing Azure SQL Database workloads, how you purchase them is important for sizing the resources to map them into OCI. While the vCore purchasing model can be easily converted into OCI resources, such as vCores to OCPUs, storage, and memory, the DTU is not. The DTU model represents a blended measure of different resources, such as CPU, memory, reads, and writes, which makes sizing resources more complicated.

Each tier has different requirements for how many DTUs require a vCore, as shown in the following conversion:

-

Every 100 DTUs in the Basic or Standard tiers require at least 1 vCore.

-

Every 125 DTUs in the Premium tier require at least 1 vCore.

This rule is approximate. It doesn’t account for the specific hardware used for the DTU database. The correct way to estimate the database size is to run the following mapping query on each DTU database to have the correct figures:

WITH dtu_vcore_map AS

(

SELECT rg.slo_name,

CAST(DATABASEPROPERTYEX(DB_NAME(), 'Edition') AS nvarchar(40)) COLLATE DATABASE_DEFAULT AS dtu_service_tier,

CASE WHEN slo.slo_name LIKE '%SQLG4%' THEN 'Gen4' --Gen4 is retired.

WHEN slo.slo_name LIKE '%SQLGZ%' THEN 'Gen4' --Gen4 is retired.

WHEN slo.slo_name LIKE '%SQLG5%' THEN 'standard_series'

WHEN slo.slo_name LIKE '%SQLG6%' THEN 'standard_series'

WHEN slo.slo_name LIKE '%SQLG7%' THEN 'standard_series'

WHEN slo.slo_name LIKE '%GPGEN8%' THEN 'standard_series'

END COLLATE DATABASE_DEFAULT AS dtu_hardware_gen,

s.scheduler_count * CAST(rg.instance_cap_cpu/100. AS decimal(3,2)) AS dtu_logical_cpus,

CAST((jo.process_memory_limit_mb / s.scheduler_count) / 1024. AS decimal(4,2)) AS dtu_memory_per_core_gb

FROM sys.dm_user_db_resource_governance AS rg

CROSS JOIN (SELECT COUNT(1) AS scheduler_count FROM sys.dm_os_schedulers WHERE status COLLATE DATABASE_DEFAULT = 'VISIBLE ONLINE') AS s

CROSS JOIN sys.dm_os_job_object AS jo

CROSS APPLY (

SELECT UPPER(rg.slo_name) COLLATE DATABASE_DEFAULT AS slo_name

) slo

WHERE rg.dtu_limit > 0

AND

DB_NAME() COLLATE DATABASE_DEFAULT <> 'master'

AND

rg.database_id = DB_ID()

)

SELECT dtu_logical_cpus,

dtu_memory_per_core_gb,

dtu_service_tier,

CASE WHEN dtu_service_tier = 'Basic' THEN 'General Purpose'

WHEN dtu_service_tier = 'Standard' THEN 'General Purpose or Hyperscale'

WHEN dtu_service_tier = 'Premium' THEN 'Business Critical or Hyperscale'

END AS vcore_service_tier,

CASE WHEN dtu_hardware_gen = 'Gen4' THEN dtu_logical_cpus * 1.7

WHEN dtu_hardware_gen = 'standard_series' THEN dtu_logical_cpus

END AS standard_series_vcores,

5.05 AS standard_series_memory_per_core_gb,

CASE WHEN dtu_hardware_gen = 'Gen4' THEN dtu_logical_cpus

WHEN dtu_hardware_gen = 'standard_series' THEN dtu_logical_cpus * 0.8

END AS Fsv2_vcores,

1.89 AS Fsv2_memory_per_core_gb,

CASE WHEN dtu_hardware_gen = 'Gen4' THEN dtu_logical_cpus * 1.4

WHEN dtu_hardware_gen = 'standard_series' THEN dtu_logical_cpus * 0.9

END AS M_vcores,

29.4 AS M_memory_per_core_gb

FROM dtu_vcore_map;Consider the following Azure SQL Databases purchased with DTU model must be sized:

-

Standard S0: 10 DTUs

-

Standard S2: 50 DTUs

The mapping query should return the following sample results (some columns are hidden for simplicity):

| dtu_logical_cpus |

dtu_memory_per_core_gb |

standard_series_vcores |

standard_series_memory_per_core_gb |

| 0.50 |

1.30 |

0.500 |

5.05 |

| 1.00 |

2.65 |

1.000 |

5.05 |

To map these two databases to OCI, we must account for the standard_series columns. It means mapping a 10-DTU database requires 0.5 vCores and 5.05-GB RAM per Core and mapping the 50-DTU database requires 1 vCore and 5.05-GB RAM per Core. Converting these figures to OCI is simple, considering 1 OCPU equals 2 vCores.

Migration process

Target database selection

Now that we know the resources required to host the target databases, we can identify the OCI service required. The source Azure SQL Database runs as full PaaS service on highly available infrastructures, so evaluate the target database platform accurately and according to your requirements. The following schema can help in the selection process:

For more information, see Deploying a highly available Microsoft SQL Server database on OCI using Always On availability groups.

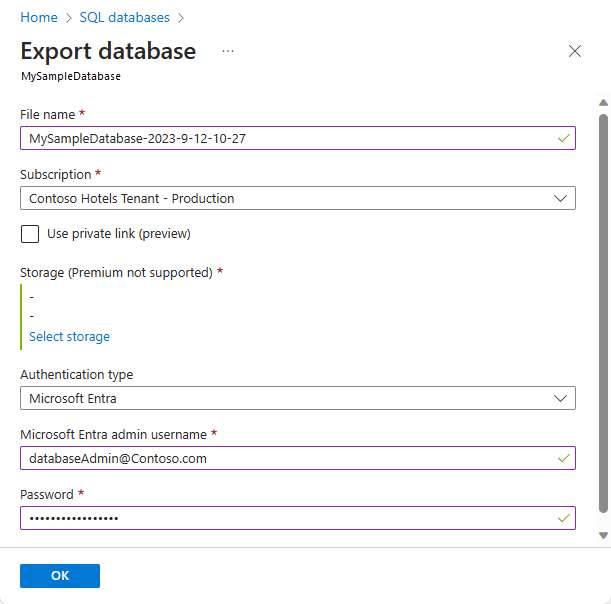

Migration approach using bacpac export and import

When the target database is Microsoft SQL Server on OCI, the easiest option is to create a backup package (bacpac) file of the source Azure SQL Database. You can create it directly on the Azure portal by selecting the related database and exporting it:

When created, the bacpac file must be moved to the MS SQL Server virtual machine (VM) hosted on OCI and then imported by using the Import Data-tier application guide. For detailed steps, see Import a BACPAC File to Create a New User Database.

Migration approach alternatives

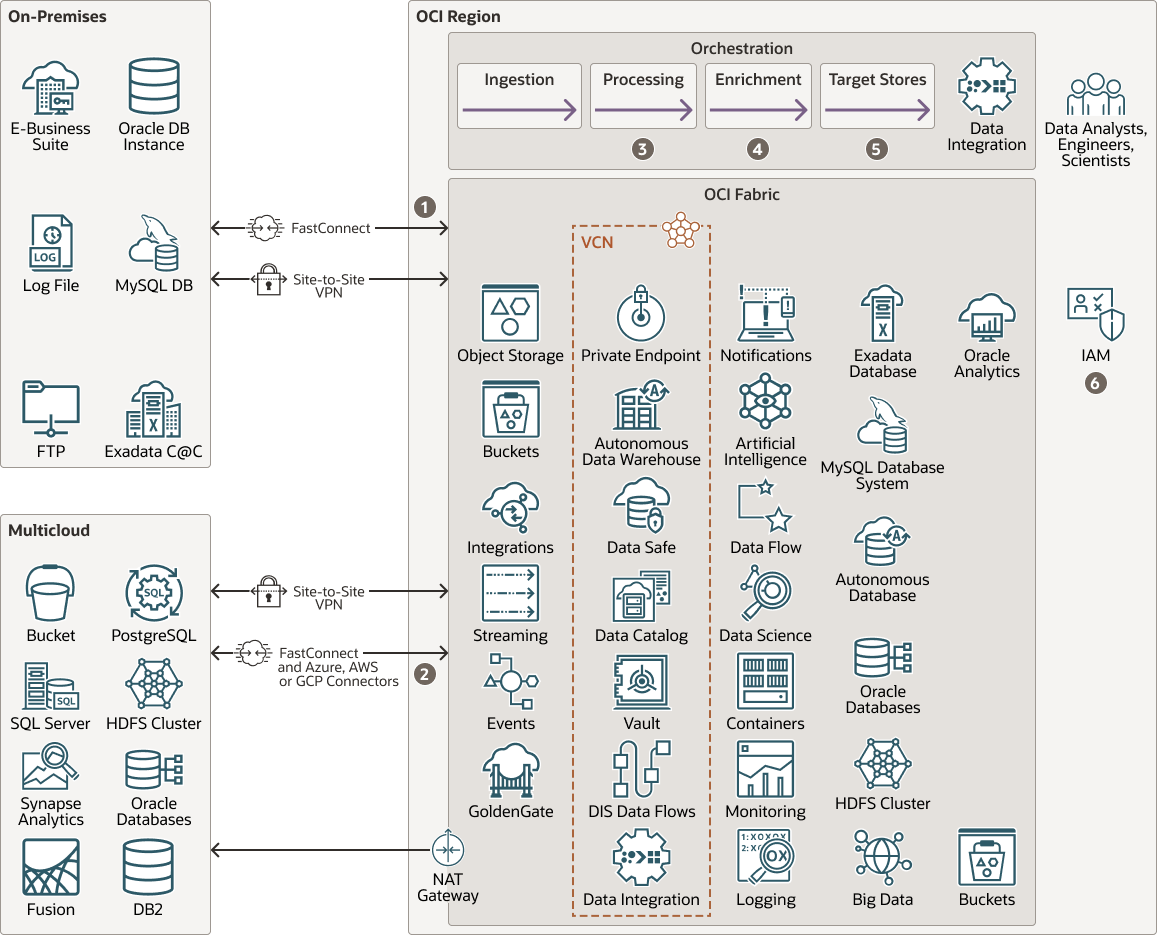

When the target database is different than the source, such as Oracle Autonomous Database, Oracle MySql Database, or Database with PostgreSql, the recommended approach is to migrate the source Azure SQL Database to an OCI database by using Oracle GoldenGate. For more details, refer to the Oracle GoldenGate product page.

Currently, Oracle GoldenGate doesn’t support Azure SQL Database as a source database, only as a target. For this reason, one quick method is to migrate the source Azure SQL Database to a temporary MS SQL Database, such as by using the bacpac export and import, and then using the MS SQL database as the source database for Oracle Goldengate. This method requires extra effort and an extrastage for loading the data.

We recommend the alternative approach of using the OCI Data Integration service. It provides a cloud native, serverless, fully managed extract transform load (ETL) platform that’s scalable and cost effective. OCI Data Integration supports many different data sources for its data assets, including Azure SQL Database.

The following diagram represents the OCI Data Integration reference architecture and data journey:

For more details and how to implement a successful data movement project, refer to the Oracle Data Integration documentation.

Conclusion

Several alternative database services provide feasible solutions when migrating Azure SQL Database to OCI. So, when evaluating a possible migration from Azure SQL Database to Oracle Cloud Infrastructure, select the target database first and then approach the migration as described.

To support your migration process, see the following documentation and resources: