Today we’re excited to announce the launch of Oracle Cloud Infrastructure GU1 instances powered by the NVIDIA Ampere A10 Tensor Core GPU, with RTX Workstations for professional graphics. With 24 GB of GPU memory on each GPU, the OCI GU1 instances provide both compute and encoder/decoder capabilities in a compact, low-power form factor that is flexible enough to support a range of graphics-intensive and AI workloads. They will be offered in both Bare Metal and VM shapes across our global regions. NVIDIA RTX vWS is the only virtual workstation that supports NVIDIA RTX technology, bringing advanced features like ray tracing, AI denoising, and Deep Learning Super Sampling (DLSS) to a virtual, cloud-based environment. Supporting the latest generation of NVIDIA GPUs unlocks the best performance possible, so designers and engineers can create their best work faster.

One GPU for both graphics and AI

The NVIDIA A10 GPU is designed for the most graphics-intensive applications and machine learning inference workloads, as well as training of simple or moderate machine learning models.

Graphics Workloads

Customers can use the NVIDIA RTX Virtual Workstation (vWS) software with the A10 GPU to accelerate a broad range of graphics applications like interactive video rendering, video editing, computer-aided design, photorealistic simulations, 3D visualization, virtual desktop infrastructure (VDI) and cloud gaming. The second generation of RT Cores in the A10 speeds up the rendering of ray-traced motion blur for faster results with greater visual accuracy. Combined with the A10's 24 GB of GDDR6 memory and 600 GB/s of memory bandwidth, NVIDIA RTX vWS delivers the performance required for graphics-intensive workloads and supports creative and technical professionals.

The NVIDIA RTX vWS with A10 GPUs also adds encoder/decoder hardware that makes it ideal for video streaming and cloud gaming workloads.

Machine Learning Workloads

For machine learning, the A10 GPU delivers real-time AI inference performance at scale for uses such as voice assistants, chatbots, and visual search. Tensor Core capabilities like deep learning super sampling (DLSS) help enhance graphics editing for select applications. To support the diverse needs of these workloads, we combined the A10 GPU with 64 cores of the latest generation Intel Xeon Ice Lake CPU, 1 TB of system memory and 7.68 TB of low latency NVMe local storage for caching data.

We also offer the powerful BM.GPU.GM4.8 instance based on the NVIDIA Ampere A100 Tensor Core GPU, which is ideal for training large models or processing large datasets. However, for small-to-medium sized AI workloads, the OCI GU1 instance offers the perfect balance of performance and features our customers need in a flexible form factor.

Optimized price / performance

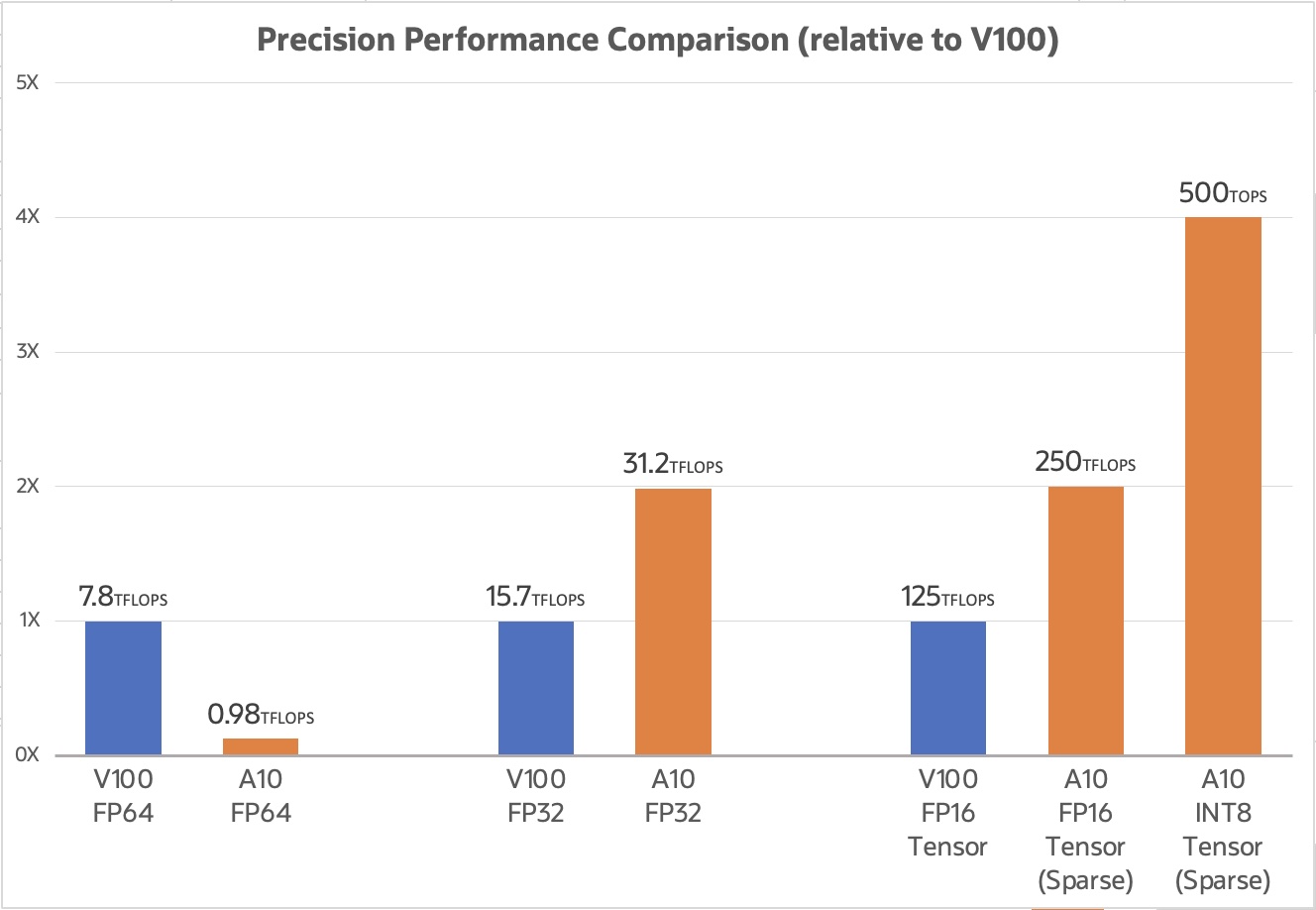

The NVIDIA Ampere generation Tensor Core technology combined with the smaller form factor of the A10 means that customers can now get better performance at a lower cost. Compared to the NVIDIA V100 GPU used in our previous generation GPU3 instances, the A10 is capable of up to 4x peak performance (especially with sparsity) for most AI workloads while costing 32% less based on the list price ($2.95 per GPU per hour for GPU3 vs $2.00 per GPU per hour for GU1). Note that the V100 GPU performs better for high performance computing workloads that need double precision (FP64) performance.
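The 32% figure follows directly from the two list prices quoted above. The short Python sketch below reproduces that arithmetic; the variable and function names are illustrative only, and the performance-per-dollar line simply takes the "up to 4x" peak figure at face value, so it should be read as an upper bound rather than a measured result.

```python
# List prices quoted in the text above (USD per GPU per hour).
GPU3_V100_PRICE = 2.95
GU1_A10_PRICE = 2.00

# "Up to 4x" peak figure from the text; real workloads may see less.
PEAK_SPEEDUP_UPPER_BOUND = 4.0


def percent_savings(old_price: float, new_price: float) -> float:
    """Return how much cheaper new_price is than old_price, in percent."""
    return (old_price - new_price) / old_price * 100


savings = percent_savings(GPU3_V100_PRICE, GU1_A10_PRICE)

# Peak performance per dollar relative to GPU3, assuming the full 4x applies.
peak_perf_per_dollar_ratio = (
    PEAK_SPEEDUP_UPPER_BOUND * GPU3_V100_PRICE / GU1_A10_PRICE
)

print(f"GU1 list price is about {savings:.0f}% lower than GPU3")
print(f"Upper bound on peak performance per dollar: {peak_perf_per_dollar_ratio:.1f}x")
```

Actual price/performance depends on the workload, in particular on whether it benefits from sparsity and whether it needs FP64.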

GPU GU1 shapes

OCI GU1 instances will be available as Bare Metal servers for maximum performance and VM shapes (coming soon) for enhanced flexibility to serve workloads at every scale. The OCI GU1 instance is priced at US$2 per GPU per hour across our global regions, which enables us to serve customer workloads in a more consistent, cost-efficient manner. Key technical specifications are listed below:

| Shape | GPU | GPU memory | CPU | System memory | Storage | Network | List price per hour |
|---|---|---|---|---|---|---|---|
| BM.GPU.GU1.4 | 4x NVIDIA A10 | 96 GB | 2x 32-core Intel 8358, 2.6 GHz base, 3.4 GHz turbo | 1 TB DDR4 | 2x 3.84 TB NVMe, up to 1 PB block storage | 2x 50 Gbps | $8.00 |
| *VM.GPU.GU1.1 | 1x NVIDIA A10 | 24 GB | 15-core Intel 8358, 2.6 GHz base, 3.4 GHz turbo | 240 GB DDR4 | Up to 1 PB block storage | 24 Gbps | $2.00 |
| *VM.GPU.GU1.2 | 2x NVIDIA A10 | 48 GB | 30-core Intel 8358, 2.6 GHz base, 3.4 GHz turbo | 480 GB DDR4 | Up to 1 PB block storage | 48 Gbps | $4.00 |

* VM shapes coming soon
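As a rough illustration of how the table can be used for sizing, the Python sketch below encodes the three shapes and picks the cheapest one whose total GPU memory meets a requirement. The `Shape` class and `cheapest_shape_for` helper are hypothetical names made up for this example and are not part of any OCI SDK; the numbers are copied from the table above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Shape:
    name: str
    gpus: int
    gpu_memory_gb: int          # total across all GPUs in the shape
    list_price_per_hour: float  # USD, from the table above


SHAPES = [
    Shape("BM.GPU.GU1.4", 4, 96, 8.00),
    Shape("VM.GPU.GU1.1", 1, 24, 2.00),
    Shape("VM.GPU.GU1.2", 2, 48, 4.00),
]


def cheapest_shape_for(required_gpu_memory_gb: int) -> Optional[Shape]:
    """Return the lowest-priced shape with enough total GPU memory, or None."""
    candidates = [s for s in SHAPES if s.gpu_memory_gb >= required_gpu_memory_gb]
    return min(candidates, key=lambda s: s.list_price_per_hour, default=None)


for shape in SHAPES:
    per_gpu_memory = shape.gpu_memory_gb / shape.gpus
    per_gpu_price = shape.list_price_per_hour / shape.gpus
    print(f"{shape.name}: {per_gpu_memory:.0f} GB and ${per_gpu_price:.2f} per GPU per hour")

choice = cheapest_shape_for(40)
print("Cheapest shape with at least 40 GB of GPU memory:", choice.name if choice else "none")
```

Every shape works out to 24 GB and US$2.00 per GPU per hour, consistent with the per-GPU pricing stated above. Note that total GPU memory is spread across separate 24 GB devices, so a workload that needs more than 24 GB must be able to split across GPUs.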

Broad availability

The OCI GU1 instances will be available across eleven regions at launch:

- North America: US East (Ashburn), US West (Phoenix), US West (San Jose), Canada Southeast (Toronto)

- Europe and Middle East: UK South (London), Germany Central (Frankfurt), France Central (Paris), Saudi Arabia West (Jeddah)

- Asia Pacific: Japan East (Tokyo), Japan Central (Osaka), Singapore (Singapore)

Getting Started

For more information about the OCI GU1 instance specifications, pricing and availability, please see our documentation. To learn more about Oracle Cloud Infrastructure’s capabilities, explore the following resources (OCI GPUs for AI innovators, Oracle AI, OCI Compute) to get started.

The addition of the NVIDIA A10 GPU complements our existing lineup of NVIDIA GPUs, opening a new era of computing for startups, enterprises, and governments around the world on Oracle Cloud Infrastructure. Not all workloads are the same, and some may require customization to work optimally on the latest-generation GPU hardware. Oracle Cloud offers technical support to get your workload up and running, so please talk to your sales contact for more information.