The Oracle Security Operations Center (SOC) recently observed and mitigated a bot attack on a global media organization by using machine learning and the Oracle Cloud Infrastructure Web Application Firewall (WAF).

The company, which operates in the global online marketing space, was targeted by an orchestrated content scraping operation executed by a sophisticated army of bots. By incorporating machine learning (ML), a subset of artificial intelligence (AI), the Oracle SOC was able to identify the complex attack method and prevent any further impact on the business.

Detection and Baselines

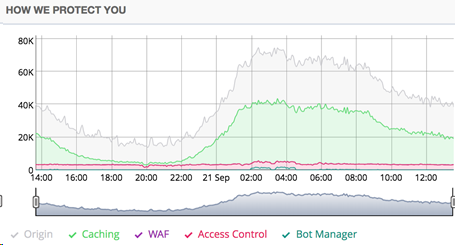

The Oracle SOC first became aware of the attacks when it noticed two anomalies: a spike in the traffic reaching the company’s web applications, and unusual geolocations for the sources of that traffic. This example illustrates the importance of establishing a baseline view of your network activity. When you have a clear understanding of what normal activity looks like, you can detect variations in those patterns, and recognize and stop attacks more quickly.
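As a rough illustration of how a baseline turns into an alert, the two anomalies described above (a volume spike and an unexpected mix of source countries) can be checked with a few lines of Python. This is a minimal sketch, not the Oracle SOC's tooling: the function names, thresholds, request counts, and the placeholder country code `XX` are all assumptions made for the example.

```python
from statistics import mean, stdev


def volume_is_anomalous(baseline_counts, current_count, z_threshold=3.0):
    """Flag a count that sits more than z_threshold standard deviations
    above the mean of the baseline counts (hypothetical threshold)."""
    mu = mean(baseline_counts)
    sigma = stdev(baseline_counts)
    if sigma == 0:
        return current_count > mu
    return (current_count - mu) / sigma > z_threshold


def unexpected_countries(baseline_share, current_counts,
                         min_share=0.05, tolerance=3.0):
    """Return countries that carry at least min_share of current traffic
    and more than tolerance times their baseline share."""
    total = sum(current_counts.values())
    flagged = []
    for country, count in current_counts.items():
        share = count / total
        expected = baseline_share.get(country, 0.0)
        if share >= min_share and share > tolerance * expected:
            flagged.append(country)
    return sorted(flagged)


# Hypothetical hourly request counts observed during normal operation.
baseline = [1000, 1100, 950, 1050, 1020, 980, 1010]
print(volume_is_anomalous(baseline, 1080))   # False: within normal variation
print(volume_is_anomalous(baseline, 2500))   # True: clear spike

# Hypothetical baseline traffic share by country versus the current hour.
normal_share = {"US": 0.60, "GB": 0.25, "DE": 0.15}
current = {"US": 600, "GB": 250, "DE": 150, "XX": 500}
print(unexpected_countries(normal_share, current))  # ['XX']
```

In practice the baseline would also account for time of day and day of week, which this sketch leaves out.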

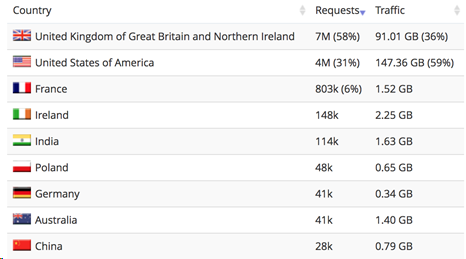

The following charts show the baseline traffic patterns of this particular organization:

Normal traffic pattern

Normal traffic by country of origin

Attack of the Scraper Bots

Scraper bots harvest web content from legitimate sites and sell it to third parties. Because this organization’s revenue depends on providing unique content, the bots were having a detrimental impact on its bottom line, brand reputation, and recognition. This wasn’t just a matter of copyright theft; there was also resource theft. The high volume of nonhuman traffic impeded the user experience and, as a result, forced the organization to increase the number of servers needed to handle the onslaught of requests. The organization projected that, if the problem were not resolved soon, it would need to increase hardware spending on web services by 100 percent year over year to ensure that sufficient resources were available to handle the load.

To compound matters, these bots were not your typical bots. Although content scraping is fairly common, the way these bots were built and the way they carried out the attacks were complex and difficult to identify.

The Oracle Cloud Infrastructure WAF, with the JavaScript Challenge enabled, was unable to solve the problem because the bots looked like legitimate visitors. When a normal user visits a website, the web browser makes a GET request, which retrieves the content of the page as part of the HTTP/HTTPS transaction so that the browser can render the HTML for the visitor. These bots were able to do just that. However, that was only one of the ways in which the bots evaded detection.

Another telltale sign of bot activity is the speed with which they navigate and execute actions on a given page. Human users spend an extended amount of time on each page, digesting content as they read it, scrolling and clicking individual links. Bots typically stay on a page only for a fraction of a second, click hundreds of links simultaneously, and trigger actions that would have otherwise been hidden without scrolling. But these complex bots anticipated the bot challenges and the time-based detection methods normally used to identify this behavior. They purposefully took longer to scan pages, and they divided the scraping among many sources, so it wasn’t all coming from one IP address.
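To make the evasion concrete, the sketch below applies a simple time-based check of the kind described above: it flags any IP address whose requests arrive too quickly or in too great a number. The function name, thresholds, and sample traffic are assumptions for illustration (the addresses come from documentation-reserved ranges), not rules taken from the Oracle WAF.

```python
from collections import defaultdict


def flag_fast_clients(requests, min_dwell_seconds=1.0, max_requests=100):
    """requests is a list of (ip, timestamp_in_seconds) tuples.
    Flag an IP if its average gap between requests is below
    min_dwell_seconds or if it sent more than max_requests."""
    by_ip = defaultdict(list)
    for ip, ts in requests:
        by_ip[ip].append(ts)

    flagged = set()
    for ip, stamps in by_ip.items():
        stamps.sort()
        gaps = [later - earlier for earlier, later in zip(stamps, stamps[1:])]
        too_fast = bool(gaps) and sum(gaps) / len(gaps) < min_dwell_seconds
        too_many = len(stamps) > max_requests
        if too_fast or too_many:
            flagged.add(ip)
    return flagged


# A naive bot: 50 requests from one address, 0.1 seconds apart.
naive = [("198.51.100.7", i * 0.1) for i in range(50)]

# A patient, distributed bot: 50 addresses, each making 4 requests
# spaced 20 seconds apart (200 requests in total).
patient = [(f"203.0.113.{n}", k * 20.0) for n in range(50) for k in range(4)]

print(flag_fast_clients(naive))    # {'198.51.100.7'}
print(flag_fast_clients(patient))  # set()
```

The second scraper fetches four times as many pages as the first, yet no single address crosses either threshold, which is why per-IP timing rules alone were not enough here.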

Bots this complex require a solution just as advanced to stop them.

Machine Learning to the Rescue

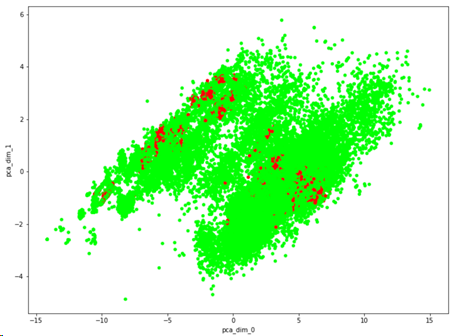

Because of this complexity, a data scientist from the Oracle SOC applied a machine learning algorithm to the malicious requests in an attempt to identify a pattern. The analysis showed that the attack had been hidden among legitimate traffic over a long period of time. Without an automated algorithm applied to a very large data sample, the pattern would have gone undetected by manual human analysis alone.
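The article does not say which algorithm the data scientist used. Purely as an illustration of how an unsupervised method can separate traffic that looks similar request by request, the sketch below runs a plain k-means clustering over two hypothetical per-client features: the standard deviation of time between page views, and the fraction of the site's pages that the client requested. The features, sample values, and starting centroids are all assumptions made for this example.

```python
import math


def assign(points, centroids):
    """Label each point with the index of its nearest centroid."""
    return [
        min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
        for p in points
    ]


def kmeans(points, centroids, iterations=10):
    """Plain k-means (Lloyd's algorithm) from the given starting centroids.
    Returns the final labels and centroids."""
    for _ in range(iterations):
        labels = assign(points, centroids)
        updated = []
        for i, old in enumerate(centroids):
            members = [p for p, label in zip(points, labels) if label == i]
            if members:
                # New centroid is the per-feature mean of the cluster members.
                updated.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members))
                )
            else:
                updated.append(old)  # keep an empty cluster's centroid
        centroids = updated
    return assign(points, centroids), centroids


# Hypothetical clients: (std dev of seconds between page views,
#                        fraction of the site's pages requested).
clients = [
    (12.0, 0.02), (9.5, 0.03), (15.0, 0.01), (11.0, 0.04),  # irregular, narrow
    (1.0, 0.60), (1.5, 0.55), (0.8, 0.65),                  # regular, broad
]

labels, centers = kmeans(clients, centroids=[clients[0], clients[-1]])
print(labels)  # [0, 0, 0, 0, 1, 1, 1]
```

A real analysis would use many more features, scale them to comparable ranges, and run over a far larger sample, as the article notes.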

Bot attack pattern: Legitimate traffic = green, malicious traffic = red

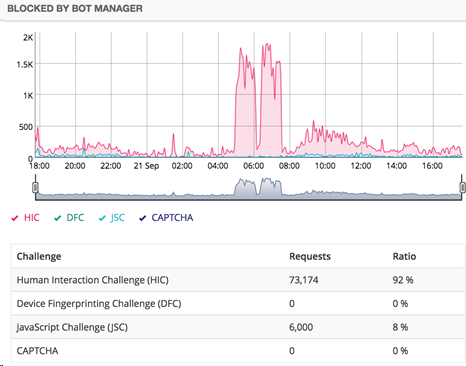

The Oracle SOC was able to stop the scraping attacks with a method known as the Human Interaction Challenge. This challenge is an advanced countermeasure that looks for natural human interactions such as mouse movements, time on site, and page scrolling to identify bots. It also helps identify normal usage patterns for each web application, based on expected visitor behavior. With the help of machine learning, customized security postures were deployed to stop bots that deviated from the standard human-based usage patterns.
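The signals named above (mouse movements, time on site, and page scrolling) can be combined into a simple per-session check. The following is a minimal sketch of that idea only; the class, field names, thresholds, and the two-out-of-three rule are assumptions for illustration and do not describe how the Oracle Human Interaction Challenge is implemented.

```python
from dataclasses import dataclass


@dataclass
class SessionSignals:
    """Hypothetical interaction counts collected for one visitor session."""
    mouse_moves: int
    scroll_events: int
    seconds_on_site: float


def looks_human(session, min_mouse_moves=5, min_scroll_events=1,
                min_seconds=3.0):
    """Treat a session as human if at least two of the three
    interaction signals reach their (assumed) minimums."""
    checks = [
        session.mouse_moves >= min_mouse_moves,
        session.scroll_events >= min_scroll_events,
        session.seconds_on_site >= min_seconds,
    ]
    return sum(checks) >= 2


reader = SessionSignals(mouse_moves=40, scroll_events=6, seconds_on_site=45.0)
patient_bot = SessionSignals(mouse_moves=0, scroll_events=0, seconds_on_site=60.0)

print(looks_human(reader))       # True
print(looks_human(patient_bot))  # False
```

Note that the bot in this example passes the time-on-site check by waiting, but it still fails because it produces no mouse or scroll activity.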

Malicious requests blocked by the Human Interaction Challenge

Lesson Learned

No matter how advanced bots are, and no matter how well they can mimic human behavior, you can defeat them because they will never be able to completely copy a web application’s “at peace” profile. This lesson underscores the importance of developing a profile of your web application’s normal, everyday legitimate traffic, regularly monitoring it for anomalies, and challenging all visitors to detect unwanted bot activity.