Oracle continues to push the limits in the cloud on both performance and cost. By running high-performance computing (HPC) on Oracle Cloud Infrastructure, you can get the same performance as on-premises, with the added benefits of being able to deploy instantly and eliminating hardware acquisition and maintenance costs. This opportunity has influenced many enterprise customers to move their HPC workloads to Oracle Cloud. For examples, see Nissan Moves to Oracle Cloud and Oracle Cloud Infrastructure Behind World’s Fastest Supercomputer. The types of workloads running on Oracle Cloud extend into every industry, with some of the most common ones being computational fluid dynamics (CFD) and crash simulation applications.

“At Oracle Cloud, we want to bring the power of supercomputing to every engineer and scientist,” said Karan Batta, Vice President, Oracle Cloud Engineering. “To deliver on this vision, we strive to achieve the best performance in the cloud for our high-performance computing (HPC) customers, investing in technologies like bare metal compute, high-performance networking, and high-throughput NVMe SSD-based storage. These core Oracle Cloud technologies and cutting-edge offerings enable us to deliver predictable performance for HPC applications.”

Top Performing HPC Instances with Intel Technology

At the foundation of our bare metal platform, we rely on Intel to provide world-class processors for our CPU-optimized Compute instances. Our HPC instance, BM.HPC2.36, is powered by two Intel Xeon Gold 6154 processors for a total of 36 cores, each running at 3.70 GHz. Combined with low-latency cluster networking, BM.HPC2.36 is one of the top-performing instances in the cloud at one of the lowest prices: $2.70 per instance per hour.
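To put the quoted price in per-core terms, the following minimal sketch works through the arithmetic. It uses only the figures stated above ($2.70 per instance per hour, 36 cores per instance); the 16-node cluster size is the one used in the benchmarks later in this post, and the function names are illustrative, not part of any Oracle tooling.

```python
# Figures quoted in the text for the BM.HPC2.36 shape.
PRICE_PER_INSTANCE_HOUR = 2.70  # USD per instance per hour
CORES_PER_INSTANCE = 36         # two 18-core Xeon Gold 6154 processors


def price_per_core_hour(price=PRICE_PER_INSTANCE_HOUR, cores=CORES_PER_INSTANCE):
    """Return the compute price of one core for one hour, in USD."""
    return price / cores


def cluster_cost(nodes, hours, price=PRICE_PER_INSTANCE_HOUR):
    """Return the compute cost of running `nodes` instances for `hours` hours, in USD."""
    return nodes * hours * price


if __name__ == "__main__":
    print(f"Price per core-hour: ${price_per_core_hour():.3f}")
    print(f"16-node cluster for 1 hour: ${cluster_cost(16, 1):.2f}")
```

This covers compute only; storage, networking, and software licensing are outside the scope of the sketch.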

We recently partnered with Intel to run computer-aided engineering (CAE) workloads on our HPC Cluster Network platform. We benchmarked the following common applications used for CFD and Crash Simulation analyses:

- OpenFOAM
- LS-DYNA
- Ansys Fluent

Across all benchmarks, Oracle’s HPC Cluster Network matched the performance of on-premises environments and scaled efficiently for both large and small models.

“Oracle Cloud’s bare metal HPC offering is a great example of Intel and Oracle bringing together leading HPC solutions for the cloud,” said Trish Damkroger, GM and Vice President of High Performance Computing at Intel Corporation. “Built on Intel Xeon technology, Oracle’s bare metal HPC offering provides powerful clusters that deliver exceptional performance for a broad range of HPC workloads at every scale.”

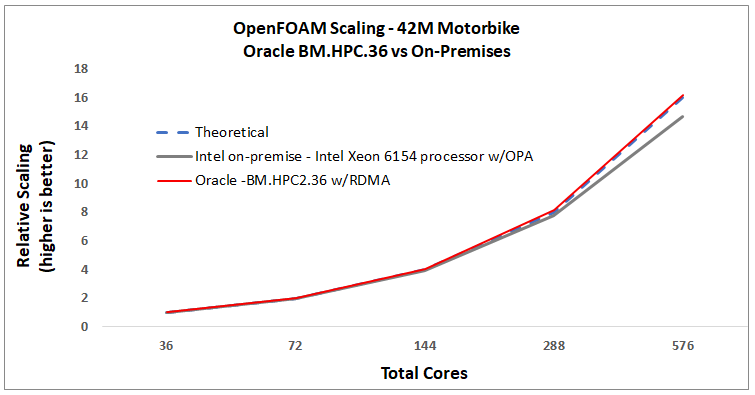

OpenFOAM* Performance

To demonstrate OpenFOAM performance, we chose a 42M motorbike model because it’s popular and simple to run. The model was run across a 16-node BM.HPC2.36 cluster with low-latency RDMA networking, using the following inputs:

- OpenFOAM v2006
- Hyperthreading enabled
- 36 processes per node
- Intel MPI 2019.4.243
- NFS File Server
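As a rough illustration of what these inputs mean for the size of the job, the sketch below computes the total number of MPI processes and the average share of the model handled by each process as the cluster grows. It assumes that "42M" refers to 42 million mesh cells, which the post does not state explicitly, and the helper names are hypothetical.

```python
PROCESSES_PER_NODE = 36    # from the input list: one process per physical core
MODEL_CELLS = 42_000_000   # assumption: "42M" means 42 million mesh cells


def total_ranks(nodes, processes_per_node=PROCESSES_PER_NODE):
    """Return the number of MPI processes launched across the cluster."""
    return nodes * processes_per_node


def cells_per_rank(nodes, cells=MODEL_CELLS):
    """Return the average number of cells each MPI process works on."""
    return cells / total_ranks(nodes)


if __name__ == "__main__":
    for nodes in (1, 2, 4, 8, 16):
        print(f"{nodes:2d} nodes: {total_ranks(nodes):3d} ranks, "
              f"about {cells_per_rank(nodes):,.0f} cells per rank")
```

As the per-process share of the model shrinks, communication between processes takes up a larger fraction of each step, which is why network latency matters more at higher node counts.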

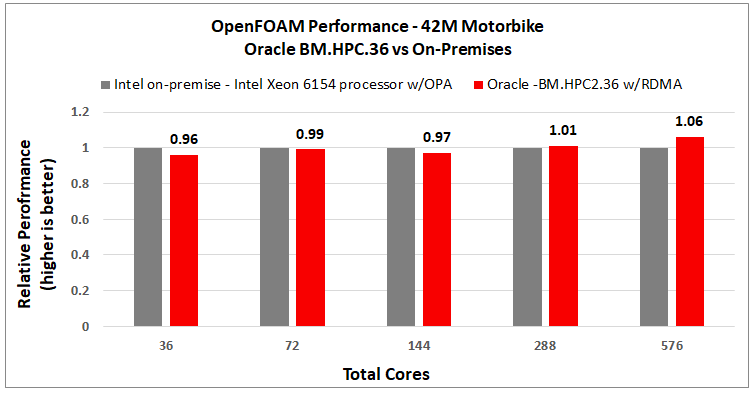

The results for the 42M model on Oracle Cloud show scaling consistent with perfect linearity.
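For readers who want to check linearity on their own runs, here is a minimal sketch of the usual calculation: speedup relative to the smallest run, divided by the ideal speedup for that node count, gives parallel efficiency. The function name is illustrative, and the timings in the example are placeholders chosen to show perfect scaling, not measurements from this benchmark.

```python
def scaling_table(elapsed_by_nodes):
    """Return (nodes, speedup, efficiency) rows from a {nodes: elapsed_seconds} map.

    Speedup is measured against the run with the fewest nodes. Efficiency is
    the speedup divided by the ideal speedup, so 1.0 means perfectly linear.
    """
    base_nodes = min(elapsed_by_nodes)
    base_time = elapsed_by_nodes[base_nodes]
    rows = []
    for nodes in sorted(elapsed_by_nodes):
        speedup = base_time / elapsed_by_nodes[nodes]
        ideal = nodes / base_nodes
        rows.append((nodes, speedup, speedup / ideal))
    return rows


if __name__ == "__main__":
    # Placeholder timings in seconds, NOT results from the benchmark above.
    placeholder = {1: 1600.0, 2: 800.0, 4: 400.0, 8: 200.0, 16: 100.0}
    for nodes, speedup, efficiency in scaling_table(placeholder):
        print(f"{nodes:2d} nodes: speedup {speedup:5.2f}, efficiency {efficiency:.0%}")
```

An efficiency that stays near 100% as nodes are added is what "consistent with perfect linearity" looks like in numbers.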

We also compared the elapsed time with that of an on-premises environment containing Intel Xeon Gold 6154 processors and a 100 Gbps Intel OPA network. Shown as relative performance with the onsite cluster as the baseline, Oracle Cloud outperformed the on-premises environment at higher core counts.
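The post does not spell out how relative performance is computed. One common convention, assumed here, is the baseline elapsed time divided by the candidate elapsed time at the same core count, so values above 1.0 mean the candidate finished faster. The function below is a hypothetical helper written under that assumption.

```python
def relative_performance(baseline_seconds, candidate_seconds):
    """Return candidate performance relative to the baseline (1.0 = equal).

    Assumes the ratio of elapsed times: a value above 1.0 means the candidate
    (for example, the cloud cluster) finished faster than the baseline.
    """
    if baseline_seconds <= 0 or candidate_seconds <= 0:
        raise ValueError("elapsed times must be positive")
    return baseline_seconds / candidate_seconds


if __name__ == "__main__":
    # Placeholder timings in seconds, NOT results from the benchmark above.
    print(relative_performance(100.0, 80.0))
```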

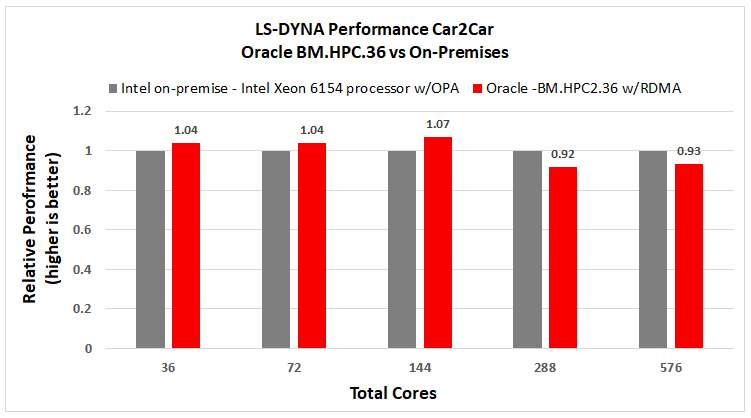

LS-DYNA Performance

The LS-DYNA Car2Car model was chosen because it’s a smaller model that drives a high amount of network traffic and stresses the network. The model was run across a 16-node BM.HPC2.36 cluster with RDMA low latency networking using the following inputs:

- LS-DYNA R10.1 or R11 with AVX2 CPU optimization
- Hyperthreading enabled
- 36 processes per node
- Intel MPI 2018.3.222

The relative performance, shown in the following chart, compares the elapsed time against data from an on-premises environment containing Intel Xeon Gold 6154 processors and a 100 Gbps Intel OPA network. Oracle Cloud achieved better performance from 72 to 144 cores and dropped off slightly at higher core counts.

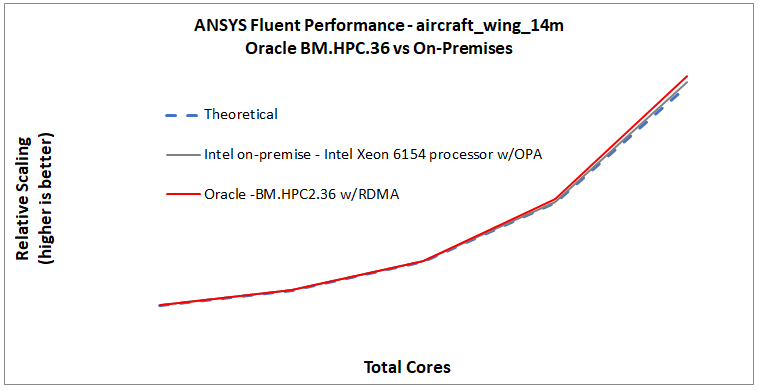

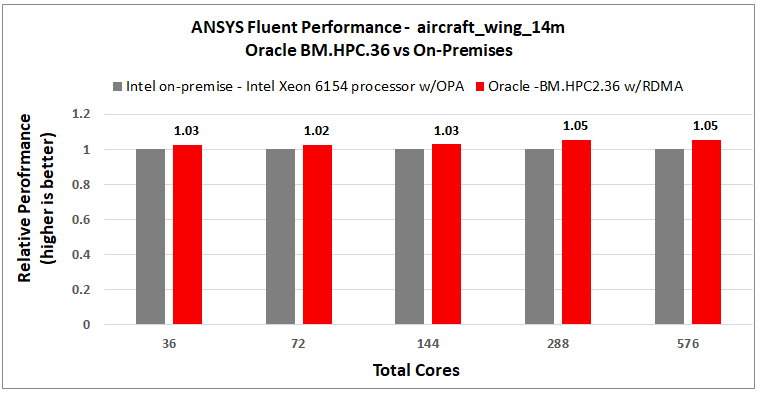

Ansys Fluent Performance

Ansys Fluent is one of the most commonly used CFD applications and contains a wealth of benchmarking models. We chose the Aircraft Wing 14m model to represent small model performance and scalability on our HPC Cluster Networking platform. The model ran across a 16-node BM.HPC2.36 cluster with RDMA low latency networking using the following inputs:

- Fluent R1 2020
- Hyperthreading enabled
- 36 processes per node
- Intel MPI 2018.3.10
- NFS File Server

The results show scaling consistent with a perfect linear relationship.

The performance was also compared with an on-premises environment containing Intel Xeon Gold 6154 processors and a 100 Gbps Intel OPA network. As shown in the following figures, Oracle Cloud achieved performance similar to or better than the onsite cluster.

Deploy Your Own HPC Cluster Network

We encourage you to try your own benchmarks on Oracle HPC Cluster Networking. First, sign up for Oracle Cloud or contact your Oracle representative. You can then launch a high-performance computing cluster through our Marketplace, or a CFD Ready Cluster if you want the prerequisite CFD libraries and OpenFOAM-7 installed. For more information on how to set up a cluster and install some of these benchmarks, refer to our CFD Ready Runbook in Oracle QuickStart.

*OpenFOAM Disclaimer: This offering is not approved or endorsed by OpenCFD Limited, producer and distributor of the OpenFOAM software via www.openfoam.com, and owner of the OPENFOAM and OpenCFD trademarks.