Oracle is following a strategy of regional expansion in the cloud. Recently in Europe, Amsterdam and Zurich were added as possible locations for customers to run their workloads.

Based on feedback from customers, this blog post addresses multi-region architectures. Many customers raised questions about latency expectations and the ability to deploy a database in one region while running the application in another. In this blog post, we use a data warehouse in Frankfurt and business intelligence in Amsterdam.

The Frankfurt and Amsterdam regions are arguably the most obvious choices to connect because they both fall under EU jurisdiction. More local or industry-specific data sovereignty law may apply, but this isn’t the case for most workloads. Therefore, these regions are a natural pair for disaster recovery and similar cross-region scenarios. The two metros are about 364 km or 226 miles apart, which is far enough to meet common data security guidance (often 100 km, 200 km, or 100 miles) and still close enough to expect relatively low latency, which is primarily influenced by distance.
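To make the link between distance and latency concrete, the following minimal sketch estimates a lower bound for the round-trip time over 364 km. It assumes that light in optical fiber travels at roughly 200,000 km/s (about two thirds of the speed of light in a vacuum) and that the fiber runs in a straight line. Neither assumption comes from the measurements in this post, and the function name is made up for illustration.

```python
# Assumption: signal speed in optical fiber is roughly 200,000 km/s.
FIBER_SPEED_KM_PER_S = 200_000.0


def min_round_trip_ms(distance_km: float,
                      speed_km_per_s: float = FIBER_SPEED_KM_PER_S) -> float:
    """Lower bound on the round-trip time over a straight fiber of this length."""
    one_way_s = distance_km / speed_km_per_s
    return 2 * one_way_s * 1000.0


# Distance between the Frankfurt and Amsterdam metros, as stated in the text.
print(f"{min_round_trip_ms(364):.2f} ms")  # prints "3.64 ms"
```

Real cable routes are longer than the straight-line distance, and network devices add their own delay, so measured values are expected to be higher than this bound.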

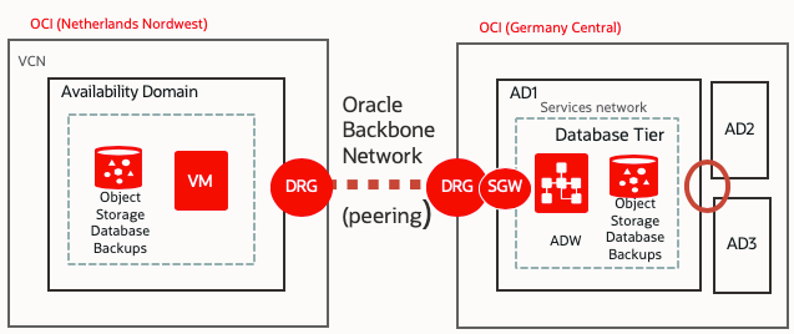

The Setup of the Architecture

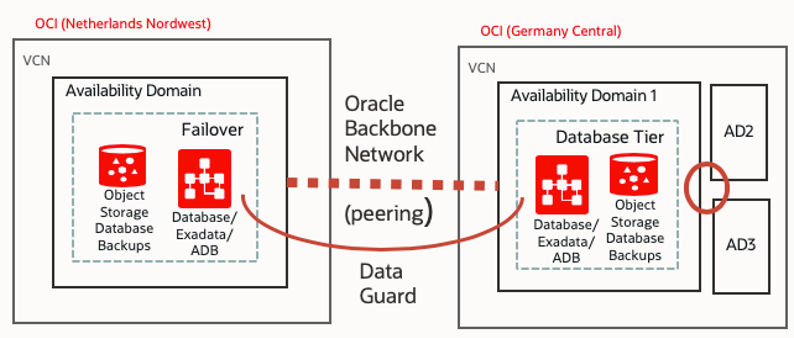

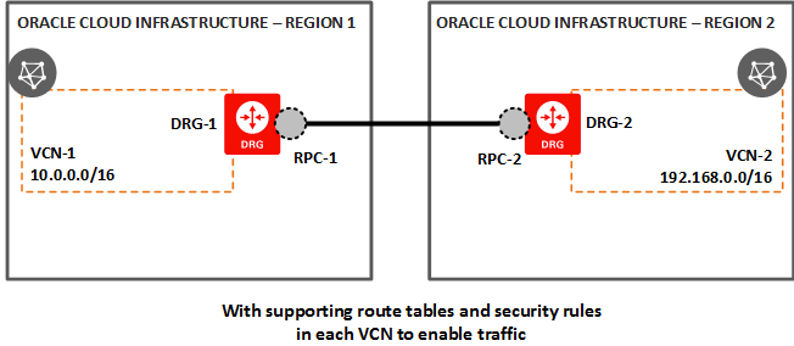

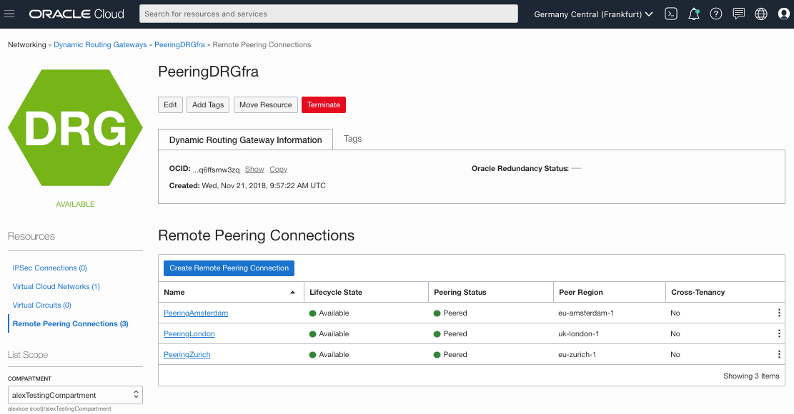

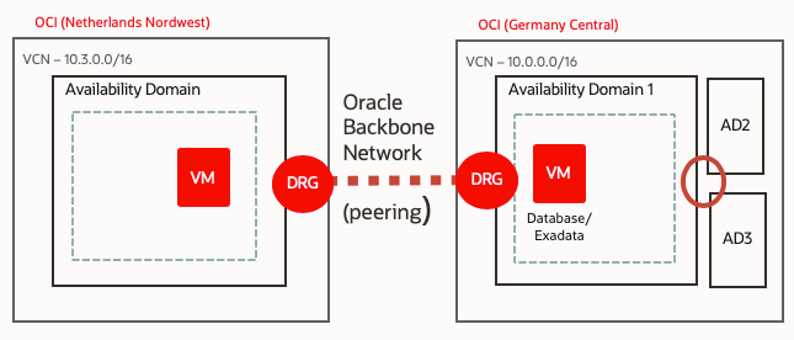

Regions in Oracle Cloud Infrastructure are interconnected through the backbone network. Over this network, you can establish remote virtual cloud network (VCN) peering. For security reasons, many customers don’t want to use the internet for this connectivity. With VCN peering, they don’t have to because the connectivity remains internal.

The major caveat in the setup is that the classless interdomain routing (CIDR) blocks chosen for the virtual cloud networks (VCNs) in both regions can’t overlap, because the VCNs are directly peered with no network address translation in between. You can’t change the CIDR of a VCN after creation. Therefore, you have to consider peering in the network design phase.
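A quick way to check an address plan before creating the VCNs is Python's standard `ipaddress` module. The sketch below is only an illustration: the CIDR blocks and the function name are hypothetical and not taken from the setup shown in this post.

```python
import ipaddress


def vcn_cidrs_overlap(cidr_a: str, cidr_b: str) -> bool:
    """Return True if the two CIDR blocks share at least one address."""
    return ipaddress.ip_network(cidr_a).overlaps(ipaddress.ip_network(cidr_b))


# Hypothetical address plan for the two regions.
frankfurt_vcn = "10.0.0.0/16"
amsterdam_vcn = "10.1.0.0/16"

print(vcn_cidrs_overlap(frankfurt_vcn, amsterdam_vcn))    # False: safe to peer
print(vcn_cidrs_overlap("10.0.0.0/16", "10.0.128.0/17"))  # True: would conflict
```

Running a check like this during the design phase is cheap compared with rebuilding a VCN later.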

If you use an autonomous database, you need to consider how it’s delivered. You have two options when connecting to autonomous databases (both Autonomous Data Warehouse and Autonomous Transaction Processing) through internal networking.

The first option is private endpoints. In this case, you can only establish a connection to the database from the internal network. External access requires a bastion host.

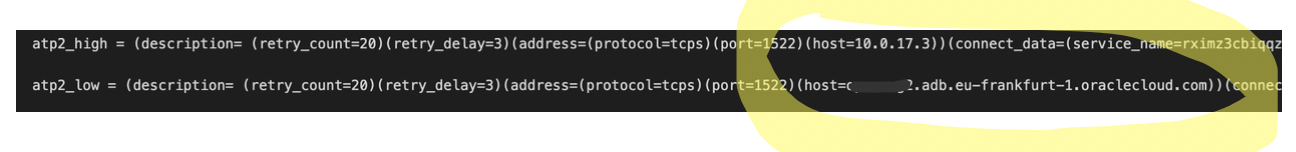

When peering using a private IP, you have to edit the tnsnames.ora file in the database wallet. In the following example, the host name is replaced in the atp2_high entry, while the atp_2low entry keeps the default.

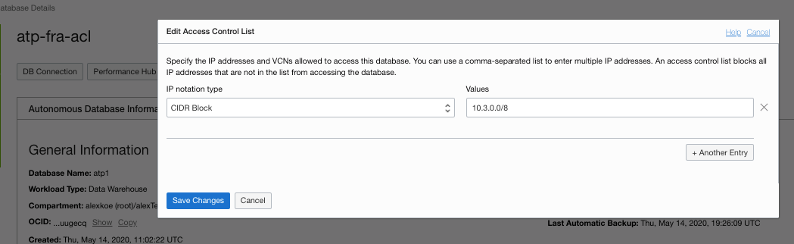
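The edit to tnsnames.ora can also be scripted. The following is a minimal sketch that replaces the `(host=...)` value of a single alias and leaves every other entry untouched. It assumes that each alias sits on one line of the file. The sample entries are heavily shortened, and the host name `db.example.com`, the service names, and the private IP `10.0.1.25` are hypothetical placeholders. Real wallet entries contain more fields.

```python
import re


def set_alias_host(tnsnames_text: str, alias: str, new_host: str) -> str:
    """Replace the (host=...) value in the one-line entry for the given alias."""
    alias_line = re.compile(rf"\s*{re.escape(alias)}\s*=", re.IGNORECASE)
    host_field = re.compile(r"\(host=[^)]*\)", re.IGNORECASE)
    lines = []
    for line in tnsnames_text.splitlines():
        if alias_line.match(line):
            # A function replacement avoids backslash handling in new_host.
            line = host_field.sub(lambda _m: f"(host={new_host})", line)
        lines.append(line)
    return "\n".join(lines)


# Shortened, hypothetical entries; alias names follow the example in the text.
sample = (
    "atp2_high = (description=(address=(protocol=tcps)(port=1522)"
    "(host=db.example.com))(connect_data=(service_name=example_high)))\n"
    "atp_2low = (description=(address=(protocol=tcps)(port=1522)"
    "(host=db.example.com))(connect_data=(service_name=example_low)))"
)

print(set_alias_host(sample, "atp2_high", "10.0.1.25"))
```

Keep a copy of the original wallet file before modifying it, so that the default entries remain available.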

You can also connect autonomous databases through the service gateway. In this case, the autonomous database is protected by an access control list. Use this option if the database is supposed to be reachable from the internet.

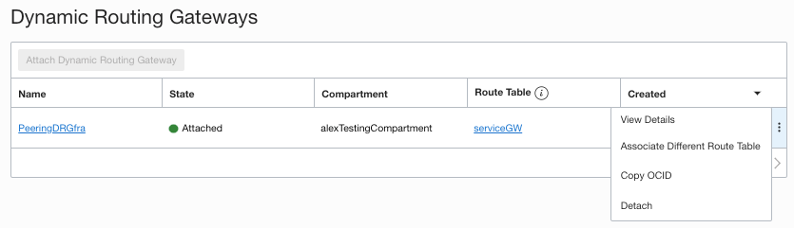

To connect to an autonomous database through a service gateway in a VCN, you have to use transit routing. Here, the dynamic routing gateway (DRG) is given a route to the service gateway, so that traffic arriving from the peered VCN in the other region can reach the database.

Autonomous databases are also protected by access control lists, so you need to grant access to the CIDR of the peered VCN.

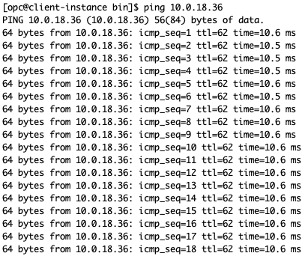

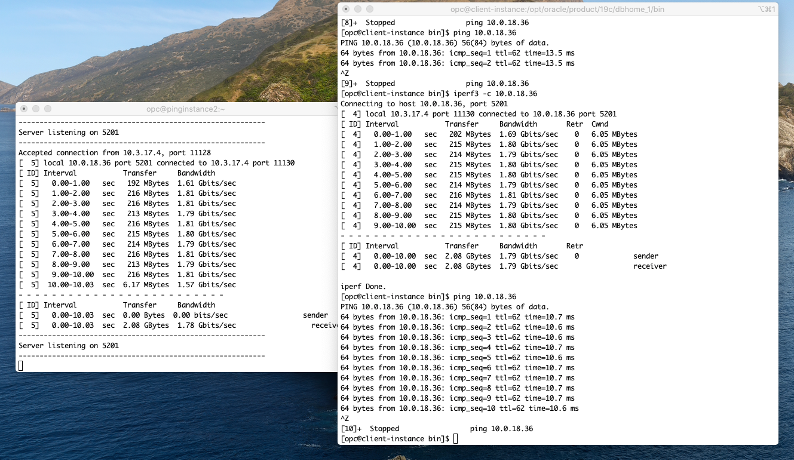

Testing Latency and Bandwidth Between Two Virtual Machines

The ping latency between Amsterdam and Frankfurt measures approximately 10.6 ms.
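If ICMP is blocked or you prefer a scripted measurement, timing a TCP handshake gives a comparable number, because the handshake completes after roughly one network round trip. The following is a minimal sketch using only the Python standard library. The function name is made up for illustration, and the target address in the comment is a hypothetical private IP of a virtual machine in the peered VCN.

```python
import socket
import statistics
import time


def tcp_connect_ms(host: str, port: int, samples: int = 5,
                   timeout: float = 3.0) -> float:
    """Median time in milliseconds to complete a TCP handshake with host:port."""
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        # create_connection returns once the handshake has completed.
        with socket.create_connection((host, port), timeout=timeout):
            elapsed = time.perf_counter() - start
        timings.append(elapsed * 1000.0)
    return statistics.median(timings)


# Example call against a hypothetical VM in the other region (SSH port):
# print(f"{tcp_connect_ms('10.1.0.10', 22):.1f} ms")
```

The median is used instead of the mean so that a single slow attempt doesn't distort the result.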

The bandwidth is around 1.8 Gbits/sec. The maximum can only be reached if the virtual machine shapes used in the scenario permit this bandwidth. That is the case for all bare metal shapes, Intel virtual machines larger than two OCPUs, AMD Rome virtual machines larger than two OCPUs, and AMD EPYC virtual machines larger than four OCPUs.

| Source | Destination | Time (in ms) |
|---|---|---|
| Frankfurt | London | 17.6 |
| Frankfurt | Zurich | 10.3 |
| Amsterdam | London | 7.0 |
| Amsterdam | Zurich | 23.3 |
| Frankfurt | Ashburn | 91.9 |
| Frankfurt | Phoenix | 160 |

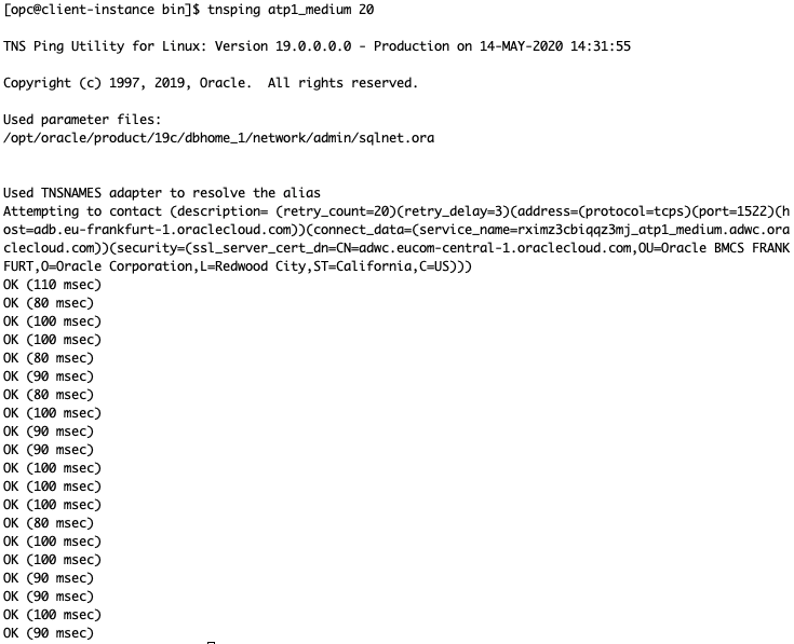

Testing TNSPING from a Virtual Machine in Amsterdam to Autonomous Database in Frankfurt

TNSPING is a network test that measures the round-trip time from a client to the database listener. In this example, a virtual machine in Amsterdam is used as the client and the database is an Autonomous Data Warehouse in Frankfurt.

In this scenario, the TNSPING is between 80 and 100 ms.
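When you repeat the test several times, it helps to summarize the attempts instead of reading individual lines. The following sketch extracts the timings from the `OK (N msec)` lines that tnsping prints for each successful attempt. The sample text is illustrative only and is not a recorded measurement, and the function name is made up for this example.

```python
import re
import statistics


def tnsping_times_ms(output: str) -> list:
    """Collect the millisecond values from all 'OK (N msec)' lines."""
    return [int(value) for value in re.findall(r"OK \((\d+) msec\)", output)]


# Illustrative sample, not a real measurement.
sample_output = """OK (90 msec)
OK (80 msec)
OK (100 msec)"""

times = tnsping_times_ms(sample_output)
print(f"median {statistics.median(times)} ms over {len(times)} attempts")
# prints "median 90 ms over 3 attempts"
```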

Interpreting the Data Results

To better interpret this result, I also tested the same database with a client in Frankfurt. There, the TNSPING is 40–50 ms, about half the time. The ping latency is around 0.25 ms.
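One way to read these numbers is to ask how many additional network round trips would explain the difference between the two regions. The following back-of-the-envelope sketch divides the extra TNSPING time by the extra ping latency. It assumes that the difference is caused by network round trips alone and pairs the lower and upper ends of the two ranges, which is a simplification and not something the measurements confirm.

```python
def implied_round_trips(remote_ms: float, local_ms: float,
                        remote_ping_ms: float, local_ping_ms: float) -> float:
    """Extra TNSPING time divided by the extra latency of one round trip."""
    return (remote_ms - local_ms) / (remote_ping_ms - local_ping_ms)


# Values from the text: TNSPING of 80-100 ms from Amsterdam and 40-50 ms from
# Frankfurt; ping of 10.6 ms between the regions and 0.25 ms within Frankfurt.
low = implied_round_trips(80, 40, 10.6, 0.25)
high = implied_round_trips(100, 50, 10.6, 0.25)
print(f"{low:.1f} to {high:.1f} round trips")  # prints "3.9 to 4.8 round trips"
```

A result of about four to five round trips would be consistent with a connection setup that needs several exchanges between client and listener, but treat this as a rough estimate only.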

The result didn’t show significant variance when the database was scaled up or when more OCPUs were used for the client virtual machine. The TNSPING was also the same regardless of whether the connection went over the service gateway or through private endpoints.

Whether these results are good enough depends on the workload. Because of the high bandwidth and a tolerable latency, the connection is sufficient to support most data warehouse workloads, many mixed workloads, and some OLTP workloads.

In Conclusion

This article explains how to peer two or more regions to connect either two virtual machines or a client and a database. The results show that the scenario is viable for many workloads. The best way to discover how well this scenario works with your workload is to deploy it. I look forward to receiving feedback.