We’re pleased to release an Oracle Cloud Infrastructure (OCI) Resource Manager Stack to deploy IBM Spectrum Scale General Parallel File System (GPFS). Resource Manager offers a web-based graphical user interface (GUI) and internally uses Terraform to install, configure, and manage resources through the infrastructure-as-code model. Resource Manager allows you to share and manage infrastructure configurations and state files across multiple teams and platforms.

This release adds to the Terraform template that we released a few years ago to deploy IBM GPFS on OCI through the Oracle Quickstart GitHub repo.

Architecture

On OCI, you can deploy IBM Spectrum Scale using the following architectures:

- Network shared disk (NSD) architecture
- Direct attached architecture

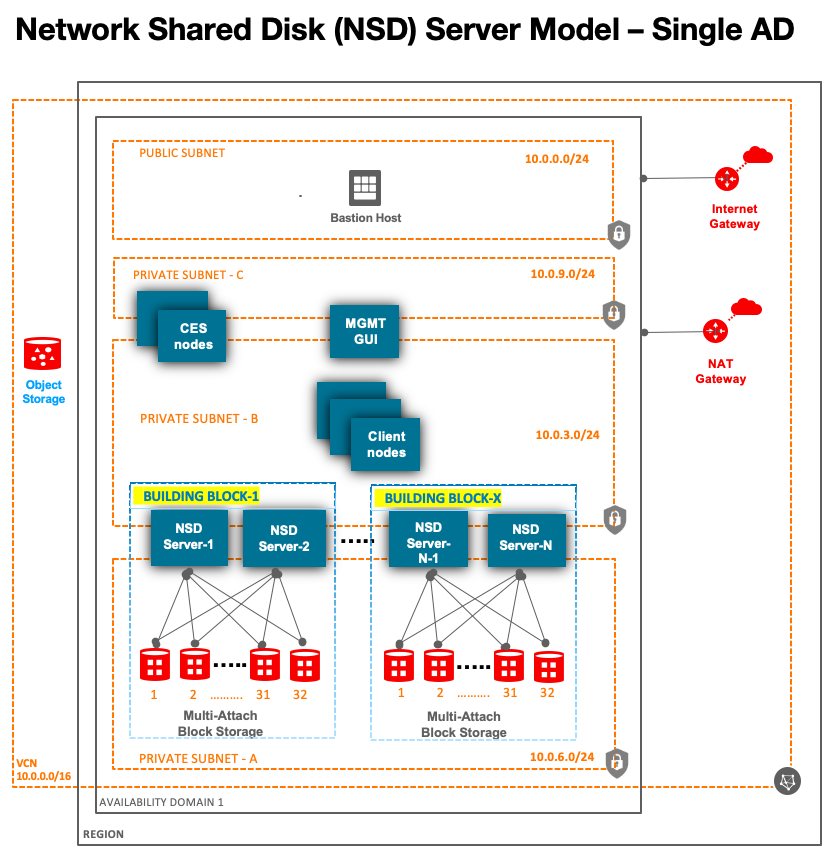

This stack deploys Spectrum Scale with the NSD architecture. In this architecture, a set of shared disks is attached in shareable read/write mode to multiple NSD file servers. A set of two file servers with n shared disks represents the minimum building block. You can build large file system clusters by adding more building blocks.

Figure 1: Network Shared Disk architecture with the building blocks approach

Performance

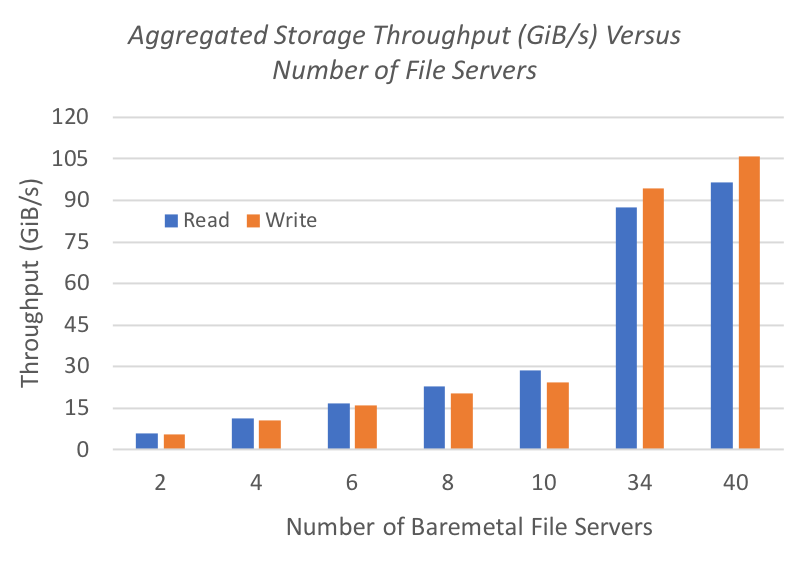

I/O throughput scales linearly as we add more file servers and building blocks. Each file server delivers 2.5 GiB per second, so each building block of two file servers delivers 5 GiB per second.

Figure 2: Aggregate storage throughput (IOR benchmark) versus file server count
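The scaling figures above can be turned into a quick sizing estimate. The following is a minimal sketch that assumes ideal linear scaling at the quoted 2.5 GiB per second per file server. The function name and constants are illustrative and aren't part of the stack.

```python
# Per-file-server throughput quoted in this post (GiB per second).
GIB_PER_SEC_PER_SERVER = 2.5
# Each building block contains two file servers.
SERVERS_PER_BLOCK = 2


def aggregate_throughput_gib_s(building_blocks: int) -> float:
    """Estimate aggregate throughput, assuming ideal linear scaling."""
    if building_blocks < 1:
        raise ValueError("at least one building block is required")
    return building_blocks * SERVERS_PER_BLOCK * GIB_PER_SEC_PER_SERVER


for blocks in (1, 2, 4):
    print(f"{blocks} building block(s): {aggregate_throughput_gib_s(blocks)} GiB/s")
```

Treat the result as an upper bound for planning. Measured throughput depends on the Compute shape, the volume performance tier, and the workload.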

Simple, quick, and easy deployment

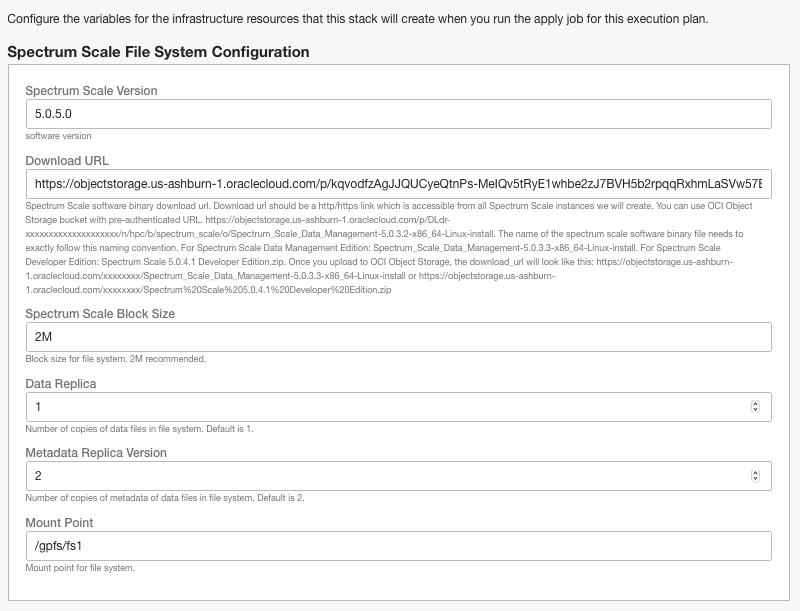

Step 1: Spectrum Scale file system configuration

Let’s begin by selecting which version of Spectrum Scale you plan to deploy, then provide a URL to download the Spectrum Scale binary. To get that URL, upload the Spectrum Scale software binary to a private OCI Object Storage bucket and create a preauthenticated request for it using these steps.

The name of the Spectrum Scale software binary file must exactly follow this naming convention, which is the default name that the file has when you download it. Replace the version number with the one that you’re using.

- Spectrum Scale Data Management Edition: Spectrum_Scale_Data_Management-5.0.3.3-x86_64-Linux-install
- Spectrum Scale Developer Edition: Spectrum Scale 5.0.4.1 Developer Edition.zip

After you upload to OCI Object Storage, the download_url looks like one of the following URLs:

- https://objectstorage.us-ashburn-1.oraclecloud.com/xxxxxxxx/Spectrum_Scale_Data_Management-5.0.3.3-x86_64-Linux-instal
- https://objectstorage.us-ashburn-1.oraclecloud.com/xxxxxxxx/Spectrum%20Scale%205.0.4.1%20Developer%20Edition.zip
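The Developer Edition file name contains spaces, so it appears percent-encoded in the URL. The following sketch shows how a download_url of that shape can be assembled from a prefix and a file name. The `build_download_url` helper is hypothetical, and the `xxxxxxxx` segment is the placeholder from the examples above, not a real path.

```python
from urllib.parse import quote


def build_download_url(prefix: str, file_name: str) -> str:
    """Join a URL prefix and a percent-encoded object name."""
    # safe="" also encodes "/" so the file name stays a single path segment.
    return prefix.rstrip("/") + "/" + quote(file_name, safe="")


# Placeholder prefix copied from the examples above.
PREFIX = "https://objectstorage.us-ashburn-1.oraclecloud.com/xxxxxxxx"

print(build_download_url(PREFIX, "Spectrum Scale 5.0.4.1 Developer Edition.zip"))
```

File names that contain only letters, digits, hyphens, underscores, and periods, such as the Data Management Edition name, pass through unchanged.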

Next, select a block size for the file system. We recommend 2 M on OCI for optimal performance, but you can change it. Spectrum Scale allows block sizes of up to 16 M.

Next, we recommend using two metadata replicas and one data replica at the file system level. A single data replica is sufficient because, on OCI, we use the highly durable and redundant OCI Block Volume service, which already stores multiple replicas of each block.

Last, specify the mount point used to access the Spectrum Scale (GPFS) file system.

Figure 3: File system configuration

Step 2: General deployment configuration

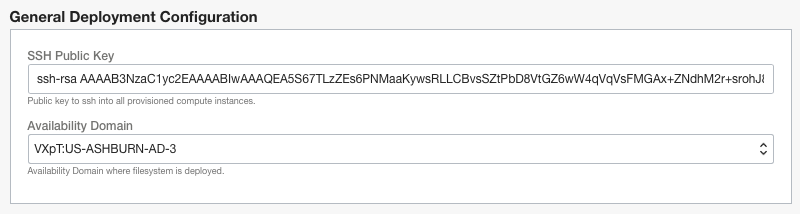

Provide the SSH public key, which is added as an authorized key on all instances so that you can access them through SSH. Then select the OCI availability domain in which to deploy the file system. This stack deploys the file system in a single availability domain. If you need multiple availability domains for your file system deployment, contact us.

Figure 4: General deployment configuration

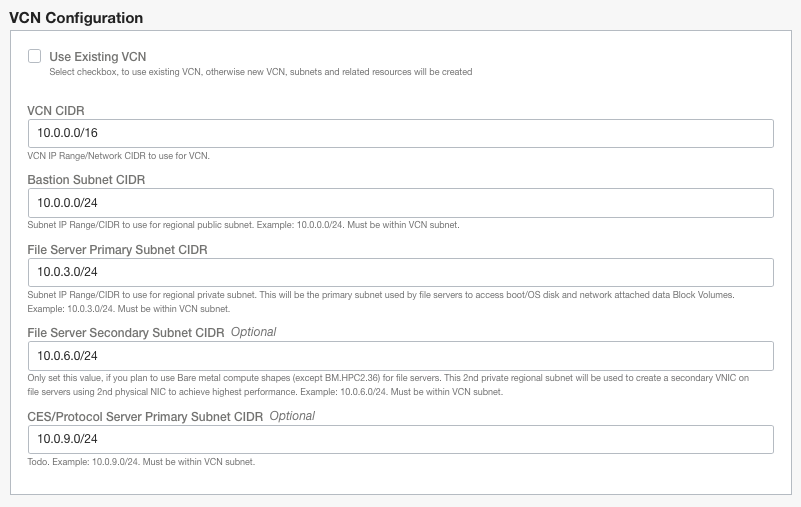

Step 3: Virtual cloud network (VCN) configuration

The stack gives you two choices to select from: new or existing VCN.

New VCN

Create a VCN with one public subnet, three private subnets, and all the associated network resources, such as security list, internet gateway, and NAT gateway.

Figure 5: New VCN configuration

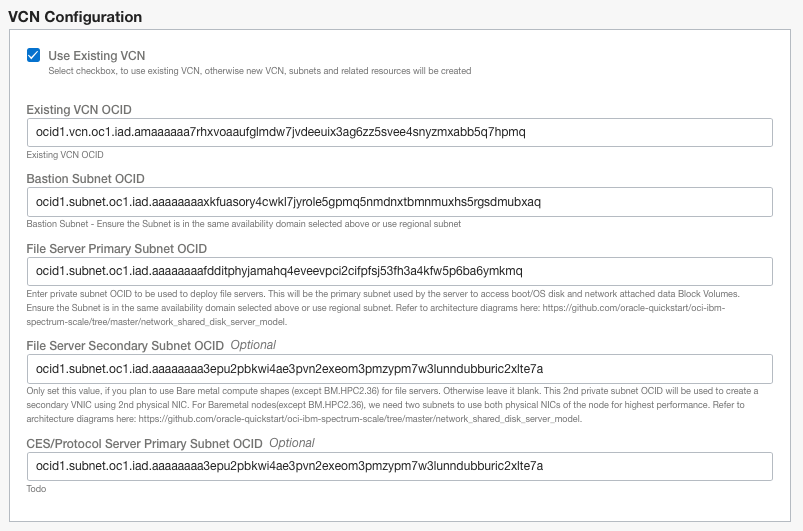

Existing VCN

Before you deploy, ensure that the VCN has three private subnets, one public subnet, an internet gateway, and a NAT gateway. For the three private subnets, allow ingress of all TCP and UDP traffic from the VCN_CIDR range and egress to 0.0.0.0/0 for all protocols. We ask you to open all TCP and UDP traffic from VCN_CIDR to avoid deployment failures caused by missing network access. For production, you can restrict the security list and network traffic to specific ports. For the public subnet, configure the security list to allow SSH access over port 22 from 0.0.0.0/0.

Figure 6: Existing VCN configuration
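To make the required rules for an existing VCN concrete, here is a minimal sketch that models them as plain data and checks whether a given ingress packet would be allowed. The dictionary layout, the function names, and the sample CIDR 10.0.0.0/16 are assumptions for illustration. They are not the OCI API or the stack's Terraform variables.

```python
import ipaddress


def private_subnet_rules(vcn_cidr: str) -> dict:
    """Rules described above for each of the three private subnets."""
    ipaddress.ip_network(vcn_cidr)  # raises ValueError on a malformed CIDR
    return {
        "ingress": [
            {"protocol": "tcp", "source": vcn_cidr},  # all TCP ports
            {"protocol": "udp", "source": vcn_cidr},  # all UDP ports
        ],
        "egress": [{"protocol": "all", "destination": "0.0.0.0/0"}],
    }


def public_subnet_ingress() -> list:
    """SSH-only ingress rule described above for the public subnet."""
    return [{"protocol": "tcp", "source": "0.0.0.0/0", "port": 22}]


def ingress_allowed(rules: list, protocol: str, source_ip: str, port: int) -> bool:
    """Return True if any ingress rule matches the packet."""
    addr = ipaddress.ip_address(source_ip)
    for rule in rules:
        if rule["protocol"] != protocol:
            continue
        if addr not in ipaddress.ip_network(rule["source"]):
            continue
        # A rule without a "port" key applies to every port.
        if rule.get("port") in (None, port):
            return True
    return False


private = private_subnet_rules("10.0.0.0/16")  # hypothetical VCN_CIDR
print(ingress_allowed(private["ingress"], "tcp", "10.0.3.7", 1191))
print(ingress_allowed(public_subnet_ingress(), "tcp", "203.0.113.9", 80))
```

A check like this can help you review a hardened production rule set before you apply it, because a rule that is too narrow shows up as a blocked packet here rather than as a failed deployment.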

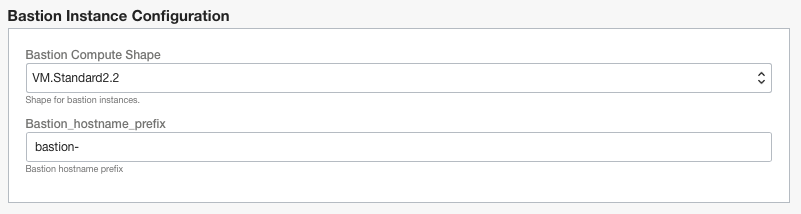

Step 4: Bastion instance configuration

Select a shape for the bastion node and hostname prefix. If the bastion hostname prefix is “bastion-,” then the node name is “bastion-1.”

Figure 7: Bastion node configuration
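The naming rule for the bastion node is simple enough to express in a few lines. This sketch assumes that a 1-based index is appended directly to the prefix, as in the “bastion-1” example. The `node_names` helper is hypothetical, and extending the same rule to more than one node is an assumption.

```python
def node_names(prefix: str, count: int) -> list[str]:
    """Append a 1-based index to the hostname prefix for each node."""
    return [f"{prefix}{index}" for index in range(1, count + 1)]


print(node_names("bastion-", 1))
```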

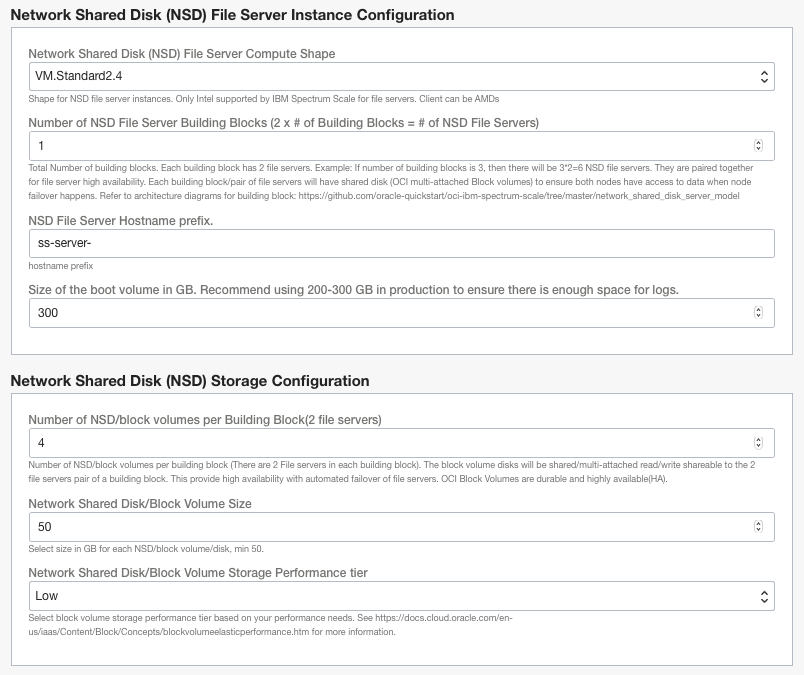

Step 5: File server and storage disk configuration

This step is the most important configuration for the file system. For file servers, you can use any Intel virtual machine (VM) or bare metal Compute shape. For best performance, we recommend bare metal nodes, because they come with two physical NICs and have the most network bandwidth to access data from OCI Block Volumes.

The second field asks for the number of building blocks, not the number of file servers. Each building block has two file servers that form an active-active high availability pair, with a set of n shared OCI block volumes (disks) attached to both of them for automatic failover. OCI block volumes are highly durable and store multiple replicas of data on independent hardware. The file servers of a building block are deployed in different fault domains.
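The relationship between building blocks, file servers, and fault domains can be sketched as follows. The three fault domain names are the standard ones in an OCI availability domain. The rotation policy, the `nsd-server-` prefix, and the `plan_file_servers` helper are assumptions for illustration. The stack's actual placement logic may differ.

```python
from dataclasses import dataclass

# Every OCI availability domain has three fault domains.
FAULT_DOMAINS = ("FAULT-DOMAIN-1", "FAULT-DOMAIN-2", "FAULT-DOMAIN-3")


@dataclass(frozen=True)
class FileServer:
    name: str
    building_block: int
    fault_domain: str


def plan_file_servers(building_blocks: int, prefix: str = "nsd-server-") -> list[FileServer]:
    """Plan two file servers per building block in different fault domains."""
    servers = []
    for block in range(1, building_blocks + 1):
        for slot in range(2):
            index = (block - 1) * 2 + slot + 1
            # The two slots use consecutive fault domains, so a pair never shares one.
            fault_domain = FAULT_DOMAINS[(block - 1 + slot) % len(FAULT_DOMAINS)]
            servers.append(FileServer(f"{prefix}{index}", block, fault_domain))
    return servers


for server in plan_file_servers(2):
    print(server)
```

Entering 2 in the building block field therefore corresponds to four file servers, arranged as two failover pairs.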

For NSD, we use the OCI Block Volumes service, which offers multi-attach (shared disk) volumes that you can attach to multiple file servers in shareable read/write mode. You can attach up to 32 volumes per building block, and each volume can be 50 GB to 32 TB in size. So, you can build a 1-PB capacity file system using just one building block.
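The 1-PB figure follows from 32 volumes of 32 TB each, which is 1,024 TB. The sketch below repeats that arithmetic for other configurations. It assumes binary units (1 TB = 1,024 GB) and reports raw capacity before any file system overhead or replication. The function name is illustrative.

```python
MAX_VOLUMES_PER_BLOCK = 32
MIN_VOLUME_GB = 50
MAX_VOLUME_GB = 32 * 1024  # 32 TB, assuming 1 TB = 1,024 GB


def raw_capacity_tb(building_blocks: int, volumes_per_block: int, volume_size_gb: int) -> float:
    """Raw capacity in TB for the given building block layout."""
    if building_blocks < 1:
        raise ValueError("at least one building block is required")
    if not 1 <= volumes_per_block <= MAX_VOLUMES_PER_BLOCK:
        raise ValueError("volumes per building block must be between 1 and 32")
    if not MIN_VOLUME_GB <= volume_size_gb <= MAX_VOLUME_GB:
        raise ValueError("volume size must be between 50 GB and 32 TB")
    return building_blocks * volumes_per_block * volume_size_gb / 1024


# One building block with 32 volumes of 32 TB each.
print(raw_capacity_tb(1, 32, MAX_VOLUME_GB), "TB")
```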

Block Volumes service comes with three performance tiers to select from: low, balanced, and high. We recommend the balanced tier for most use cases. If your workload requires high IOPS, then select the high-performance tier. For more information on performance tiers, refer to the documentation.

Figure 8: File server and shared disk configuration

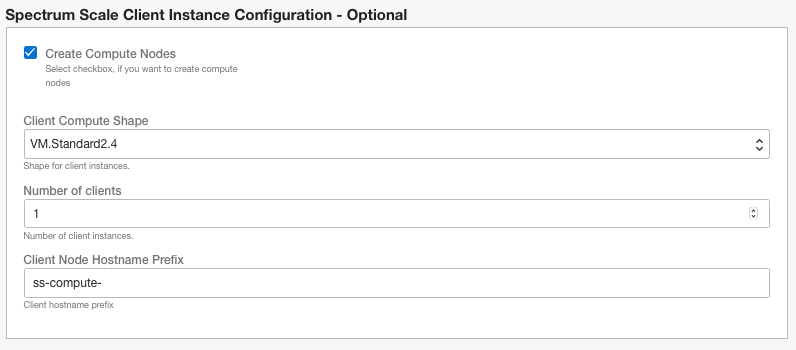

Step 6: Client Compute instance configuration (Optional)

Compute or client instances are clients of the file system. These nodes use native, highly performant Spectrum Scale POSIX-compliant clients to access the file system. Most customers in production prefer not to deploy these nodes as part of the file server deployment. Instead, they build their own GPFS client-only cluster separately and use Spectrum Scale multicluster authorization between the file server cluster and the client-only cluster. So, this step is an optional configuration. By default, the check box is not selected, so no instance is created. If you want to deploy a Compute instance, select the check box.

If you’re in the evaluation or proof-of-concept stage, you can deploy client nodes using this option to simplify deployment and separately deploy client nodes later for production. We have Terraform automation for client-only clusters on GitHub.

Figure 9: Compute client configuration

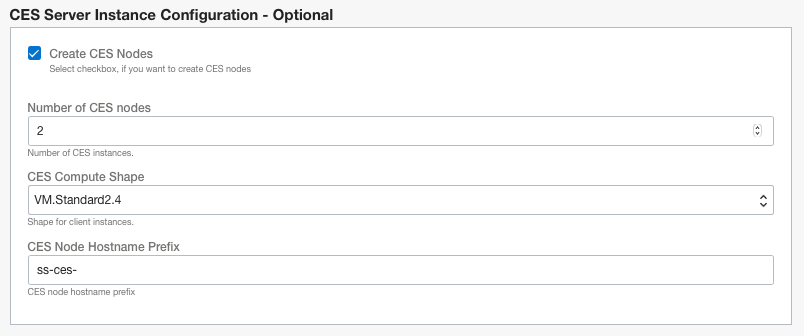

Step 7: CES instance configuration (Optional)

Spectrum Scale recommends running separate Cluster Export Services (CES) nodes to access GPFS through NFS or SMB. Because this configuration is optional, the check box is not selected by default, so no instance is created. If you want to deploy CES instances to allow NFS and SMB access to the GPFS file system, select the check box.

Figure 10: CES configuration

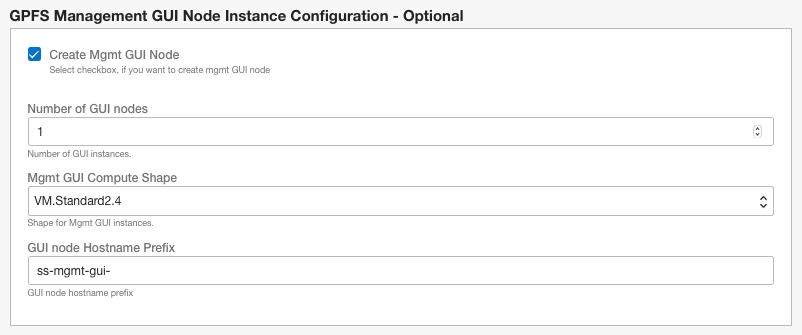

Step 8: Management GUI instance configuration (Optional)

Because this configuration is optional, the check box is not selected by default, so no instance is created. If you want to deploy a GPFS Management GUI instance to monitor and administer GPFS, select the check box. Spectrum Scale also comes preinstalled with CLI tools that you can use to administer the cluster.

Figure 11: Management GUI configuration

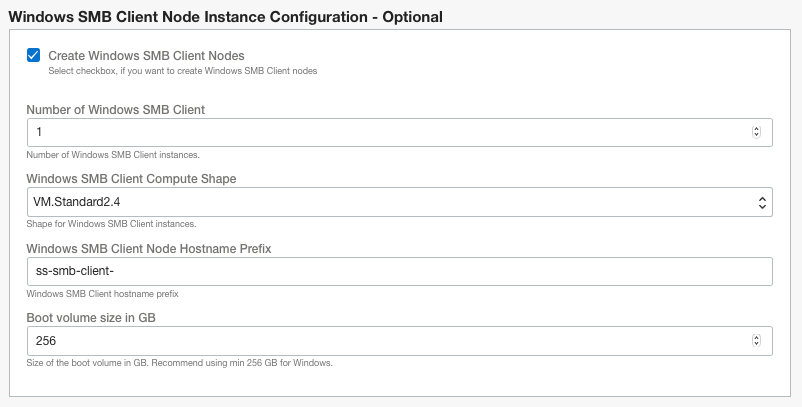

Step 9: Windows SMB client or Compute instance configuration (Optional)

Because this configuration is optional, the check box is not selected by default, so no instance is created. If you want to deploy a Windows instance that accesses the GPFS file system over the SMB protocol, select the check box.

Figure 12: Windows SMB client configuration

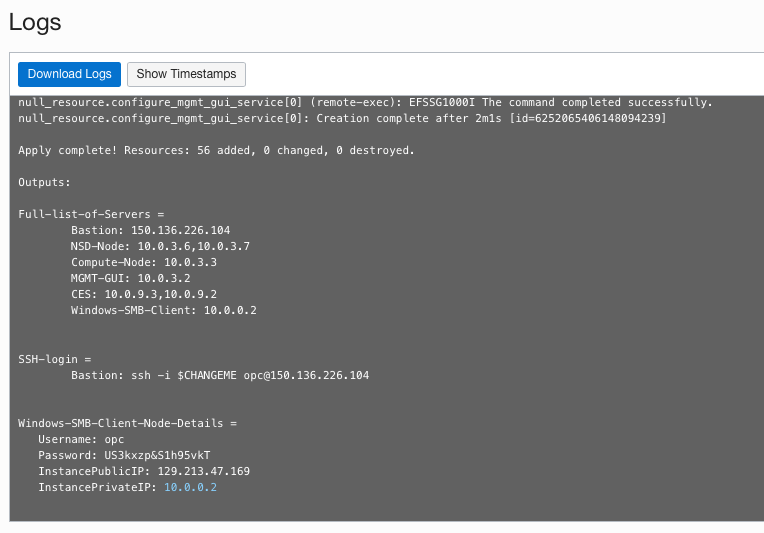

Step 10: Deployment output

When the deployment is complete, the Resource Manager Stack UI in the OCI Console displays the bastion public IP address, the private IP addresses of the various nodes, and, if a Windows SMB client was deployed, its login credentials. You can also download the logs.

Figure 13: Deployment output

Try it yourself

Every customer’s file system requirements are different. You can try the deployment yourself using the Resource Manager Stack for IBM Spectrum Scale.

If you have any questions on how to architect your Spectrum Scale deployment on OCI, contact the OCI HPC team, your Sales Account team, or me.