Oracle Cloud Infrastructure has launched a range of new services that build on our Dyn investment and significantly enhance the native edge management capabilities of our second-generation cloud. These services include:

- Traffic Management Steering Policies

- Health Checks (Edge)

Today, I’d like to talk about how you can use Traffic Management to deploy, control, and optimize globally dispersed application services for your enterprise.

Prerequisites

To work with Traffic Management Steering Policies, you must first delegate a domain (or subdomain) to the Oracle Cloud Infrastructure DNS service. Many organizations delegate a subdomain specifically for global load balancing to help delineate source services (for example, <service>.glb.domain.com). For more information, see Overview of the DNS Service.

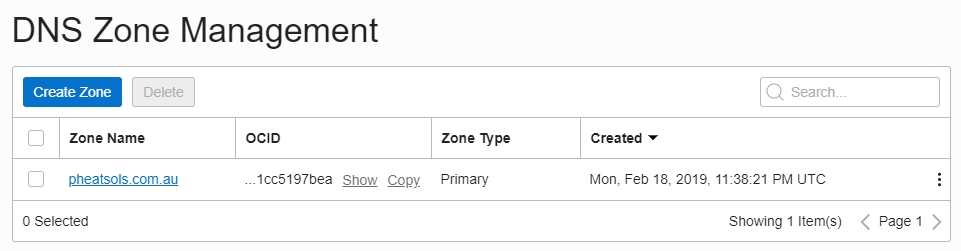

The example in this post uses the pheatsols.com.au domain, which is served via the DNS service.

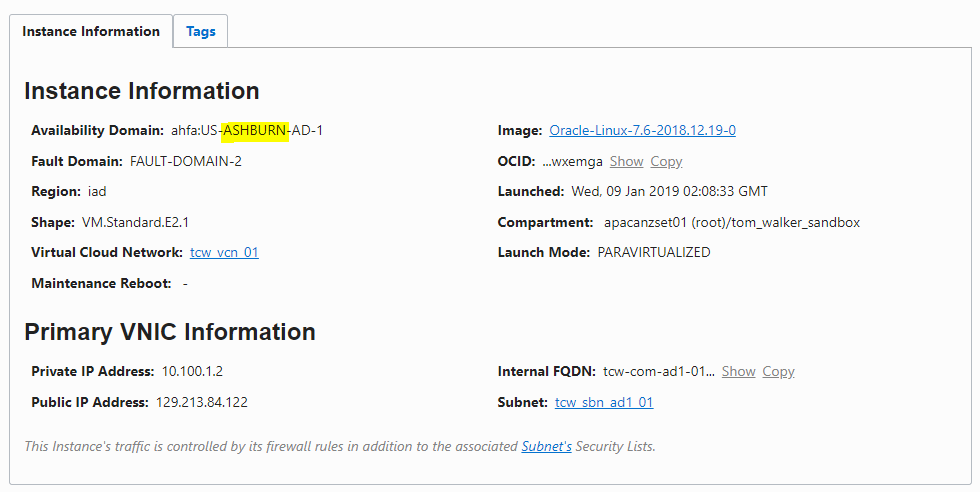

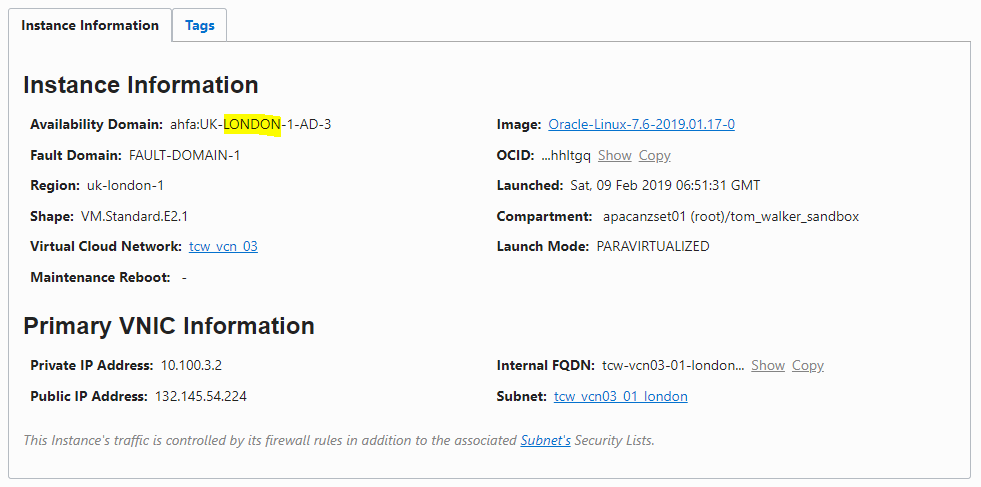

This example also uses two Apache instances, in Ashburn and London, that serve a simple HTML page that identifies their geographic location.

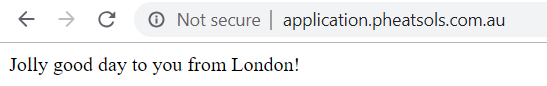

Browsing to each IP address directly returns that server's location message.

Scenario

The preceding setup is a (very) basic example of a web application being served out of two geographically disparate locations. Under a traditional DNS construct, you would have only a few options for directing users to these applications, and each one has inherent limitations:

- Separate Entries: Create a separate DNS entry for each service point (for example, london.pheatsols.com.au and ashburn.pheatsols.com.au) and instruct users to select their closest option. This option puts the responsibility on the user to choose the correct server, which results in a sub-par user experience. In the event of failure, you have to communicate directly with users and readdress any programmatic access to services.

- Single Entry: Create a single DNS entry (for example, application.pheatsols.com.au), nominating a location as the primary site, and manually update the entry in the event of failure or cutover. This option leads to an unbalanced workload, because you are essentially running in active-passive mode. Updated DNS entries can also take some time to propagate after a failure, which can extend an outage.

- Round Robin: Create multiple DNS entries under the same address, which invokes DNS round robin, essentially alternating the resolved server on each lookup. This option provides only intermittent service in the event of node failure (with two nodes, every second request still sends traffic to the dead node). Depending on application architecture, round robin can also cause issues when a session is redirected to a server that has no prior history of your connection.
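To make the round-robin limitation concrete, here is a minimal Python sketch that models plain alternation between the two example servers while one of them is down. It is a toy simulation, not a DNS client: the function name `round_robin_outcomes` and the idea of a known `healthy` set are assumptions for illustration only, because plain round robin has no health awareness at all.

```python
from itertools import cycle


def round_robin_outcomes(servers, healthy, lookups):
    """Return (server, is_up) for each lookup under strict alternation.

    Round robin itself never consults `healthy`; the set is used here
    only to label which answers would have reached a dead node.
    """
    rotation = cycle(servers)
    results = []
    for _ in range(lookups):
        server = next(rotation)
        results.append((server, server in healthy))
    return results


servers = ["london", "ashburn"]
# Assume Ashburn has failed and only London is still serving.
outcomes = round_robin_outcomes(servers, healthy={"london"}, lookups=10)
failed = sum(1 for _, is_up in outcomes if not is_up)
print(f"{failed} of {len(outcomes)} lookups were sent to a dead node")
# prints: 5 of 10 lookups were sent to a dead node
```

With two servers and one failure, half of all lookups still land on the failed node, which is the intermittent service described in the round-robin option.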

Ideally, we’d like to provide a single DNS endpoint to the user that automatically forwards requests to the nearest server but seamlessly fails over to another functioning server in the event of node outage. And with Traffic Management Steering Policies (and the closely related Health Checks service), we can do just that.

Creating a Traffic Management Steering Policy

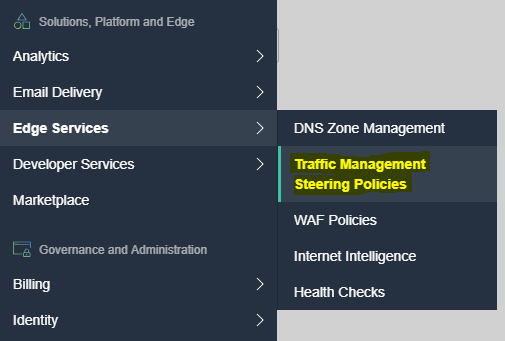

To start, log in to the Oracle Cloud Infrastructure Console. In the navigation menu, select Edge Services, and then select Traffic Management Steering Policies.

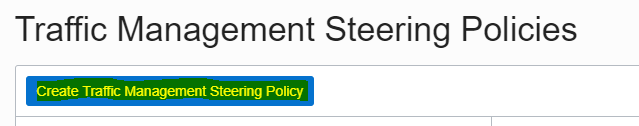

Click Create Traffic Management Steering Policy.

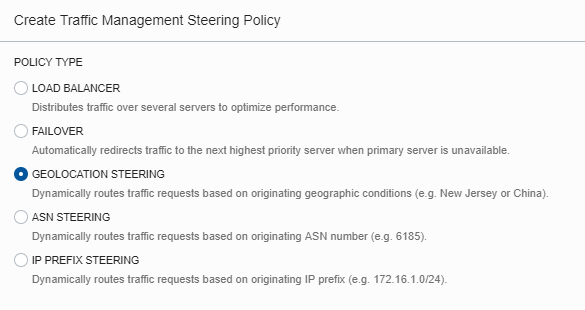

You’re presented with a range of traffic steering policies. Select Geolocation Steering, which dynamically routes traffic based on the geographic origin of each request. (The other policy types are defined in the “Other Policy Types” section later in this post.)

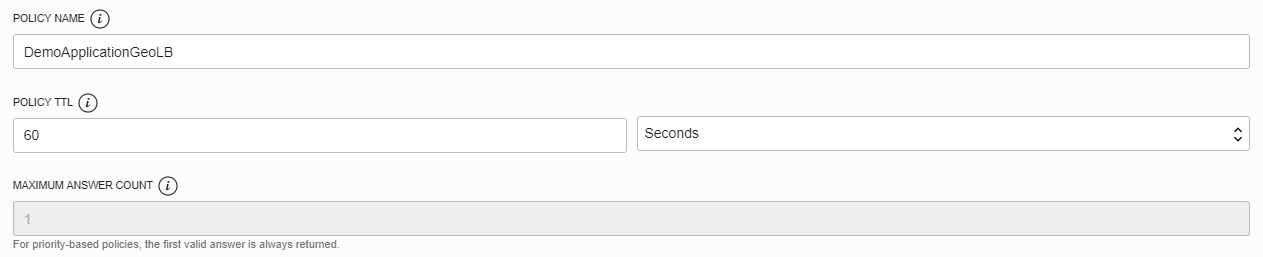

Enter a name for the policy and set the TTL. The TTL defines how long the requesting DNS servers cache the returned entry. Balance this number between the criticality of your application (the lower the TTL, the shorter the time a potentially “down” server address stays cached) and the chattiness of your DNS server (too short, and your DNS server and client will be working overtime to externally resolve queries). The default of 60 seconds works for this example.
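The TTL trade-off can be put into rough numbers. The following Python sketch is a back-of-the-envelope model under stated assumptions: each caching resolver re-queries exactly once per TTL for a name that is in constant demand, and a stale answer can survive in a cache for at most one TTL. It ignores the time the health check needs to detect a failure and any resolver that does not honor the TTL. The function name `ttl_tradeoff` is hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def ttl_tradeoff(ttl_seconds, resolvers=1):
    """Estimate cache staleness and query volume for a given TTL.

    Simplified model: every resolver refreshes the record once per TTL.
    """
    return {
        "ttl_seconds": ttl_seconds,
        # Longest time a resolver can keep serving an outdated answer.
        "max_stale_seconds": ttl_seconds,
        # Refresh queries reaching the authoritative DNS service per day.
        "upstream_queries_per_day": resolvers * SECONDS_PER_DAY // ttl_seconds,
    }


for ttl in (30, 60, 300, 3600):
    print(ttl_tradeoff(ttl))
```

Under this model, the 60-second default means a single busy resolver refreshes the record 1,440 times a day, and a failed server's address lingers in its cache for at most about a minute.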

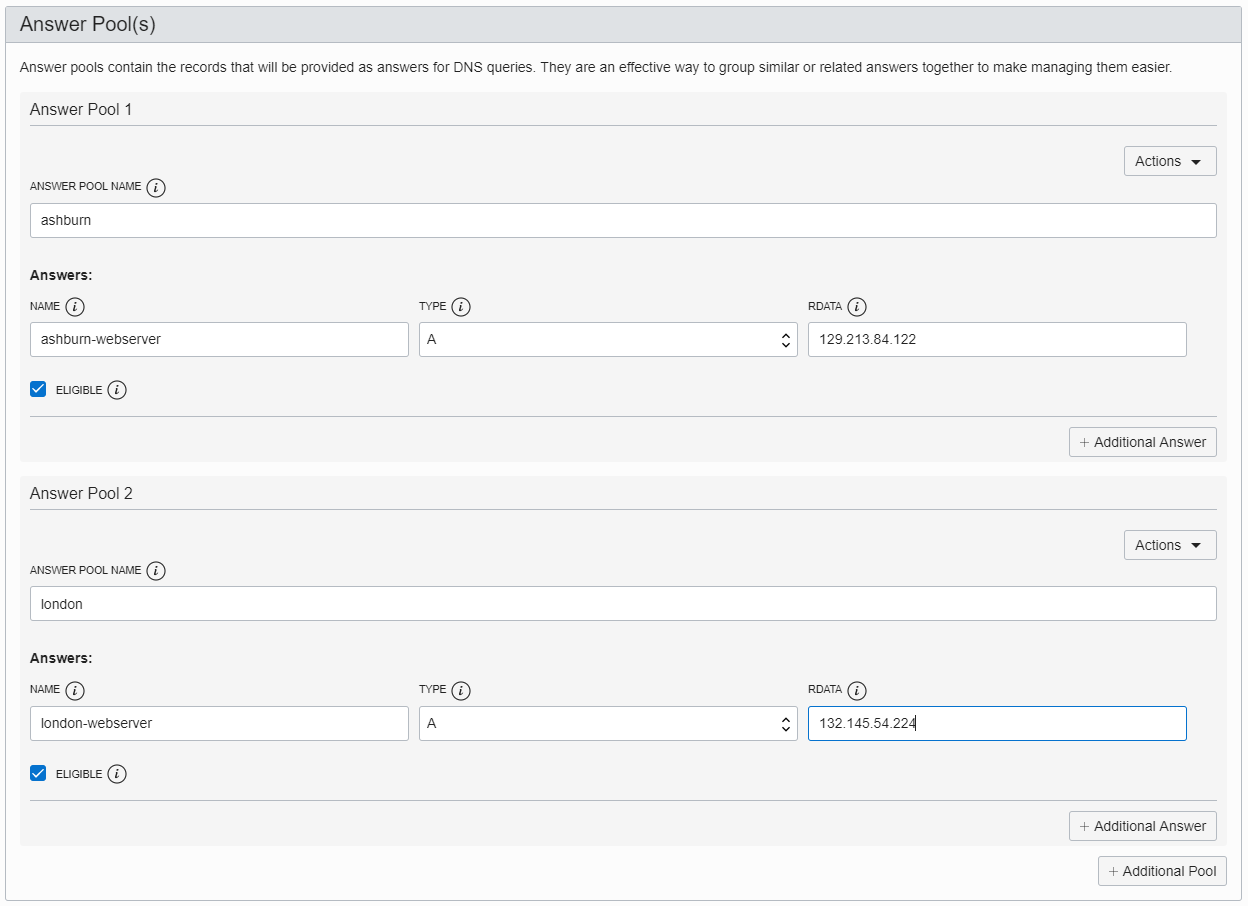

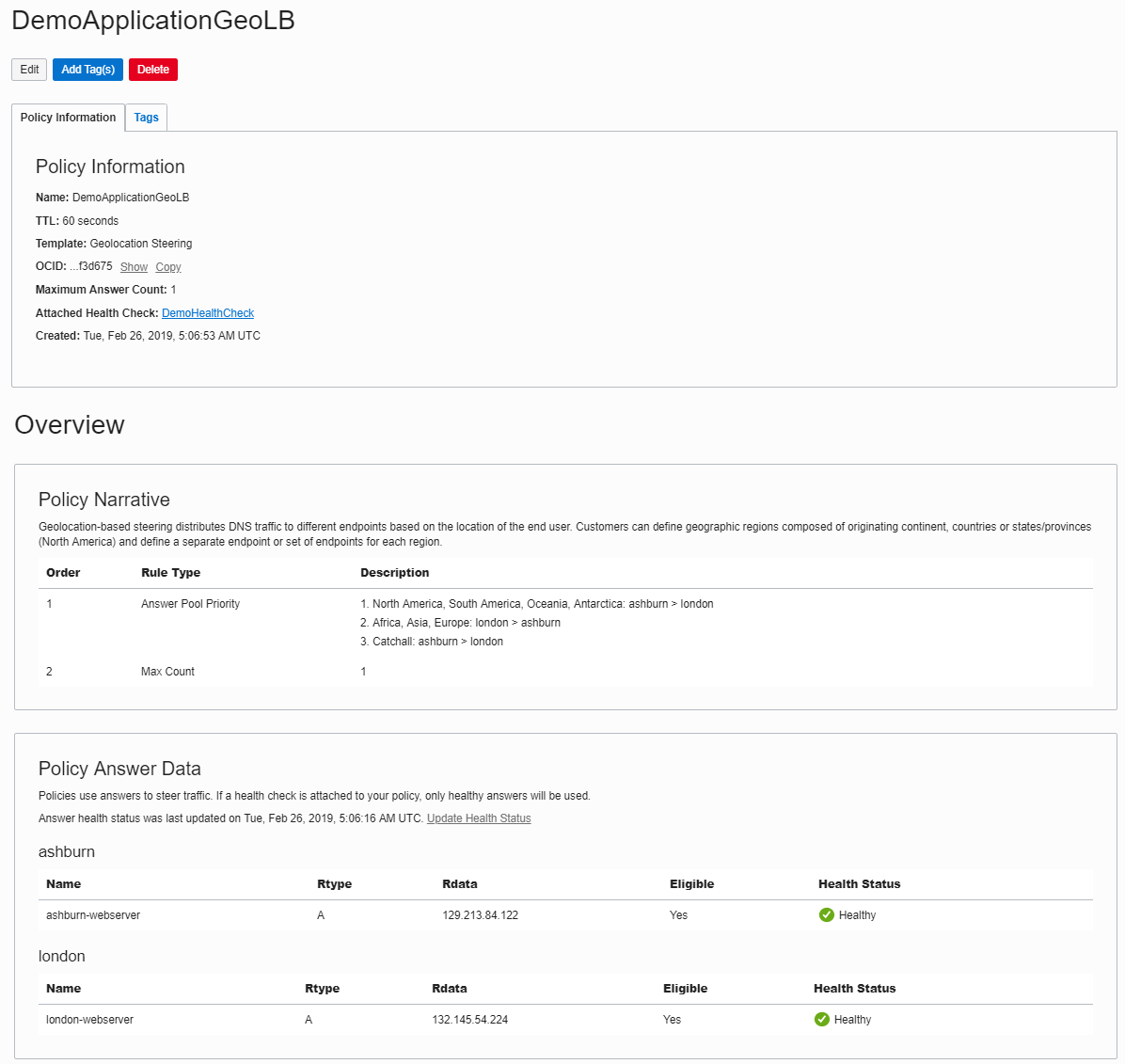

Now define the answer pools, which are server groups based (in this case) on geographic location. This example has two pools, ashburn and london, with a single server in each. If you have multiple servers in each location, you can optionally mark a server as ineligible if you are performing maintenance on that server and don’t want to return that result.

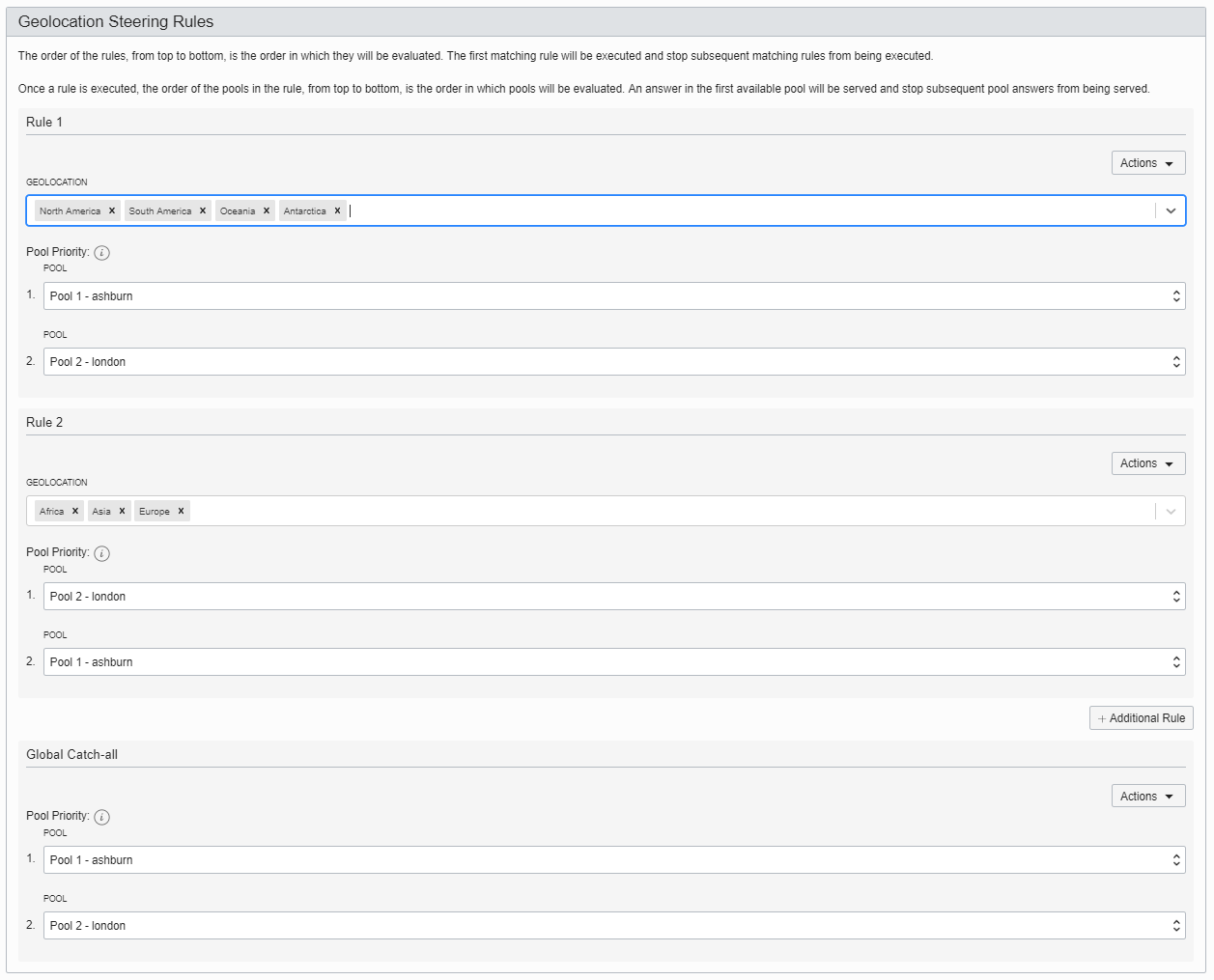

It’s time to set the all-important geolocation steering policies. Rules are parsed in order from top to bottom. In this example, Rule 1 directs traffic from North America, South America, Oceania, and Antarctica to Ashburn first, then fails over to London. (Geolocation can also be defined at a country level, or a state level for the US and Canada.) Rule 2 directs traffic from Africa, Asia, and Europe to London first. A Global Catch-all (in the event no rules are matched) mimics Rule 1.
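The rule ordering described above can be modelled in a few lines of Python. This is only a sketch of the decision logic, not how the service is implemented. The names `RULES`, `CATCH_ALL`, and `steer` are hypothetical. The failover order for Rule 2 (London, then Ashburn) is an assumption that mirrors Rule 1, and returning `None` when no pool is healthy is a placeholder rather than documented behavior.

```python
# Rules are checked from top to bottom; the first matching rule wins.
RULES = [
    {
        "name": "Rule 1",
        "regions": {"North America", "South America", "Oceania", "Antarctica"},
        "pools": ["ashburn", "london"],  # Ashburn first, then London
    },
    {
        "name": "Rule 2",
        "regions": {"Africa", "Asia", "Europe"},
        "pools": ["london", "ashburn"],  # London first; failover order assumed
    },
]
CATCH_ALL = ["ashburn", "london"]  # mimics Rule 1


def steer(region, healthy_pools):
    """Return the first healthy pool for the caller's region, or None."""
    pools = CATCH_ALL
    for rule in RULES:
        if region in rule["regions"]:
            pools = rule["pools"]
            break
    for pool in pools:
        if pool in healthy_pools:
            return pool
    return None


print(steer("Oceania", {"ashburn", "london"}))  # ashburn
print(steer("Oceania", {"london"}))             # london (Ashburn is down)
print(steer("Europe", {"ashburn", "london"}))   # london
```

A request from Oceania goes to Ashburn while it is healthy and falls through to London otherwise, which matches the failover test later in this post.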

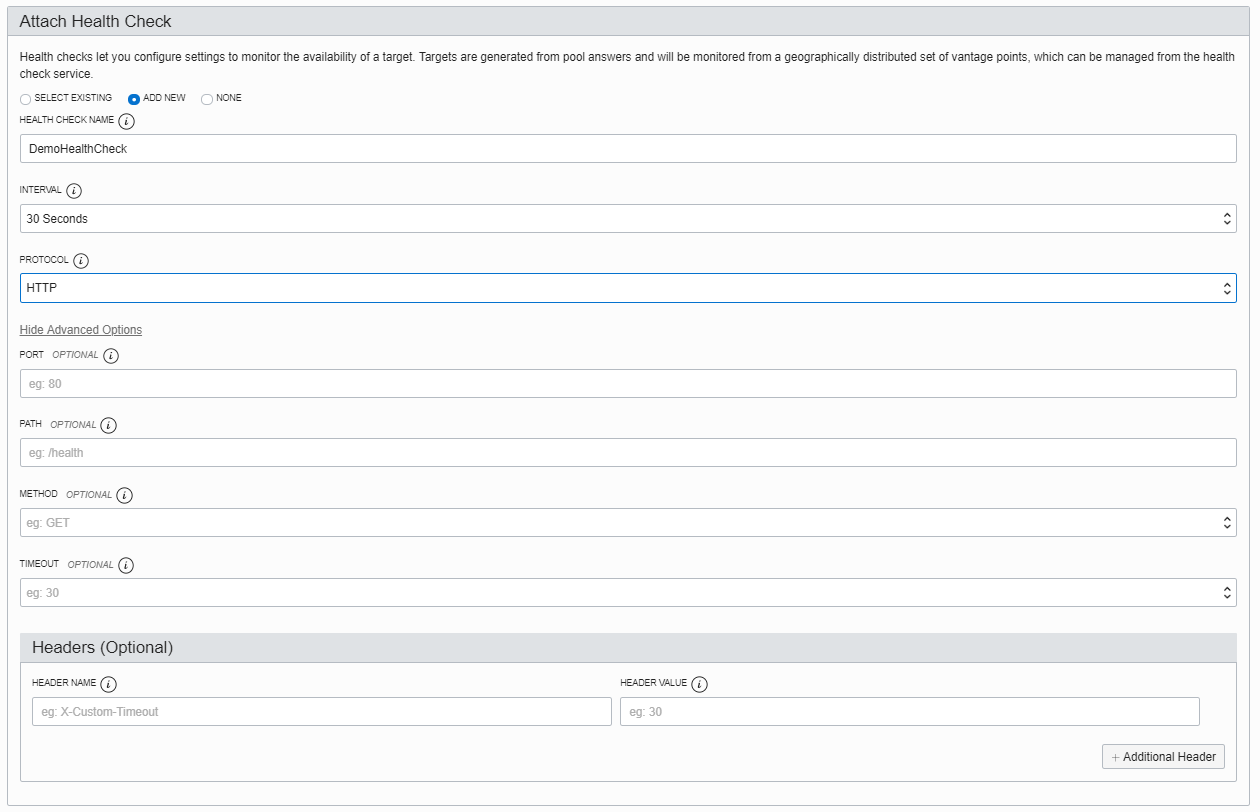

Assign a health check, which removes servers from the pool when they are inactive (and returns them when they come back online), in support of an “always available” architecture. This example sets up a basic port 80 GET on the root address of the host, but you can also define other ports, paths, headers, and methods.
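If you want to reproduce the same kind of probe by hand, the following Python sketch performs a basic HTTP GET against the root path on port 80 using only the standard library. It approximates the idea of the check and is not the Health Checks service itself: the function name `http_health_check` is hypothetical, and treating any 2xx or 3xx status as healthy is an assumption.

```python
import http.client


def http_health_check(host, port=80, path="/", method="GET", timeout=5.0):
    """Return True if the host answers the request with a 2xx/3xx status.

    Connection failures, timeouts, and malformed responses all count
    as unhealthy.
    """
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request(method, path)
        status = conn.getresponse().status
        return 200 <= status < 400
    except (OSError, http.client.HTTPException):
        return False
    finally:
        conn.close()
```

You could call it as `http_health_check("192.0.2.10")`, where the address is a placeholder from the documentation range; substitute the public IP address of each Apache instance.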

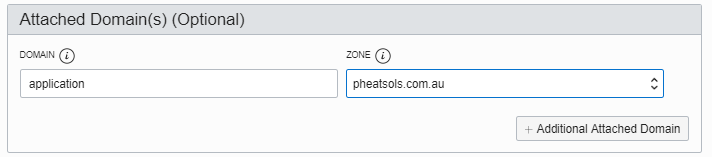

Associate the policy with a DNS entry. This example applies the policy to application.pheatsols.com.au. Any previous static entry for the application is superseded by this dynamic policy.

Finally, click Create Policy. A summary of the policy is displayed. In this example, the Policy Answer Data section lists the health of both servers as Healthy.

Now that the policy is created, it’s time to test it.

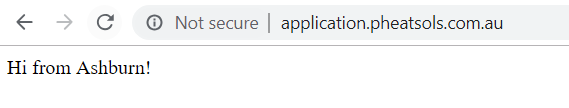

Because I am based in Australia, browsing to application.pheatsols.com.au should direct me to Ashburn, and it does.

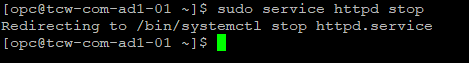

To test the failover, I stop the Apache service in Ashburn.

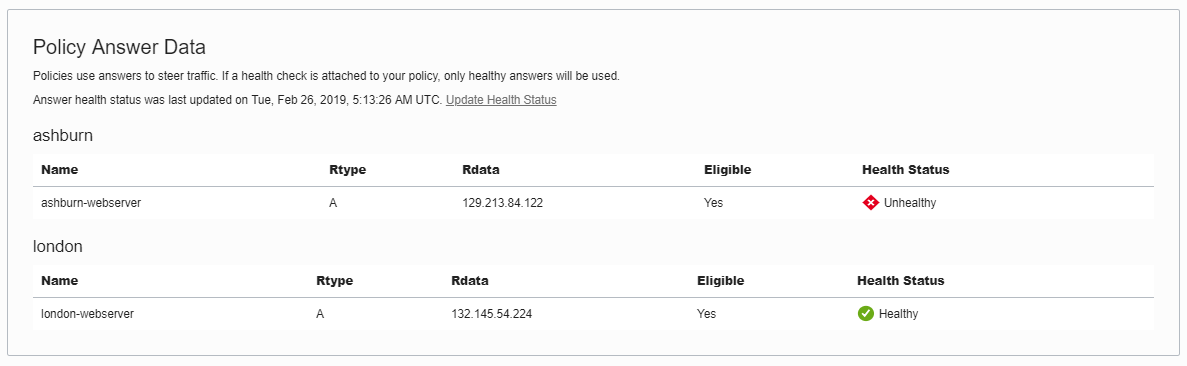

As a result, the Policy Answer Data section of the policy now reports Ashburn as Unhealthy.

And browsing to application.pheatsols.com.au now seamlessly resolves to London.

Success!

Other Policy Types

Although this post focuses on geolocation steering, the following other Traffic Management Steering Policies are available:

- Load Balancer: Allows you to set up a weighted, health-checked load balancer between multiple backend nodes or servers

- Failover: Establishes a sequential, health-check-based answer to support a simple high-availability (HA) architecture

- ASN Steering: Distributes traffic based on the ASN of the origin (used in BGP routing)

- IP Prefix Steering: Distributes traffic based on the IP address or range of the origin
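As an illustration of the matching idea behind IP Prefix Steering, here is a short Python sketch built on the standard `ipaddress` module. It shows only the concept of mapping a source address to an answer pool. The prefixes (taken from the documentation-reserved ranges), the pool names, and the function `pool_for` are all hypothetical.

```python
import ipaddress

# Ordered list of (prefix, pool); the first matching prefix wins.
PREFIX_RULES = [
    (ipaddress.ip_network("203.0.113.0/24"), "internal-pool"),
    (ipaddress.ip_network("198.51.100.0/24"), "partner-pool"),
]
DEFAULT_POOL = "public-pool"


def pool_for(client_ip):
    """Return the answer pool for the address of the querying client."""
    addr = ipaddress.ip_address(client_ip)
    for network, pool in PREFIX_RULES:
        if addr in network:
            return pool
    return DEFAULT_POOL


print(pool_for("203.0.113.7"))   # internal-pool
print(pool_for("198.51.100.20")) # partner-pool
print(pool_for("192.0.2.1"))     # public-pool
```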

Use Cases

The practical use of Traffic Management Steering Policies extends well beyond simple HA and load balancing. The following scenarios are now simplified or enhanced by our fully integrated second-generation cloud:

- Cloud migration: Weighted load balancing supports controlled migration from your data center to Oracle Cloud Infrastructure servers. You can steer a small amount of traffic to your new resources in the cloud to verify everything is working as expected. You can then increase the ratios until you are comfortable with fully migrating all traffic to the cloud.

- Supporting hybrid environments: Because Traffic Management Steering Policies is an agnostic service, you can use it to steer traffic to any publicly exposed (internet resolvable) resources, including other cloud providers and enterprise data centers.

- Pilot user testing: Leveraging the IP Prefix Steering policy, you can configure policies to serve different responses for your internal users and your external users.

- Geofencing and partner-only access: Limit access to your services to certain geographical locations and partner organizations, optionally serving a “This content is not available from your location” message as a catch-all.
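The cloud migration scenario above relies on weighted answers. The following Python sketch shows how a 95/5 split behaves over many lookups, using proportional random selection. This is an assumed model for illustration; the post does not describe the algorithm the service uses to apply weights. The pool names, the weights, and the function `pick_answer` are hypothetical.

```python
import random
from collections import Counter


def pick_answer(weights, rng):
    """Pick one pool name with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


# Start the migration by steering roughly 5% of lookups to the cloud.
weights = {"datacenter": 95, "oci": 5}
rng = random.Random(42)  # fixed seed so repeated runs are comparable
counts = Counter(pick_answer(weights, rng) for _ in range(10_000))
print(counts)
```

Raising the `oci` weight in steps (for example 5, 25, 50, 100) corresponds to increasing the ratios until all traffic is served from the cloud.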

To learn more about our integrated suite of Edge services, read the full documentation and the product launch announcement.