One of the first “hello world” challenges in computer vision is creating an image classification system. While interesting, the use cases for such a solution are limited, because many practical image and video sources contain multiple objects. Classifying an image as containing a person when trying to analyze Times Square in New York City, for example, has limited practical use. More interesting is counting the number of people in Times Square at any given moment. The solution to this problem is generalized as object detection and segmentation. New models are typically benchmarked against the Common Objects in Context (COCO) dataset.

Object detection and segmentation

Computer vision is one of the fastest-growing segments of artificial intelligence. The 2020 market size of $11 billion is expanding at a compound annual growth rate (CAGR) of 7.6% to reach an estimated $19 billion by 2027. The excitement in the area is not surprising, because Hollywood, researchers, and business people alike have been wondering for decades what’s possible if computers could analyze video feeds in detail, automatically.

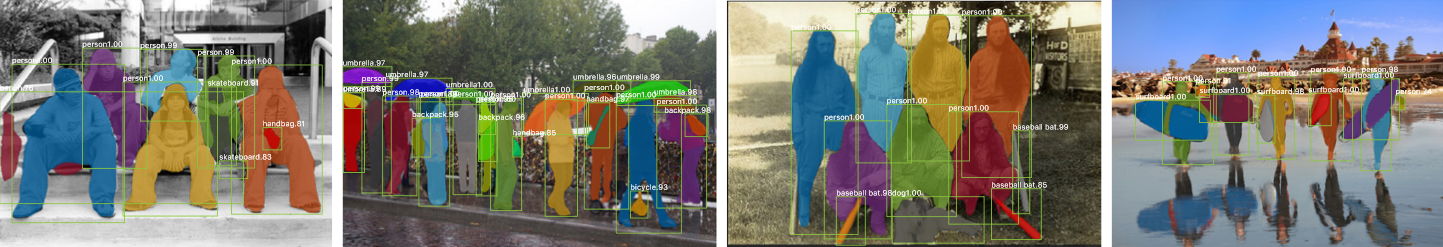

Fast R-CNN, Faster R-CNN, and now Mask R-CNN are Facebook Research’s contributions to this space. These models have consistently performed well against the COCO dataset, which is a benchmark dataset for the task of object detection and segmentation. Harnessing the power of NVIDIA GPUs, Oracle Cloud Infrastructure (OCI) customers can deploy a sample implementation of Mask R-CNN with Detectron2, a well-documented library for getting up and running with object detection and segmentation in images.

From a human perspective, images and videos contain a background, foreground, and subjects such as people, animals, or cars. However, computers only see a set of instructions for what colors to display on the screen. For a computer to identify the target subjects in this unstructured data, the model needs to first draw a rectangular bounding box around a subject, and then use the data in that box to classify the subject and trace its shape. For this reason, training datasets for the task of object detection and segmentation contain the following data:

- The raw image
- Bounding boxes for all subjects in that image
- Mask outlining the edges of the subject
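To make the three pieces of data concrete, here is a minimal sketch of what one training record might look like in a COCO-style layout. The image name and all coordinates are made up for illustration; the field names follow the COCO annotation convention, where a bounding box is stored as `[x_min, y_min, width, height]` and a mask can be stored as a polygon of flat `x, y` vertex pairs. The `bbox_from_polygons` helper is a hypothetical name, included only to show how the box relates to the mask.

```python
# Hypothetical, hand-written record in a COCO-style layout (values are invented).
image = {"id": 1, "file_name": "times_square.jpg", "width": 640, "height": 480}

annotation = {
    "image_id": 1,
    "category_id": 1,  # 1 is "person" in the COCO category list
    # Mask as a single polygon: flat list of x, y vertex coordinates in pixels.
    "segmentation": [[100.0, 50.0, 180.0, 50.0, 200.0, 300.0, 90.0, 300.0]],
    # Bounding box as [x_min, y_min, width, height].
    "bbox": [90.0, 50.0, 110.0, 250.0],
}


def bbox_from_polygons(polygons):
    """Return the tightest [x_min, y_min, width, height] box around the polygons."""
    xs = [x for poly in polygons for x in poly[0::2]]
    ys = [y for poly in polygons for y in poly[1::2]]
    x_min, y_min = min(xs), min(ys)
    return [x_min, y_min, max(xs) - x_min, max(ys) - y_min]


# The stored box should match the box implied by the mask outline.
print(bbox_from_polygons(annotation["segmentation"]) == annotation["bbox"])
```

The bounding box is redundant with the mask in this toy record, but datasets store both because the detector is trained on the box and the segmentation head is trained on the mask.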

If you’d like to understand how these tasks apply for various situations, the COCO dataset has a handy tool where you can explore the dataset by filtering for various subjects.

Fine-tuning

While it’s true that training artificial intelligence models requires significant computational power, a new mode of development has emerged. It utilizes a performant, general, pretrained model as a backbone that’s tuned for a specific task, which significantly reduces end-to-end training times. Mask R-CNN uses a fully trained ResNet model with either 50 or 101 layers as a starting point.

Training a ResNet 50 model can take about 12 hours on a system with eight V100 GPUs. So, the ability to download the base model cuts down on development lifecycle timelines dramatically. Mask R-CNN only requires training against a dataset consisting of the specific object to be detected. Depending on the complexity of the target, fine-tuning might only take a few hundred images, compared to tens of thousands.
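As a rough sketch of what fine-tuning from a pretrained backbone can look like with Detectron2, the snippet below starts from the model zoo's Mask R-CNN ResNet-50 configuration and overrides only a few settings. The dataset name `my_dataset_train`, the single-class setup, and the iteration count are assumptions for illustration, and the dataset would need to be registered with Detectron2 beforehand. The helper names `fine_tune_overrides` and `build_cfg` are hypothetical.

```python
# Model zoo entry for Mask R-CNN with a ResNet-50 FPN backbone pretrained on COCO.
ZOO_CONFIG = "COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml"


def fine_tune_overrides(train_dataset, num_classes, max_iter=1000):
    """Return overrides as a flat [key, value, ...] list for CfgNode.merge_from_list."""
    return [
        "DATASETS.TRAIN", (train_dataset,),          # assumed to be registered already
        "DATASETS.TEST", (),
        "MODEL.ROI_HEADS.NUM_CLASSES", num_classes,  # only the target object classes
        "SOLVER.MAX_ITER", max_iter,                 # short schedule; tune for your data
    ]


def build_cfg(train_dataset, num_classes, max_iter=1000):
    """Build a Detectron2 config that starts from pretrained Mask R-CNN weights."""
    # Imported here so the helper above can be used without Detectron2 installed.
    from detectron2 import model_zoo
    from detectron2.config import get_cfg

    cfg = get_cfg()
    cfg.merge_from_file(model_zoo.get_config_file(ZOO_CONFIG))
    cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url(ZOO_CONFIG)  # pretrained start
    cfg.merge_from_list(fine_tune_overrides(train_dataset, num_classes, max_iter))
    return cfg


# Example overrides for a hypothetical single-class dataset.
print(fine_tune_overrides("my_dataset_train", num_classes=1, max_iter=300))
```

With Detectron2 installed, the resulting config could be handed to `DefaultTrainer` from `detectron2.engine` to run the training loop. Suitable values for the iteration count and learning rate depend on the dataset and are not specified here.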

Try it yourself

Oracle Cloud Infrastructure offers a range of GPU shapes that are ideal for training Mask R-CNN workloads. All our services are well documented, and you can follow this guide to create a GPU instance. We have also benchmarked our bare metal V100 system (BM.GPU3.8) and A100 system (BM.GPU4.8) on Mask R-CNN to ensure that our performance matches that of on-premises systems. The newest A100 system processes images twice as fast as the V100 system.

Once a GPU-powered instance is created, follow the Detectron2 installation instructions, and then run training and evaluation from the command line. Detectron2 can already detect some of the most interesting subjects, so if you’re looking to develop a computer vision solution, it can serve as a prebuilt launch pad.
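Returning to the Times Square example, counting people comes down to tallying the detections a model returns for a frame. The sketch below assumes the detections have already been converted into plain `(label, score)` pairs. Detectron2's predictor output exposes predicted classes and scores per instance, but the conversion to label names is left out here, and the `count_detections` helper and the 0.5 score threshold are illustrative assumptions.

```python
from collections import Counter


def count_detections(detections, min_score=0.5):
    """Count detected objects per label, ignoring low-confidence detections."""
    return Counter(label for label, score in detections if score >= min_score)


# Hypothetical detections for one frame, as (label, confidence score) pairs.
frame = [("person", 0.98), ("person", 0.91), ("car", 0.88), ("person", 0.42)]

counts = count_detections(frame)
print(counts["person"])  # 2: the 0.42 detection falls below the threshold
```

Raising or lowering `min_score` trades missed people against false positives, so the threshold is worth validating on footage from the target scene.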