Oracle collaborated with ThinkParQ to provide high-performance file servers at scale on Oracle Cloud Infrastructure. Established as a spinoff from the Fraunhofer Center for High-Performance Computing, ThinkParQ drives the research and development of BeeGFS and works closely with system integrators to create turnkey solutions.

BeeGFS from ThinkParQ is a leading parallel cluster file system developed with a strong focus on input-output performance and designed for easy installation and management. BeeGFS transparently spreads user data across multiple servers. By increasing the number of servers and disks in the system, you can scale the performance and capacity of the file system, from small clusters up to enterprise-class systems with thousands of nodes.
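To make "spreads user data across multiple servers" concrete, here is a minimal sketch of round-robin (RAID 0 style) striping, the general technique behind this kind of data distribution. It assumes a file's layout is described by a chunk size plus an ordered list of storage target IDs, with chunks assigned to targets in turn. The `StripePattern` type, the 512 KiB chunk size, and the target IDs are hypothetical illustrations, not BeeGFS internals.

```python
"""Sketch of round-robin (RAID 0 style) striping of one file across storage targets.

Assumptions (illustrative, not taken from BeeGFS source code):
  * a file's stripe pattern is a chunk size plus an ordered list of target IDs
  * chunk i is stored on targets[i % len(targets)]
"""
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class StripePattern:
    chunk_size: int            # bytes per chunk (hypothetical value in the example)
    targets: tuple[int, ...]   # ordered storage target IDs used by this file


def locate(pattern: StripePattern, offset: int) -> tuple[int, int, int]:
    """Map a byte offset in the file to (target_id, chunk_index, offset_in_chunk)."""
    if offset < 0:
        raise ValueError("offset must be non-negative")
    chunk_index = offset // pattern.chunk_size
    target_id = pattern.targets[chunk_index % len(pattern.targets)]
    return target_id, chunk_index, offset % pattern.chunk_size


def bytes_per_target(pattern: StripePattern, file_size: int) -> dict[int, int]:
    """Return how many bytes of a file_size-byte file land on each target."""
    totals = {t: 0 for t in pattern.targets}
    full_chunks, tail = divmod(file_size, pattern.chunk_size)
    for i in range(full_chunks):
        totals[pattern.targets[i % len(pattern.targets)]] += pattern.chunk_size
    if tail:
        # The final partial chunk goes to the next target in the rotation.
        totals[pattern.targets[full_chunks % len(pattern.targets)]] += tail
    return totals


if __name__ == "__main__":
    # Hypothetical pattern: 512 KiB chunks over four storage targets.
    p = StripePattern(chunk_size=512 * 1024, targets=(101, 102, 103, 104))
    print(locate(p, 0))                      # (101, 0, 0)
    print(locate(p, 5 * 512 * 1024 + 10))    # (102, 5, 10)
    print(bytes_per_target(p, 4 * 512 * 1024 + 100))
```

Because consecutive chunks land on different targets, a large sequential read or write touches every target in the pattern at once, which is why adding servers and disks raises both throughput and capacity.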

Using BeeGFS, you can build a high-performance computing (HPC) file server on Oracle Cloud Infrastructure. The build process can be automated by using a prebuilt Terraform template, oci-beegfs. It’s easy to scale a BeeGFS cluster for higher throughput, higher storage capacity, or both. It costs only a few cents per gigabyte per month, including both compute and storage.

Deployment

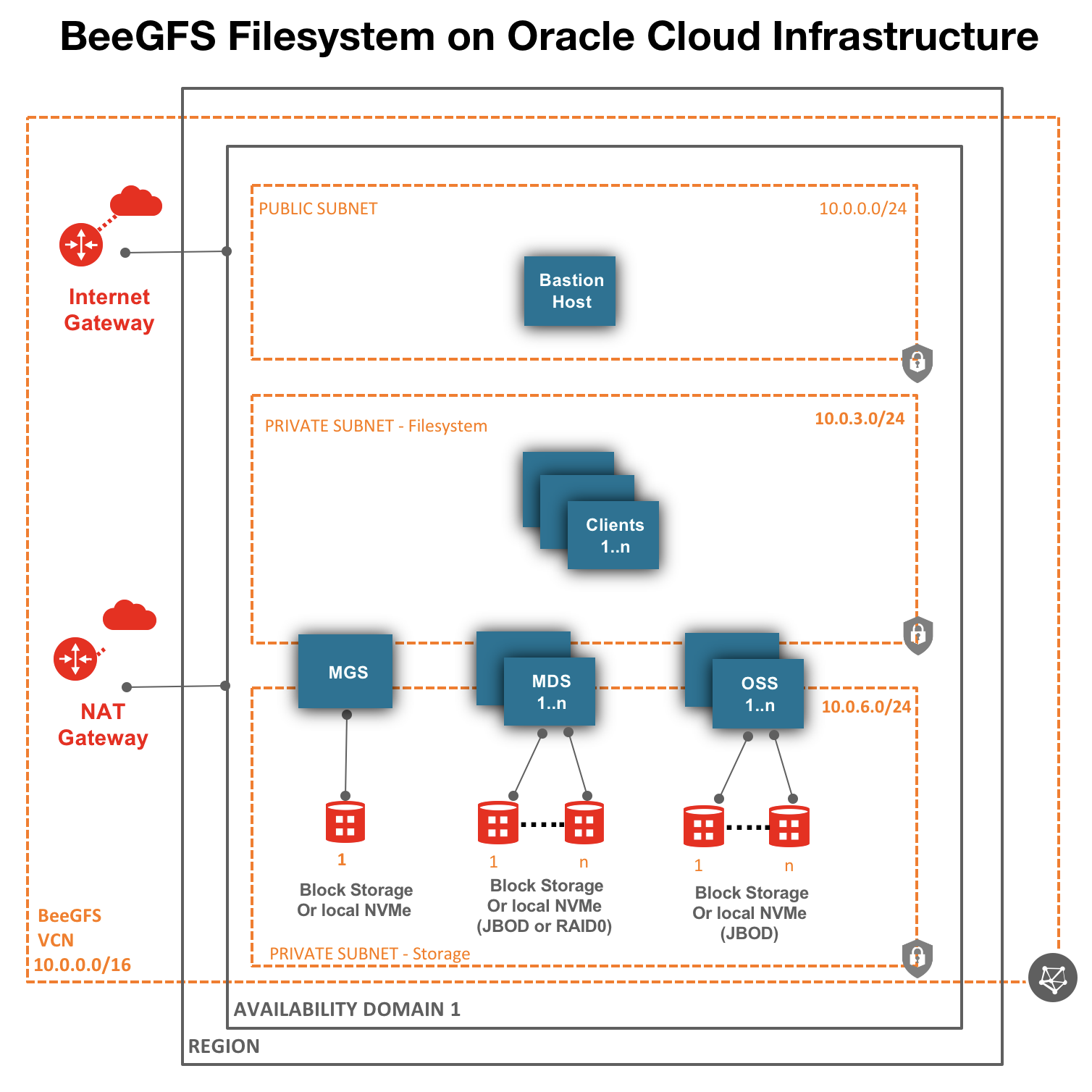

You can deploy BeeGFS by using either a Terraform template or an Oracle Resource Manager template. The BeeGFS deployment template provisions the required Oracle Cloud Infrastructure components: compute, storage, virtual cloud network, and subnets. The template also deploys the BeeGFS file system software, which includes the following components:

- The management service (MGS) is a “meeting point” for the BeeGFS metadata, storage, and client services.

- The metadata service (MDS) stores information about the data, such as directory information, file and directory ownership, and the location of user file contents on storage targets. The metadata service is a scale-out service, which means that you can use one or many metadata services in a BeeGFS file system.

- The object storage service (OSS) is the main service for storing user file contents, or data chunk files. Similar to the metadata service, the object storage service is based on a scale-out design. An object storage service instance has one or more object storage targets.

- Clients are compute instances that access the BeeGFS file system.

The Terraform deployment supports the following:

- Separate nodes for each service and for clients

- Multiple metadata service nodes

- One JBOD or disk for each metadata service node

- Multiple disks attached as RAID 0 for each metadata service node

- Multiple object storage service nodes, with multiple JBODs per node

- Multiple client nodes

- Bare metal and virtual machines

- Single or multiple physical NICs

- TCP networking

- Disks/JBODs that are either network-attached block volumes or local NVMe SSDs on DenseIO compute shapes

- Storage tiering for hot, warm, and cold data, using storage pools built from a combination of local NVMe SSDs and high-performance, balanced, and low-cost block volumes
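Storage tiering comes down to a placement policy: deciding which pool a given data set belongs in. The sketch below shows one possible age-based policy. The pool IDs, the temperature-to-storage mapping, and the 7-day and 90-day thresholds are all hypothetical; they are not defaults of BeeGFS or of the oci-beegfs template, and a real deployment would assign pools with BeeGFS's own storage pool tooling.

```python
"""Hypothetical hot/warm/cold placement policy for BeeGFS storage pools.

Assumptions (illustrative only): pool IDs, backing-storage labels, and the
age thresholds below are invented for this sketch.
"""

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Pool:
    pool_id: int   # hypothetical storage pool ID
    backing: str   # storage that this pool's targets sit on


# Hypothetical mapping of data temperature to storage pools.
POOLS = {
    "hot": Pool(1, "local NVMe SSD (DenseIO shape)"),
    "warm": Pool(2, "balanced block volume"),
    "cold": Pool(3, "low-cost block volume"),
}

HOT_WINDOW = timedelta(days=7)    # hypothetical: accessed within a week
WARM_WINDOW = timedelta(days=90)  # hypothetical: accessed within a quarter


def classify(last_access: datetime, now: datetime) -> str:
    """Return 'hot', 'warm', or 'cold' based on time since last access."""
    age = now - last_access
    if age <= HOT_WINDOW:
        return "hot"
    if age <= WARM_WINDOW:
        return "warm"
    return "cold"


def choose_pool(last_access: datetime, now: datetime) -> Pool:
    """Pick the pool whose tier matches the data's temperature."""
    return POOLS[classify(last_access, now)]


if __name__ == "__main__":
    now = datetime(2024, 1, 1)
    for days in (1, 30, 365):
        pool = choose_pool(now - timedelta(days=days), now)
        print(days, pool.pool_id, pool.backing)
```

A high-performance block volume pool could slot in between the NVMe and balanced tiers with one more threshold; the structure of the policy stays the same.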

You can customize the Terraform template based on your needs, with the following common points of customization:

- Number of metadata service servers and their compute shapes

- Number of metadata service targets and their size (GB)

- Number of object storage service servers and their compute shapes

- Number of object storage service targets and their size (GB)

- Management service deployment on a separate compute node
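The customization points above determine the size of the cluster. The following back-of-the-envelope sketch models them as a small data structure and computes raw capacity and server count. The field names are hypothetical and are not the template's actual Terraform variable names. The sketch also assumes that target counts are per server and that capacities are raw decimal gigabytes, before any file system overhead.

```python
"""Back-of-the-envelope sizing for a BeeGFS layout.

Field names are hypothetical, not the oci-beegfs template's Terraform
variables. Target counts are assumed to be per server; capacities are raw
decimal GB with no file system overhead subtracted.
"""

from dataclasses import dataclass


@dataclass(frozen=True)
class BeeGFSLayout:
    mds_nodes: int              # number of metadata service servers
    mds_targets_per_node: int   # metadata targets on each MDS server
    mds_target_gb: int          # size of each metadata target (GB)
    oss_nodes: int              # number of object storage service servers
    oss_targets_per_node: int   # object storage targets on each OSS server
    oss_target_gb: int          # size of each object storage target (GB)
    separate_mgs_node: bool = True  # management service on its own node

    def raw_data_capacity_gb(self) -> int:
        """Total raw capacity available for file contents (data chunks)."""
        return self.oss_nodes * self.oss_targets_per_node * self.oss_target_gb

    def raw_metadata_capacity_gb(self) -> int:
        """Total raw capacity available for metadata."""
        return self.mds_nodes * self.mds_targets_per_node * self.mds_target_gb

    def server_count(self) -> int:
        """Number of server nodes, excluding client nodes."""
        return self.mds_nodes + self.oss_nodes + (1 if self.separate_mgs_node else 0)


if __name__ == "__main__":
    layout = BeeGFSLayout(
        mds_nodes=2, mds_targets_per_node=1, mds_target_gb=400,
        oss_nodes=4, oss_targets_per_node=6, oss_target_gb=1000,
    )
    print(layout.raw_data_capacity_gb())      # 24000
    print(layout.raw_metadata_capacity_gb())  # 800
    print(layout.server_count())              # 7
```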

Figure 1: BeeGFS File System Architecture on Oracle Cloud Infrastructure

You can create the file system with bare metal or virtual machine (VM) compute shapes, from either the Standard or DenseIO series. However, we recommend bare metal Standard shapes for optimal throughput. These instances come with two physical NICs, each with 25-Gbps network speed, so you can dedicate one NIC to all block volume storage traffic and the other to incoming data from the client nodes to the object storage service and metadata service nodes. In addition, Oracle Cloud Infrastructure Block Volume storage has built-in data replication that delivers 99.99% annual durability.

Oracle bare metal compute instances are connected in clusters to a non-oversubscribed 25-Gbps network infrastructure, which guarantees the low latency and high throughput that a high-performance file system requires. In fact, Oracle Cloud Infrastructure is the only cloud with a network throughput performance SLA.

For storage, you can use either our Block Volume service or compute-local NVMe SSD capacity. The Block Volume service is built on NVMe-based storage, and its performance is backed by an SLA. Each block volume of 1 TB or larger delivers 480 MB/s of throughput and 25,000 IOPS by default, at no additional charge. To use locally attached NVMe SSDs, select a DenseIO bare metal or VM compute shape. For more information, see the Block Volume page and the blog posts on Block Volume performance metrics and local NVMe storage metrics.
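The figures above support a quick worked estimate of where an object storage service node's bottleneck sits. This sketch uses only the numbers quoted in this post (25 Gbps per NIC, and 480 MB/s plus 25,000 IOPS per block volume of 1 TB or larger) and assumes the storage-facing NIC carries all block volume traffic. It ignores protocol overhead, caching, and workload mix, so its results are theoretical ceilings, not measured performance.

```python
"""Rough storage-side throughput ceiling for one object storage service node.

Assumptions: per-NIC and per-volume figures are the ones quoted in the text;
every volume is at least 1 TB (so it gets the full default performance); one
NIC carries all block volume traffic. Decimal units: 1 MB/s = 10**6 bytes/s.
"""

import math

NIC_GBPS = 25          # one physical NIC on a bare metal Standard shape
VOLUME_MBPS = 480      # default throughput per block volume >= 1 TB
VOLUME_IOPS = 25_000   # default IOPS per block volume >= 1 TB


def gbps_to_mbps(gbps: float) -> float:
    """Convert gigabits per second to megabytes per second (8 bits per byte)."""
    return gbps * 1000 / 8


def volumes_to_saturate_nic(nic_gbps: float = NIC_GBPS) -> int:
    """Smallest number of >= 1 TB volumes whose combined throughput fills one NIC."""
    return math.ceil(gbps_to_mbps(nic_gbps) / VOLUME_MBPS)


def node_ceiling_mbps(volumes: int, nic_gbps: float = NIC_GBPS) -> float:
    """Lesser of the volumes' combined throughput and the storage NIC's speed."""
    return min(volumes * VOLUME_MBPS, gbps_to_mbps(nic_gbps))


if __name__ == "__main__":
    print(gbps_to_mbps(NIC_GBPS))      # 3125.0
    print(volumes_to_saturate_nic())   # 7
    print(node_ceiling_mbps(4))        # 1920
    print(node_ceiling_mbps(8))        # 3125.0
    print(8 * VOLUME_IOPS)             # 200000
```

Under these assumptions, about seven volumes per node are enough to make the 25-Gbps storage NIC (about 3,125 MB/s) the limit; beyond that, adding volumes adds capacity and IOPS but not per-node throughput, and adding object storage service nodes is the way to scale further.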

Try it Yourself

Every use case is different. The only way to know whether Oracle Cloud Infrastructure is right for you is to try it. You can sign up for either the Oracle Cloud Free Tier or a 30-day free trial that includes US$300 in credit to get you started with a range of services, including compute, storage, and networking.