Guest Author: Andy Lerner, Partner Solutions Architect, MapR

The MapR and Oracle Cloud Infrastructure (OCI) partnership allows customers to benefit from a highly integrated data platform for big data and machine learning applications. Oracle and MapR share a common vision for delivering data insights across the enterprise, and both are committed to developing and delivering a best-in-class platform.

Get started: Terraform module to deploy MapR on Oracle Cloud Infrastructure

In this blog post, I will talk about using GPUs for deep learning on Oracle Cloud Infrastructure.

Using GPUs to train neural networks for deep learning is becoming commonplace. However, the cost of GPU servers and of the storage infrastructure required to feed GPUs as fast as they can consume data is significant. I wanted to see if I could use a highly reliable, low-cost, easy-to-use Oracle Cloud Infrastructure environment to reproduce the deep-learning benchmark results published by some of the big storage vendors. I also wanted to see whether a MapR distributed filesystem in this cloud environment could deliver data to the GPUs as fast as they could consume data already cached in memory on the GPU server.

Setup

For my deep learning job, I created the following setup:

- I trained the ResNet-50 and ResNet-152 networks with the TensorFlow CNN benchmark from tensorflow.org using a batch size of 256 for ResNet-50 and 128 for ResNet-152.

- I used an Oracle Cloud Infrastructure bare metal Volta GPU instance (BM.GPU.3.8), reading ImageNet data stored on a five-node MapR cluster that ran on five Oracle Cloud Infrastructure Dense I/O (BM.DenseIO1.36) instances. The 143-GB ImageNet dataset was preprocessed into TensorFlow record (TFRecord) files of about 140 MB each.

- To simplify my testing, I installed NVIDIA Docker 2 on the GPU server and ran tests from a Docker container.

- I used MapR’s mapr-setup.sh script to build a MapR persistent application client container (PACC) from the NVIDIA GPU Cloud (NGC) TensorFlow container. As a result, my container had NVIDIA’s optimized version of TensorFlow with all their necessary libraries and drivers, and MapR’s container-optimized POSIX client for file access.
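The runs described in this setup can be expressed as a small driver that builds one `docker run` command per model and GPU count. This is a sketch under stated assumptions, not the exact commands used for this post: the image tag, the MapR mount path under /mapr, and the script location are hypothetical placeholders; MapR-specific PACC settings (cluster name, CLDB hosts, FUSE options) are omitted; and it assumes the batch sizes above are per GPU, which is how tf_cnn_benchmarks interprets `--batch_size`.

```python
"""Sketch: build tf_cnn_benchmarks runs for an NVIDIA Docker 2 container.

Assumptions (not from the original post): IMAGE, DATA_DIR, and SCRIPT are
hypothetical placeholders; MapR PACC environment settings are omitted.
"""
from typing import List

IMAGE = "my-registry/ngc-tensorflow-pacc:latest"  # hypothetical PACC image tag
DATA_DIR = "/mapr/my.cluster.com/imagenet"        # hypothetical MapR POSIX path
SCRIPT = "tf_cnn_benchmarks.py"                    # from tensorflow/benchmarks

# Batch sizes from the post; tf_cnn_benchmarks treats --batch_size as per GPU.
BATCH_SIZES = {"resnet50": 256, "resnet152": 128}


def benchmark_command(model: str, num_gpus: int) -> List[str]:
    """Return an argv list for one single-epoch run on `num_gpus` GPUs."""
    if model not in BATCH_SIZES:
        raise ValueError(f"unknown model: {model}")
    visible = ",".join(str(i) for i in range(num_gpus))
    return [
        "docker", "run", "--rm",
        "--runtime=nvidia",                         # nvidia-docker2 runtime
        "-e", f"NVIDIA_VISIBLE_DEVICES={visible}",  # expose only the GPUs under test
        IMAGE,
        "python", SCRIPT,
        f"--model={model}",
        f"--batch_size={BATCH_SIZES[model]}",
        f"--num_gpus={num_gpus}",
        f"--data_dir={DATA_DIR}",
        "--data_name=imagenet",
        "--num_epochs=1",
    ]


if __name__ == "__main__":
    # One command per model and GPU count, matching the 1/2/4/8 GPU sweep.
    for model in BATCH_SIZES:
        for gpus in (1, 2, 4, 8):
            print(" ".join(benchmark_command(model, gpus)))
```

Returning an argv list rather than a shell string keeps it usable with `subprocess.run` without quoting issues.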

Benchmark Execution

First, I ran one benchmark against data on the local file system, which loaded all 143 GB of data into the Linux buffer cache.

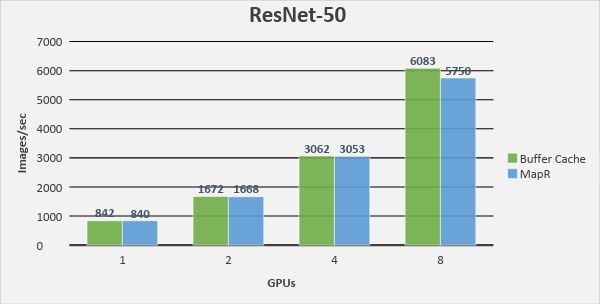

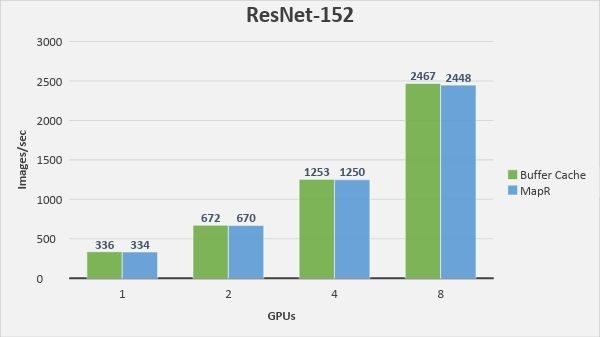
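In the post, a first benchmark pass warmed the Linux buffer cache. Another way to get a similar effect, sketched here as an assumption rather than the author's method, is to read every TFRecord file once; with files of about 140 MB, 143 GB works out to roughly a thousand files. The function name and the directory you pass in are hypothetical.

```python
from pathlib import Path


def warm_page_cache(data_dir: str, chunk_size: int = 16 * 1024 * 1024) -> int:
    """Read every file under data_dir once (hypothetical helper).

    Reading the files pulls their pages into the Linux buffer cache as a side
    effect. Returns the total number of bytes read.
    """
    total = 0
    buf = bytearray(chunk_size)  # reuse one buffer to avoid per-chunk allocations
    for path in sorted(Path(data_dir).rglob("*")):
        if not path.is_file():
            continue
        with open(path, "rb") as f:
            while True:
                n = f.readinto(buf)
                if not n:  # 0 bytes means end of file
                    break
                total += n
    return total
```

This only helps if the GPU server has enough free memory to hold the whole dataset; otherwise later files evict earlier ones.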

Next, I ran the benchmarks for one epoch against this cached data with one, two, four, and all eight GPUs on the server. These results are labeled Buffer Cache in the following charts.
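To put "one epoch" in concrete terms, here is a back-of-the-envelope count of training steps per epoch. It assumes the batch sizes above are per GPU (as tf_cnn_benchmarks interprets `--batch_size`) and the standard ILSVRC-2012 training set of 1,281,167 images; the benchmark's own epoch accounting may differ slightly.

```python
IMAGENET_TRAIN_IMAGES = 1_281_167  # ILSVRC-2012 training set size


def steps_per_epoch(per_gpu_batch: int, num_gpus: int,
                    num_images: int = IMAGENET_TRAIN_IMAGES) -> int:
    """Steps needed to see every image once, counting a final partial batch."""
    global_batch = per_gpu_batch * num_gpus
    return -(-num_images // global_batch)  # integer ceiling division


if __name__ == "__main__":
    for model, batch in (("ResNet-50", 256), ("ResNet-152", 128)):
        for gpus in (1, 2, 4, 8):
            print(f"{model}, {gpus} GPU(s): {steps_per_epoch(batch, gpus)} steps")
```

For ResNet-50 at 256 images per GPU, this gives 5,005, 2,503, 1,252, and 626 steps for one, two, four, and eight GPUs: each doubling of GPUs roughly halves the steps, so the storage layer must deliver data proportionally faster to keep every GPU busy.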

Then, I cleared the buffer cache and reran the benchmarks, this time pulling the data from MapR. Between runs, I also cleared the MapR filesystem caches on each of the MapR servers to ensure that I was pulling data from the physical storage media.
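The post doesn't list the commands used to clear the caches. On a Linux host such as the GPU server, the standard mechanism is to flush dirty pages and then, as root, write 3 to `/proc/sys/vm/drop_caches`. The sketch below assumes that mechanism and defaults to a dry run; the MapR-side cache clearing is not shown because the post doesn't specify how it was done, and the function name is hypothetical.

```python
import os

DROP_CACHES = "/proc/sys/vm/drop_caches"


def drop_linux_caches(level: int = 3, dry_run: bool = True) -> str:
    """Flush dirty pages, then ask the kernel to drop clean caches.

    level 1 = page cache, 2 = dentries and inodes, 3 = both.
    Requires root unless dry_run is True, in which case nothing is written.
    """
    if level not in (1, 2, 3):
        raise ValueError(f"level must be 1, 2, or 3, got {level}")
    if dry_run:
        return f"would write {level} to {DROP_CACHES}"
    os.sync()  # write dirty pages to disk first so they become droppable
    with open(DROP_CACHES, "w") as f:
        f.write(str(level))
    return f"wrote {level} to {DROP_CACHES}"
```

Call `drop_linux_caches(dry_run=False)` as root before each run that should read from storage rather than memory.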

I got some of the best performance numbers that I’ve seen for training these models, and the MapR performance was almost identical to that of in-memory reads from the buffer cache on the GPU server.
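The comparison here is reported only as charts. To put a number on "almost identical," you can express each MapR result as a percentage of the matching Buffer Cache result. A minimal sketch with hypothetical function names; the throughput values would come from your own benchmark logs (tf_cnn_benchmarks reports images/sec).

```python
from typing import Dict, Tuple


def relative_throughput(mapr_ips: float, cache_ips: float) -> float:
    """MapR images/sec as a percentage of the buffer-cache baseline."""
    if cache_ips <= 0:
        raise ValueError("baseline throughput must be positive")
    return 100.0 * mapr_ips / cache_ips


def compare_runs(results: Dict[int, Tuple[float, float]]) -> Dict[int, float]:
    """Map GPU count -> (MapR, buffer cache) images/sec to MapR % of baseline."""
    return {gpus: relative_throughput(mapr, cache)
            for gpus, (mapr, cache) in sorted(results.items())}
```

A value close to 100% for every GPU count means storage was not the bottleneck at that scale.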

ResNet-50 Results

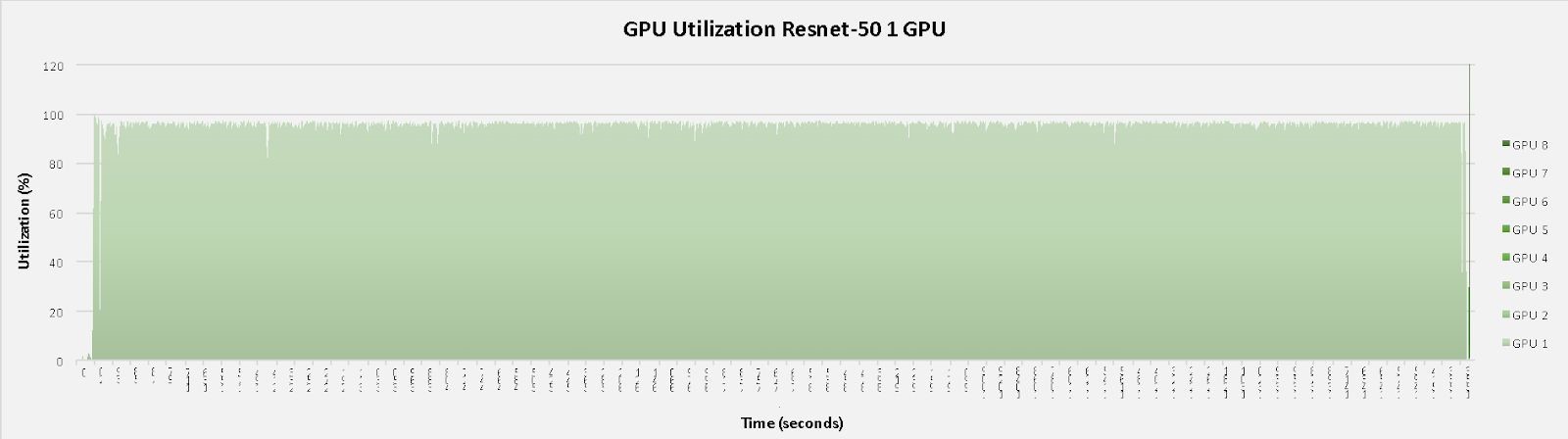

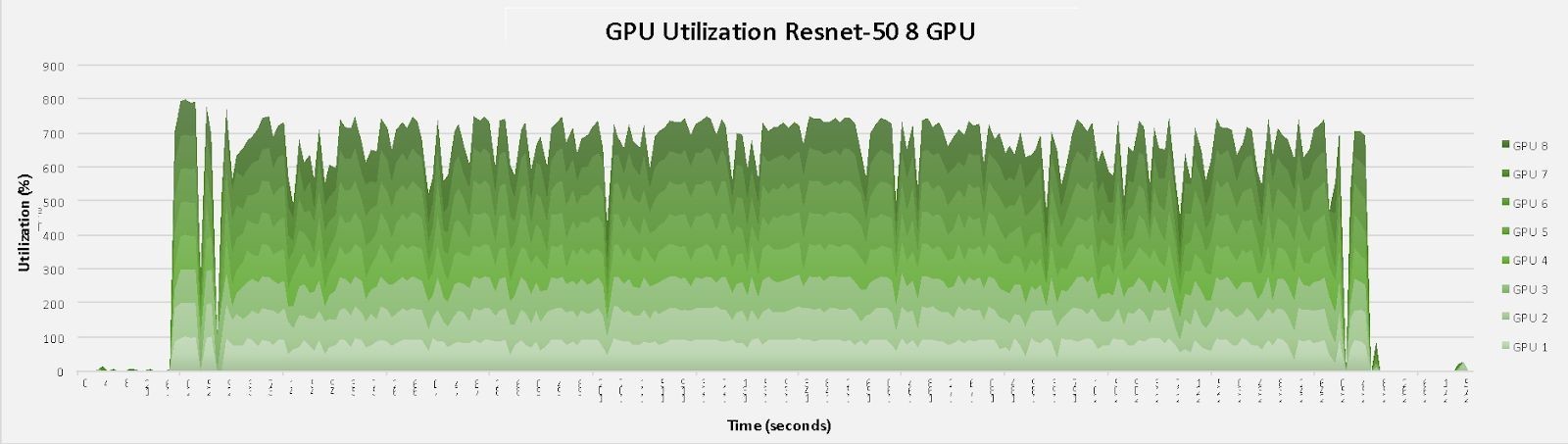

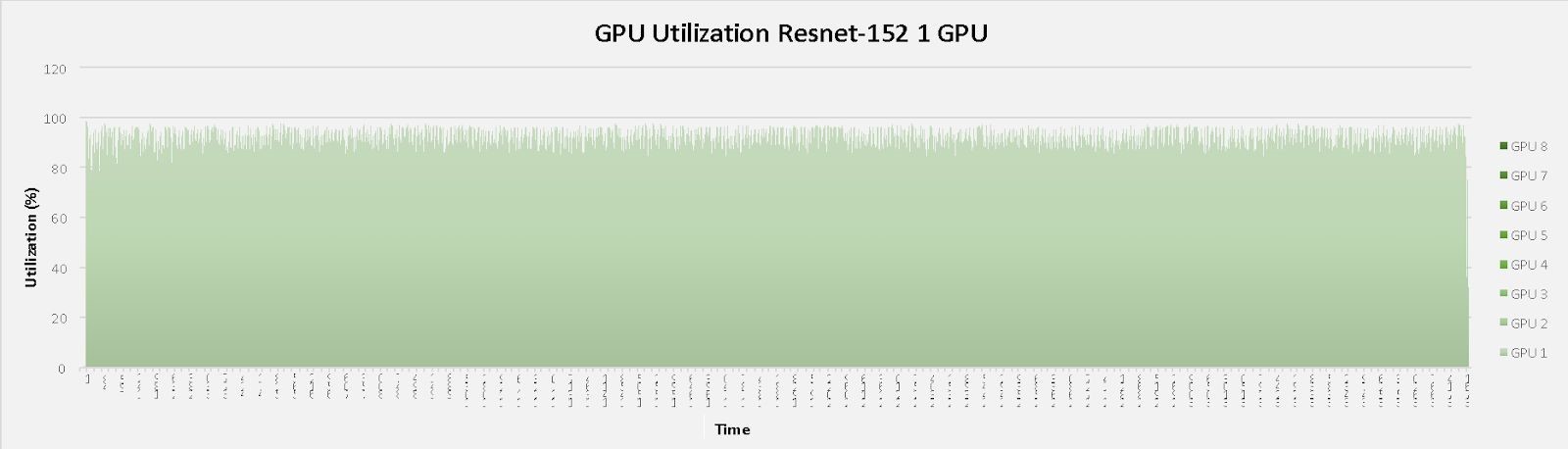

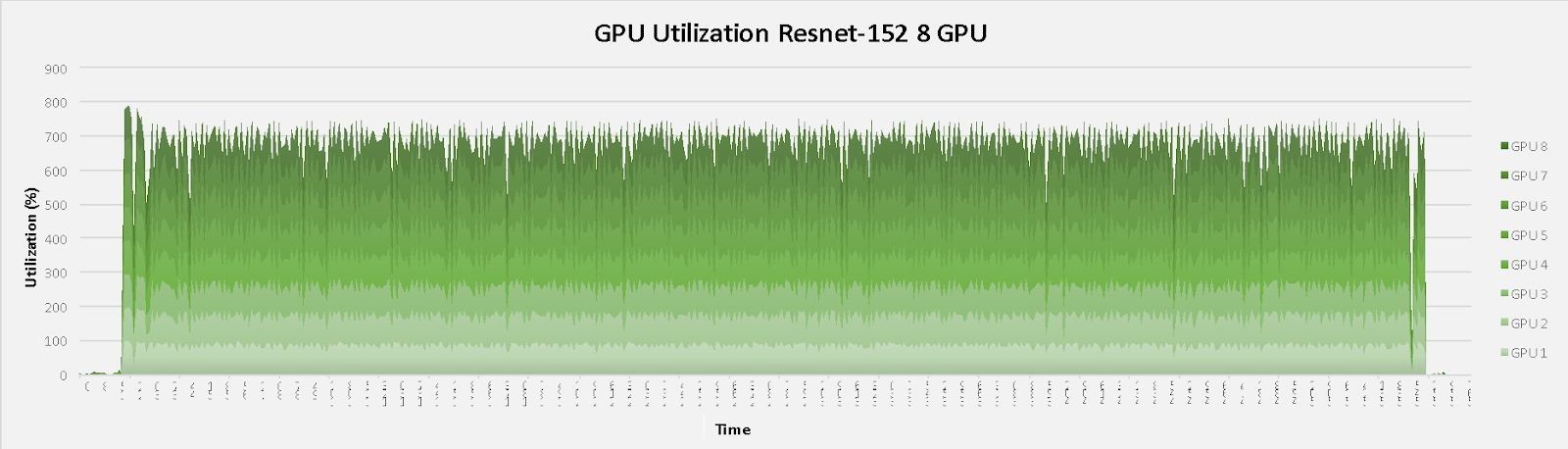

I used nvidia-smi, provided in the NGC container, to collect GPU utilization metrics on the eight GPUs in the cluster to confirm that the GPUs were working at full speed to process the data. The following graphs show the GPU utilization for the 1 GPU and 8 GPU runs pulling data from MapR.
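The exact nvidia-smi invocation isn't shown in the post. One common approach, assumed here, is to sample once per second with `nvidia-smi --query-gpu=index,utilization.gpu --format=csv,noheader,nounits -l 1 > util.csv` while the benchmark runs, then average the samples per GPU. The parser below is a hypothetical helper for that CSV format.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List


def mean_utilization(csv_text: str) -> Dict[int, float]:
    """Average utilization.gpu (%) per GPU index.

    Expects lines like "0, 97" as produced by
    --query-gpu=index,utilization.gpu --format=csv,noheader,nounits.
    """
    samples: Dict[int, List[float]] = defaultdict(list)
    for line in csv_text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        index, util = (field.strip() for field in line.split(","))
        samples[int(index)].append(float(util))
    return {gpu: mean(values) for gpu, values in sorted(samples.items())}
```

Averages near 100% across the run indicate the GPUs were compute-bound rather than waiting on input data.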

ResNet-152 Results

The 1 GPU and 8 GPU utilization numbers from nvidia-smi for ResNet-152 were as follows:

For just a few dollars per hour, Oracle Cloud Infrastructure gives you the highest-performing NVIDIA GPU-enabled servers with highly available, reliable, and massively scalable MapR storage, so you can perform machine learning tasks faster and more effectively than with similar storage infrastructure solutions that are priced orders of magnitude higher.
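The post doesn't list prices, so this sketch takes them as inputs: the hourly rates of the GPU instance and of the five MapR storage instances (see the Oracle Cloud pricing page below), plus the measured throughput. The function names are hypothetical, and any discounts or storage charges beyond instance hours are ignored.

```python
from typing import Sequence


def epoch_seconds(num_images: int, images_per_sec: float) -> float:
    """Time for one epoch at a measured training throughput."""
    if images_per_sec <= 0:
        raise ValueError("throughput must be positive")
    return num_images / images_per_sec


def cost_per_epoch(hourly_prices: Sequence[float], seconds: float) -> float:
    """Cost of one epoch when every listed instance runs for `seconds`."""
    return sum(hourly_prices) * seconds / 3600.0
```

Summing all six instances, rather than the GPU server alone, gives the cost of the complete compute-plus-storage setup described in this post.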

Try out your own machine learning use case on OCI with MapR, and let us know what you think.

Additional Resources

- #LetsProveIt on Oracle Cloud: Second-Generation Cloud for Faster Performance

- Oracle Cloud Instance pricing: https://cloud.oracle.com/compute/pricing

- MapR PACC image: https://mapr.com/docs/60/AdvancedInstallation/CreatingPACCImage.html

- NVIDIA NGC TensorFlow image: https://ngc.nvidia.com/registry/nvidia-tensorflow

- TensorFlow CNN benchmark: https://github.com/tensorflow/benchmarks/tree/master/scripts/tf_cnn_benchmarks

- ImageNet: http://image-net.org/