Created by the Lehigh University capstone project team: Nate Cable ’24, Zeeshan Khan ’24, Dan McClellan ’24, and Bella Wang ’24.

In 2023, a Lehigh University student team completed a one-year capstone project to integrate Project Hamilton’s OpenCBDC with Oracle Autonomous Database and its Blockchain Tables technology. Throughout the project, the team met weekly with Oracle sponsors for guidance on Oracle Cloud Infrastructure (OCI), database features, schema design, and blockchain-specific functionality.

In this blog post, we describe how we integrated OpenCBDC with Oracle Database Blockchain Tables and Oracle Sharding, with the aim of using Oracle’s scalability and blockchain features to extend the OpenCBDC platform. We start with an overview of Central Bank Digital Currencies (CBDCs) and Oracle’s work in this area, then describe the solutions that emerged from the collaboration.

Central Bank Digital Currencies

CBDCs mark a significant step in the digitization of money, with implications for both retail and interbank transactions. A CBDC is a digital form of a country’s fiat currency, issued and regulated by its Central Bank, which gives it a level of trust and stability comparable to traditional currency. CBDCs aim to improve efficiency, security, and financial inclusion by tokenizing physical currency. They can enable instant peer-to-peer transactions and streamlined online payments, addressing the growing demand for digital financial services, and they have the potential to transform interbank settlement by reducing costs and shortening processing times.

CBDCs are no longer just a theoretical concept; they are becoming a practical reality in various parts of the world. They offer an opportunity to reshape the global financial landscape by addressing longstanding issues such as financial access for underbanked populations, reliance on physical cash, and financial crime, which traceable transactions can help combat. For instance, the Bahamas has introduced the Sand Dollar to increase financial inclusion across its scattered islands, while Singapore is exploring how retail CBDCs could enhance its payment ecosystem, as detailed in the Monetary Authority of Singapore’s monograph. In North America, both the US and Canada are actively researching CBDCs, as reflected in the Federal Reserve’s FAQ and the Bank of Canada’s exploration of a digital dollar. These efforts illustrate the varied approaches and considerations nations must weigh as they venture into digital currencies. Central banks must navigate privacy, cybersecurity, and systemic impact to ensure that CBDCs complement the existing financial architecture.

Oracle and CBDCs

Oracle has worked with Central Banks around the world on CBDC initiatives and on many of the issues in this area, as described in the earlier blog post on Privacy, Ledger Architecture, and Cross-border Integrations in Central Bank Digital Currency. Beyond these direct engagements, Oracle has sponsored academic work, most recently a capstone project at Lehigh University focusing on OpenCBDC, the open-source software produced by Project Hamilton, a collaboration between the Federal Reserve Bank of Boston and MIT’s Digital Currency Initiative. This blog post presents high-level conceptual explanations, key findings, and conclusions from the team’s analysis and modification of OpenCBDC.

Project Hamilton’s work on OpenCBDC concentrated on high transaction throughput and low latency, while remaining robust to disruptions such as the outage of an entire geographic data center, with the goal of minimizing downtime and preventing data loss. The design choices and challenges documented during this phase are particularly useful for policymakers: they expose the trade-offs and design options available in CBDC development and could guide future implementations of digital currencies by central banks globally.

The framework uses the Unspent Transaction Output (UTXO) model, similar to the transaction model of Bitcoin, Cardano, and certain other blockchains, to tokenize currencies such as the dollar, and it is designed to test the efficiency and scalability of different architectural models for CBDCs. The OpenCBDC team developed and compared two architectures: an Atomizer model, which first explicitly orders all valid transactions and then applies them to the partitioned state in that order, and a Two-Phase Commit (2PC) model, which achieves atomicity and serializability without materializing a linear order. The OpenCBDC white paper reported that the 2PC architecture processed 1.7M transactions per second with a 99th-percentile latency under one second and a median (50th-percentile) latency under 0.5 seconds, and that its throughput could be increased by adding more resources. The Atomizer architecture peaked at 170K transactions per second, with a 99th-percentile latency under two seconds and a median latency of 0.7 seconds. Beyond performance, the Boston Fed highlighted other trade-offs:

“The main functional difference between our two architectures is that one materializes an ordered history for all transactions, while the other does not. This highlights initial tradeoffs we found between scalability, privacy, and auditability. In the architecture that achieves 1.7M transactions per second, we do not keep a history of transactions nor do we use any cryptographic verification inside the core of the transaction processor to achieve auditability. Doing so in the future would help with security and resiliency but might impact performance.”

Source: Federal Reserve Bank of Boston, Project Hamilton Phase 1 Executive Summary, accessed 18 December 2023.
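The UTXO rules that both OpenCBDC architectures enforce can be illustrated with a minimal Python sketch. It assumes a simplified token (an ID, an owner, and a value) rather than OpenCBDC’s actual data structures, and the names `Utxo` and `apply_transfer` are hypothetical. A transfer is accepted only if every input is currently unspent and input and output values balance; applying it removes the inputs and adds the outputs.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Utxo:
    """Simplified unspent output; hypothetical fields, not OpenCBDC's types."""
    token_id: str
    owner: str
    value: int


def apply_transfer(utxos: dict[str, Utxo], inputs: list[str],
                   outputs: list[Utxo]) -> dict[str, Utxo]:
    """Return a new UTXO set with the transfer applied, or raise ValueError."""
    # Each input must be currently unspent and may appear only once.
    if len(set(inputs)) != len(inputs):
        raise ValueError("duplicate input")
    missing = [t for t in inputs if t not in utxos]
    if missing:
        raise ValueError(f"unknown or already spent inputs: {missing}")
    # Value is conserved: a transfer moves money but never creates it.
    in_value = sum(utxos[t].value for t in inputs)
    if in_value != sum(o.value for o in outputs) or any(o.value <= 0 for o in outputs):
        raise ValueError("inputs and outputs do not balance")
    # Spend the inputs (remove them), then create the outputs (add them).
    remaining = {t: u for t, u in utxos.items() if t not in inputs}
    new_ids = [o.token_id for o in outputs]
    if len(set(new_ids)) != len(new_ids) or any(t in remaining for t in new_ids):
        raise ValueError("output IDs must be new and unique")
    remaining.update({o.token_id: o for o in outputs})
    return remaining


# Alice spends a 10-unit token: 7 to Bob, 3 back to herself as change.
genesis = {"t1": Utxo("t1", "alice", 10)}
after = apply_transfer(genesis, ["t1"], [Utxo("t2", "bob", 7), Utxo("t3", "alice", 3)])
```

Trying to spend `t1` a second time against `after` raises `ValueError`, which is the double-spend check. The Atomizer and 2PC designs differ in how they apply these same rules to state that is partitioned across many servers.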

The goal of the Lehigh University capstone project was to integrate the more scalable two-phase commit (2PC) architecture with Oracle Database. The integration uses Oracle Blockchain Tables for auditability: the transaction history is kept in cryptographically hash-linked table rows, so transactions can be verified after commit. The project demonstrated a candidate model for large-scale CBDC deployment that aims to combine high performance with the cryptographic verifiability of Oracle’s Blockchain Tables. It also employed Oracle Database Sharding to partition data horizontally across multiple independent databases, enabling hyperscaling within Oracle Cloud Infrastructure (OCI).
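Why hash-linked rows make tampering detectable can be shown with a toy Python model. This is not Oracle’s row-hash format: Oracle Blockchain Tables compute and chain row hashes internally (verification is done with the `DBMS_BLOCKCHAIN_TABLE` package), and they use SHA2-512 rather than the SHA-256 used here for brevity. The JSON serialization and the function names `row_hash`, `append`, and `verify` are assumptions for illustration.

```python
from __future__ import annotations

import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first row


def row_hash(row: dict, prev_hash: str) -> str:
    # Hash a canonical serialization of the row together with the previous row's hash.
    payload = json.dumps(row, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode()).hexdigest()


def append(chain: list[dict], row: dict) -> None:
    # Insert-only: each new row is linked to the hash of the row before it.
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"row": row, "hash": row_hash(row, prev)})


def verify(chain: list[dict]) -> bool:
    # Recompute every hash in order; any edited row breaks the chain from that point on.
    prev = GENESIS
    for entry in chain:
        if row_hash(entry["row"], prev) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


ledger = []
append(ledger, {"tx_hash": "ab12", "amount": 30})
append(ledger, {"tx_hash": "cd34", "amount": 5})
```

Editing a committed row makes `verify` fail, and recomputing that row’s stored hash does not help, because the next row’s hash was computed over the old value.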

The Capstone Project

This project was part of Lehigh University’s Computer Science Capstone program and was completed over the course of a year. The objective was to port, test, and benchmark the OpenCBDC architecture on Oracle Autonomous Database in Oracle Cloud, using Oracle’s database tools and features to build a more efficient platform.

The OpenCBDC architecture is divided into several layers that communicate with one another via remote procedure calls (RPCs). Sentinels verify transactions submitted by users, checking, among other things, that the payor (the transfer initiator) owns the input tokens. Once transactions are verified, the sentinel batches them and passes the batches to Transaction Coordinators, which process them together with the Shards using a two-phase commit (2PC) distributed transaction protocol.

The team focused on adapting the 2PC model to persistent storage in Oracle Database, storing traceability and compliance information in blockchain tables. The original OpenCBDC 2PC implementation processes transactions in memory: shard state is held in a std::unordered_set with atomic writes, and durability comes from a persistent Raft log based on the NuRaft library. Raft-replicated state machines provide fault tolerance for both the 2PC shards and the 2PC coordinators. The team chose to move this in-memory state toward Blockchain Tables in Oracle Database, which provide immutability and cryptographic verifiability, and added further tables to the schema to make fuller use of database capabilities. In the current implementation, the database supplements the in-memory store rather than fully replacing it.

Key project achievements, some of which are detailed below, include:

- Building and deploying OpenCBDC on compute VMs in Oracle Cloud Infrastructure (OCI)

- Provisioning and utilizing Oracle Autonomous Database (ADB)

- Designing database schema and creating Blockchain Tables as part of it

- Setting up Oracle Sharding to distribute the database

- Successfully persisting OpenCBDC transaction and auditing data to the database using embedded SQL

- Creating a Wallet Web application and its backend server to support the APIs into OpenCBDC and Oracle Database

- Creating an admin UI with a dashboard showing the total number of users, the total number of transactions, and the payments table

Persisting OpenCBDC Transaction Data on Oracle

The team initially built a middleware server in C to isolate Oracle Cloud and Autonomous Database interactions from the OpenCBDC code. This let us test and refine those interactions thoroughly without extensive changes to OpenCBDC. Once the key interactions were defined and tested, we switched to a direct database connection from within OpenCBDC using embedded SQL. This involved developing a C struct for exchanging data with the database through the Oracle Call Interface client library (also abbreviated OCI, not to be confused with Oracle Cloud Infrastructure). The client library was built with CMake and linked into every part of the project that sends data to the database.

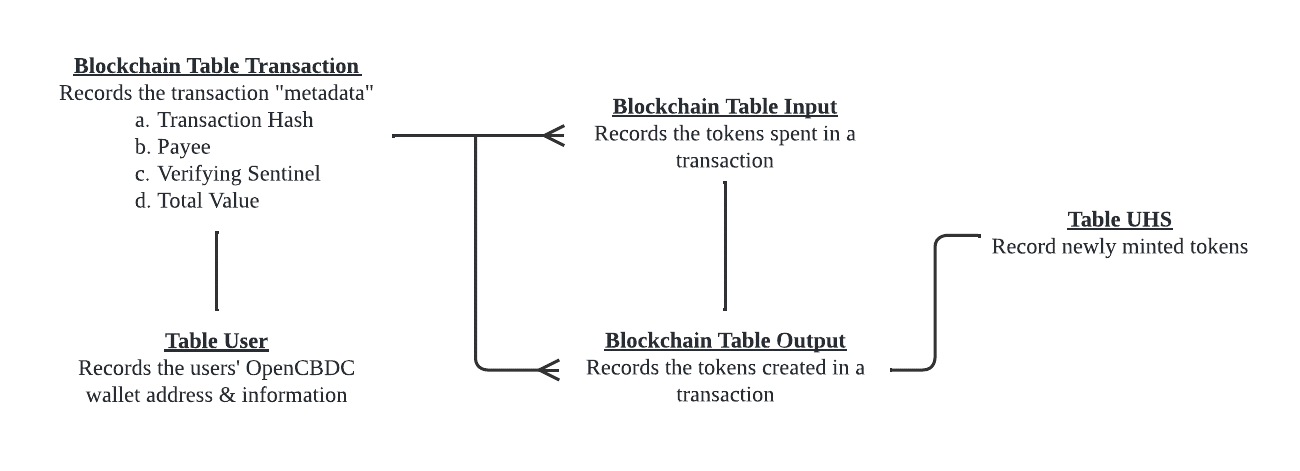

Our database schema went through multiple revisions. Initially, we tried to store many different aspects of OpenCBDC’s internal state, which made the design complex and difficult to scale. With valuable input from our contacts at Oracle, we simplified the schema to just three blockchain tables and two mutable tables. With further work, the three blockchain tables could likely be consolidated into a single blockchain table. Below is a diagram of our database schema, followed by a diagram depicting the interactions between OpenCBDC and our database:

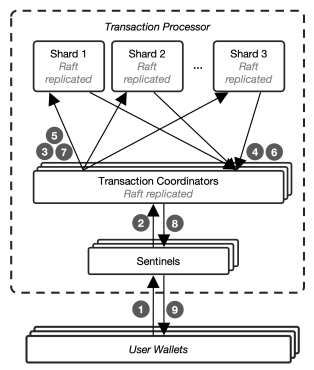

In the team’s implementation of the 2PC architecture, this schema supplements the Raft state machine of the Transaction Processor Shards and stores the Sentinels’ transaction history. The components of the 2PC model are described and shown below; for more details, please refer to the original OpenCBDC white paper.

Sentinels: Receive transactions from users, perform transaction-local validation, and forward the locally validated transactions for further processing. When processing a Transfer transaction, the sentinel computes hashes for the input UTXOs, deterministically derives serial numbers for the output UTXOs, and computes hashes for the output UTXOs. These two sets of hashes (UHS IDs) form a compact transaction; the system state itself is the UHS (UTXO Hash Set), the set of hashes of all unspent outputs. Sentinels forward compact transactions to the execution engine.
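The sentinel’s reduction of a transfer to a compact transaction can be sketched in Python. The encoding is an assumption: each UHS ID is a SHA-256 hash over a serial number, an owner, and a value, and output serial numbers are derived by hashing the transaction ID with the output index. OpenCBDC’s real serialization and hashing differ, and `Output`, `CompactTx`, `uhs_id`, and `to_compact` are hypothetical names.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass


def sha256(*parts: bytes) -> bytes:
    h = hashlib.sha256()
    for p in parts:
        h.update(p)
    return h.digest()


@dataclass(frozen=True)
class Output:
    owner: bytes  # hypothetical: e.g. a hash of the owner's public key
    value: int


@dataclass(frozen=True)
class CompactTx:
    tx_id: bytes
    inputs: tuple   # UHS IDs being spent
    outputs: tuple  # UHS IDs being created


def uhs_id(serial: bytes, out: Output) -> bytes:
    # The UHS stores only this hash, never the UTXO itself.
    return sha256(serial, out.owner, out.value.to_bytes(8, "big"))


def to_compact(inputs: list[tuple[bytes, Output]], outputs: list[Output]) -> CompactTx:
    # Hash each input UTXO (serial number plus output data) to get its UHS ID.
    input_ids = tuple(uhs_id(serial, out) for serial, out in inputs)
    # The transaction ID commits to the inputs spent and the outputs created.
    tx_id = sha256(*(serial for serial, _ in inputs),
                   *(o.owner + o.value.to_bytes(8, "big") for o in outputs))
    # Deterministic serials: the same transaction always yields the same output IDs.
    output_ids = tuple(
        uhs_id(sha256(tx_id, i.to_bytes(8, "big")), o) for i, o in enumerate(outputs)
    )
    return CompactTx(tx_id, input_ids, output_ids)
```

Because serial numbers are derived rather than random, any component that sees the full transaction can recompute the same output UHS IDs, while shards only ever handle the opaque 32-byte hashes.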

Shards and Transaction Coordinator: Each logical shard is responsible for a subset of the UHS IDs that are unspent in the system. The coordinator splits each transaction’s input and output UHS IDs according to the shard responsible for them and uses the two-phase commit (2PC) protocol to record the combination of input and output IDs for the transaction. First, it issues a prepare to each shard with that shard’s subset of UHS IDs. Each shard locks the relevant input IDs, reserves the output IDs, records data about the transaction locally, and responds to the coordinator that it succeeded. The coordinator then issues a commit to each shard. Each shard finalizes the transaction by atomically deleting the input IDs, creating the output IDs, and updating its local record of the transaction’s status, then responds to the coordinator to confirm that the commit succeeded.

The actual implementation uses batching to reduce locking overhead: the UHS IDs of many individual transactions are sent to the Transaction Coordinator (TC) as a single batch, and the TC instructs each shard responsible for a UHS ID in the batch to lock the input UHS IDs and reserve the output UHS IDs. Sentinels batch multiple transfers into single distributed transactions to amortize the cost of durable persistence and replication in the Raft distributed state machines.

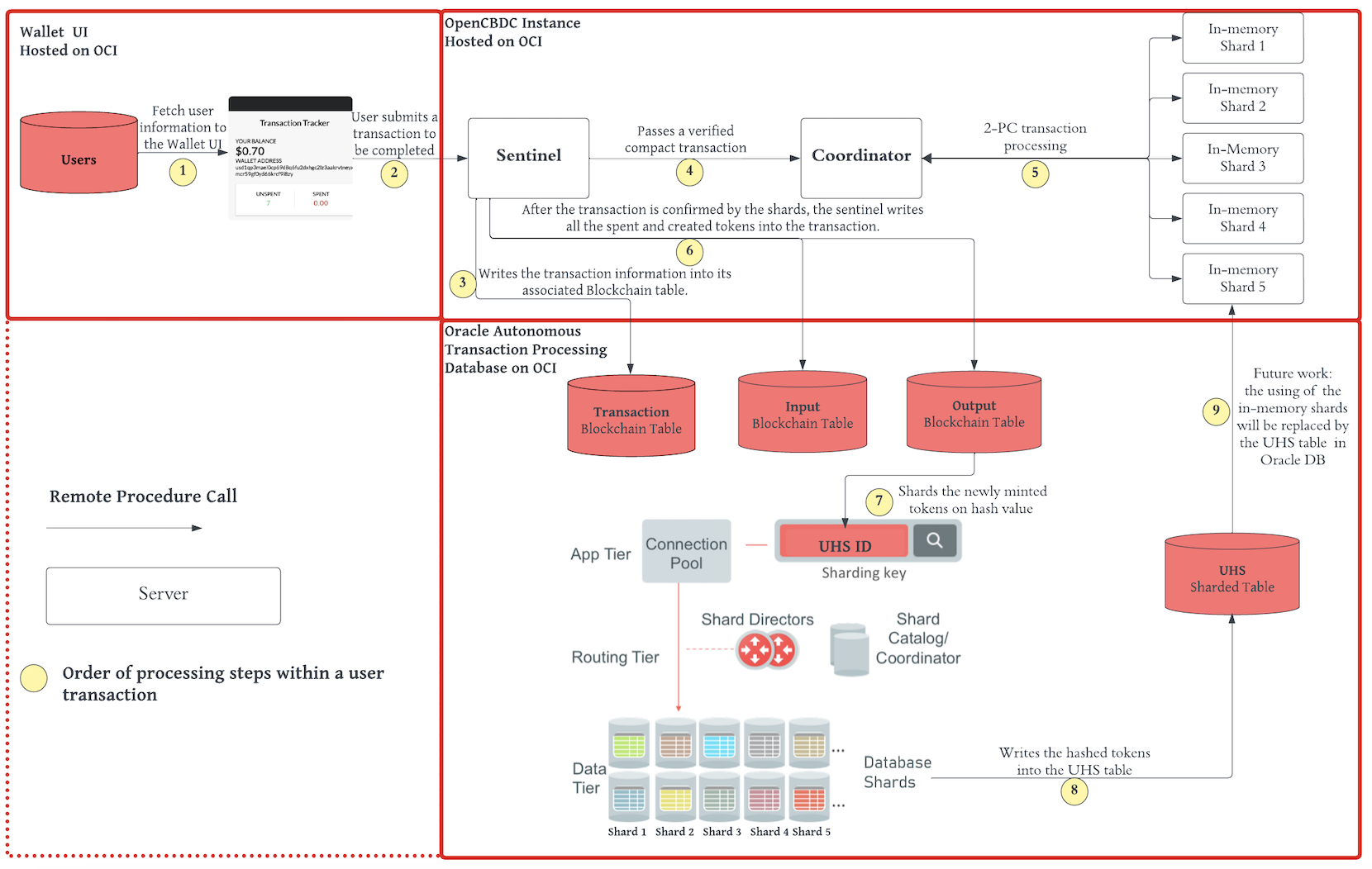
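The prepare/commit exchange described above can be sketched in-process in Python. This is a single-threaded illustration under simplifying assumptions: shards are plain objects rather than Raft-replicated services, UHS IDs are routed by their first byte, output reservation is reduced to an existence check, and there is no batching. `Shard` and `Coordinator` are hypothetical names, not OpenCBDC classes.

```python
from __future__ import annotations


class Shard:
    """One logical shard owning part of the UHS (in memory, no Raft here)."""

    def __init__(self) -> None:
        self.uhs: set[bytes] = set()     # unspent UHS IDs held by this shard
        self.locked: set[bytes] = set()  # input IDs locked by a prepared transaction
        self.pending: dict[bytes, tuple[list[bytes], list[bytes]]] = {}

    def prepare(self, tx_id: bytes, inputs: list[bytes], outputs: list[bytes]) -> bool:
        # Vote "no" if an input is unknown or already locked, or an output already exists.
        if any(i not in self.uhs or i in self.locked for i in inputs):
            return False
        if any(o in self.uhs for o in outputs):
            return False
        self.locked.update(inputs)
        self.pending[tx_id] = (inputs, outputs)  # local record of the prepared transaction
        return True

    def commit(self, tx_id: bytes) -> None:
        # Delete the inputs and create the outputs for a prepared transaction.
        inputs, outputs = self.pending.pop(tx_id)
        self.locked.difference_update(inputs)
        self.uhs.difference_update(inputs)
        self.uhs.update(outputs)

    def abort(self, tx_id: bytes) -> None:
        # Release locks taken during prepare; the UHS itself is unchanged.
        inputs, _ = self.pending.pop(tx_id, ([], []))
        self.locked.difference_update(inputs)


class Coordinator:
    """Runs 2PC for one compact transaction across the shards that own its IDs."""

    def __init__(self, shards: list[Shard]) -> None:
        self.shards = shards

    def shard_for(self, uhs_id: bytes) -> int:
        return uhs_id[0] % len(self.shards)  # assumed routing rule: first byte of the ID

    def split(self, ids: list[bytes]) -> dict[int, list[bytes]]:
        parts: dict[int, list[bytes]] = {}
        for u in ids:
            parts.setdefault(self.shard_for(u), []).append(u)
        return parts

    def execute(self, tx_id: bytes, inputs: list[bytes], outputs: list[bytes]) -> bool:
        ins, outs = self.split(inputs), self.split(outputs)
        involved = sorted(set(ins) | set(outs))
        prepared: list[int] = []
        # Phase 1: prepare on every involved shard; abort everywhere on the first "no".
        for s in involved:
            if not self.shards[s].prepare(tx_id, ins.get(s, []), outs.get(s, [])):
                for p in prepared:
                    self.shards[p].abort(tx_id)
                return False
            prepared.append(s)
        # Phase 2: every shard voted "yes", so commit everywhere.
        for s in involved:
            self.shards[s].commit(tx_id)
        return True


shards = [Shard(), Shard()]
coord = Coordinator(shards)
a = bytes([0, 1])                          # routes to shard 0
shards[0].uhs.add(a)
out1, out2 = bytes([2, 3]), bytes([3, 4])  # route to shards 0 and 1
ok = coord.execute(b"tx1", [a], [out1, out2])
```

In OpenCBDC, each shard’s state change would be replicated through Raft before the shard replies, and many compact transactions would share one prepare/commit round through batching.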

The team largely stayed faithful to the OpenCBDC 2PC architecture, since the initial goal was the port itself; future work includes integrating the port more tightly with Oracle Database’s native transaction model. The project team implemented integrations into Oracle Database as shown in the diagram below.

Because OpenCBDC data flows through several architectural layers, the team had to write to the database at multiple stages of a transaction to record all the necessary data in immutable blockchain tables. When a transaction is submitted, the sentinel writes the proposed transaction data to the Transaction blockchain table. Upon verification, the transaction data (sender, receiver, value, etc.) is stored in the “Transaction” blockchain table, and the tokens spent (inputs) and created (outputs) by the transaction are stored in their respective blockchain tables. In the final iteration of the project, the data stored for every transaction includes the transaction hash, the payee’s wallet address, the amount sent, the address of the sentinel that verified the transaction, and a verification timestamp.
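The per-transaction record listed above can be sketched as a Python dataclass with a parameterized insert. The team’s implementation used a C struct and the Oracle Call Interface; this sketch instead uses Python’s built-in `sqlite3` module as a local stand-in so that it runs anywhere. The python-oracledb driver accepts the same `:name` bind-variable style, but the table name `cbdc_transaction`, its columns, and the plain `CREATE TABLE` here are hypothetical (in Oracle the table would be created with `CREATE BLOCKCHAIN TABLE`).

```python
from __future__ import annotations

import sqlite3
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class TransactionRecord:
    tx_hash: str           # hex-encoded transaction hash
    payee_address: str     # payee's wallet address
    amount: int            # amount sent (smallest currency unit, an assumption)
    sentinel_address: str  # address of the sentinel that verified the transaction
    verified_at: str       # ISO-8601 verification timestamp


INSERT_SQL = (
    "INSERT INTO cbdc_transaction "
    "(tx_hash, payee_address, amount, sentinel_address, verified_at) "
    "VALUES (:tx_hash, :payee_address, :amount, :sentinel_address, :verified_at)"
)


def persist(conn: sqlite3.Connection, rec: TransactionRecord) -> None:
    # Bind variables keep values out of the SQL text (no string concatenation).
    with conn:  # commits on success, rolls back if the insert raises
        conn.execute(INSERT_SQL, asdict(rec))


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE cbdc_transaction (tx_hash TEXT PRIMARY KEY, payee_address TEXT, "
    "amount INTEGER, sentinel_address TEXT, verified_at TEXT)"
)
persist(conn, TransactionRecord("ab12", "payee-address-1", 30, "10.0.0.5:5555",
                                datetime.now(timezone.utc).isoformat()))
```

Keeping the record as one typed structure means every component that writes to the database binds the same fields in the same way, which is the role the C struct played in the team’s code.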

Web Wallet Interface

The original OpenCBDC project uses terminal commands for tasks such as creating wallets, processing transactions, and viewing wallet information. To demonstrate user interaction in a friendlier way, we introduced a graphical user interface (GUI) divided into three sections: Balance View, Transaction History, and Submit Transaction.

The interface lets users view wallet balances, showing both credits and debits based on the unspent and spent tokens recorded in the blockchain tables. This quick access to balance information is an important benefit of persisting token history in the database using auditable, cryptographically verifiable blockchain tables.
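Deriving a balance from token history reduces to a set difference between created and spent tokens. A minimal Python sketch, assuming each output-token row carries a token ID, owner address, and value, and that spent tokens are known by ID from the input-token rows; these row shapes and the name `wallet_view` are hypothetical, not the team’s schema.

```python
from __future__ import annotations

from typing import NamedTuple


class OutputRow(NamedTuple):
    token_id: str
    owner: str
    value: int


def wallet_view(owner: str, outputs: list[OutputRow], spent_ids: set[str]) -> dict[str, int]:
    """Summarize a wallet from token history: credits, debits, and balance."""
    mine = [o for o in outputs if o.owner == owner]
    credits = sum(o.value for o in mine)                            # every token ever received
    debits = sum(o.value for o in mine if o.token_id in spent_ids)  # received tokens later spent
    # The balance equals the total value of the owner's unspent tokens.
    return {"credits": credits, "debits": debits, "balance": credits - debits}


outputs = [OutputRow("t1", "alice", 10), OutputRow("t2", "bob", 7), OutputRow("t3", "alice", 3)]
alice = wallet_view("alice", outputs, {"t1"})
```

In the database the same computation is a single query that joins the output-token table against the input-token table and keeps only tokens with no matching input.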

The GUI also provides access to transaction histories and simplifies initiating new transactions. To integrate with OpenCBDC, the frontend connects through a wallet Docker container, which is created automatically when the frontend launches. This design improves the user experience and can accommodate both custodial and non-custodial wallet implementations.

Admin and Monitoring Interface

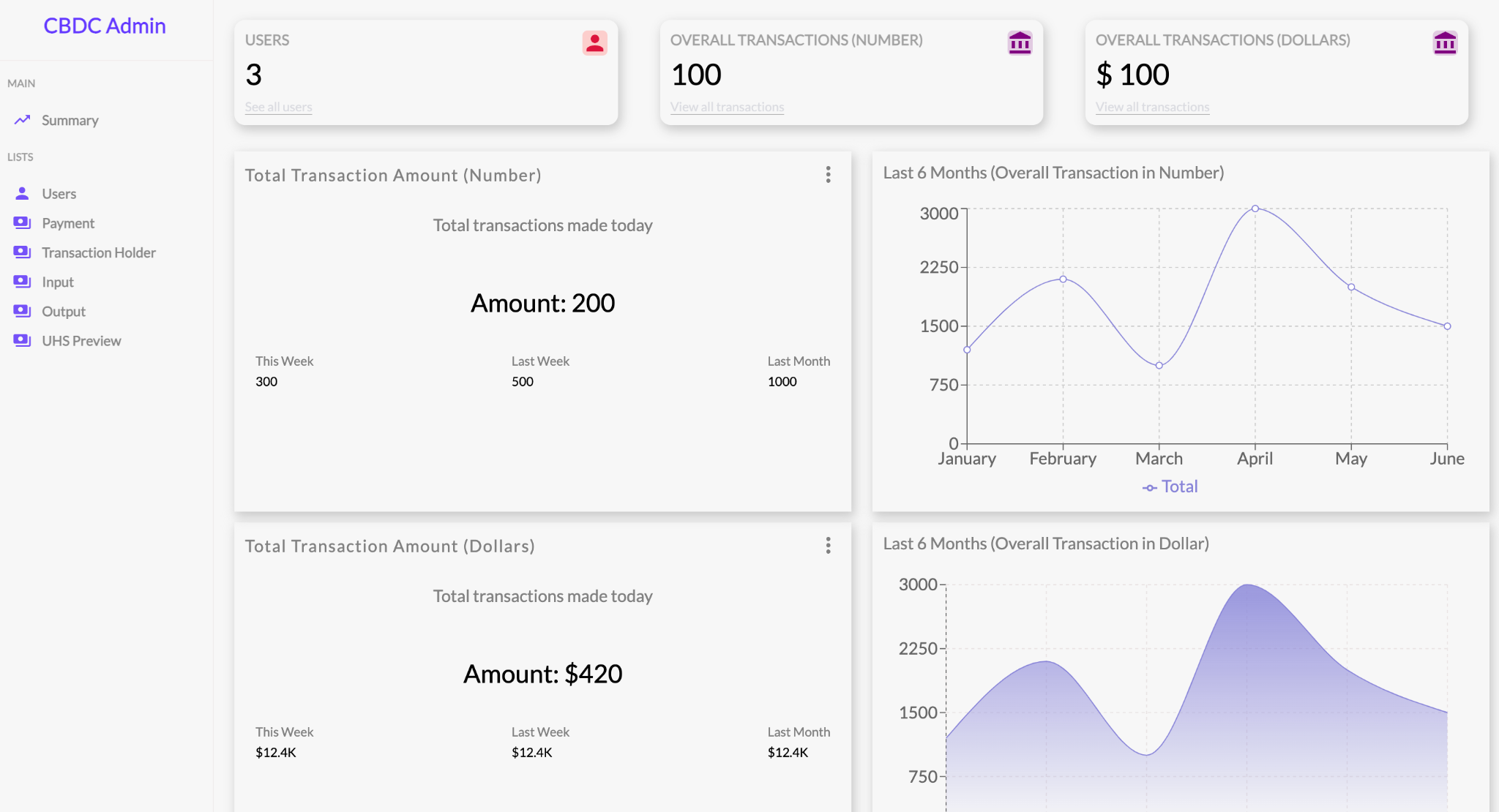

The Admin Panel includes a Summary page, a Users page, a Payments page, and pages for viewing database tables such as the transaction holder, input, output, and UHS preview tables.

On the Summary Page, only the user table currently displays real data, updated in real time. The other charts and tables use placeholder data to support further design and development of the interface; future team members can replace these placeholders with live database data as the system moves from development to full operation. The following key metrics are displayed:

- Total Number of Users: The number of users registered in the system. Plans are in place to further categorize these users into “more active” and “less active” segments.

- Recommendation for User Activity: We suggest labeling users who make five or more transactions per day as more active, and users with fewer than five as less active.

- Total Number of Transactions: The count of all transactions that have taken place.

- Total Dollar Amount of Transactions: The sum of all transaction amounts, computed for each day.

- Throughput: A feature under research and development, intended to provide insight into system performance by displaying throughput information.
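The Summary Page metrics, including the proposed five-transactions-per-day activity threshold, can be computed as in this Python sketch over in-memory rows. The transaction row shape (sender, amount, date) is an assumption, as is the reading that a user counts as more active if they reach the threshold on any day; in the application these would be SQL aggregates over the transaction table.

```python
from __future__ import annotations

from collections import Counter, defaultdict
from datetime import date

ACTIVE_THRESHOLD = 5  # transactions per day, per the recommendation above


def summarize(users: list[str], txs: list[tuple[str, int, date]]) -> dict:
    per_day_total: dict[date, int] = defaultdict(int)
    per_user_day: Counter = Counter()
    for sender, amount, day in txs:
        per_day_total[day] += amount      # daily dollar amount
        per_user_day[(sender, day)] += 1  # transactions per user per day
    # Assumption: "more active" means reaching the threshold on at least one day.
    active = {u for (u, _), n in per_user_day.items() if n >= ACTIVE_THRESHOLD}
    return {
        "total_users": len(users),
        "total_transactions": len(txs),
        "daily_amounts": dict(per_day_total),
        "more_active": sorted(active & set(users)),
        "less_active": sorted(set(users) - active),
    }


d1, d2 = date(2023, 12, 1), date(2023, 12, 2)
txs = [("alice", 10, d1)] * 5 + [("bob", 3, d1), ("bob", 4, d2)]
summary = summarize(["alice", "bob", "carol"], txs)
```

Other definitions of activity (for example, an average over a trailing week) would only change how `per_user_day` is aggregated.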

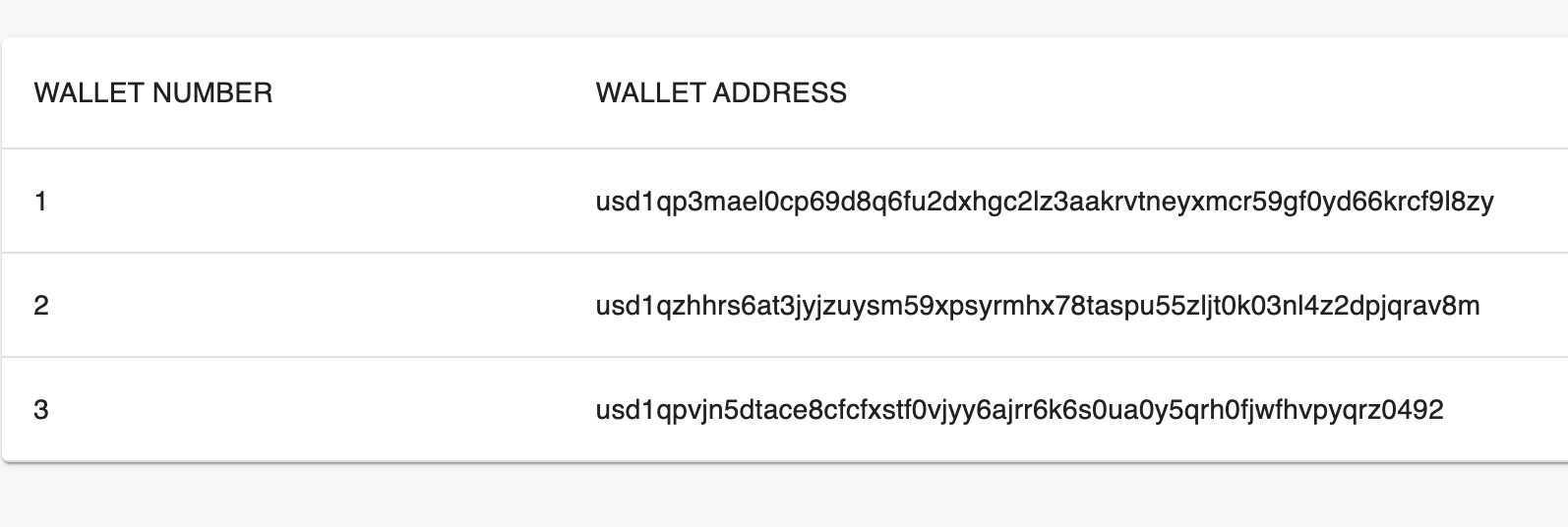

The Users Page is dedicated to individual user information, showcasing each user’s wallet ID and wallet address.

The Payments Page focuses on transaction details retrieved from the Oracle blockchain table, including the hash of each transaction, the wallet addresses of both the remitter and the payee, the payment amount, and the time of the transaction.

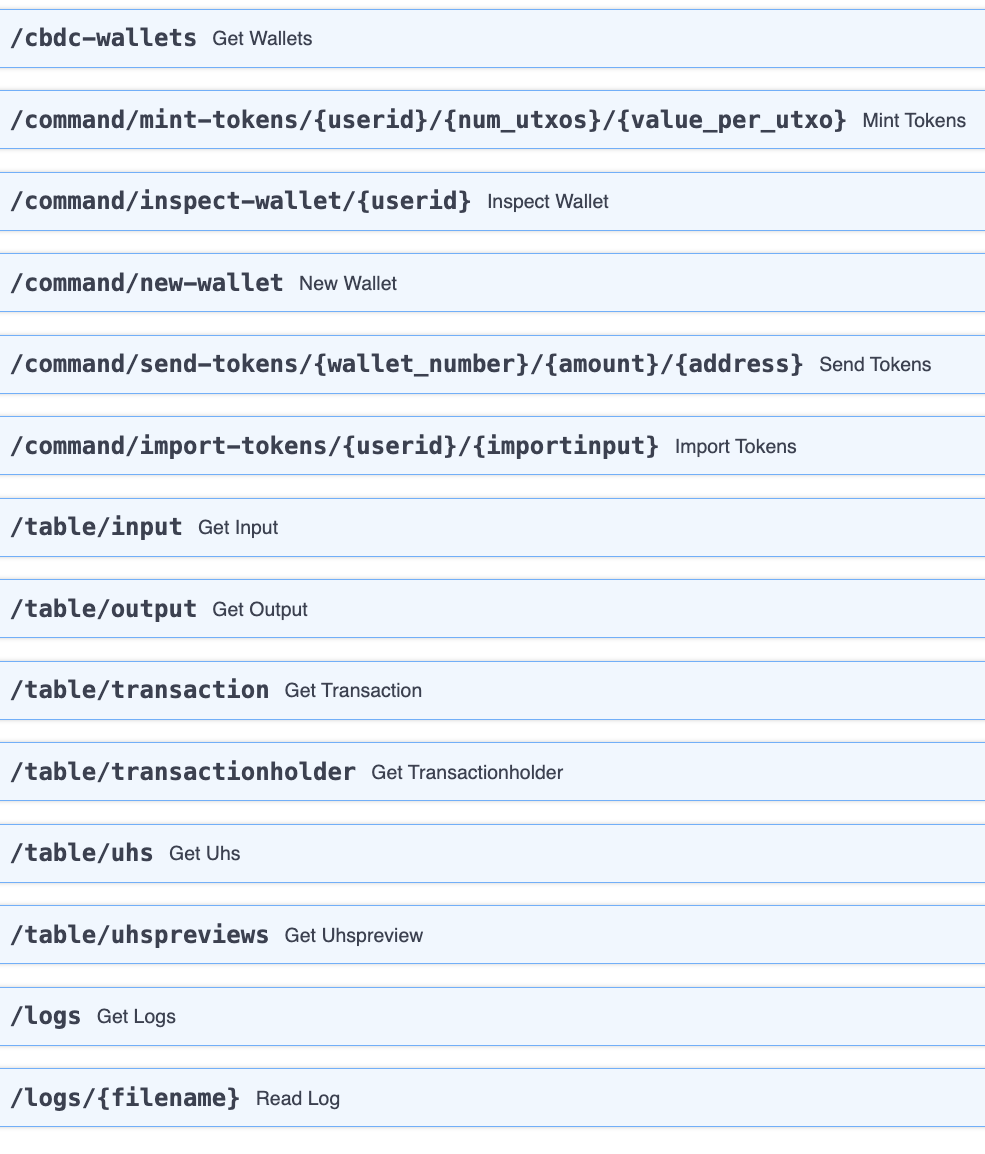

Frontend Wallet Development

The frontend application exposes a number of RESTful API routes that perform various actions. The most important are those under the "command" subdirectory. These routes accept a range of parameters, each triggering a specific operation in OpenCBDC. The operations are executed through a Docker container that interfaces directly with OpenCBDC.

The commands in the "command" subdirectory include:

- The ‘mint tokens’ command creates tokens, each with an assigned value, and allocates them to a user’s wallet, thereby controlling the token supply within the system.

- The ‘inspect wallet’ route provides a detailed view of the contents of a user’s wallet, while ‘new wallet’ creates a new wallet and user in the system and assigns it a unique address.

- For transfers between users, the ‘send tokens’ route sends tokens from one wallet to another, and the ‘import tokens’ route finalizes the transfer by updating the recipient wallet’s state.
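One way the "command" routes could be translated into OpenCBDC client invocations inside the wallet container is sketched below in Python. The container name, config file, mempool and wallet file names, and route names are hypothetical. The `client-cli` subcommands (`mint`, `info`, `newaddress`, `send`, `importinput`) follow the 2PC walkthrough in the OpenCBDC README as we understand it and should be checked against the version in use. The function only builds the argument list; a route handler would pass it to `subprocess.run`.

```python
from __future__ import annotations

# Hypothetical deployment details; adjust to the actual container and files.
CONTAINER = "opencbdc-wallet"
CLIENT = "./build/src/uhs/client/client-cli"
CONFIG = "2pc-compose.cfg"


def build_command(route: str, wallet: int, params: dict[str, str]) -> list[str]:
    """Map a web route to a `docker exec` argv for the OpenCBDC client (assumed CLI)."""
    base = ["docker", "exec", CONTAINER, CLIENT, CONFIG,
            f"mempool{wallet}.dat", f"wallet{wallet}.dat"]
    if route == "mint-tokens":    # create `count` outputs of `value` each
        return base + ["mint", params["count"], params["value"]]
    if route == "inspect-wallet":
        return base + ["info"]
    if route == "new-wallet":
        return base + ["newaddress"]
    if route == "send-tokens":
        return base + ["send", params["value"], params["address"]]
    if route == "import-tokens":  # recipient imports the sender's output data
        return base + ["importinput", params["importinput"]]
    raise ValueError(f"unknown command route: {route}")


cmd = build_command("send-tokens", 0, {"value": "30", "address": "addr1"})
```

Passing an argument list, rather than a shell string, to `subprocess.run` keeps user-supplied parameters such as addresses from being interpreted by a shell.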

The application also has a "table" subdirectory that interfaces with the Autonomous Database. When a user navigates directly to one of these routes, the application renders a Jinja2 template page that displays the data in the specified database table. For users who want to manipulate the data or integrate it into other applications, the "table" routes offer an alternative response mode: a request that asks for ‘application/json’ in its headers receives a plain JSON representation of the table data instead. This allows further customization on the user’s end.
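The dual response mode amounts to content negotiation on the request’s `Accept` header. Below is a framework-agnostic Python sketch. The real application renders a Jinja2 template, which is replaced here by a small `html.escape`-based table so the example needs only the standard library. The function name and row format are hypothetical, and the simple substring check ignores quality values (`q=`) in the header.

```python
from __future__ import annotations

import html
import json


def render_table(rows: list[dict], accept: str) -> tuple[str, str]:
    """Return (content_type, body) for a table route."""
    if "application/json" in accept.lower():
        return "application/json", json.dumps(rows)
    # Browser navigation: build an HTML table, escaping every cell.
    cols = list(rows[0]) if rows else []
    head = "".join(f"<th>{html.escape(c)}</th>" for c in cols)
    body = "".join(
        "<tr>" + "".join(f"<td>{html.escape(str(r[c]))}</td>" for c in cols) + "</tr>"
        for r in rows
    )
    return "text/html", f"<table><tr>{head}</tr>{body}</table>"


rows = [{"wallet_id": 1, "address": "a<b"}]
json_type, json_body = render_table(rows, "application/json")
html_type, html_body = render_table(rows, "text/html,application/xhtml+xml")
```

Serving both formats from one route keeps the browser view and the programmatic API from drifting apart, since both read the same rows.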

Finally, the "logs" subdirectory supports operational oversight, with routes such as 'GET /logs' and 'GET /logs/{filename}' providing access to the server logs. The 'GET /cbdc-wallets' route retrieves and displays all active wallets in the system.

Future Work

Optimizing Database Interactions

This project would benefit from additional work to integrate OpenCBDC more deeply with Oracle Database. Suggested areas include:

- Replacing OpenCBDC’s in-memory std::unordered_set storage with the UHS table that we created in Oracle Autonomous Database

- Using a single distributed database transaction for each OpenCBDC transaction rather than multiple database transactions

- Replacing the input token table and output token table with two JSON (or VARRAY) columns in the transaction table, so that the inputs and outputs of an OpenCBDC transaction are stored in the same table (and row) as the transaction itself rather than in separate input and output tables with one row per token

- Moving some steps into the database as stored procedures and triggers to improve performance

- Adding blockchain table verification and table digest capabilities as well as other database security measures
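The JSON-column alternative suggested above can be sketched in Python as a row builder plus its inverse. It assumes inputs are lists of token IDs and outputs are objects with a token ID, owner, and value; the column names `inputs_json` and `outputs_json` and both function names are hypothetical. Each OpenCBDC transaction becomes exactly one row, so a single insert records the whole transaction.

```python
from __future__ import annotations

import json


def transaction_row(tx_hash: str, inputs: list[str], outputs: list[dict]) -> dict[str, str]:
    """Build one row holding the whole transaction, with inputs/outputs as JSON text."""
    return {
        "tx_hash": tx_hash,
        "inputs_json": json.dumps(inputs),
        "outputs_json": json.dumps(outputs, sort_keys=True),
    }


def explode(row: dict[str, str]) -> tuple[list[dict], list[dict]]:
    """Recover the per-token rows of the current multi-table design from one row."""
    tx = row["tx_hash"]
    ins = [{"tx_hash": tx, "token_id": t} for t in json.loads(row["inputs_json"])]
    outs = [dict(o, tx_hash=tx) for o in json.loads(row["outputs_json"])]
    return ins, outs


row = transaction_row("ab12", ["t1"], [{"token_id": "t2", "owner": "bob", "value": 7}])
input_rows, output_rows = explode(row)
```

Since `explode` recovers the per-token rows exactly, the single-row layout loses no information; queries that need per-token access would read into the JSON columns instead of joining separate tables.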

Benchmarking Plan

One of the initial goals of this project was to benchmark our implementation using Autonomous Database in OCI and blockchain tables, and to compare the results with those reported in the OpenCBDC white paper for the 2PC architecture. An initial benchmarking plan was created, but porting the benchmarking scripts was deferred. Benchmarking in OCI should explore the following scaling capabilities:

- Oracle Autonomous Transaction Processing (ATP) database auto-scaling

- Testing on scale-out Exadata Cloud Service infrastructure, which offloads SQL processing to intelligent storage servers and so reduces CPU load on the database servers

- Tuning the use of Oracle Sharding to eliminate performance bottlenecks

- Distributing Oracle Database Shards geographically (across multiple cloud regions) to achieve extreme availability and fault isolation
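For a like-for-like comparison with the white paper’s figures (throughput plus 50th- and 99th-percentile latency), a benchmark run should report the same statistics. This Python sketch computes them from per-transaction latency samples using the nearest-rank percentile; the input format and function names are assumptions, and the white paper’s own measurement methodology may differ.

```python
from __future__ import annotations

import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples <= it."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need samples and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def summarize_run(latencies_s: list[float], duration_s: float) -> dict[str, float]:
    """Throughput and latency percentiles for one benchmark run."""
    return {
        "throughput_tps": len(latencies_s) / duration_s,
        "p50_s": percentile(latencies_s, 50),
        "p99_s": percentile(latencies_s, 99),
    }


latencies = [i / 100 for i in range(1, 101)]  # 0.01 s .. 1.00 s
report = summarize_run(latencies, 2.0)
```

Reporting tail latency alongside throughput matters because batching, as used in OpenCBDC, can raise throughput while lengthening the wait for individual transactions.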

Enhancing Wallet and Admin Apps

Initial implementations of these apps can be enhanced in the following ways:

- Admin UI:

  - Move the metrics dashboard implementation to Oracle Analytics

  - Collect additional metrics on issuing, transfers, and burning

  - Provide traceability and auditing capability

  - Provide verification of data immutability through Blockchain Table procedures

- Wallet Transactions Tracker UI:

  - Explore moving to an APEX implementation

  - Implement a transaction history view

  - Enable programmable payment transactions (conditional, time-triggered, alerts, etc.)

  - Create a mobile app version

Exploring Project Hamilton Phase 2 Requirements

The Phase 2 Project Hamilton objectives described in the Project Hamilton Phase 1 Executive Summary include research into “…cryptographic designs for privacy and auditability, programmability and smart contracts, offline payments, secure issuance and redemption, new use cases and access models, techniques for maintaining open access while protecting against denial of service attacks, and new tools for enacting policy.” Several of these areas could benefit from Oracle Autonomous Database’s built-in capabilities, OCI’s cybersecurity and resilience, tools for national and global-scale deployment management and monitoring, and various infrastructure and platform services, including the Oracle Blockchain Platform based on Distributed Ledger Technology (DLT).

Learnings

A critical learning outcome was a deeper understanding of blockchain technology’s potential to enhance financial systems. This knowledge was not just theoretical: we applied it in the practical setting of integrating Oracle Blockchain Tables with OpenCBDC, which gave us first-hand experience with the technical challenges such an integration involves. We learned how to adapt Oracle’s blockchain technology to the specific needs of CBDC platforms, particularly with respect to scalability and security.

Another significant learning area was database management with Oracle’s tools. Working with Oracle Autonomous Database, we gained practical skills in managing digital currency transactions and ensuring data integrity and security. This experience was invaluable for understanding the complexities of database management in a blockchain context and how these technologies can improve the efficiency and reliability of CBDC platforms.

Throughout the project, we also developed a nuanced understanding of the policy implications and design choices involved in CBDC development. This included an appreciation of the trade-offs between scalability, privacy, and auditability. Understanding these trade-offs was crucial in making informed decisions on the architecture and features of the CBDC platform.

Conclusion

As CBDC developments and explorations around the world accelerate, scalability and auditability will be key to enabling meaningful production deployments. Trading one off against the other is unlikely to be acceptable to Central Banks, so the two need to be addressed together. This Lehigh University capstone project is an effort to combine the high-performance OpenCBDC 2PC architecture with the auditability of Oracle in-database Blockchain Tables, while leveraging the scalability offered by Oracle Database Sharding, Exadata deployment infrastructure, and OCI hyperscaling capabilities. The project achieved its initial objective of implementing this integration and identified further work for follow-on teams.

Additional information on this and other Lehigh University blockchain projects completed in the capstone program this year, including another Oracle-sponsored project on Decentralized Self-Sovereign Identity, are described in this post from Blockchain at Lehigh University.

Acknowledgements

Members of the Lehigh University student team included Nate Cable ’24, Zeeshan Khan ’24, Dan McClellan ’24, and Bella Wang ’24. Throughout the project, our team held weekly discussions with Oracle sponsors Mark Rakhmilevich, James Stamos, and Bala Vellanki, covering progress, pressing topics, and guidance on Oracle Cloud Infrastructure (OCI), database features, schema design, and blockchain-specific functionality. This collaboration was coordinated by Professor Andrea Smith of Lehigh University and supported by Professor Hank Korth, who contributed his blockchain and Oracle Database expertise. It underscores our commitment to pioneering work in digital currency technology.