[Update from 4/18] OCI Data Science released AI Quick Actions, a no-code solution to fine-tune, deploy, and evaluate popular Large Language Models. You can open a notebook session to try it out. This blog post will guide you on how to work with LLMs via code, for optimal customization and flexibility.

In the world of artificial intelligence (AI) and deep learning, staying at the cutting edge requires a relentless pursuit of more powerful hardware and smarter algorithms. Llama 2 has been one of the large language models (LLMs) in the spotlight, thanks to its widely recognized quality. The largest model in the collection has 70 billion parameters and is also the most capable. This massive model has opened new possibilities for natural language processing (NLP) and generation, but it also demands formidable computational resources to reach its full potential.

Oracle Cloud Infrastructure (OCI) Data Science is a fully managed platform for data scientists and machine learning (ML) engineers to train, manage, deploy, and monitor ML models in a scalable, secure enterprise environment. You can train and deploy any model, including LLMs, in the Data Science service. In this article, we show how to deploy LLMs in a more cost-effective way.

The usual suspects when it comes to handling such large models are the NVIDIA A100 and H100 Tensor Core GPUs. They’re incredibly performant, and their large memory capacities of 40 GB and 80 GB per GPU make them an ideal fit for hosting very large models. However, they come at a higher price point.

The NVIDIA A10 GPU doesn’t have the premium specs of the A100 or H100 GPUs, but it comes in more economical packages and costs less than its siblings. How much less? Let’s compare the options as of November 2023:

| GPU | Total GPU memory | Price per hour (pay-as-you-go, on-demand) |
|---|---|---|
| BM.GPU.H100.8 (8x NVIDIA H100 80GB Tensor Core) | 640 GB (8x 80 GB) | $80 ($10 per GPU per hour) |
| BM.GPU4.8 (8x NVIDIA A100 40GB Tensor Core) | 320 GB (8x 40 GB) | $24.40 ($3.05 per GPU per hour) |
| BM.GPU.A10.4 (4x NVIDIA A10 Tensor Core) | 96 GB (4x 24 GB) | $8 ($2 per GPU per hour) |
| VM.GPU.A10.2 (2x NVIDIA A10 Tensor Core) | 48 GB (2x 24 GB) | $4 ($2 per GPU per hour) |
| VM.GPU.A10.1 (1x NVIDIA A10 Tensor Core) | 24 GB | $2 |

Using A10 GPUs gives a better price point, especially if the A10.1 or A10.2 are used. Is it possible, though?

Let’s do some math: 70 billion parameters at 16-bit precision (2 bytes) equals about 140 GB in memory (70,000,000,000 x 2B = 140 GB). So, even the A10.4 isn’t enough to hold the model in memory. A10 doesn’t support NVLink for multi-GPU memory distribution. What can we do then?

Enter: Quantization

Quantization is a technique to reduce the computational and memory costs of running inference by representing the weights and activations with low-precision data types. You can read about the GPTQ algorithm in depth in this detailed article by Maxime Labonne.

Llama 2 was trained using the bfloat16 data type (2 bytes per parameter). Quantization stores those parameters in a data type that uses fewer bits. Several experiments found that quantizing to 4 bits, or 0.5 bytes, gives a large memory saving with only a small decrease in model quality. Going back to the math, 70 billion parameters multiplied by half a byte comes to only 35 GB.
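The arithmetic above can be checked with a short sketch. The names `weights_size_gb` and `shapes_that_fit` are illustrative, the memory totals are the A10 figures from the comparison table, and the estimate covers the weights only (no activations, KV cache, or quantization metadata).

```python
# Weight-only estimate; runtime memory use will be higher than this.
GPU_MEMORY_GB = {
    "VM.GPU.A10.1": 24,
    "VM.GPU.A10.2": 48,
    "BM.GPU.A10.4": 96,
}

LLAMA2_70B_PARAMS = 70_000_000_000


def weights_size_gb(num_params, bits_per_param):
    """Return the size of the model weights in GB (1 GB = 10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def shapes_that_fit(size_gb):
    """Return the A10 shapes whose total GPU memory can hold size_gb."""
    return [shape for shape, mem in GPU_MEMORY_GB.items() if mem >= size_gb]


for bits in (16, 4):
    size = weights_size_gb(LLAMA2_70B_PARAMS, bits)
    fits = shapes_that_fit(size) or "none of the A10 shapes"
    print(f"{bits}-bit weights: {size:.0f} GB, fits on: {fits}")
```

At 16 bits no A10 shape is large enough, while at 4 bits the weights fit on the two larger A10 shapes.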

Lo and behold, the model can fit on an A10.2! This configuration costs about one-sixth the price of the A100 shape ($4 versus $24.40 per hour). For use cases that require a single LLM, the savings in cost are significant.
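To make the saving concrete, here is a small sketch of the price ratio. It uses the hourly list prices from the comparison table (as of November 2023); the dictionary and the `price_ratio` helper are illustrative and not part of any OCI API.

```python
# Hourly on-demand prices per shape, in USD, copied from the table above.
HOURLY_PRICE_USD = {
    "BM.GPU.H100.8": 80.0,
    "BM.GPU4.8": 24.4,
    "BM.GPU.A10.4": 8.0,
    "VM.GPU.A10.2": 4.0,
    "VM.GPU.A10.1": 2.0,
}


def price_ratio(shape, baseline):
    """Return the hourly price of shape as a fraction of the baseline price."""
    return HOURLY_PRICE_USD[shape] / HOURLY_PRICE_USD[baseline]


ratio = price_ratio("VM.GPU.A10.2", "BM.GPU4.8")
print(f"VM.GPU.A10.2 costs {ratio:.1%} of BM.GPU4.8, about 1/{1 / ratio:.1f} of the price")
```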

Now that we know that we want to use the A10.2 for our Llama 2 70B, let’s see how to achieve that.

Step 1: Prerequisites

You need a notebook session, access to Llama 2 and Hugging Face, and more. The complete list of prerequisites is available in this GitHub sample.

Step 2: Quantize

You can skip this step and take a quantized model directly from Hugging Face. But if you want to fine-tune your model, you must quantize it after the fine-tuning is completed. Plus, you don’t want to skip the fun part, right? Let’s quantize!

In this example, we’re using GPTQ, a very popular algorithm for quantization, which is integrated into Hugging Face transformers and works well with Llama 2. We’re also using the popular ‘wikitext2’ as the calibration dataset for the quantization, but you can use any dataset you want. You can find the full code for quantization, including just loading the model in 4 bits, on GitHub.

We need to specify the maximum memory for the GPUs to load the model, as we need to keep some extra memory for the quantization. The memory management of GPTQ isn’t transparent. We’ve found that limiting each GPU to 5 GB prevents it from going out of memory during the quantization.
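If you quantize on a shape with a different number of GPUs, the per-device memory limits can be built programmatically. This is a minimal sketch: `build_max_memory` is a hypothetical helper, and the 5 GB and 400 GB defaults simply mirror the values used in this article, so tune them for your own hardware.

```python
def build_max_memory(num_gpus, per_gpu="5GB", cpu="400GB"):
    """Build a max_memory mapping: one entry per GPU index plus a "cpu" entry."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    max_memory = {gpu_index: per_gpu for gpu_index in range(num_gpus)}
    max_memory["cpu"] = cpu
    return max_memory


print(build_max_memory(2))
```

The returned dictionary can be passed as the `max_memory` argument when loading the model. On a machine with PyTorch installed, `torch.cuda.device_count()` is one way to obtain `num_gpus`.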

The following code loads the model and quantizes it to 4 bits:

```python
import os

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer, GPTQConfig

# Cap the allocator's block splitting to reduce CUDA memory fragmentation.
os.environ["PYTORCH_CUDA_ALLOC_CONF"] = "max_split_size_mb:512"

model_id = "meta-llama/Llama-2-70b-hf"
dataset_id = "wikitext2"

tokenizer = AutoTokenizer.from_pretrained(model_id, use_fast=False)

# 4-bit GPTQ quantization, calibrated on the wikitext2 dataset.
gptq_config = GPTQConfig(bits=4, dataset=dataset_id, tokenizer=tokenizer, model_seqlen=128)

model_quantized = AutoModelForCausalLM.from_pretrained(
    model_id,
    torch_dtype=torch.float16,
    device_map="auto",
    # Limit each GPU to 5 GB to leave headroom for quantization; the rest goes to CPU memory.
    max_memory={0: "5GB", 1: "5GB", "cpu": "400GB"},
    quantization_config=gptq_config,
)
```

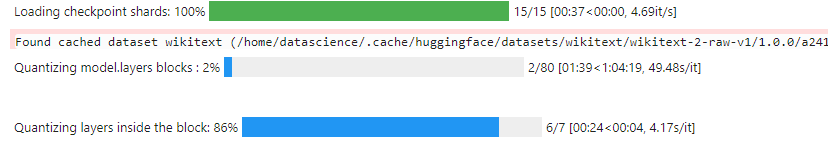

The process will show the progress over the 80 layers. It will take about 1 hour with the wikitext2 dataset.

Note that we cannot run inference on this particular “quantized model” because some blocks are loaded across multiple devices. For inferencing, we need to save the model and load it back. Save the quantized model and the tokenizer with the following command:

```python
save_folder = "Llama-2-70b-hf-quantized"
model_quantized.save_pretrained(save_folder)
tokenizer.save_pretrained(save_folder)
```

Because the model was partially offloaded during quantization, the library set ‘disable_exllama’ to ‘True’ in the saved configuration to avoid an error. For inference and production load, we want to use the exllama kernels, so we need to change that setting. Edit the config.json file in the save folder, find the key ‘disable_exllama,’ and set it to false.
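The edit can also be scripted. The sketch below is one possible approach, not the method from the original sample: `enable_exllama` is a hypothetical helper, and it assumes the key appears either inside a `quantization_config` section of config.json or at the top level, so check your own file before relying on it.

```python
import json
from pathlib import Path


def enable_exllama(model_dir):
    """Set disable_exllama to false in <model_dir>/config.json.

    Returns True if the file was changed, False if nothing needed changing.
    """
    config_path = Path(model_dir) / "config.json"
    config = json.loads(config_path.read_text())

    # Assumption: the key lives under "quantization_config" or at the top level.
    changed = False
    for section in (config.get("quantization_config"), config):
        if isinstance(section, dict) and section.get("disable_exllama") is True:
            section["disable_exllama"] = False
            changed = True

    if changed:
        config_path.write_text(json.dumps(config, indent=2) + "\n")
    return changed
```

For the folder used in this article, the call would be `enable_exllama("Llama-2-70b-hf-quantized")`.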

Now that the model is saved to disk, we can see that its size is about 34.1 GB. That size aligns with our calculations and fits within the 48 GB of combined memory on 2x A10 GPUs.
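One way to check the on-disk size from the notebook is to sum the file sizes in the save folder. This is a minimal sketch using only the standard library; `directory_size_gb` is an illustrative helper, and it reports decimal gigabytes (10^9 bytes), which may differ slightly from the unit your file browser shows.

```python
import os


def directory_size_gb(folder):
    """Return the total size of all regular files under folder, in GB (10**9 bytes)."""
    total_bytes = 0
    for root, _dirs, files in os.walk(folder):
        for name in files:
            path = os.path.join(root, name)
            # Skip symbolic links so linked files are not counted.
            if not os.path.islink(path):
                total_bytes += os.path.getsize(path)
    return total_bytes / 1e9
```

For the folder used in this article, the call would be `directory_size_gb("Llama-2-70b-hf-quantized")`.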

We can load the quantized model back without the max_memory limit:

```python
tokenizer_q = AutoTokenizer.from_pretrained(save_folder)

model_quantized = AutoModelForCausalLM.from_pretrained(
    save_folder,
    device_map="auto",
)
```
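With the model reloaded, a quick generation call is a reasonable smoke test. The sketch below is an assumption about how you might do that, not code from the original sample: `generate_text` is a hypothetical helper, and the `max_new_tokens` default is a placeholder. It relies only on the standard tokenizer call, `model.generate`, and `tokenizer.decode`.

```python
def generate_text(model, tokenizer, prompt, max_new_tokens=64):
    """Tokenize the prompt, generate a continuation, and decode it to text."""
    # Move the input tensors to the device that holds the model's first layers.
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # generate returns one sequence per input; decode the first (and only) one.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

With the objects loaded above, a call would look like `generate_text(model_quantized, tokenizer_q, "What is quantization?")`. The decoded text includes the prompt followed by the generated continuation.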

Step 3: Deploy

Let’s save the model to the model catalog, which makes it easier to deploy the model. Follow the steps in this GitHub sample to save the model to the model catalog.

Now follow the steps in Deploy Llama 2 in OCI Data Science to deploy the model. Use the VM.GPU.A10.2 shape for the deployment.

Conclusion

Quantizing LLMs is an easy way to reduce their memory footprint, save on costs, and even improve performance, with minimal compromise on model quality. The ability to quantize and deploy best-in-class LLMs like Llama 2 70B on NVIDIA A10 GPUs has multiple benefits: these GPUs are easier to find, and they’re more economical than their bigger siblings, the NVIDIA A100 and H100 GPUs.

Quantizing and deploying on OCI Data Science is straightforward. There’s no need to manage the infrastructure, and the process can be scaled as needed.

In a future blog post, we will benchmark the performance of the quantized model deployed on A10 GPUs versus its foundation model deployed on A100 GPUs and compare the actual costs. Stay tuned!

Try Oracle Cloud Free Trial! A 30-day trial with US$300 in free credits gives you access to Oracle Cloud Infrastructure Data Science service. For more information, see the following resources:

- Full sample, including all files in OCI Data Science sample repository on GitHub.

- Visit our service documentation.

- Watch our tutorials on our YouTube playlist.

- Try one of our LiveLabs. Search for “data science.”

- Got questions? Reach out to us at ask-oci-data-science_grp@oracle.com